Nvidia reportedly boosts Vera Rubin performance to keep hyperscalers from switching to AMD Instinct AI accelerators: increased boost clocks and memory bandwidth push power demand up by 500 watts to 2,300 watts

And perhaps improve yield as an added bonus.

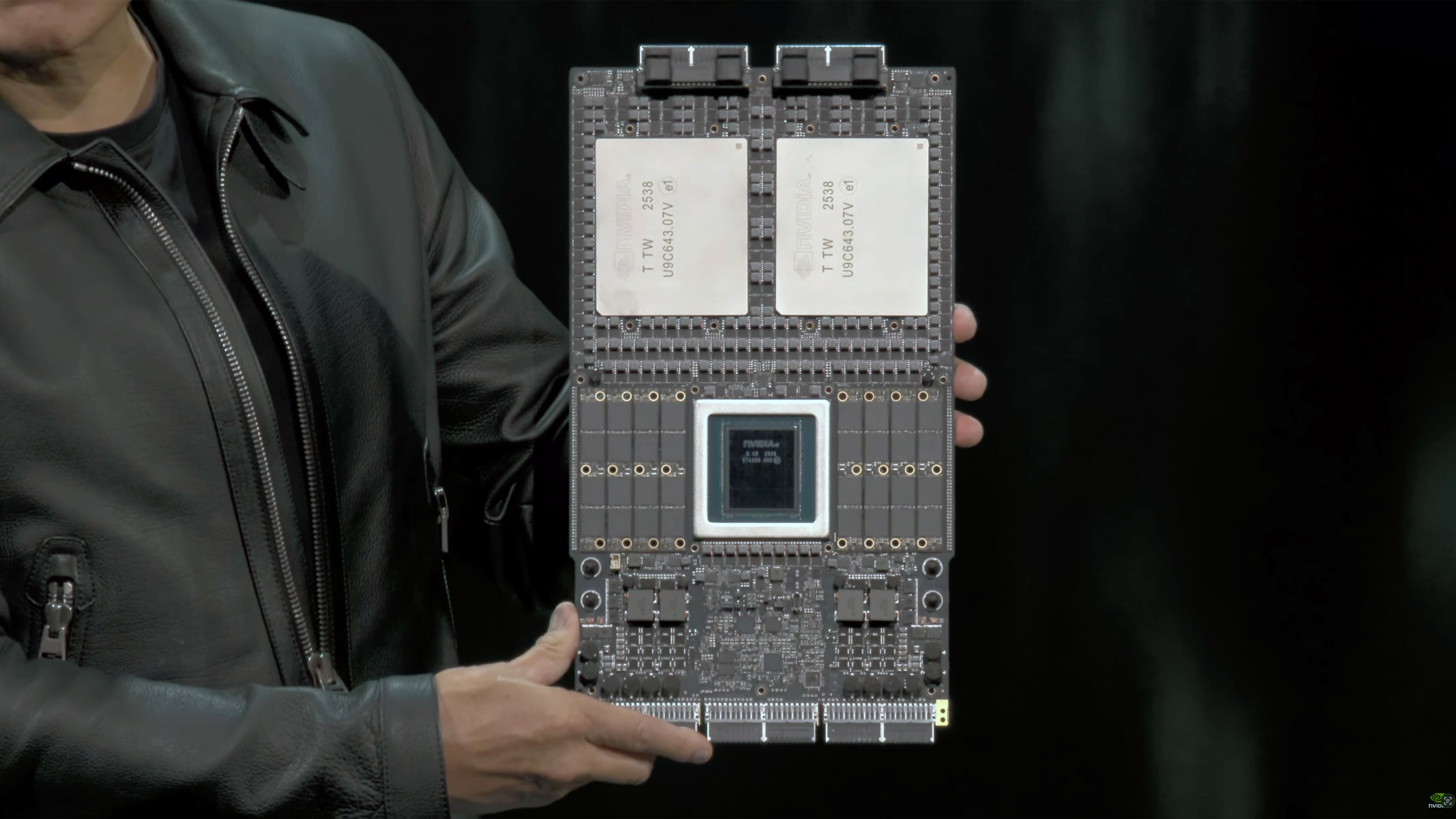

Recently, Nvidia announced that it had started 'full production' of its Vera Rubin platform for AI datacenters, reassuring partners that the platform is on track to launch later this year, ahead of rivals such as AMD. Beyond possibly pulling the release forward, Nvidia is also reportedly revising the Rubin GPU's specifications to deliver higher performance: reports point to a TDP of 2.3 kW per GPU and a memory bandwidth of 22.2 TB/s.

According to KeyBanc (via @Jukan05), the Rubin GPU's power rating has now been locked in at 2.3 kW, up from the 1.8 kW Nvidia originally announced but below the 2.5 kW some market observers expected. The increase from 1.8 kW is reportedly meant to ensure that this year's Rubin-based platforms are markedly faster than AMD's Instinct MI455X, which is projected to operate at around 1.7 kW. The power-budget figure comes from an unofficial source, but SemiAnalysis indirectly corroborates it, claiming that Nvidia has raised the data transfer rates of the HBM4 stacks so that each Rubin GPU now offers 22.2 TB/s of memory bandwidth, up from 13 TB/s. We have reached out to Nvidia to verify these claims.
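For a quick sense of scale, the snippet below simply does the arithmetic on the reported (and still unconfirmed) figures quoted above: 1.8 kW to 2.3 kW per Rubin GPU, 13 TB/s to 22.2 TB/s of memory bandwidth, and roughly 1.7 kW for the MI455X. The constant and function names are our own, and the results are only as reliable as the reports behind them.

```python
# Reported (unconfirmed) per-GPU figures from the article.
RUBIN_TDP_ORIGINAL_W = 1800
RUBIN_TDP_REPORTED_W = 2300
RUBIN_BW_ORIGINAL_TBS = 13.0
RUBIN_BW_REPORTED_TBS = 22.2
MI455X_TDP_PROJECTED_W = 1700  # projected, not confirmed


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


tdp_delta_w = RUBIN_TDP_REPORTED_W - RUBIN_TDP_ORIGINAL_W
tdp_pct = pct_change(RUBIN_TDP_ORIGINAL_W, RUBIN_TDP_REPORTED_W)
bw_pct = pct_change(RUBIN_BW_ORIGINAL_TBS, RUBIN_BW_REPORTED_TBS)
gap_vs_mi455x_w = RUBIN_TDP_REPORTED_W - MI455X_TDP_PROJECTED_W

print(f"Rubin TDP: +{tdp_delta_w} W (+{tdp_pct:.1f}%)")         # +500 W (+27.8%)
print(f"Rubin memory bandwidth: +{bw_pct:.1f}%")                 # +70.8%
print(f"Rubin TDP above projected MI455X: {gap_vs_mi455x_w} W")  # 600 W
```

On these numbers, reported memory bandwidth rises by about 71% while the power budget grows by about 28%.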

An additional ~500W of power headroom gives Nvidia several ways to improve real-world performance, not just on-paper specifications. Most directly, it would enable higher sustained clocks under continuous training and inference loads, as well as less throttling when the AI accelerator is fully stressed. The extra power would also make it easier to keep more execution units active simultaneously, which raises throughput in heavy workloads where compute, memory, and interconnect are all loaded at once.
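To illustrate why extra power does not translate one-to-one into extra clock speed, here is a rough sketch built on the textbook first-order model of CMOS dynamic power, P ≈ αCV²f. If voltage has to rise roughly in step with frequency, power grows roughly with f³; if voltage could stay fixed, power would grow only linearly with f. The function names are hypothetical, and the model assumes all of the extra budget goes to the GPU core while ignoring leakage, HBM, PHY, and interconnect power, as well as thermal limits. Nothing here reflects actual Rubin voltage-frequency data.

```python
P_OLD_W = 1800  # originally announced Rubin TDP
P_NEW_W = 2300  # reported Rubin TDP


def clock_gain_cubic(p_old: float, p_new: float) -> float:
    """Relative clock gain if power scales as f**3 (voltage rising with frequency)."""
    return (p_new / p_old) ** (1.0 / 3.0) - 1.0


def clock_gain_linear(p_old: float, p_new: float) -> float:
    """Relative clock gain if power scales as f (voltage held constant)."""
    return p_new / p_old - 1.0


# Both cases assume the entire extra power budget goes to core switching power.
cubic = clock_gain_cubic(P_OLD_W, P_NEW_W)
linear = clock_gain_linear(P_OLD_W, P_NEW_W)
print(f"P ~ f^3: about {cubic * 100:.1f}% higher clocks")   # about 8.5%
print(f"P ~ f:   about {linear * 100:.1f}% higher clocks")  # about 27.8%
```

Real silicon sits somewhere between these idealized cases, and because part of the extra budget may go to memory and links rather than the cores, the actual clock gain could be smaller.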

In addition to the compute units (tensor cores in particular), the extra power budget could be used to run the HBM4 memory and its PHYs at higher clocks to increase memory bandwidth. More broadly, a higher power budget would let Nvidia push all of its links (memory, internal interconnects, and NVLink) to more aggressive operating points while retaining healthy signal margins, which matters more and more as modern AI systems become constrained by memory bandwidth and fabric performance.

At the system level, an extra 500W of TDP per AI accelerator translates into higher performance per node and per rack. Hyperscalers value system-level performance more than per-GPU performance per se, since fewer GPUs may be needed to complete the same job, which reduces networking load and improves cluster-level efficiency. That, of course, assumes hyperscalers can actually deliver enough power to machines that draw considerably more of it.
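To put the per-rack impact into numbers, the sketch below multiplies the reported 500W per-GPU increase by a GPU count per rack. The figure of 72 GPUs per rack is an assumption chosen for illustration, not a confirmed VR200 NVL144 configuration, and the estimate covers GPU power only, leaving out CPUs, networking, power-conversion losses, and cooling. The function names and the 70% average-load example are likewise hypothetical.

```python
GPUS_PER_RACK = 72              # hypothetical count, for illustration only
EXTRA_W_PER_GPU = 2300 - 1800   # reported per-GPU TDP increase: 500 W
HOURS_PER_YEAR = 24 * 365


def extra_rack_kw(gpus: int, extra_w_per_gpu: float) -> float:
    """Additional GPU power per rack, in kW (GPU TDP only)."""
    return gpus * extra_w_per_gpu / 1000.0


def extra_energy_mwh_per_year(extra_kw: float, avg_load: float = 1.0) -> float:
    """Additional energy per year, in MWh, at an average load fraction (0..1)."""
    if not 0.0 <= avg_load <= 1.0:
        raise ValueError("avg_load must be between 0 and 1")
    return extra_kw * avg_load * HOURS_PER_YEAR / 1000.0


rack_kw = extra_rack_kw(GPUS_PER_RACK, EXTRA_W_PER_GPU)
energy_full = extra_energy_mwh_per_year(rack_kw)
energy_70 = extra_energy_mwh_per_year(rack_kw, avg_load=0.7)
print(f"Extra GPU power per rack: {rack_kw:.1f} kW")             # 36.0 kW
print(f"Extra energy per year at full load: {energy_full:.1f} MWh")  # 315.4 MWh
print(f"Extra energy per year at 70% load: {energy_70:.1f} MWh")     # 220.8 MWh
```

Swapping in a different GPU count or load factor is a one-line change; the point is that per-GPU watts add up quickly at rack and cluster scale.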

Last but not least, a higher TDP would also help on the manufacturing side, as it allows more flexible binning and more voltage headroom, which improves usable yield without the need to disable execution units or lower clocks.

As a result, the extra 500W not only improves the performance of Rubin GPUs and the competitive position of VR200 NVL144 rack-scale solutions, but also serves as reliability headroom, ensuring that the GPU delivers predictable, sustained throughput in large-scale datacenter deployments rather than just higher peak numbers on paper. As a bonus, Nvidia could potentially ship more Rubin GPUs, which is good for its bottom line.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers, and from modern process technologies and the latest fab tools to high-tech industry trends.

-

DS426 A 500W difference per GPU is a difference in cooling, especially at the macro level (datacenter cooling capacity). While value appears to go up (potentially buying fewer GPUs or getting higher peak performance), higher operational cost and lower efficiency aren't a good trade-off for some clients.

Hopefully AMD holds the line, as there's no point in getting into a power pi**ing match with nVidia.

blitzkrieg316 I don't want to hear another word from anyone involved about insufficient power grids and skyrocketing prices.

Cooe ... Nobody is going to want this with the GARGANTUAN increase in power and cooling demands. Power and cooling are WAAAAAY more expensive for data centers/hyperscalers than the actual servers & GPUs, as they are continual expenses, whereas the GPUs themselves are a one-time expense. The cost of operation with these will be absolute freaking garbage compared to both Nvidia's prior offerings and AMD Instinct. 🤷