Intel's roadmap adds mysterious 'hybrid' AI processor featuring x86 CPUs, dedicated AI accelerator, and programmable IP — chip may capitalize on a market forgotten by Nvidia and AMD

However, one looming question remains

Intel this week reiterated its plans to address emerging and niche AI inference use cases with products that offer the best performance efficiency and value. Among the products Intel mentioned is a hybrid solution that combines multiple IPs in a bid to address specific use cases that require an x86 CPU, a fixed-function AI accelerator, and programmable logic.

"Over the last several quarters, we have been developing a broader AI and accelerator strategy that we plan to refine in the coming months," said Lip-Bu Tan, chief executive of Intel, during an earnings call with investors and analysts. "This will include innovative options to integrate our x86 CPUs with fixed-function and programmable accelerator IP."

A mysterious hybrid design

Based on the description provided by the head of the company, the hybrid AI solution is set to pack Intel's x86 cores, Intel's fixed-function accelerator IP (which may derive from its Xe GPUs or from something more compute-oriented), and programmable logic to accelerate emerging workloads. The programmable portion could come from licensed FPGA IP, perhaps from Altera or from QuickLogic, which appears to have hard eFPGA IP implemented on Intel 18A (note that this is speculation, not confirmation). The goal is to produce a hybrid AI accelerator capable of targeting both existing and emerging inference workloads.

"Our focus is on the emerging wave of AI workloads — reasoning models, agentic and physical AI, and inference at scale — where we believe Intel can truly disrupt and differentiate," Tan said.

Building a hybrid processor to address AI workloads is not entirely new. When AMD and Intel developed their product strategies for artificial intelligence and supercomputing early this decade, they envisioned processors combining x86 cores and GPU-based accelerators, perhaps anticipating bursty workloads in which control flow would dominate execution.

Over time, it became clear that AI workloads have such a large appetite for compute performance that developers of frontier AI models prefer servers featuring one multi-core CPU and up to eight purebred compute GPUs over hybrid processors featuring both x86 and GPU IP, because in these workloads execution dominates control flow. As a result, both companies quietly shut down their hybrid projects and focused on AI accelerators based on GPU-derived architectures.

GPUs are still on the table

Intel first removed the x86 IP from the product codenamed Falcon Shores, converting it into a purebred AI GPU, and then decided against releasing it commercially, instead using it as a development vehicle for its software stack and rack-scale AI platform. As a result, Intel's AI roadmap appears to include two AI accelerators (if nothing has changed): Crescent Island, due this year, and Jaguar Shores, due in 2027.

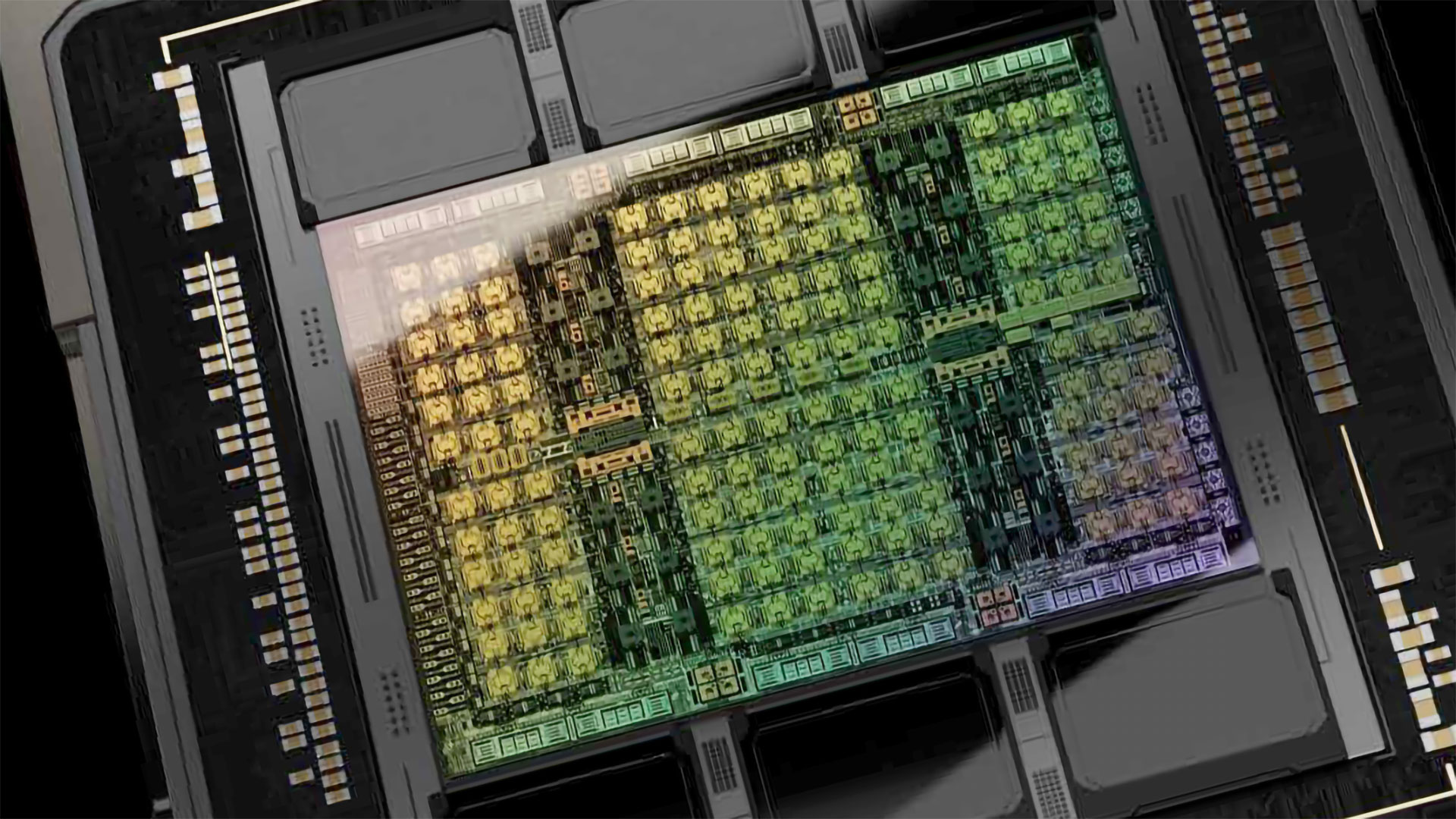

Crescent Island is an inference-optimized Data Center GPU that carries one or two high-performance processors based on the Xe3P architecture (a performance-enhanced version of the third-generation Xe GPU architecture) along with 160 GB of LPDDR5X memory. The product is designed to support a 'broad range of data types' relevant to inference workloads at cloud providers and in on-prem corporate deployments, and it is 'power and cost optimized for air-cooled enterprise servers.' Crescent Island will be a workhorse for accelerating mainstream AI models optimized for GPUs.

As for Jaguar Shores, it is expected to be based on an architecture optimized for compute as well as for AI training and inference, so it will be a full-fat, purebred data center GPU with plenty of HBM4 memory onboard. Meanwhile, rack-scale solutions based on Jaguar Shores are projected to feature silicon photonics interconnects to maximize the performance of massive clusters. Unless Intel repositions Jaguar Shores, it could indeed offer formidable performance.

Where does the new mysterious design fit in?

With the Crescent Island and Jaguar Shores GPUs (and likely their successors) on its roadmap, serving very different market segments from edge AI inference to on-prem deployments to large-scale inference and training, Intel does not seem, at first glance, to need anything else beyond positioning the pair properly. However, these observations are based solely on first impressions, so it's worth digging deeper.

First, the new product is not meant to compete against Nvidia's heavy-duty Rubin or Feynman GPUs or systems based on them (a role potentially reserved for Jaguar Shores). It is also not meant to compete with Crescent Island. But perhaps it can target heterogeneous, latency-sensitive on-prem AI workloads, such as retrieval-augmented generation (RAG)? On-prem data center deployments are a market that Intel has served well historically, so this is almost certainly the market it plans to address.

This market now potentially includes a wide range of AI workloads, from recommendation systems and fraud detection all the way to physical AI. While clearly distinct, these workloads all rely on bursty, lightweight models and rule engines running under strict latency-focused service-level agreements (SLAs), which is exactly where tightly integrated hardware excels.

Agentic AI systems also fall into this category: they spend much of their time planning, branching, and calling tools or databases, and then take small inference steps with limited batching. This keeps GPUs underutilized and exposes CPU-GPU synchronization overheads.

In all of the aforementioned cases, workloads are bursty, batch sizes are small, and control flow essentially dominates execution. This is exactly where tightly integrated heterogeneous processors, combining CPUs, fixed-function acceleration, and low-latency interconnects, are a better architectural match than discrete GPU-only platforms.

An elephant in the room

Despite all of this, there is one elephant in the room for Intel to address: the programmable logic mentioned by the Intel chief exec. Given how fast AI models, and therefore workloads, are evolving, fixed-function silicon alone would limit flexibility: any operations it cannot handle would fall back to CPU and GPU hardware, largely negating the benefits of tight synchronization. This is where programmable logic comes into play, as it allows frequently used but evolving parts of the workload to be accelerated. However, this requires very close integration of hardware and software. Whether Intel's oneAPI can deliver this remains to be seen, and it may even merit its own analysis.

The hybrid future

Intel has quietly brought a hybrid AI processor back onto its roadmap: a mysterious design that combines x86 CPU cores, fixed-function AI acceleration (with no details about its architecture), and programmable logic. Alongside Crescent Island and Jaguar Shores, this product may offer something that AMD and Nvidia do not address at this time.

If executed well, the hybrid approach could let Intel differentiate through tight integration and programmability, though its success will depend on software maturity, particularly around oneAPI.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.