China’s GPU cloud consolidates around Baidu and Huawei as domestic AI chips scale up — export controls leave gap open for homegrown solutions

Domestic AI accelerators, vertically integrated cloud stacks, and a flood of capital are reshaping China’s AI infrastructure market under U.S. export controls.

China’s GPU cloud market is consolidating rapidly around a small number of domestic champions, with Baidu and Huawei emerging as the clear leaders, as access to Nvidia’s most advanced accelerators remains restricted.

A recent Frost & Sullivan report puts Baidu and Huawei’s combined share of China’s "GPU cloud" market at more than 70%. The report defines that market specifically as cloud services built on domestically designed AI chips rather than imported GPUs. The concentration reflects a deliberate push by Chinese Internet and telecom giants to vertically integrate AI hardware, software frameworks, and cloud services. In parallel, a wave of AI chip start-ups is racing to public markets to fund the next stage of domestic silicon development.

This is all unfolding against the backdrop of U.S. export controls that continue to limit China’s access to leading-edge Nvidia and AMD accelerators. Since late 2022, Chinese firms have been forced to plan AI infrastructure growth around constrained supplies of downgraded GPUs or around entirely domestic alternatives. The result is a GPU cloud market that looks increasingly different from its Western counterparts, with architectural choices and performance trade-offs shaped as much by geopolitics as by engineering.

Vertical integration replaces scaling

Baidu and Huawei’s dominance rests on a shared strategy of full-stack control. Rather than acting as neutral cloud providers that source GPUs from global vendors, both companies design their own AI accelerators, optimize their own software frameworks, and deploy those components at scale inside proprietary data centers.

Baidu’s AI cloud is built around its Kunlun accelerator line, now in its third generation. In April 2025, Baidu disclosed that it had brought online a 30,000-chip training cluster powered entirely by Kunlun processors. According to the company, that cluster is capable of training foundation models with hundreds of billions of parameters and simultaneously supporting large numbers of enterprise fine-tuning workloads. By tightly coupling Kunlun hardware with Baidu’s PaddlePaddle framework and its internal scheduling software, Baidu is compensating for the absence of Nvidia’s CUDA ecosystem with vertical optimization.

Huawei has taken a similar but more industrial-scale approach. Its Ascend accelerator family now underpins a growing share of AI compute deployed by state-owned enterprises and government-backed cloud projects. Huawei’s latest large-scale configuration, based on Ascend 910-series chips, emphasizes dense clustering and high-speed interconnects to offset weaker per-chip performance and lower memory bandwidth compared to Nvidia’s H100-class GPUs. Huawei has been explicit about this trade-off internally, framing cluster-level scaling as the primary path forward while advanced process nodes and EUV lithography remain out of reach.

Both companies are also pursuing heterogeneous cluster designs. Chinese cloud providers have increasingly experimented with mixing different generations and vendors of accelerators within a single training or inference pool. This approach reduces dependence on any single chip supply source but raises software complexity, requiring custom orchestration layers to manage uneven performance characteristics. Baidu and Huawei are among the few firms in China with the engineering resources to make such systems viable at production scale, which further entrenches their market position.

Domestic chips close the gap

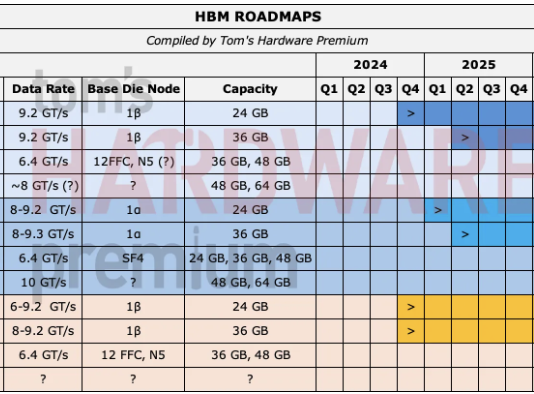

Domestic accelerators, such as Huawei’s Ascend 910B, Baidu’s Kunlun II, and the third-generation Kunlun P800, have narrowed the theoretical performance gap with Nvidia’s A100 and H100, but efficiency, yields, and memory subsystems continue to lag.

Huawei’s Ascend 910B, manufactured at SMIC on an advanced yet yield-constrained process, delivers competitive compute density on paper; however, it has been produced only in limited volumes. Huawei’s next-generation Ascend 910C, which uses a multi-die design to boost throughput, improves performance further but at the cost of significantly higher power consumption per rack. In large clusters, this translates into higher operating costs and more complex cooling requirements, which limit where such systems can be economically deployed.

Kunlun II, meanwhile, is broadly comparable to Nvidia’s A100 on certain workloads, while the newer P800 focuses on scalability and cloud integration rather than headline benchmarks. Baidu has been more restrained in its performance claims, emphasizing instead system-level throughput and service availability for enterprise customers.

Fueling the next phase

While Baidu and Huawei consolidate the cloud layer, a growing ecosystem of AI chip designers is moving aggressively into public markets to fund next-generation domestic silicon. Over the past year, Chinese regulators have accelerated approvals for tech IPOs, and AI chip firms have been among the biggest beneficiaries.

Companies such as Moore Threads and MetaX have debuted on domestic exchanges at valuations that imply extraordinary future growth, despite limited current market share and ongoing losses. Investor enthusiasm is being driven by policy alignment: Beijing has made clear that semiconductors and AI infrastructure are national priorities, and public markets are being used as a mechanism to channel capital into these sectors at scale.

This influx of funding is already reshaping competition beneath Baidu and Huawei. Several newly listed chipmakers are positioning their products explicitly for cloud deployment, with architectures optimized for large clusters rather than edge devices or consumer graphics. The risk, however, is fragmentation. Without a dominant software platform equivalent to CUDA, many of these start-ups depend on cloud providers like Baidu and Huawei for integration and market access. That dependence reinforces the central role of the GPU cloud leaders, even as the new entrants broaden the underlying hardware supply base.

A structurally different AI cloud market

China’s GPU cloud market is evolving into a structurally different system from those in the U.S. and Europe. Instead of competing primarily on access to the latest Nvidia hardware, Chinese providers are competing on integration depth, domestic supply security, and alignment with Beijing’s policy goals.

Tighter control over GPU cloud infrastructure strengthens Baidu’s and Huawei’s bargaining positions across the AI value chain. They are not just service providers but gatekeepers for domestic AI compute, with influence over which chips, frameworks, and tools gain traction at scale.

In the near term, this model is unlikely to displace Nvidia’s global dominance. Chinese firms still prefer Nvidia accelerators where they are legally and practically available, and performance-per-watt remains a decisive advantage for U.S.-designed GPUs. But within China, GPU cloud growth will be driven by domestic stacks, even if they are less efficient, because they are controllable.

Luke James is a freelance writer and journalist. Although his background is in law, he has a personal interest in all things tech, especially hardware and microelectronics, and anything regulatory.