Amazon launches Trainium3 AI accelerator, competing directly against Blackwell Ultra in FP8 performance — new Trn3 Gen2 UltraServer takes vertical scaling notes from Nvidia's playbook

Amazon's Trn3 Gen2 UltraServer beats Nvidia's GB300 NVL72 in FP8.

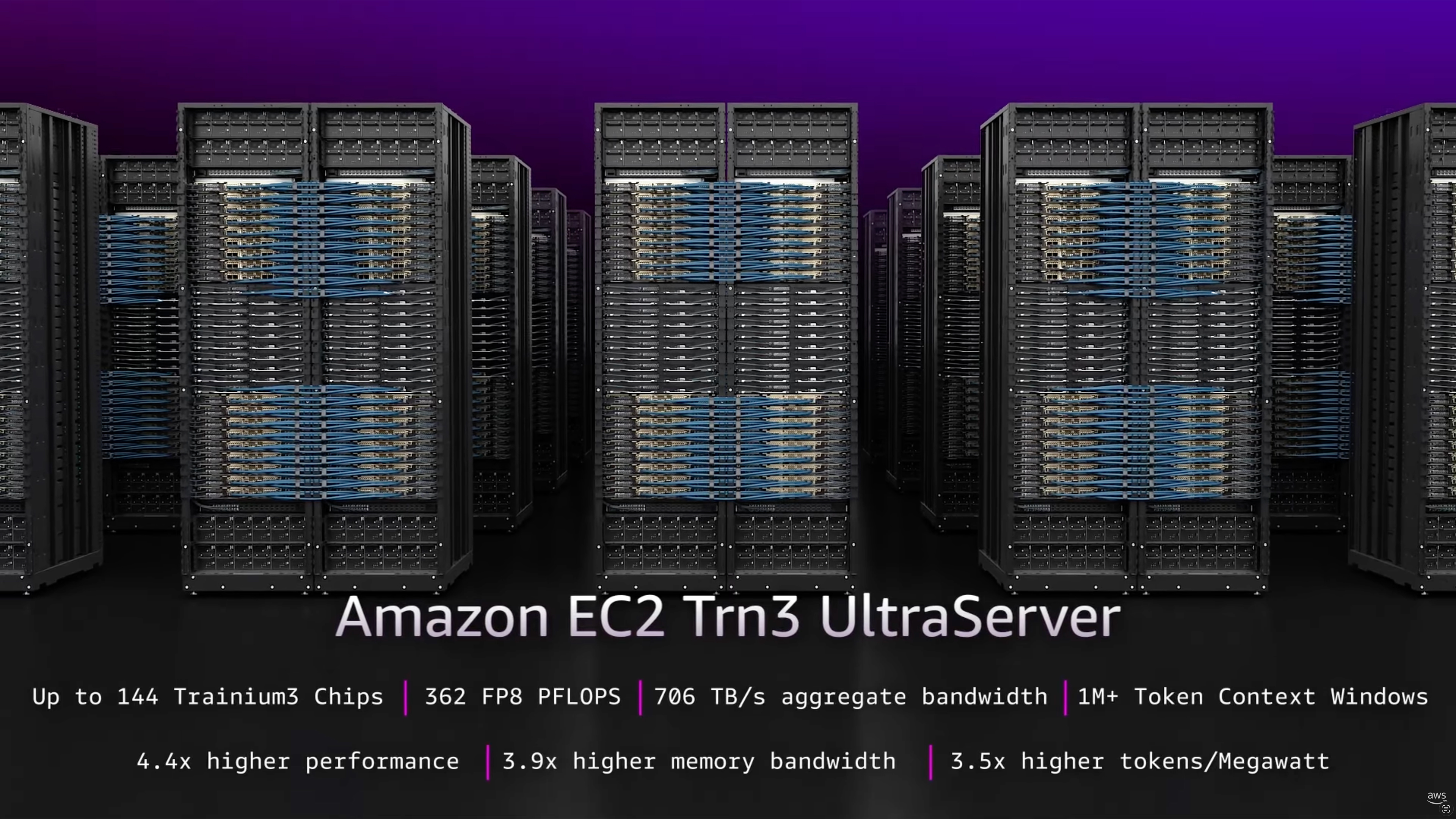

Amazon Web Services this week introduced its next-generation Trainium3 accelerator for AI training and inference. According to AWS, the new processor is twice as fast as its predecessor and four times more energy efficient, which should make it one of the more cost-effective options for AI training and inference. In absolute numbers, Trainium3 offers up to 2,517 MXFP8 TFLOPS, roughly half of what Nvidia's Blackwell Ultra delivers. However, AWS's Trn3 Gen2 UltraServer packs 144 Trainium3 chips per rack and offers 0.36 ExaFLOPS of FP8 performance, thereby matching Nvidia's GB300 NVL72. This is a big deal, as very few companies can challenge Nvidia's rack-scale AI systems.

AWS Trainium3

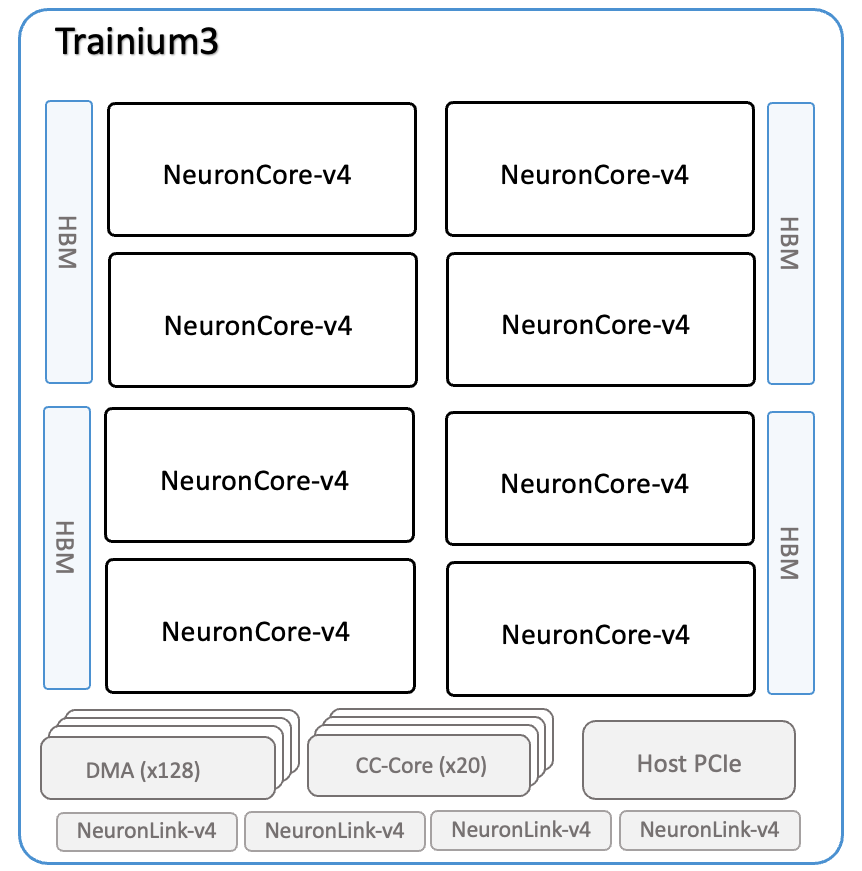

The AWS Trainium3 is a dual-chiplet AI accelerator that is equipped with 144 GB of HBM3E memory using four stacks, which provides peak memory bandwidth of up to 4.9 TB/s. Each compute chiplet, allegedly made by TSMC using its 3nm-class fabrication process, contains four NeuronCore-v4 cores (which feature an extended ISA compared to predecessors) and connects two HBM3E memory stacks. The two chiplets are connected using a proprietary high-bandwidth interface and share 128 independent hardware data-movement engines (which are key for the Trainium architecture), collective communication cores that coordinate traffic between chips, and four NeuronLink-v4 interfaces for scale-out connectivity.

| Accelerator name | Trainium2 | Trainium3 | B200 | B300 (Ultra) |
|---|---|---|---|---|
| Architecture | Trainium2 | Trainium3 | Blackwell | Blackwell Ultra |
| Process Technology | ? | N3E or N3P | 4NP | 4NP |
| Physical Configuration | 2 x Accelerators | 2 x Accelerators | 2 x Reticle Sized GPUs | 2 x Reticle Sized GPUs |
| Packaging | CoWoS-? | CoWoS-? | CoWoS-L | CoWoS-L |
| FP4 PFLOPS (per Package) | - | 2.517 | 10 | 15 |
| FP8/INT6 PFLOPS (per Package) | 1.299 | 2.517 | 5 | 5 |
| INT8 POPS (per Package) | - | - | 5 | 0.33 |
| BF16 PFLOPS (per Package) | 0.667 | 0.671 | 2.5 | 2.5 |
| TF32 PFLOPS (per Package) | 0.667 | 0.671 | 1.15 | 1.25 |
| FP32 PFLOPS (per Package) | 0.181 | 0.183 | 0.08 | 0.08 |
| FP64/FP64 Tensor TFLOPS (per Package) | - | - | 40 | 1.3 |
| Memory | 96 GB HBM3 | 144 GB HBM3E | 192 GB HBM3E | 288 GB HBM3E |
| Memory Bandwidth | 2.9 TB/s | 4.9 TB/s | 8 TB/s | 8 TB/s |
| HBM Stacks | 4 | 4 | 8 | 8 |
| Inter-GPU communications | NeuronLink-v3, 1.28 TB/s | NeuronLink-v4, 2.56 TB/s | NVLink 5.0, 200 GT/s, 1.8 TB/s bidirectional | NVLink 5.0, 200 GT/s, 1.8 TB/s bidirectional |
| SerDes speed (Gb/s unidirectional) | ? | ? | 224G | 224G |
| GPU TDP | ? | ? | 1200 W | 1400 W |
| Accompanying CPU | Intel Xeon | AWS Graviton and Intel Xeon | 72-core Grace | 72-core Grace |
| Launch Year | 2024 | 2025 | 2024 | 2025 |

A NeuronCore-v4 integrates four execution blocks (a tensor engine, a vector engine, a scalar engine, and a GPSIMD block) alongside 32 MB of local SRAM that is explicitly managed by the compiler instead of being cache-controlled. From a software development standpoint, the core is built around a software-defined dataflow model in which data is staged into SRAM by DMA engines, processed by the execution units, and then written back. Near-memory accumulation lets DMA perform read-add-write operations in a single transaction. The SRAM is not coherent across cores and is used for tiling, staging, and accumulation rather than general caching.

- The Tensor Engine is a systolic-style matrix processor for GEMM, convolution, transpose, and dot-product operations and supports MXFP4, MXFP8, FP16, BF16, TF32, and FP32 inputs with BF16 or FP32 outputs. Per core, it delivers 315 TFLOPS in MXFP8/MXFP4, 79 TFLOPS in BF16/FP16/TF32, and 20 TFLOPS in FP32, and it implements structured sparsity acceleration using M:N patterns (such as 4:16, 4:12, 4:8, 2:8, 2:4, 1:4, and 1:2), allowing the same 315 TFLOPS peak on supported sparse workloads.

- The Vector Engine handles vector transforms and provides about 1.2 TFLOPS FP32, hardware conversion into MXFP formats, and a fast exponent unit with four times the throughput of the scalar exponent path for attention workloads. The unit supports various data types, including FP8, FP16, BF16, TF32, FP32, INT8, INT16, and INT32.

- The Scalar Engine also provides about 1.2 TFLOPS FP32 for control logic and small operations across FP8-to-FP32 and integer data types.
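To make the M:N notation concrete: a pattern such as 2:4 is conventionally read as keeping at most two non-zero values in every group of four consecutive weights. The sketch below is a minimal plain-Python illustration under that assumption. The function name `prune_m_of_n` and the magnitude-based selection rule are hypothetical choices for illustration; the article does not say how AWS's toolchain decides which values to keep.

```python
def prune_m_of_n(weights, m, n):
    """Keep the m largest-magnitude values in each group of n; zero the rest.

    Illustrative only: magnitude-based selection is an assumption,
    not a documented Trainium3 behavior.
    """
    if len(weights) % n != 0:
        raise ValueError("length of weights must be a multiple of n")
    pruned = []
    for start in range(0, len(weights), n):
        group = weights[start:start + n]
        # Positions of the m largest-magnitude entries within this group
        keep = set(
            sorted(range(n), key=lambda i: abs(group[i]), reverse=True)[:m]
        )
        pruned.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return pruned


row = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.8, 0.01]
print(prune_m_of_n(row, 2, 4))  # 2:4 pattern
print(prune_m_of_n(row, 1, 4))  # 1:4 pattern
```

Because the zeroed positions follow a fixed pattern, hardware can skip them in a predictable way, which is the general idea behind structured-sparsity acceleration.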

Perhaps the most interesting component of NeuronCore-v4 is the GPSIMD block, which integrates eight fully-programmable 512-bit vector processors that can execute general-purpose code written in C/C++ while accessing local SRAM. GPSIMD is integrated into NeuronCore because not everything in real AI models maps cleanly to a tensor engine. Modern AI workloads contain a lot of code for unusual data layouts, post-processing logic, indexing, and model-specific math. These are hard or inefficient to express as matrix operations, and running them on the host CPU would introduce latency and costly data transfers. GPSIMD solves this by providing real general-purpose programmable vector units inside the core, so such logic runs directly next to the tensors at full speed and using the same local SRAM.

In short, NeuronCore-v4 operates as a tightly coupled dataflow engine in which tensor math, vector transforms, scalar control, and custom code all share a local 32 MB scratchpad and are orchestrated by the Neuron compiler rather than by a hardware warp scheduler of the kind used on Nvidia GPUs.
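The stage, compute, and write-back flow described for the core can be pictured with a toy tiled matrix multiply. This is a conceptual sketch in plain Python, not Neuron or NKI code: the names `dma_load`, `dma_accumulate`, `tile_matmul`, and the `TILE` size are all hypothetical, nested lists stand in for HBM, and small copied tiles stand in for the software-managed SRAM.

```python
TILE = 2  # hypothetical tile edge; on real hardware the compiler picks tile sizes


def dma_load(matrix, row0, col0):
    """Copy a TILE x TILE block out of 'HBM' into a scratchpad-like tile."""
    return [row[col0:col0 + TILE] for row in matrix[row0:row0 + TILE]]


def dma_accumulate(dest, tile, row0, col0):
    """Model a read-add-write transfer: add a tile into the destination."""
    for i, tile_row in enumerate(tile):
        for j, value in enumerate(tile_row):
            dest[row0 + i][col0 + j] += value


def tile_matmul(a_tile, b_tile):
    """Multiply two staged tiles; stands in for the tensor engine."""
    return [
        [sum(a_tile[i][k] * b_tile[k][j] for k in range(TILE)) for j in range(TILE)]
        for i in range(TILE)
    ]


def tiled_matmul(a, b):
    """Square matmul where every operand is explicitly staged tile by tile.

    Assumes square inputs whose size is a multiple of TILE.
    """
    n = len(a)
    c = [[0] * n for _ in range(n)]
    for i in range(0, n, TILE):
        for j in range(0, n, TILE):
            for k in range(0, n, TILE):
                a_tile = dma_load(a, i, k)            # stage inputs
                b_tile = dma_load(b, k, j)
                partial = tile_matmul(a_tile, b_tile)  # compute on staged data
                dma_accumulate(c, partial, i, j)       # accumulate on write-back
    return c


a = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
identity = [[1 if r == col else 0 for col in range(4)] for r in range(4)]
print(tiled_matmul(a, identity) == a)
```

The point of the sketch is that nothing is cached implicitly: every tile is moved on purpose, and partial results are summed as part of the write-back step rather than by a separate read, add, and store sequence.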

Performance-wise, Trainium3 outperforms its direct predecessor in FP8 compute (well, MXFP8) by almost two times, hitting 2.517 PFLOPS per package (clearly ahead of Nvidia's H100/H200, but behind Blackwell B200/B300), and adds MXFP4 support. However, Trainium3's BF16, TF32, and FP32 performance remains on par with Trainium2, which clearly shows that AWS is betting on MXFP8 for training and inference going forward. To that end, the company has not pushed its BF16 (which is widely used for training nowadays) and FP32 capabilities further, as it seems comfortable with the performance it already has, given that these formats are now used primarily for gradient accumulation, master weights, optimizer states, loss scaling, and some precision-sensitive operations.

One capability worth mentioning is Trainium3's Logical NeuronCore Configuration (LNC) feature, which lets the Neuron compiler fuse four physical cores into a wider, automatically synchronized logical core with combined compute, SRAM, and HBM. This could be useful for the very wide layers or long sequence lengths that are common with very large AI models.

AWS's Trn3 UltraServers: Almost beating Nvidia's GB300 NVL72

Much of Nvidia's success in recent quarters was driven by its rack-scale NVL72 solutions featuring 72 of its Blackwell GPUs. These systems support a massive scale-up world size and an all-to-all topology, which is especially important for Mixture-of-Experts (MoE) and autoregressive inference. This gives Nvidia a massive advantage over AMD and developers of custom accelerators, such as AWS. To enable this capability, Nvidia had to develop NVLink switches, sophisticated network cards, and DPUs, a massive silicon endeavor. However, it looks like AWS's Trn3 UltraServers will give Nvidia's GB300 NVL72 a run for its money.

| Name | Trn2 UltraServer | Trn3 Gen1 UltraServer | Trn3 Gen2 UltraServer | GB200 NVL72 | GB300 NVL72 |
|---|---|---|---|---|---|
| GPU Architecture | Trainium2 | Trainium3 | Trainium3 | Blackwell | Blackwell Ultra |
| GPU/GPU+CPU | Xeon + Trainium2 | Xeon + Trainium3 | Graviton + Trainium3 | GB200 | GB300 |
| Compute Chiplets | 128 | 128 | 288 | 144 | 144 |
| GPU Packages | 64 | 64 | 144 | 72 | 72 |
| FP4 PFLOPS (Dense) | - | 161.1 | 362.5 | 720 | 1080 |
| FP8 PFLOPS (Dense) | 83.2 | 161.1 | 362.5 | 360 | 360 |
| FP16/BF16 PFLOPS (Dense) | 164 (sparse) | 42.9 | 96.6 | 180 | 180 |
| FP32 PFLOPS | 11.6 | 11.7 | 26.4 | 5.76 | 5.76 |
| HBM Capacity | 6 TB | 9 TB | 21 TB | 14 TB | 21 TB |
| HBM Bandwidth | 185.6 TB/s | 313.6 TB/s | 705.6 TB/s | 576 TB/s | 576 TB/s |
| CPU | Xeon Sapphire Rapids | Xeon | Graviton | 72-core Grace | 72-core Grace |
| NVSwitch | - | - | - | NVSwitch 5.0 | NVSwitch 5.0 |
| NVSwitch Bandwidth | ? | ? | ? | 3600 GB/s | 3600 GB/s |
| Scale-Out | ? | ? | ? | 800G, copper | 800G, copper |
| Form-Factor Name | ? | ? | ? | Oberon | Oberon |
| Launch Year | 2024 | 2025 | 2025 | 2024 | 2025 |

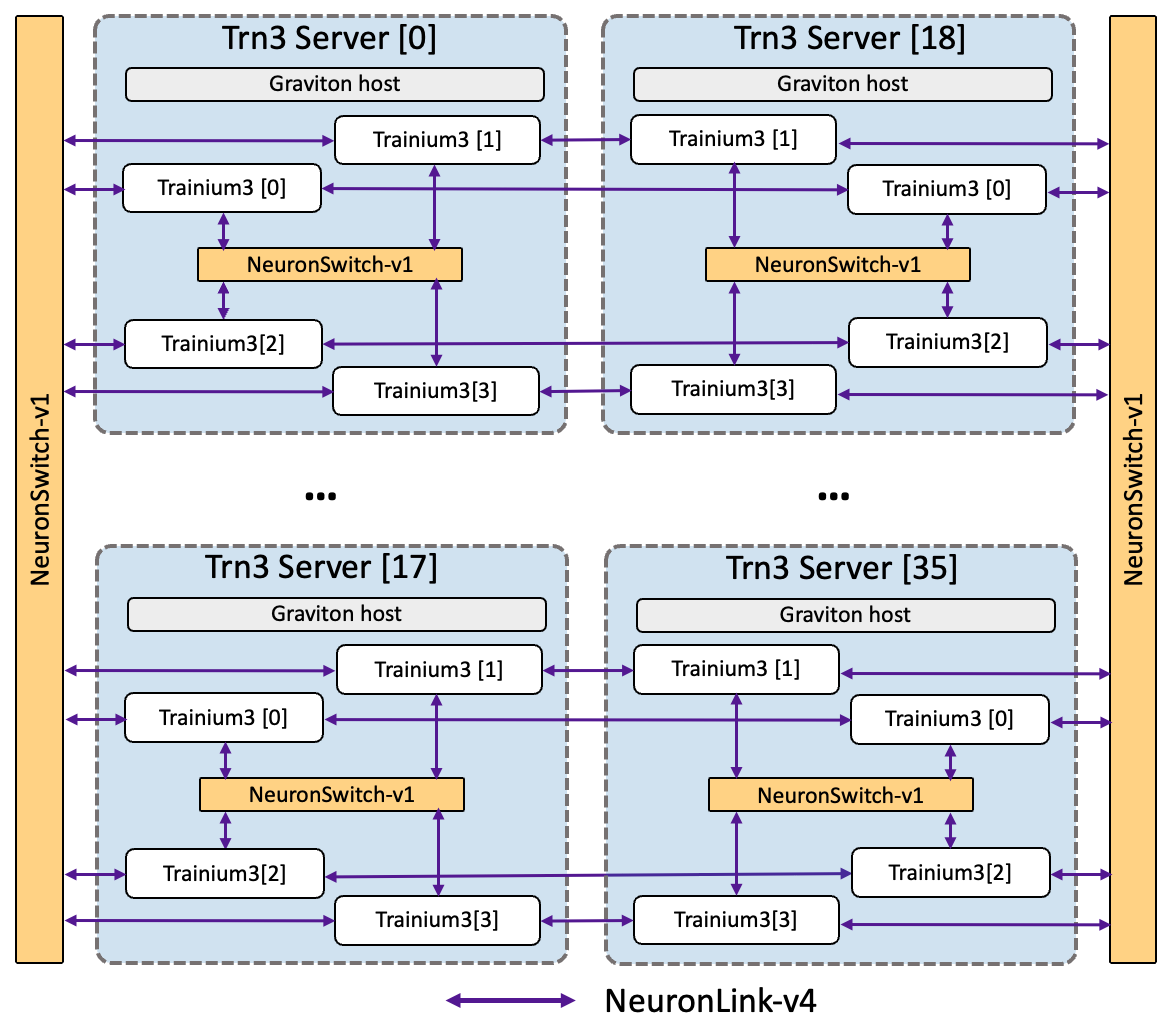

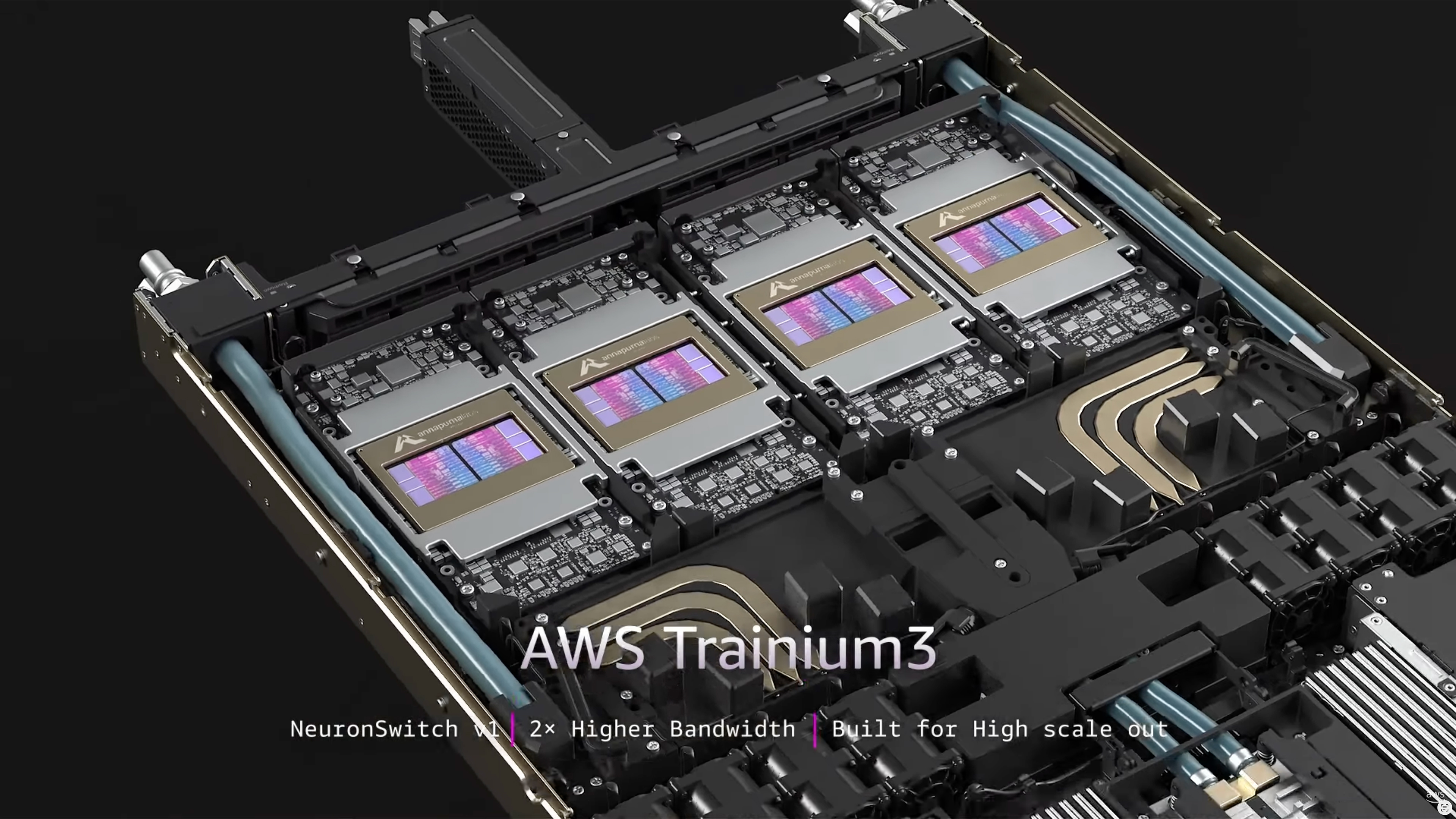

Trn3 UltraServers, powered by Trainium3 AI accelerators, will be offered in two sizes: one configuration packs 64 accelerators and presumably an Intel Xeon CPU, while the larger variant brings together 144 accelerators and an Arm-based Graviton in a single rack-scale solution. In the larger system, the 144 Trainium3 accelerators are distributed across 36 physical servers, with one Graviton CPU and four Trainium3 chips installed in each machine. In many ways, such an arrangement resembles Nvidia's NVL72 approach, which uses Nvidia's CPU, GPU, and connectivity silicon, highlighting AWS's direction of building vertically integrated AI platforms.

Within a server, Trainium3 accelerators are linked through a first NeuronSwitch-v1 layer using NeuronLink-v4 (at 2.56 TB/s per device according to the specifications table above, though it is unclear whether this is single-direction or aggregate bidirectional bandwidth), and communication between different servers is routed through two additional NeuronSwitch-v1 fabric layers, again carried over NeuronLink-v4. Unfortunately, AWS does not publish aggregate NeuronSwitch-v1 bandwidth across the domain.

From a performance standpoint, the larger configuration with 144 Trainium3 accelerators delivers 362.5 PFLOPS of dense MXFP8/MXFP4 performance (on par with GB300 NVL72 in FP8), 96.624 PFLOPS of BF16/FP16/TF32 throughput, and 26.352 PFLOPS in FP32. The system is also equipped with 21 TB of HBM3E memory with an aggregate memory bandwidth of 705.6 TB/s, leaving Nvidia's GB300 NVL72 behind in this metric.
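The rack-level figures follow from simple multiplication of the per-package numbers. The short check below uses only values quoted in this article (per-package Trainium3 specifications, 36 servers with four chips each, and 72 GB300 packages at 5 FP8 PFLOPS); the variable and function names are illustrative, and linear scaling is an idealization that ignores any real-world losses.

```python
# Per-package Trainium3 figures as quoted in this article
PER_PACKAGE = {
    "mxfp8_pflops": 2.517,
    "bf16_pflops": 0.671,
    "fp32_pflops": 0.183,
    "hbm_gb": 144,
    "hbm_tb_per_s": 4.9,
}

SERVERS_PER_RACK = 36     # larger Trn3 UltraServer configuration
PACKAGES_PER_SERVER = 4   # four Trainium3 chips per server


def rack_totals(packages, per_package=PER_PACKAGE):
    """Scale per-package peak figures linearly to a whole rack."""
    return {name: value * packages for name, value in per_package.items()}


packages = SERVERS_PER_RACK * PACKAGES_PER_SERVER
totals = rack_totals(packages)

# GB300 NVL72 dense FP8, per the comparison table: 72 packages at 5 PFLOPS each
gb300_fp8_pflops = 72 * 5
fp8_ratio = totals["mxfp8_pflops"] / gb300_fp8_pflops

print(f"packages per rack: {packages}")
for name, value in totals.items():
    print(f"{name}: {value:.3f}")
print(f"FP8 vs GB300 NVL72: {fp8_ratio:.3f}x")
```

The products land within rounding distance of the quoted totals (for example, roughly 362.4 PFLOPS against the stated 362.5), which suggests the published per-package numbers are themselves rounded.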

In general, Trn3 Gen2 UltraServer appears very competitive against Nvidia's GB300 NVL72 in terms of FP8 performance. FP8 is about to get more popular for training, so betting on this format makes a lot of sense. Of course, Nvidia has an ace up its sleeve in the form of NVFP4, which is positioned for both inference and training; armed with this format, the company's Blackwell-based machines hold a commanding lead (1,080 FP4 PFLOPS for GB300 NVL72 versus 362.5 for Trn3 Gen2). The same applies to BF16, which is only marginally faster than on Trainium2 and not nearly enough to beat Nvidia's Blackwell.

Overall, while the AWS Trn3 Gen2 UltraServer with 144 Trainium3 accelerators looks quite competitive in FP8 against Nvidia's Blackwell-based NVL72 machines, Nvidia's solution is more universal in general.

AWS Neuron going the way of CUDA

In addition to rolling out new AI hardware, AWS announced a broad expansion of its AWS Neuron software stack at its annual re:Invent conference this week. AWS positions this release as a shift toward openness and developer accessibility, so the update promises to make Trainium platforms easier to adopt, let standard machine learning frameworks run directly on Trainium hardware, give users deeper control over performance, and even expose low-level optimization paths for experts.

A major addition is native PyTorch integration through an open-source backend named TorchNeuron. Using PyTorch's PrivateUse1 mechanism, Trainium now appears as a native device type, which enables existing PyTorch code to execute without modification. TorchNeuron also supports interactive eager execution, torch.compile, and distributed features such as FSDP and DTensor, and it works with popular ecosystems including TorchTitan and Hugging Face Transformers. Access to this feature is currently restricted to select users as part of the private preview program.

AWS also introduced an updated Neuron Kernel Interface (NKI) that gives developers direct control over hardware behavior, including instruction-level programming, explicit memory management, and fine-grained scheduling, exposing Trainium's instruction set to kernel developers. In addition, the company has released the NKI Compiler as open source under Apache 2.0. The programming interface is available publicly, while the compiler itself remains in limited preview.

AWS also released Neuron Explorer, a debugging and tuning toolkit that lets software developers and performance engineers improve how their models run on Trainium. It traces execution from high-level framework calls all the way down to individual accelerator instructions, while offering layered profiling, source-level visibility, integration with development environments, and AI-guided suggestions for performance tuning.

Finally, AWS introduced its Neuron Dynamic Resource Allocation (DRA) to integrate Trainium directly into Kubernetes without the need for custom schedulers. Neuron DRA relies on the native Kubernetes scheduler and adds hardware-topology awareness to enable complete UltraServers to be allocated as a single resource and then flexibly assign hardware for each workload. Neuron DRA supports Amazon EKS, SageMaker HyperPod, and UltraServer deployments, and is provided as open-source software with container images published in the AWS ECR public registry.

Both Neuron Explorer and Neuron DRA are designed to simplify cluster management and give users fine-grained control over how Trainium resources are assigned and used. Taken together, these updates move AWS closer to making its Trainium-based platforms much more ubiquitous than they are today, in an effort to make them more competitive against CUDA-based offerings from Nvidia.

In a nutshell

This week, Amazon Web Services released its third-generation Trainium accelerator for AI training and inference, as well as the accompanying Trn3 UltraServer rack-scale solutions. For the first time, Trn3 Gen2 UltraServer rack-scale machines will rely solely on AWS in-house hardware, including CPU, AI accelerators, switching hardware, and connectivity fabrics, signalling that the company has adopted Nvidia's vertical integration hardware strategy.

AWS claims that its Trainium3 processor offers roughly 2X higher performance and 4X better energy efficiency than Trainium2, as each accelerator delivers up to 2.517 PFLOPS (MXFP8), beating Nvidia's H100 but trailing B200, and is accompanied by 144 GB of HBM3E with 4.9 TB/s of bandwidth. Meanwhile, Trn3 Gen2 UltraServers scale to 144 accelerators for about 0.36 ExaFLOPS of FP8 performance, which brings them on par with Nvidia's GB300 NVL72 rack-scale solution. Nonetheless, Nvidia's hardware still looks more universal than AWS's.

To catch up with Nvidia, Amazon also announced major updates to its Neuron software stack to make Trainium-based platforms easier to use, allow standard machine-learning frameworks to run natively on the hardware, give developers greater control over performance, and open access to low-level tuning for experts.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.