The Rise Of Client-Side Deep Learning

As chips become smaller and more powerful, and as new ways to accelerate deep learning are discovered, large data centers are no longer the only place where the “artificial intelligence” in your devices can run. Small embedded chips capable of running it can now be put into anything from IoT devices to self-driving cars.

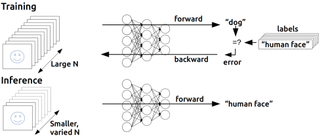

Deep Learning Training

There are two stages when working with deep neural networks. In the first stage, the neural network is “trained.” That’s when the parameters of the network are determined using examples of labeled inputs and a desired output. For instance, the network could be trained to “learn” how a human face looks by feeding it millions of pictures with human faces.

In the past, machine learning engineers had to design the features and rules for these systems by hand, which was a highly complex task and produced less than stellar results. It was just too hard for humans to think of everything needed to create an “artificial intelligence” that could “see” and recognize objects and humans. With deep neural networks, the parameters are instead generated automatically by training the network on examples of the items it should recognize.
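To make the idea of "learning parameters from labeled examples" concrete, here is a minimal sketch in Python. It is not how any production framework works: it assumes a single artificial neuron, a toy four-example dataset (logical OR) and plain gradient descent, and the names `train` and `predict` are made up for illustration. Real networks have millions of parameters, but the loop has the same shape: predict, measure the error, nudge the parameters.

```python
import math
import random


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def predict(w, b, x):
    """Output of one neuron: a weighted sum of the inputs passed through sigmoid."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def train(examples, epochs=2000, lr=0.5, seed=0):
    """Fit weights w and bias b by stochastic gradient descent.

    examples is a list of (inputs, label) pairs with label 0 or 1.
    """
    rng = random.Random(seed)
    w = [rng.uniform(-0.1, 0.1) for _ in examples[0][0]]
    b = 0.0
    for _ in range(epochs):
        for x, label in examples:
            # For cross-entropy loss, the gradient with respect to the
            # weighted sum is simply (prediction - label).
            err = predict(w, b, x) - label
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


# Toy labeled data (an assumption for illustration): logical OR.
examples = [((0.0, 0.0), 0), ((0.0, 1.0), 1), ((1.0, 0.0), 1), ((1.0, 1.0), 1)]
w, b = train(examples)

for x, label in examples:
    print(x, "label:", label, "prediction:", round(predict(w, b, x), 3))
```

Nobody typed in the final values of `w` and `b`; they came out of the training loop, which is the point of the paragraph above.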

Deep Learning Inference

In the second stage, the network is deployed to run “inference,” which is the part of the process that classifies new unknown inputs using the previously trained parameters. For example, if a few new pictures are introduced to the neural network, it can determine which of them has a human face in it. This inference stage requires far fewer resources to run than the training stage, which is why it can now also be done locally on embedded chips and small devices.
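As a rough illustration of why inference is so much cheaper, the sketch below runs a tiny two-layer network using parameters that are already fixed. The values in `PARAMS` are hand-picked stand-ins for a trained model (they happen to compute XOR), and `dense`, `relu` and `infer` are hypothetical helper names. There are no gradients, no labels and no repeated passes over a dataset, only one forward pass per input.

```python
def relu(values):
    """Rectified linear unit applied to each element."""
    return [max(0.0, v) for v in values]


def dense(x, weights, bias):
    """One fully connected layer; weights holds one row per output unit."""
    return [sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(weights, bias)]


# Stand-in for parameters that would normally be loaded from a trained model.
# These particular values are hand-picked for illustration, not learned.
PARAMS = {
    "w1": [[1.0, 1.0], [1.0, 1.0]],
    "b1": [0.0, -1.0],
    "w2": [[1.0, -2.0]],
    "b2": [0.0],
}


def infer(x, params=PARAMS):
    """Classify one input with a single forward pass; nothing is updated."""
    hidden = relu(dense(x, params["w1"], params["b1"]))
    score = dense(hidden, params["w2"], params["b2"])[0]
    return 1 if score > 0.5 else 0


for sample in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(sample, "->", infer(sample))
```

The work per input is a fixed number of multiply-adds, which is why a small, low-power chip can keep up as long as the network itself is small enough.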

It is widely accepted that the best way to train deep neural networks right now is to use GPUs because of their speed and efficiency compared to CPUs. Some companies, including Microsoft and Intel (through Altera), believe that field-programmable gate arrays (FPGAs) could provide a viable alternative to GPUs for high-performance and efficient training of deep neural networks in the future.

However, the next big fight for efficiency will also be over the inference stage of the process, which can now be done on hardware that requires only a few watts of power.

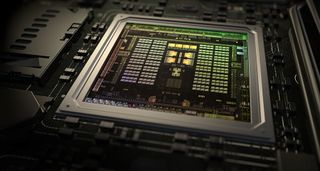

Nvidia Bets On GPUs For Inference

Nvidia believes that GPUs are not only ideal for deep neural network (DNN) training because of the inherent efficiency of graphics processors for such tasks, but also for DNN inference. In order to train deep neural networks to become moderately good for an intended purpose, you need large clusters of high-end GPUs.

Therefore, for the foreseeable future, training DNNs on embedded chips won’t be practical, but running inference on them is quite doable because of the much lower performance requirements to achieve a desired result.

In a recent test performed by Nvidia that compared its Tegra X1 with Intel’s Core i7 6700K, Nvidia’s embedded chip demonstrated performance that compared favorably with the Intel CPU, but with an order of magnitude lower power consumption. The Jetson TX1 (which is rated at 1 TFLOPS vs. 1.2 TFLOPS for the Tegra X1) achieved a top processing speed of 258 img/sec with an efficiency of 45 img/sec/W, whereas the Core i7 achieved a peak of 242 img/sec with an efficiency of 3.9 img/sec/W.
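The numbers above can be turned into a quick back-of-the-envelope comparison. The sketch below divides throughput by efficiency to get an implied power draw. That step assumes the efficiency figure was measured at the same operating point as the peak throughput, which the test summary does not state, so treat the wattages as rough estimates. The function name `implied_power_watts` is made up for this example.

```python
def implied_power_watts(images_per_sec, images_per_sec_per_watt):
    """Power implied by a throughput and an efficiency figure.

    Assumes both figures describe the same operating point.
    """
    return images_per_sec / images_per_sec_per_watt


# (throughput in img/sec, efficiency in img/sec/W) as quoted in the article.
results = {
    "Jetson TX1": (258.0, 45.0),
    "Core i7 6700K": (242.0, 3.9),
}

for name, (throughput, efficiency) in results.items():
    watts = implied_power_watts(throughput, efficiency)
    print(f"{name}: {throughput:.0f} img/sec at roughly {watts:.1f} W")

# How many times more images per watt the TX1 processes.
efficiency_ratio = results["Jetson TX1"][1] / results["Core i7 6700K"][1]
print(f"Efficiency advantage: about {efficiency_ratio:.1f}x")
```

Under that assumption the TX1 works out to roughly 5.7 W against roughly 62 W for the Core i7, with about 11.5 times the images per watt, which is consistent with the "order of magnitude" claim.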

Earlier versions of Nvidia’s CUDA Deep Neural Network (cuDNN) software library focused on DNN training, but the current cuDNN 4 also introduces optimizations for inference on both small and large GPUs, which should further improve the performance of deep neural networks running on both the Tegra X1 and the company’s larger GPUs.

Nvidia is also working on a GPU Inference Engine (GIE) that optimizes trained neural networks and delivers GPU-accelerated inference at runtime for web, embedded and automotive applications. This software engine will be part of Nvidia's Deep Learning SDK soon. Deep learning software seems to be an area where Nvidia has a leadership position right now, with its GPUs supporting a wide range of deep learning applications.

Nvidia’s Jetson TX1 embedded module is targeted mainly at autonomous vehicles that need to recognize objects and process a large number of pictures or frames in a short amount of time so the cars can react quickly when needed. However, the Jetson TX1 could also be used in robots or drones, or other devices that need teraFLOPS+ performance on a relatively low power budget (under 10W for the Tegra X1 SoC).

Movidius Bets On ‘Vision Processing Units’ (VPUs)

Movidius’ goal is to create an embedded chip that beats even conventional smartphone SoCs in power consumption but can still deliver enough performance to run deep neural networks. This is why the company went with a more custom, highly parallel “vision-focused” architecture that combines programmable SIMD-VLIW processors with hardware accelerators for video decoding and other tasks.

The Myriad 2 vision processing unit (VPU) can perform 8-, 16-, and 32-bit integer and 16- and 32-bit floating point arithmetic. It also has unique features such as hardware support for sparse data structures and higher local thread memory than what is typically found in a GPU.
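Support for narrow data types matters because inference often tolerates reduced precision. The sketch below shows the general idea with 8-bit integers: weights and inputs are scaled into the range -127 to 127, multiplied and accumulated as integers, then scaled back. This is a generic illustration of symmetric linear quantization, not a description of how Movidius’ software uses the Myriad 2; the helper names and sample values are assumptions.

```python
def quantize_int8(values):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(v) for v in values) or 1.0
    scale = max_abs / 127.0
    return [round(v / scale) for v in values], scale


def int8_dot(qa, scale_a, qb, scale_b):
    """Dot product done on integers, converted back to a float at the end."""
    acc = sum(x * y for x, y in zip(qa, qb))  # integer multiply-accumulate
    return acc * scale_a * scale_b


# Sample values chosen for illustration only.
weights = [0.42, -1.30, 0.07, 0.88]
inputs = [1.00, 0.40, -0.25, 0.75]

exact = sum(w * x for w, x in zip(weights, inputs))

q_weights, w_scale = quantize_int8(weights)
q_inputs, x_scale = quantize_int8(inputs)
approx = int8_dot(q_weights, w_scale, q_inputs, x_scale)

print("float result:", exact)
print("int8 result: ", approx)
print("abs error:   ", abs(exact - approx))
```

The integer version needs a quarter of the memory of 32-bit floats and lands close to the floating-point answer, which is often good enough for classification.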

Movidius’ VPU has a TDP of 1.2W and achieves a performance of 150 GOPS (giga operations per second) inside the recently announced Fathom neural compute stick. It can be put into anything from wearables to tablets such as Google’s Project Tango, as well as surveillance cameras, drones, robots, and AR/VR headsets for gesture and eye tracking.

Through its software development kit, Movidius provides multiple solutions for 3D depth, natural user interfaces, and object tracking that are optimized for its vision processing unit. Developers can also customize those solutions or run their own proprietary solutions on the platform.

More Efficient Client-Side Deep Learning

In the context of total power consumption for a given outcome, sending data that is first processed in the cloud to a client device through long-distance networks can be significantly less efficient than processing it locally on the device. The only issue concerns how much performance you can squeeze into a small device and whether that level of performance is enough for the results you’re trying to achieve with deep learning inference. However, client-side inference also has an advantage in low latency, which can enable all sorts of deep learning applications that wouldn’t be possible through the cloud.

Processing information such as personal pictures and videos in the cloud through deep learning can also be a big privacy risk, not just from the company doing the processing of that data, but also from attackers looking to obtain that processed information. A surveillance camera, for instance, would be less at risk of getting hacked remotely if it performed its analytics locally instead of in the cloud.

Both Nvidia and Movidius see deep learning as the “next big thing” in computation, which is why Nvidia is optimizing its GPUs for it with each new generation, and why Movidius created a specialized platform dedicated to deep learning and other vision-oriented tasks.

Just as cloud-based deep learning is poised to grow at a fast clip over the next few years, we’re going to see more embedded chips take a shot at deep learning as well. More of our devices will try to become aware of their surroundings through computer vision, and they will need to process that information quickly, intelligently, and in a power-efficient way.

Lucian Armasu is a Contributing Writer for Tom's Hardware. You can follow him at @lucian_armasu.

-

TechyInAZ

skynet

Nuff said. :)

It will be interesting to see how this all plays out. Who knows, maybe in the very near future we might have an AI that is really close to thinking on its own with all these deep learning networks. That's a scary thought.

-

InvalidError

Quote: "Who knows, maybe in the very near future we might have an AI that is really close to thinking on its own with all these deep learning networks. That's a scary thought."

I'd say it is still a few decades too soon to be scared since such an AI using foreseeable future tech would still be several times larger than IBM's Watson.

-

clonazepam

Quote: "skynet. Nuff said. :) It will be interesting to see how this all plays out. Who knows, maybe in the very near future we might have an AI that is really close to thinking on its own with all these deep learning networks. That's a scary thought."

I feel like an open source project by AMD and NVIDIA could replace the US Senate and the House without leaving us in too bad of shape lol

-

mavikt

I'm glad they're recognizing that you need computing muscle to train these things... I remember 1997-98, when I was an exchange student in Spain taking a course in 'Redes neurales' (neural networks), trying to train a very small neural network to control an inverted pendulum. I had the assignment run training nightly on a PII 200 I bought to get through the course (amongst others).

I don't think I ever got a good network 'configuration' out of the training. Might have been due to bad input or the Italian guy I was working with...

-

mavikt

Quote: "I'd say it is still a few decades too soon to be scared since such an AI using foreseeable future tech would still be several times larger than IBM's Watson."

Yes, especially since current uP technology seems to be nudging against a physical barrier preventing us from getting smaller and faster as quickly as before. Unfortunately, that feels comforting, since you don't know what the mad doctor would do...

-

bit_user

Quote: "In a recent test performed by Nvidia that compared its Tegra X1 with Intel’s Core i7 6700K, Nvidia’s embedded chip demonstrated performance that compared favorably with the Intel CPU, but with an order of magnitude lower power consumption."

This was rather silly marketing propaganda, not surprisingly. They compared the TX-1's GPU to the Skylake CPU cores. Had they used the i7-6700K's GPU, it would've stomped the TX-1 in raw performance, and had more comparable efficiency numbers.

The only real takeaway from that comparison is that deep learning is almost perfect for GPUs. But that's pretty obvious to anyone who knows anything about it.

-

bit_user

Quote: "skynet"

Did you actually read the article?

He's not talking about machines performing abstract reasoning or carrying out deep thought processes, or anything like that. The whole article is basically about client devices becoming powerful enough to run complex pattern recognition networks that have been generated in the cloud. This really has nothing to do with Sky Net.

And there's not even any real news, here. It's just a background piece that's specifically intended to educate people about what these new capabilities are, that client devices are gaining. I'd hope people would be less likely to fear neural networks running in their phones, after reading this.

I'm starting to get annoyed by people reflexively trotting out that refrain every time the subject of machine learning comes up. If we're to avoid a Sky Net scenario, then people will need to become more sophisticated in distinguishing the enabling technologies for true cognition from the run-of-the-mill machine learning that is the bread and butter of companies like Facebook and Google.

-

bigdog44

There is already a consortium that's developing invisible NPU/VPU SoCs that are embedded in M-glass. The main developer behind this has worked some IP magic with Qualcomm and Intel as well as some bigwigs across the pond. You'd be amazed at the things they're doing with 500 MHz scaled products.

-

bit_user

Quote: "There is already a consortium that's developing invisible NPU/VPU SoCs that are embedded in M-glass."

Link, pls. If there's a consortium, there should be some sort of public announcement, at least.

What are the intended applications: windows, eyeglasses, automotive, etc.?

IMO, it sounds awfully expensive, and I'm not clear on the point of embedding it in glass. I also wonder how vulnerable modern ICs are to sunlight. Heat dissipation would also be a problem. And then there's power.
