Does Undervolting Improve Radeon RX Vega 64's Efficiency?

Installing Our Water Cooler

There's a lot being written about overclocking and undervolting AMD’s Radeon RX Vega 64. Today, we're taking the card's thermals out of the equation to dig deeper into the relationship between clock rate and voltage.

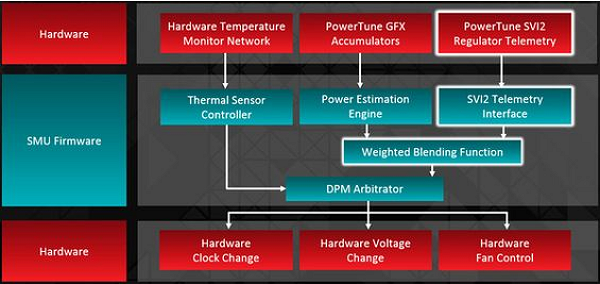

Telemetry at Its Finest

Before we can start, we have to explore how AMD's PowerTune technology operates. It evaluates the GPU’s most important performance characteristics in real time while querying the thermal sensors and factoring in the voltage regulator’s telemetry data. All of this information is passed to the pre-programmed Digital Power Management (DPM) arbitrator.

This arbitrator knows the GPU’s power, thermal, and current limits set by the BIOS and the driver, as well as any changes made to the default driver settings. Within these boundaries, the arbitrator controls all voltages, frequencies, and fan speeds in an effort to maximize the graphics card’s performance. If even one of the limits is exceeded, the arbitrator can throttle voltages, clock rates, or both.
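To make the arbitration idea concrete, here is a minimal toy sketch in Python. It is not AMD's implementation: the `Limits` and `Telemetry` types, the `arbitrate` function, the limit values, and the assumption of eight DPM states (0 through 7) are all hypothetical. The real arbitrator also manages voltages and fan speeds, which this sketch leaves out.

```python
from dataclasses import dataclass


@dataclass
class Limits:
    power_w: float    # power limit (hypothetical value set by BIOS/driver)
    temp_c: float     # thermal limit
    current_a: float  # current limit


@dataclass
class Telemetry:
    power_w: float
    temp_c: float
    current_a: float


def arbitrate(state: int, telemetry: Telemetry, limits: Limits, max_state: int = 7) -> int:
    """Return the next DPM state index (toy model).

    If even one limit is exceeded, step down one state; otherwise step up
    toward the highest state. max_state=7 is an assumption for illustration.
    """
    exceeded = (
        telemetry.power_w > limits.power_w
        or telemetry.temp_c > limits.temp_c
        or telemetry.current_a > limits.current_a
    )
    if exceeded:
        return max(state - 1, 0)
    return min(state + 1, max_state)
```

Called once per telemetry sample, a loop like this settles just under whichever limit is hit first. That is why removing the thermal limit from the picture, as we do here, changes which boundary the card runs into.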

Voltages: AMD PowerTune vs. Nvidia GPU Boost

AMD’s Radeon RX Vega 64 also uses Adaptive Voltage and Frequency Scaling (AVFS), which we're already familiar with from the company's latest APUs and Polaris GPUs. Because wafer quality varies, this feature is supposed to ensure that every individual die performs at its peak potential, so each GPU has its own individual load line in the voltage settings. In that respect, it's similar to Nvidia’s GPU Boost technology. However, some things have changed since Polaris’ implementation.

AMD’s WattMan offers almost complete freedom to manually set the voltage for the two highest DPM states. This is different from GPU Boost, which only allows a kind of offset for manual voltage changes; full voltage control can’t be forced via its curve editor. As we'll see later, the added freedom can be a blessing or a curse, because manually set voltages for the DPM states can counteract, or even completely cancel, AVFS.
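The difference between the two control styles can be sketched in a few lines of Python. Everything here is illustrative: the function names are hypothetical, the curves are invented millivolt values for two imaginary dies, and neither function reflects actual driver code.

```python
def apply_offset(curve_mv, offset_mv):
    """Offset-style control: shift every point of the per-die curve equally,
    so differences between individual dies are preserved."""
    return [v + offset_mv for v in curve_mv]


def apply_manual_top_states(curve_mv, manual_mv, n_states=2):
    """Manual-style control: overwrite the highest n_states with one fixed
    voltage, discarding whatever the per-die curve held for those states."""
    keep = len(curve_mv) - n_states
    return curve_mv[:keep] + [manual_mv] * n_states


# Invented per-die curves (mV), lowest state first.
die_a = [800, 900, 1000, 1100, 1200]
die_b = [800, 900, 1000, 1050, 1150]

# With an offset, the two dies still differ in their top states.
offset_a = apply_offset(die_a, -50)   # [750, 850, 950, 1050, 1150]
offset_b = apply_offset(die_b, -50)   # [750, 850, 950, 1000, 1100]

# With a manual setting, the top two states become identical on both dies.
manual_a = apply_manual_top_states(die_a, 1000)  # [800, 900, 1000, 1000, 1000]
manual_b = apply_manual_top_states(die_b, 1000)  # [800, 900, 1000, 1000, 1000]
```

In this toy model, the manual override erases the per-die tuning in exactly the states where it matters most, which is one way to picture how a fixed voltage can cancel AVFS.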

Our monitoring allowed us to directly measure how the card’s voltages behave using a manual setting with and without a power limit. The results are surprising; they're very different from what you see on a Polaris-based card.

We’d also like to do a little myth-busting. All of the clock rate gains we achieved via undervolting were due to temperature decreases on air-cooled cards. Eliminating temperature from the equation like we’re doing in this test turns everything on its head. Sensationalist headlines become urban legends in the process.


What We Tested

In order to make the results easier to understand and compare, we settled on five different settings. These are completely sufficient to demonstrate the respective extremes:

- Stock Settings "Balanced Mode"

- Undervolted: Voltage Set to 1.0V using Default Power Limit

- Overclocked: Increased Power Limit by +50%

- Overclocked: Increased Power Limit by +50%, Increased GPU Clock Frequency by 3%

- Overclocked: Increased Power Limit by +50%, Increased GPU Clock Frequency by 3%; Voltage Set to 1.0V
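For readers who want to script a comparable test matrix, the five settings above could be captured as data. This is only a sketch: the `Profile` class and its field names are hypothetical and do not correspond to any WattMan export format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Profile:
    name: str
    power_limit_pct: int = 0                  # change relative to the default power limit
    clock_offset_pct: int = 0                 # GPU clock frequency increase
    manual_voltage_v: Optional[float] = None  # None: voltage left on automatic


PROFILES = [
    Profile("Stock (Balanced Mode)"),
    Profile("Undervolted", manual_voltage_v=1.0),
    Profile("Overclocked, +50% power limit", power_limit_pct=50),
    Profile("Overclocked, +50% power limit, +3% clock",
            power_limit_pct=50, clock_offset_pct=3),
    Profile("Overclocked, +50% power limit, +3% clock, 1.0V",
            power_limit_pct=50, clock_offset_pct=3, manual_voltage_v=1.0),
]

# Profiles that pin the voltage manually (the two undervolted cases).
undervolted = [p.name for p in PROFILES if p.manual_voltage_v is not None]
```

Keeping the settings as immutable records makes it easy to loop over them and tag each measurement run with the profile that produced it.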

Undervolting the two adjustable DPM states to below 1.0V resulted in instability across many different scenarios. It was mostly possible to achieve 0.95V, but the clock rate fell disproportionately in response. Lowering the voltage to under 1.0V while using the maximum power limit resulted in a crash as soon as a 3D application was started.

Building A Big Cooling Solution

First things first: we need to build a thermal solution able to provide the same temperatures at 400W that it provides at stock settings. In the end, the only way to achieve this is by using a closed loop and a compressor cooler. This setup can guarantee a constant 20°C for the GPU’s cold plate.

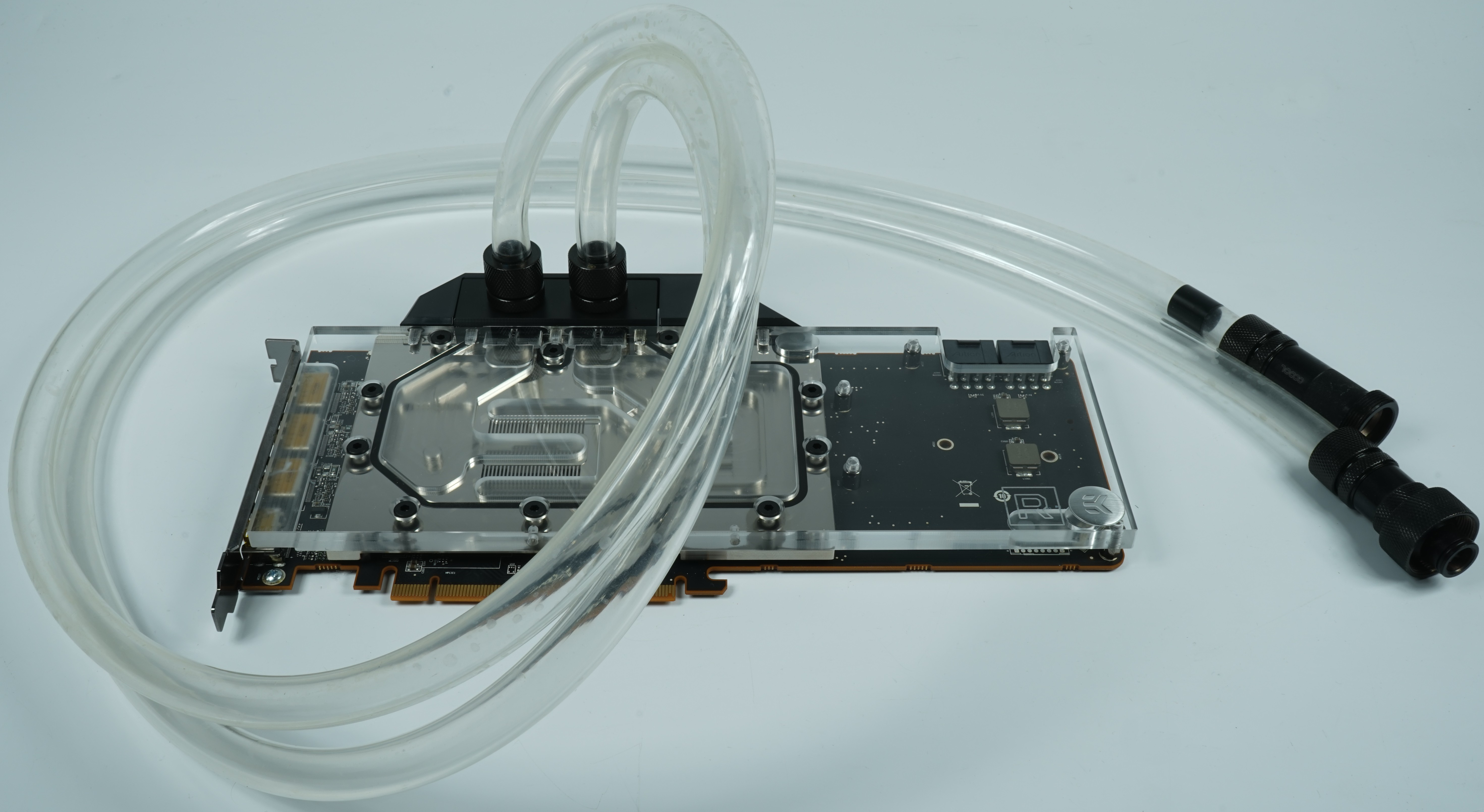

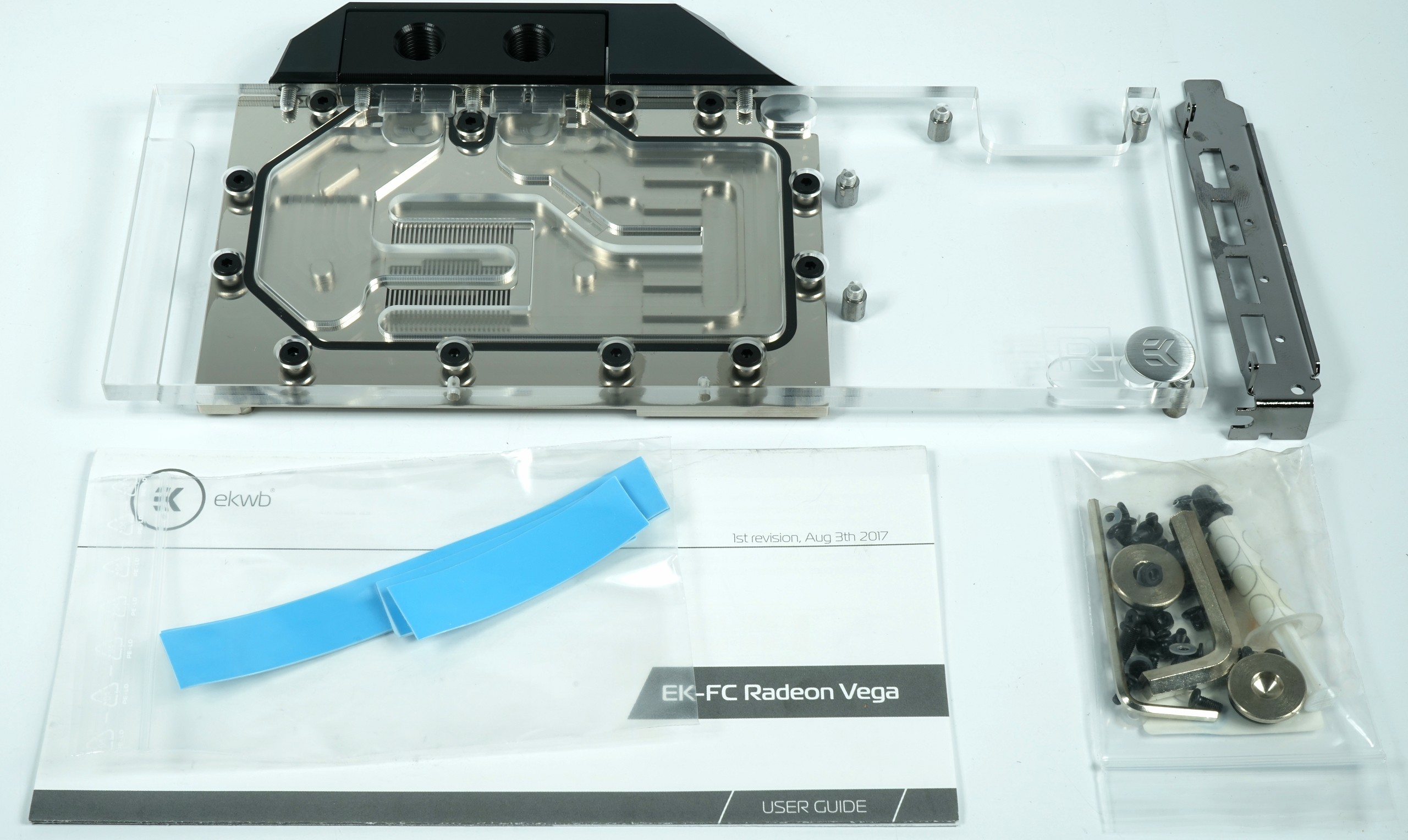

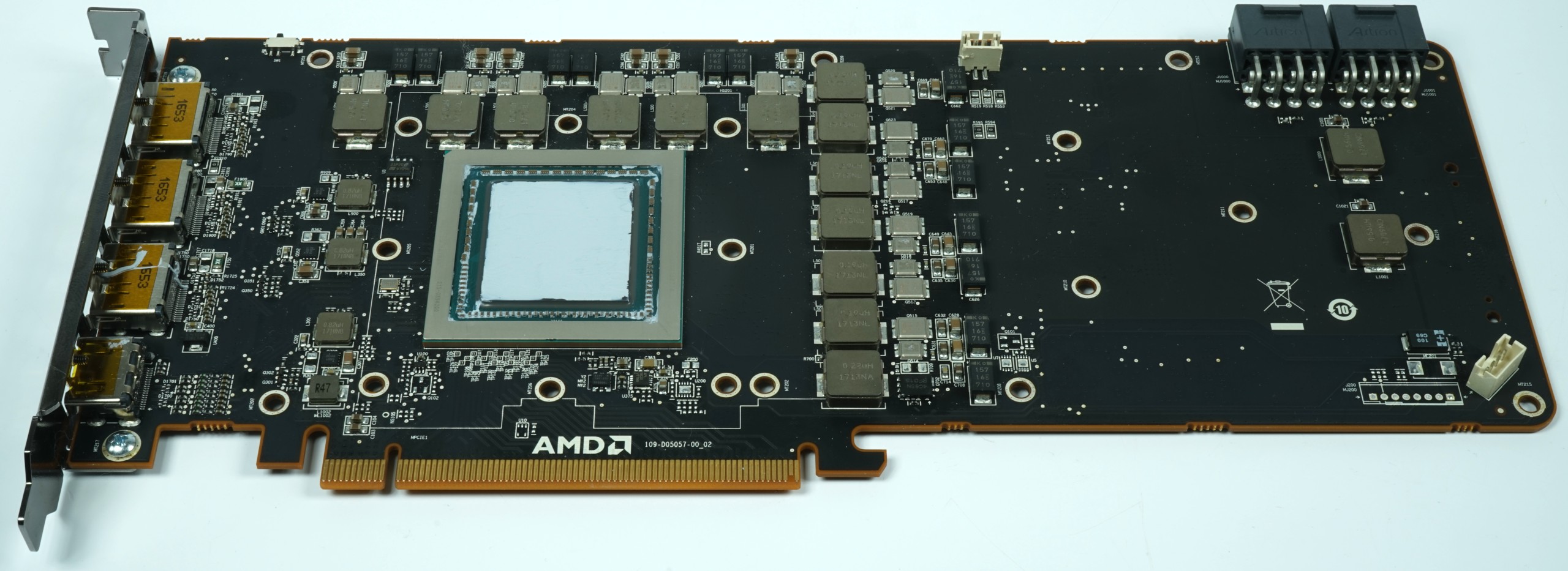

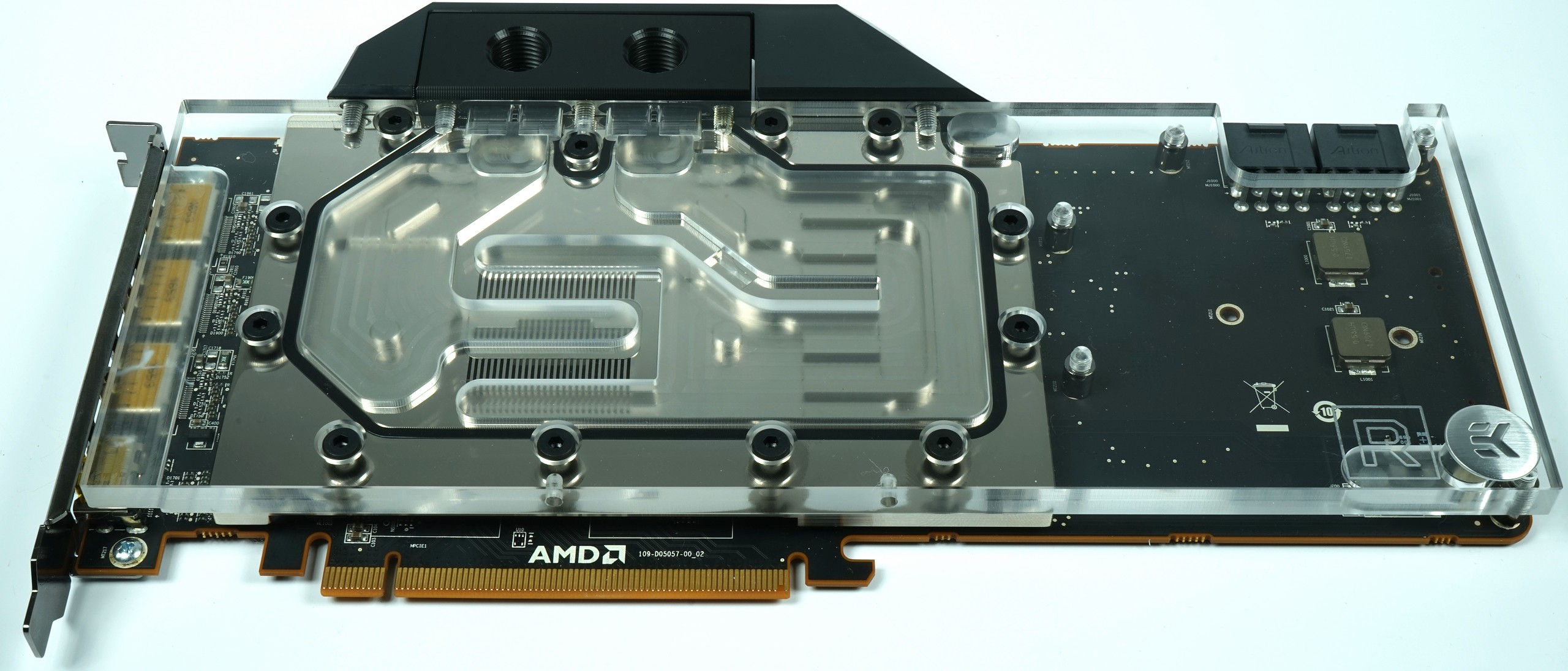

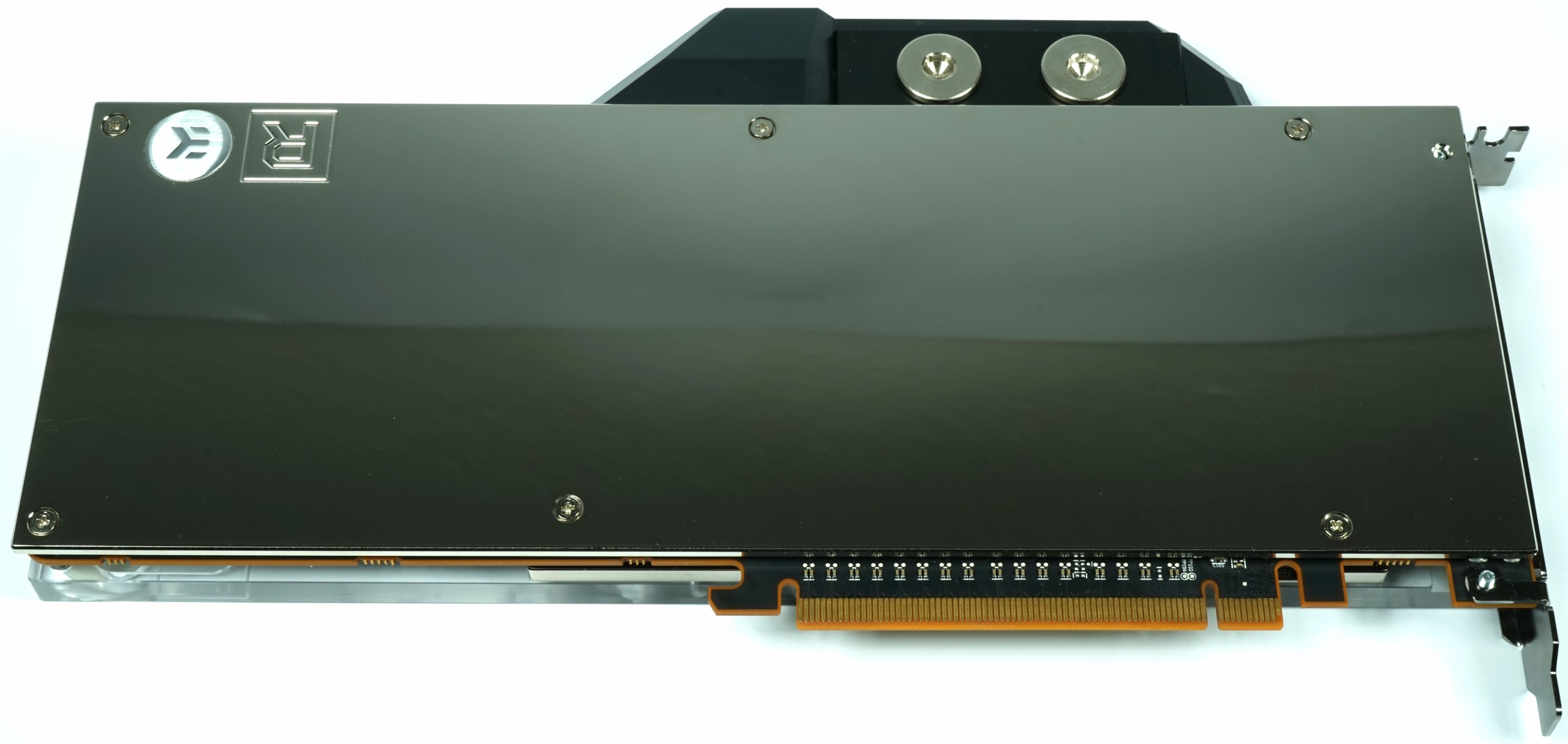

In addition to Alphacool's Eiszeit 2000 Chiller, we're using the EK-FC Radeon Vega by EK Water Blocks. It’s made of nickel-plated copper, and makes contact with the GPU, HBM2, the voltage regulation circuitry, and the chokes. All told, the setup does exactly what we need it to.

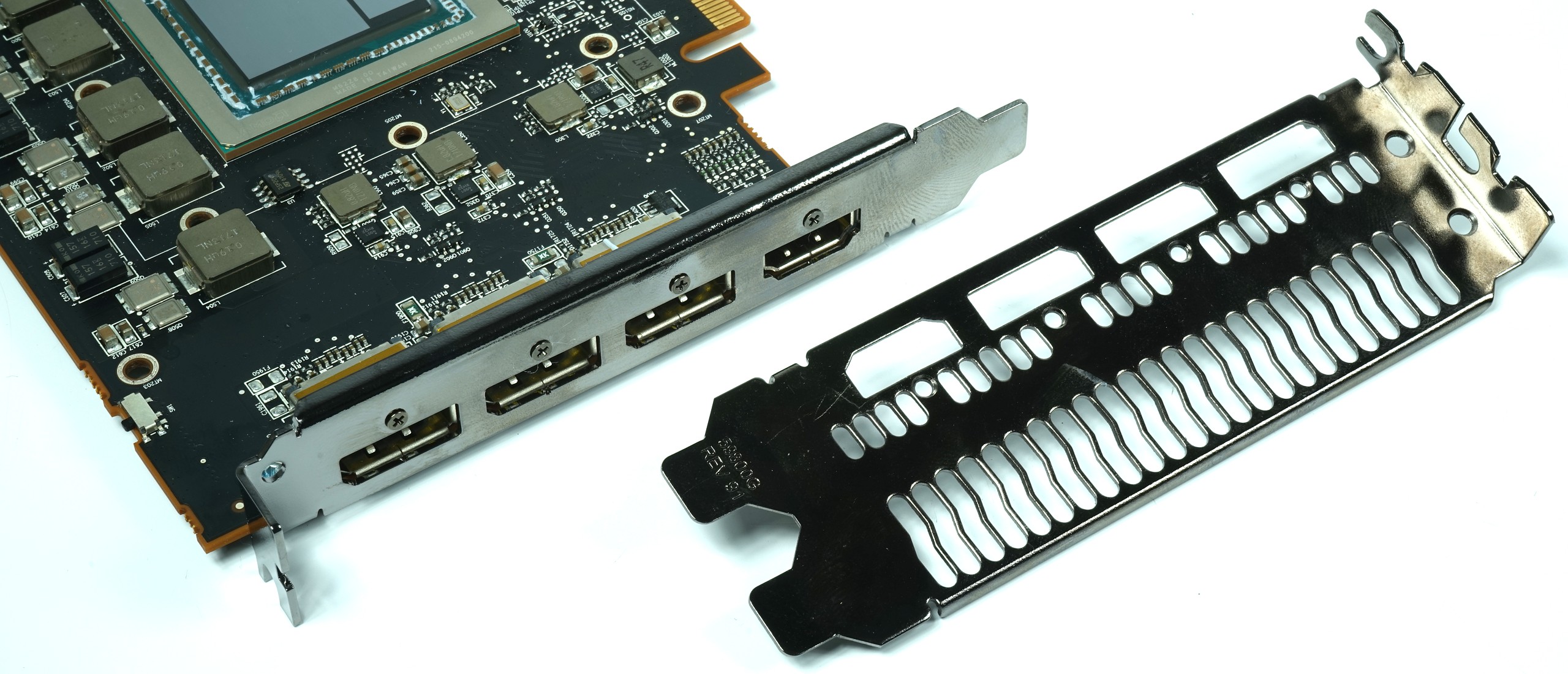

In order to avoid the somewhat ridiculous aesthetic of a single-slot water cooler on a dual-slot graphics card, we switched the original bracket out for a bundled single-slot one. Countersunk screws sit on top of the slot cover (rather than in it) due to its holes, but this is a relatively small blemish.

After cleaning the old thermal paste off of AMD's interposer, we apply a thin layer of fresh paste to the surface with a small spatula. A little leftover residue on the die might not look great, but too much pressure during the clean-up process could permanently damage the package, so you have to be careful.

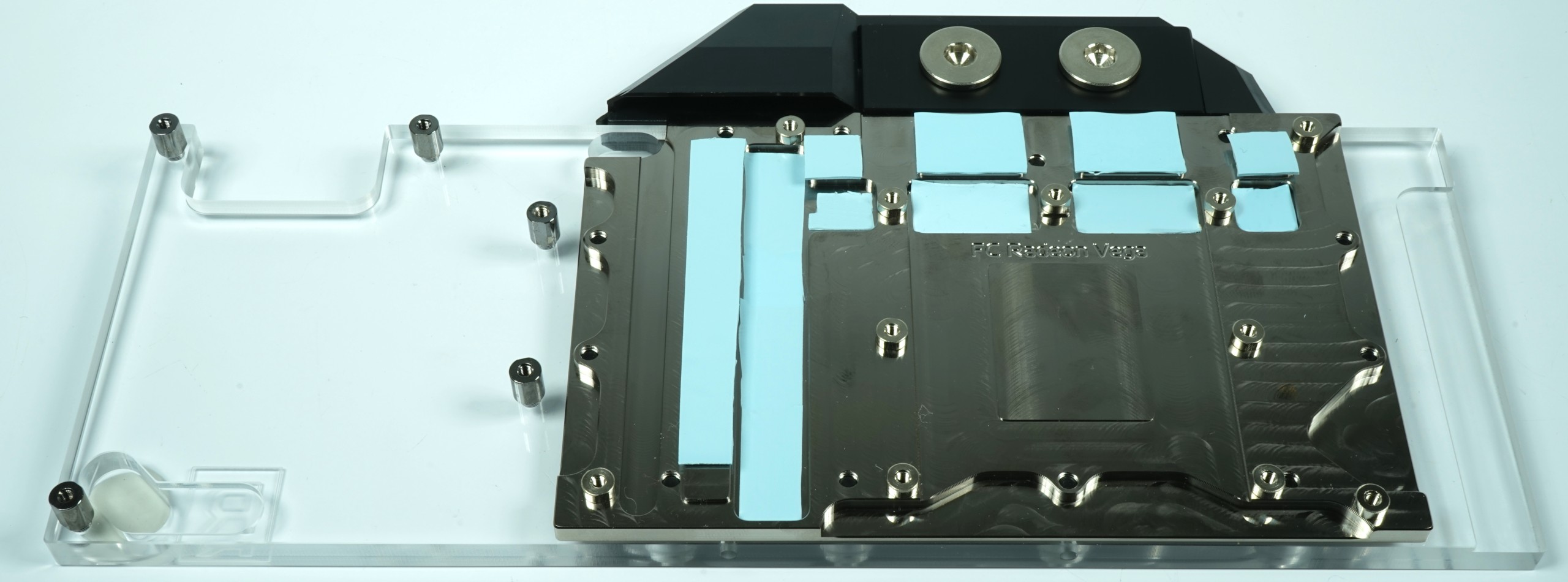

Next, the thermal pads are applied to their target areas on the water block. EK's instructions would have us put them on the graphics card instead. The reason why we do this differently, however, is that we prefer to put the board on the cooler (which is lying on the table), rather than the other way around. With the thermal pads on the water block, they don’t fall off in the process.

Once the graphics card is screwed into place, it’s ready for action. The installation process is quick and easy. Just be mindful of the interposer.

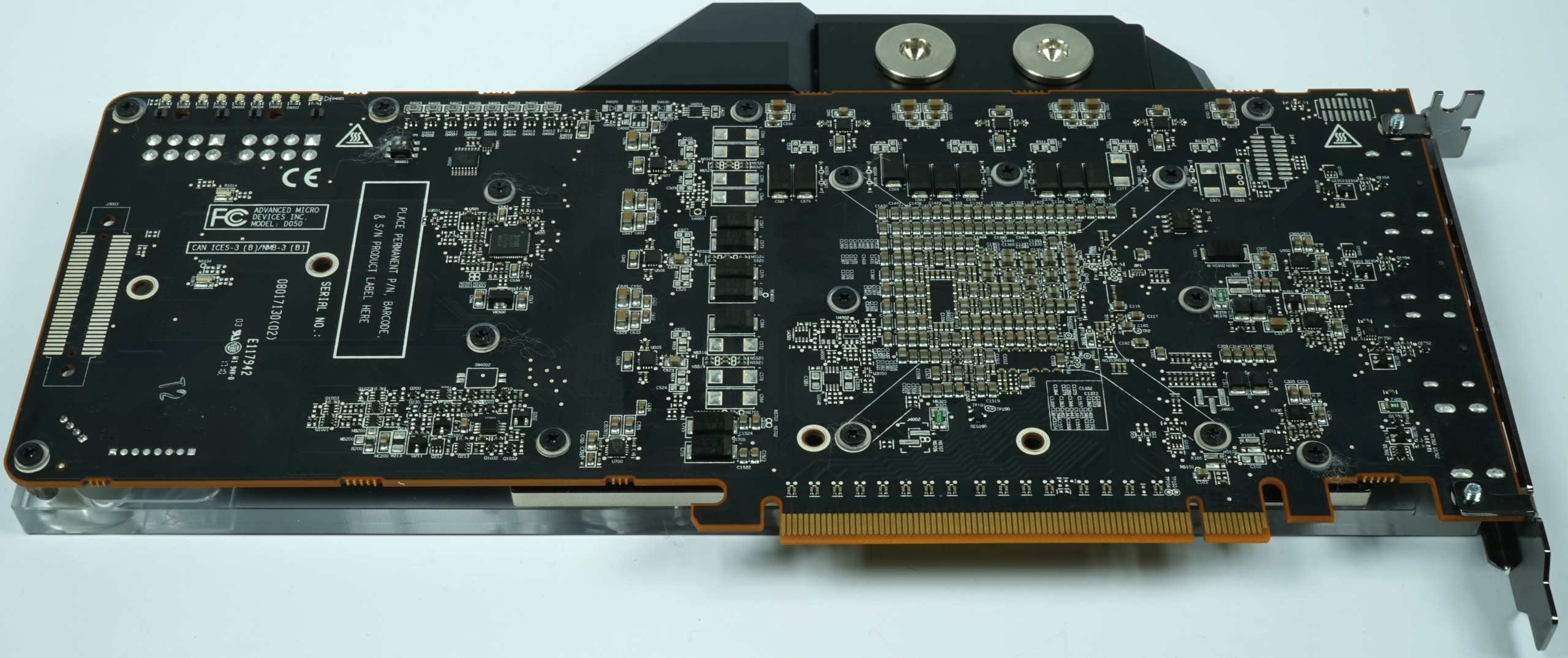

The exposed back side shows the many screws and their nylon washers used to secure the water block. Around the package alone, seven screws hold everything tightly together.

Enthusiasts looking for a bit of aesthetic flair and slightly better thermal performance (cool those phase doublers off!) can attach the fitted backplate.

We removed the backplate for our measurements because we just couldn’t bring ourselves to drill holes through it.

Test System and Methodology

We introduced our new test system and methodology in How We Test Graphics Cards. If you'd like more detail about our general approach, check that piece out. Note that we've upgraded our CPU and cooling solution since then in order to avoid any potential bottlenecks when benchmarking fast graphics cards.

The hardware used in our lab includes:

| Test Equipment and Environment | |
|---|---|
| System | Intel Core i7-6900K @ 4.3 GHz; MSI X99S Xpower Gaming Titanium; Corsair Vengeance DDR4-3200; 1x 1TB Toshiba OCZ RD400 (M.2 SSD, System); 2x 960GB Toshiba OCZ TR150 (Storage, Images); be quiet Dark Power Pro 11, 850W PSU |
| Cooling | EK Water Blocks EK-FC Radeon Vega; Alphacool Eiszeit 2000 Chiller; Thermal Grizzly Kryonaut (Used when switching coolers) |
| Ambient Temperature | 22°C (Air Conditioner) |
| PC Case | Lian Li PC-T70 with Extension Kit and Mods |
| Monitor | Eizo EV3237-BK |
| Power Consumption Measurement | Contact-free DC Measurement at PCIe Slot (Using a Riser Card); Contact-free DC Measurement at External Auxiliary Power Supply Cable; Direct Voltage Measurement at Power Supply; 2 x Rohde & Schwarz HMO 3054, 500MHz Digital Multi-Channel Oscilloscope with Storage Function; 4 x Rohde & Schwarz HZO50 Current Probe (1mA - 30A, 100kHz, DC); 4 x Rohde & Schwarz HZ355 (10:1 Probes, 500MHz); 1 x Rohde & Schwarz HMC 8012 Digital Multimeter with Storage Function |
| Thermal Measurement | 1 x Optris PI640 80 Hz Infrared Camera + PI Connect; Real-Time Infrared Monitoring and Recording |
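The power-measurement setup listed in the table pairs current readings with voltage readings on each supply path. As a rough sketch of how such samples turn into a single board-power figure, the Python below sums instantaneous power across rails and averages over time. The function name, the rail names, and the sample values are assumptions for illustration; this is not our actual analysis software.

```python
def average_power_w(rails):
    """Mean total power across all rails.

    rails maps a rail name to a list of (volts, amps) samples that were
    captured at the same instants on every rail.
    """
    n = min(len(samples) for samples in rails.values())
    if n == 0:
        raise ValueError("each rail needs at least one sample")
    totals = [
        sum(samples[i][0] * samples[i][1] for samples in rails.values())
        for i in range(n)
    ]
    return sum(totals) / n


# Invented samples: two instants on the slot and on the auxiliary cable.
example = {
    "pcie_slot": [(12.0, 2.0), (12.0, 3.0)],
    "aux_cable": [(12.0, 10.0), (12.0, 12.0)],
}
mean_w = average_power_w(example)  # (144 W + 180 W) / 2 = 162.0 W
```

Multiplying voltage and current sample by sample before averaging matters: averaging each quantity first and multiplying afterwards would misstate power whenever the load fluctuates.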


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.


TJ Hooker Good article, thanks for the analysis!

Was a similarly in-depth look at overclocking/undervolting performance with Polaris done, that I missed? Or is there just whatever is included in the various Polaris 10 review articles (e.g. RX 480 review)? If it's the latter, does anyone know which review would contain the most information regarding this?

Cryio I don't think this article went in-depth enough. Dunno where I've read, but I've seen Vega 64 consume just half or two-thirds as much power while only losing 15% performance. Which is tremendous IMO.

TJ Hooker Tom's found something similar to that in their review of the Vega 56, with a 28% reduction in power consumption only costing you an 11% performance hit: http://www.tomshardware.com/reviews/radeon-rx-vega-56,5202-22.html

But that's going into underclocking, whereas this article was very focused on undervolting and what effect that has on power consumption.

Although it would be very interesting to see an article that looks into tweaking both clockspeed and voltage to see what sort of efficiency can be achieved.

Xorak Maybe it's too early in the morning for me, but I'm a little confused about what we actually found here.. Looks like the highest overclock was achieved using default voltage and the maximum power limit, with a 3% frequency increase.. Under the condition of using a somewhat absurd cooling solution..

So are we to infer that with an air cooled card, undervolting in and of itself is not desirable, but is likely to help more than it hurts because we run out of thermal headroom before the lower voltage becomes a limiting factor?

Wisecracker Thanks for all the bench work and reporting.

Tweaking on AVFS appears to be the *Brave New Frontier* (or, "We're all beta testers for AMD" :lol: ). The fancy cooling was impressive but as noted under-clocking/volting brings out unseen efficiencies in Vega/Polaris. That's certainly not at the top of the list of many Tech Heads but gives AMD better insight going forward as they bake new wafers, fix bugs and tweak the arch.

I suspect the nVidia bunch does the same thing, but the woods are filling-up with volt- and BIOS Mods for Polaris and Vega. There's a thread at Overclock pushing 500 pages! Manipulating volt tables is becoming an art ...

More than anything, what I believe this shows is that AMD (and GloFo) boxed themselves into 'One Size Fits All' with gaming cards because of varying consistencies in the chips, and just like desktop Zen they were the last picks of the litter behind the big money makers --- enterprise, HPC/deep learning, Apple, etc.

Ethereum Currency The RX Vega 56 has outweighed many other graphics cards as it is fast and has superb graphics: http://rxvega56.zohosites.eu

photonboy Do I need a degree on Tweakology to get the most out of an AMD graphics card?

I still can't comprehend how there are so many engineering issues going on with the design of VEGA, as well as the continued challenges in how to optimize an overclock.

You'd think in 2017 you could just tell the computer to figure out the optimal overclock. We get closer with NVidia; I can use EVGA Precision OC to optimize the voltage/frequency profile though even that isn't perfect as it needed to be restarted many times to finish and when done it wasn't stable in some games.

You'd think the graphics card could continually send itself known data to validate the VRAM and GPU at all times (using a very small percentage of the cycles).... oh, errors? Well, then drop to the next lowest frequency/voltage point. Is that so hard?

ClusT3R Do you have like a manual with the values and the software? I want to try to remove more power and add as much clockspeed as I can. I have a Vega 56.

GoldenBunip The biggest gains on Vega is keeping the HBM cool. It will click to 1100 then (but no more) resulting in huge performance gains. Just really hope we crack the stupid bios locks soon.

zodiacfml I don't understand what is going on here.

Why do we have to put an overkill cooling system for the question "Does Undervolting Improve Radeon RX Vega 64's Efficiency?