Intel’s 24-Core, 14-Drive Modular Server Reviewed

First Impressions

Setting up the MFSYS25 wasn’t that difficult. As soon as we got it out of the crate, we plugged it in and off it went. The system, while not fully loaded, was still pretty heavy. It took two of us to move it a short distance to an empty space in the lab. Once all the cables were plugged in, we powered up the chassis and were ready to start evaluating the system.

As soon as you plug the power cables into one or more of the MFSYS25’s power supplies, you might be overwhelmed by the initial jet-engine-like roar of spinning fans coming from the back of the server. We were fearful that the system would run at this noise level all the time. But after several minutes, the machine eventually calmed down, producing modest noise. For a server this big, the low level of noise generated is pretty impressive.

It seems that Intel had this in mind when it put this system together. The MFSYS25 enclosure was custom built by Silentium. Using Silentium’s Active Silencer design, the noise level coming from the MFSYS25’s chassis is reduced by about 10 decibels. While Silentium's Website states that this is a significant reduction in noise level, with so many components built into such a small package, it would be difficult to compare the MFSYS25 to other machines.
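To put that 10-decibel figure in perspective, here is a minimal sketch of the standard decibel arithmetic. It assumes the roughly 10 dB reduction quoted above is a plain sound level difference; the function names are hypothetical and for illustration only, and nothing here comes from Intel's or Silentium's own measurements.

```python
def power_ratio(db_reduction: float) -> float:
    """Factor by which sound power drops for a given reduction in decibels."""
    return 10 ** (db_reduction / 10)


def pressure_ratio(db_reduction: float) -> float:
    """Factor by which sound pressure drops for the same reduction."""
    return 10 ** (db_reduction / 20)


# Assumption: the "about 10 decibels" figure from the text, taken at face value.
reduction_db = 10.0

print(power_ratio(reduction_db))               # 10.0
print(round(pressure_ratio(reduction_db), 2))  # 3.16
```

In other words, a 10 dB cut corresponds to one tenth of the sound power, which is commonly described as sounding about half as loud.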

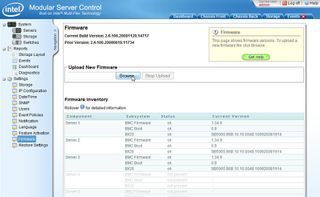

Intel recommended that I update the MFSYS25 with the latest firmware package. The process didn’t take too long. First, I downloaded the most recent firmware package from Intel's Website. Then, using the Modular Server Control’s firmware update user interface, I found the downloaded file on my desktop and started the upload. It took about 16 minutes for the entire process to finish, including reboots of each compute module. The wait was not too bad if you consider that the firmware update ran upgrades on all the compute, power, and storage modules.

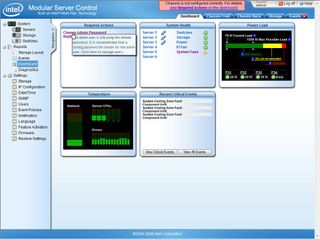

At first, I used a laptop connected directly to the management module to work with the Modular Server Control application. As time went on, I put the machine on an isolated network and gave it a static IP address so I could connect to it from different machines. I then connected all three compute modules to the network as well. Next, I changed the admin password to something a little more secure.

Security may not have been the only reason why I got rid of the default password. I kind of had a “2001: A Space Odyssey” moment, as the Dashboard tab’s Required Actions box reminded me every time I logged in that I was still using the default password. I think I changed the password just to appease the system. Either way, it’s good practice to secure your password, and I can appreciate how Intel helps the admin enforce a password change, at the very least.

I have also been impressed by the amount of accessibility and reliability built into the MFSYS25’s hot-swappable devices. Identifying what’s hot swappable is easy, as removable devices are physically color-coded with green tabs. This includes all the disk drives and all the modules in the chassis. For logic-based devices like the management module and the Ethernet switch module, configurations are backed up to flash media sitting on the chassis midplane and are recoverable through the Modular Server Control.

Structure-wise, I was pretty happy with the design of the MFSYS25. One initial concern I did have was about the latches used to lock the MFS5000SI compute modules to the chassis’ main bezel. In order to remove a compute module from the main chassis, you have to press a green release button on the front of the module. This in turn disengages the release handles and unlatches the MFS5000SI from the chassis.

Having seen various blade servers on the market, I know that parts that rely on repetitive mechanical actions tend to wear down. The compute module release handles are held by a small bump of metal that may, over time, bend out of shape or loosen. However, Intel did a nice job in designing the hold-and-release system by including additional slots in the compute module’s bezel that guide and keep the release arms in place. This provides a nice and secure fit once the compute module is locked inside the MFSYS25’s chassis.

However, I did have a problem with one of the main cooling modules. After the chassis had been shut down for a couple of days, I powered it back up and saw the single amber LED on the front panel of the main

-

kevikom: This is not a new concept. HP & IBM already have Blade servers. HP has one that is 6U and is modular. You can put up to 64 cores in it. Maybe Tom's could compare all of the blade chassis.

sepuko: Are the blades in IBM's and HP's solutions having to carry hard drives to operate? Or are you talking of certain model or what are you talking about anyway I'm lost in your general comparison. "They are not new cause those guys have had something similar/the concept is old."

Why isn't the poor network performance addressed as a con? No GigE interface should be producing results at FastE levels, ever.


nukemaster: So, When you gonna start folding on it :p

Did you contact Intel about that network thing. There network cards are normally top end. That has to be a bug.

You should have tried to render 3d images on it. It should be able to flex some muscles there.

MonsterCookie: Now frankly, this is NOT a computational server, and i would bet 30% of the price of this thing, that the product will be way overpriced and one could buid the same thing from normal 1U servers, like Supermicro 1U Twin.

The nodes themselves are fine, because the CPU-s are fast. The problem is the build in Gigabit LAN, which is jut too slow (neither the troughput nor the latency of the GLan was not ment for these pourposes).

In a real cumputational server the CPU-s should be directly interconnected with something like Hyper-Transport, or the separate nodes should communicate trough build-in Infiniband cards. The MINIMUM nowadays for a computational cluster would be 10G LAN buid in, and some software tool which can reduce the TCP/IP overhead and decrease the latency.

less its a typo the bench marked older AMD opterons. the AMD opteron 200s are based off the 939 socket(i think) which is ddr1 ecc. so no way would it stack up to the intel.


The server could be used as a Oracle RAC cluster. But as noted you really want better interconnects than 1gb Ethernet. And I suspect from the setup it makes a fare VM engine.
