Intel Shares Potential Fix for High Idle Power Consumption on Arc GPUs

Seeking parity with the competition

At the launch of Intel's Arc A770 and Arc A750 graphics cards, reviewers noticed a strange pattern: Intel's cards were drawing significantly more power at idle than comparable AMD and Nvidia graphics cards. Intel revealed in a blog post today that it managed to kill two birds with one stone: it acknowledged the higher-than-expected idle power consumption affecting its Arc series of graphics cards while simultaneously offering a fix. One possible issue for some users, however, is that applying the fix requires fiddling with motherboard BIOS settings, something not all users are comfortable doing.

Intel's guide requires that users enter their BIOS settings to change the PCI Express-related power options. Namely, users should change the Active State Power Management (ASPM) setting on their motherboards, a technology that allows PCIe devices to enter lower power states when they're not in heavy use. After a romp through the BIOS, Intel directs users to also change some relevant settings within Windows' power options.

First, users should make sure that their OS can control PCIe power states by changing their BIOS' "Native ASPM" setting to "Enabled". Then, Intel says users should enable "PCI Express root port ASPM" and select "L1 Substates" from the subsequent options. The terms used to describe these settings may vary according to manufacturer, so make sure to read your motherboard's manual if you find yourself at a loss.

And that's assuming your motherboard even exposes the related settings. After poring through the BIOS settings on two different Intel platforms (one an older i9-9900K with MSI MEG Z390 Ace motherboard and the other a newer Z690 system with an MSI Z690-A DDR4 WiFi paired with Intel's 12900K CPU), no such options were to be found. The Z390 board was already using the latest BIOS, but we checked and found an updated BIOS for the Z690 board. Flashing the BIOS exposed the necessary options, thankfully, though there was no fix for the older board.

Your mileage may vary depending on the motherboard, how old it is, and its manufacturer. Some boards may even have these settings active by default.

After you've fiddled with your BIOS, it's time to boot up Windows, and you'll still likely see abnormally high idle power consumption on your Arc card. You now need to navigate to Windows' Power settings: simply search for "Power" on Windows' search bar, and then select the option "Edit Power Plan."

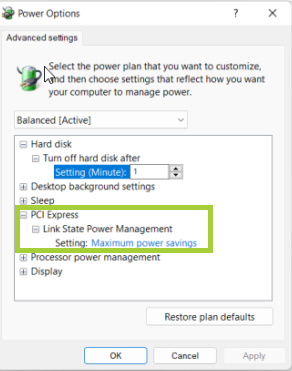

While editing your preferred Power Plan, expand the "Change advanced power settings" option; look for the "PCI Express" setting, and click its "+" button to the left to expand additional options, and set the "Link State Power Management" setting to "Maximum power savings." Simple enough in theory; how's it work in practice?

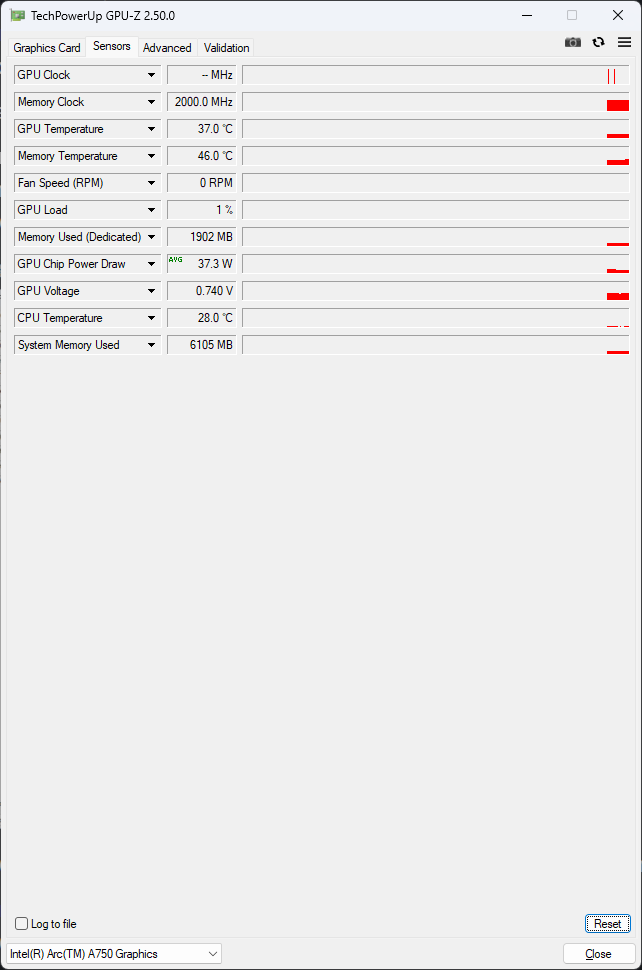

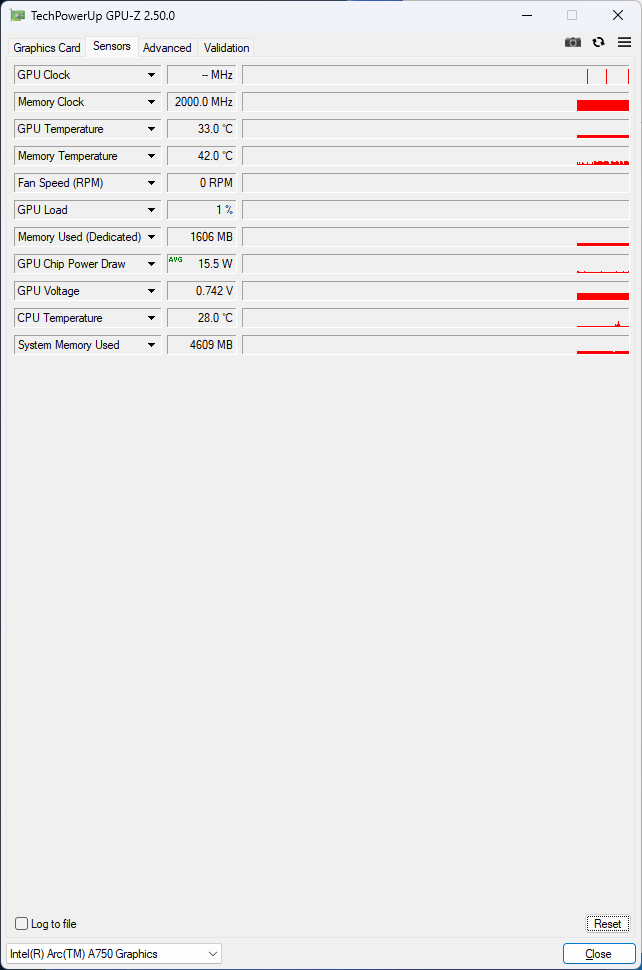
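For readers who would rather script the Windows half of the fix than click through the dialog, the sketch below builds the equivalent `powercfg` commands. It is an illustration, not Intel's documented method: it assumes the `SCHEME_CURRENT`, `SUB_PCIEXPRESS`, and `ASPM` aliases reported by `powercfg /aliases` are present on your system, and that index 2 corresponds to "Maximum power savings" (0 being "Off" and 1 "Moderate power savings"). The function names are hypothetical. By default it only prints the commands, so nothing changes until you opt in.

```python
import subprocess
import sys

# Assumed index values for the "Link State Power Management" setting.
LINK_STATE_LEVELS = {"off": 0, "moderate": 1, "maximum": 2}


def build_powercfg_commands(level="maximum"):
    """Return the powercfg invocations that set PCIe Link State Power Management."""
    index = str(LINK_STATE_LEVELS[level])
    return [
        # Value used while on AC (mains) power.
        ["powercfg", "/setacvalueindex", "SCHEME_CURRENT", "SUB_PCIEXPRESS", "ASPM", index],
        # Value used while on battery (DC) power.
        ["powercfg", "/setdcvalueindex", "SCHEME_CURRENT", "SUB_PCIEXPRESS", "ASPM", index],
        # Re-activate the current plan so the new values take effect.
        ["powercfg", "/setactive", "SCHEME_CURRENT"],
    ]


def apply_link_state_power_management(level="maximum", dry_run=True):
    """Print the commands (dry run) or execute them on Windows."""
    commands = build_powercfg_commands(level)
    for command in commands:
        if dry_run or sys.platform != "win32":
            print(" ".join(command))
        else:
            subprocess.run(command, check=True)
    return commands


if __name__ == "__main__":
    apply_link_state_power_management(dry_run=True)
```

Running with `dry_run=False` from an elevated prompt on Windows would apply the setting to the active power plan. The BIOS options described earlier still have to be changed by hand.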

After testing Intel's Arc A750 and Arc A770 power consumption at idle with and without Intel's fix applied, we can say there was a significant difference in power consumption... on one of the cards. For the Arc A750, Intel's fix reduced idle power consumption by over 50%: its average idle power draw was reduced to 15.5 W, compared to 37.3 W with standard BIOS and Windows power settings.

As for the A770, it strangely showcased the same average power consumption before and after the fix. We rebooted (multiple times), swapped cards, sacrificed a virgin tube of thermal paste... all for naught. We're still poking at it, but it's something to keep in mind. Perhaps a future driver update will help out.

| Graphics Card | Idle Power Before BIOS Changes (Avg) | Idle Power After BIOS Changes (Avg) |
| --- | --- | --- |
| Arc A770 | 38.9 W | 38.9 W |
| Arc A750 | 37.3 W | 15.5 W |

Our own review of Intel's Arc A770 and Arc A750 graphics cards showed that Intel delivered capable products for the mainstream gaming crowd. The company achieved that with lower power efficiency than comparable AMD and Nvidia designs, however. Averaged across an 8-game benchmark suite, the Arc A770 delivered 11% less performance than Nvidia's RTX 3060 Ti while consuming as much power as the RTX 3070. That may not look like much, and it isn't in the grand scheme of things. But at a time when electricity prices keep climbing and consumers grapple with a higher cost of living, higher power consumption does translate to higher power bills, so cutting power inefficiencies becomes more important than ever. An average difference of 22 W between applying Intel's fix and not may not look like much either, but depending on your local electricity pricing and whether or not you keep your PC on at all times, you could be saving ~$30 per year.
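To see where a yearly figure like that comes from, here is a small back-of-the-envelope calculation using the A750's measured idle numbers from the table. The electricity price of $0.15 per kWh is purely an illustrative assumption, as are the function names; plug in your own tariff and daily idle hours.

```python
def percent_reduction(before_w, after_w):
    """Relative drop in power draw, as a percentage of the original draw."""
    return (before_w - after_w) / before_w * 100


def annual_savings(before_w, after_w, price_per_kwh, hours_per_day=24.0):
    """Yearly cost saved, in the currency of price_per_kwh.

    Assumes the card sits idle for hours_per_day hours, every day of the year.
    """
    saved_watts = before_w - after_w
    saved_kwh = saved_watts * hours_per_day * 365 / 1000
    return saved_kwh * price_per_kwh


if __name__ == "__main__":
    # Arc A750 idle draw: 37.3 W before the fix, 15.5 W after.
    # ASSUMPTION: $0.15 per kWh, PC left on (and idle) around the clock.
    print(f"Reduction: {percent_reduction(37.3, 15.5):.1f}%")
    print(f"Savings:   ${annual_savings(37.3, 15.5, 0.15):.2f} per year")
```

Under those assumptions the A750 saves roughly 191 kWh, or a little under $29 per year. A PC that idles only eight hours a day would save a third of that.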

Interestingly, Intel says that its Arc graphics cards were designed to work with the latest technologies available, indicating that users whose motherboards don't have ASPM enabled are behind the technological curve for its graphics cards. It's an interesting statement; ASPM was introduced as part of the PCI Express 2.0 specification, so we're not exactly on the cutting edge of power-saving features.


Intel's statement also rings somewhat hollow when we consider that both AMD and Nvidia manage to achieve lower idle power consumption for graphics cards that directly compete with Intel's Arc 7-series, without any need for BIOS intervention on the user's part. In fact, Nvidia's latest powerhouse, the RTX 4090, can consume just 4.5 W on average when at idle.

At the same time, Intel says that it will include fixes for this high idle power consumption in later architectures. The nature of the fix and Intel's own statements indicate that this is something the company can't fix solely through its drivers. Perhaps these are all elements of Arc Alchemist being the first discrete GPU architecture from Intel. We'll see what happens with subsequent iterations.

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.


PlaneInTheSky

The reason I stick with Nvidia is because you're basically a beta tester by buying Intel or AMD GPU that simply lack the features Nvidia software has.

Yesterday I was talking to someone who had screen tearing in their game, and I said I just set the game at the desired framerate through Nvidia's control panel. He uses an AMD GPU and his reaction was one of disbelief, the fact I could just lock the framerate per application, even my browser's FPS.

He is now considering an Nvidia GPU.

To compete with Nvidia, it will require a lot better software and drivers than the garbage AMD and Intel are putting out.

Neither AMD or Intel even has native DX9 support, they're not even beta products, they're just missing core features. It's an afterthought for AMD and Intel. With Nvidia, GPU is their core business, and it shows.

People said Intel selling dedicated GPU would be good for the consumer. No it's not, it has been nothing but issues, Intel is releasing half-assed drivers just like AMD.

I consider the only real competition to Nvidia being ARM with PowerVR, Qualcomm, general Mali (ST micro / Samsung etc).

-Fran-

I wish I could have this problem and whine about it online, but I can't find the darn ARC cards anywhere in the UK xD!

EBuyer, which seems to be the only retailer with them, still has them as "coming soon" and there's no other location/place they're being sold here. And they're not even cheap at £400.

What the hell, Intel? Was MLiD right on the money?

Regards.

bit_user

PlaneInTheSky said: I consider the only real competition to Nvidia being ARM with PowerVR, Qualcomm, general Mali (ST micro / Samsung etc).

This last point tells me you're not serious. Everything I've heard about PowerVR's drivers suggests they're the worst in the industry.

I can't say much about Mali, but I think it's entirely unproven in Windows.

PlaneInTheSky

bit_user said: This last point tells me you're not serious. Everything I've heard about PowerVR's drivers suggests they're the worst in the industry.

fake news, Apple used PowerVR for their devices, the drivers are very stable

bit_user

PlaneInTheSky said: fake news, Apple used PowerVR for their devices, the drivers are very stable

You know that Apple dropped PowerVR from their devices, right?

My statements are based on what I've heard from app developers. So, unless you have a good source to back up your claims, I smell a troll.

ezst036

PlaneInTheSky said: fake news, Apple used PowerVR for their devices, the drivers are very stable

Nvidia PCIe GPUs are completely unsupported on Macintosh, right?

rluker5

My Asrock A380 goes from a bit under 17w to a bit over 14w with notably more fluctuations between 17w and 12w.

Not a ton of savings, but it is doing something. It does seem somewhat dependent on resolution. Maybe only some of the silicon, like the 3d part is getting the savings.

It is nice to see the drop on the A750. I have a reference one on the way and it is going into my living room itx where quiet matters.

And Newegg is usually out of them. The A380 seemed like a normal purchase before they went on backorder, but the others were only available for a bit at launch, then I saw A750s in stock when I checked yesterday. Back out of stock right now. Better than the other brands at launch, but I imagine the demand for these is probably less.

Thunder64

PlaneInTheSky said: The reason I stick with Nvidia is because you're basically a beta tester by buying Intel or AMD GPU that simply lack the features Nvidia software has.

Yesterday I was talking to someone who had screen tearing in their game, and I said I just set the game at the desired framerate through Nvidia's control panel. He uses an AMD GPU and his reaction was one of disbelief, the fact I could just lock the framerate per application, even my browser's FPS.

He is now considering an Nvidia GPU.

To compete with Nvidia, it will require a lot better software and drivers than the garbage AMD and Intel are putting out.

Neither AMD or Intel even has native DX9 support, they're not even beta products, they're just missing core features. It's an afterthought for AMD and Intel. With Nvidia, GPU is their core business, and it shows.

People said Intel selling dedicated GPU would be good for the consumer. No it's not, it has been nothing but issues, Intel is releasing half-assed drivers just like AMD.

I consider the only real competition to Nvidia being ARM with PowerVR, Qualcomm, general Mali (ST micro / Samsung etc).

You are spewing a bunch of nonsense. The AMD drivers suck mantra is quite tired.

cyrusfox

Thunder64 said: The AMD drivers suck mantra is quite tired.

Tired but still true, my 5700 XT still has graphical glitches from time to time (Windows/Lightroom, Handbrake workload). Nvidia is still king of drivers (as long as you stay on n-2 generation for cards, my gt1030 started to get buggy on newer driver launches...)