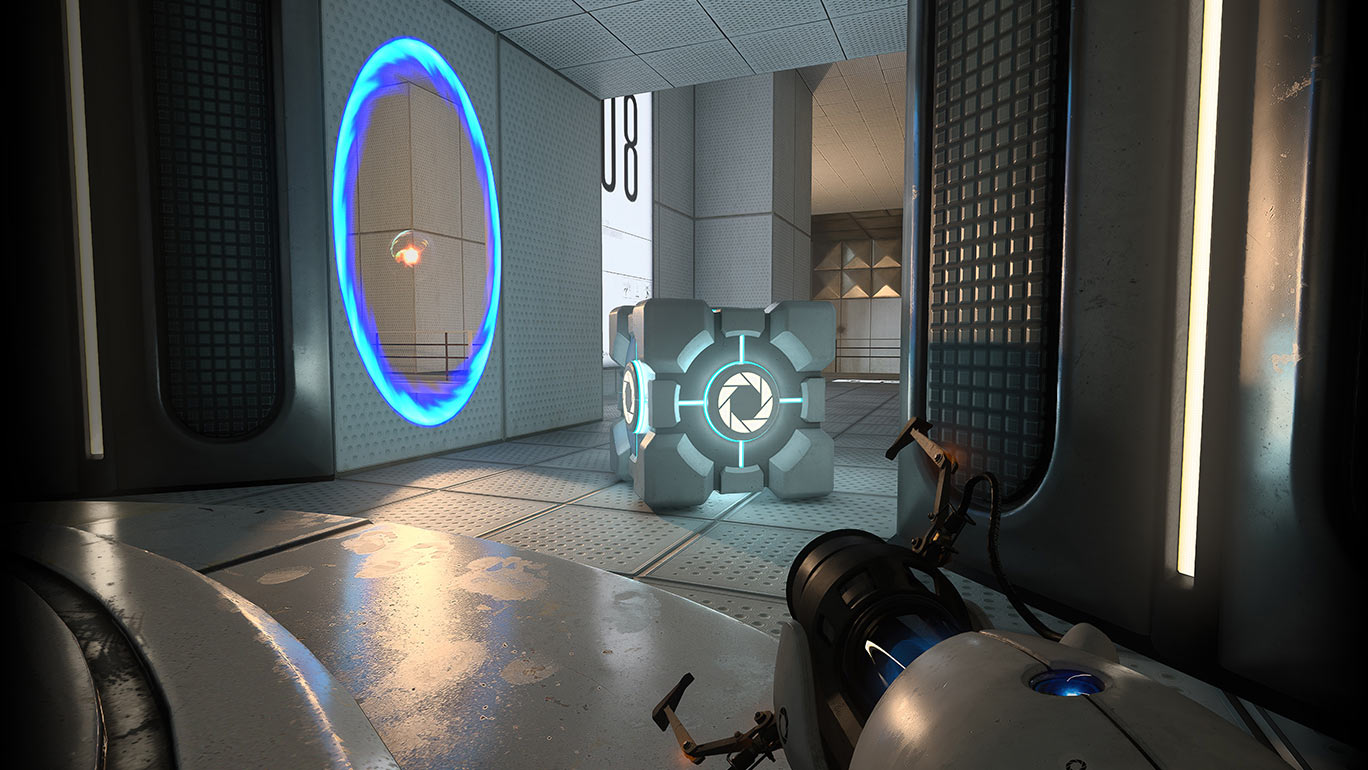

Intel Strives to Make Path Tracing Usable on Integrated GPUs

Is mainstream ray tracing around the corner?

A new article from Intel explains the latest advances in path-traced light simulation and neural graphics research that its engineers have been working on. One of the biggest improvements Intel is striving toward is far more efficient path-traced rendering, which could enable integrated GPUs to run path-tracing in real time.

The article links to three new papers describing path-tracing optimizations that the Intel Graphics Research Organization will present at SIGGRAPH, EGSR, and HPG. These optimizations are designed to improve GPU performance by reducing the number of calculations required to simulate light bounces.

The first paper presents a new method of computing reflections on a GGX microfacet surface. GGX is a microfacet model that describes how light reflects in different directions off a rough surface. With this new method, materials are "reduced" to a hemispherical mirror that is substantially simpler to simulate.
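For readers unfamiliar with GGX, the following is a minimal sketch of the standard isotropic GGX (Trowbridge-Reitz) normal distribution, which gives the density of microfacets facing a given direction. It is not the method from Intel's paper; the function names and the roughness parameter `alpha` are chosen here for illustration only.

```python
import math


def ggx_ndf(cos_theta_h: float, alpha: float) -> float:
    """Isotropic GGX normal distribution D(h).

    cos_theta_h: cosine of the angle between the surface normal and the
                 microfacet normal h.
    alpha:       roughness parameter (illustrative name), alpha > 0.
    """
    if cos_theta_h <= 0.0:
        return 0.0  # microfacets facing below the surface do not contribute
    a2 = alpha * alpha
    denom = cos_theta_h * cos_theta_h * (a2 - 1.0) + 1.0
    return a2 / (math.pi * denom * denom)


def projected_area(alpha: float, steps: int = 20000) -> float:
    """Numerically integrate D(h) * cos(theta) over the hemisphere.

    For a valid normal distribution this integral should be close to 1.
    """
    d_theta = (math.pi / 2.0) / steps
    total = 0.0
    for i in range(steps):
        theta = (i + 0.5) * d_theta  # midpoint rule
        c = math.cos(theta)
        total += ggx_ndf(c, alpha) * c * math.sin(theta) * 2.0 * math.pi * d_theta
    return total


if __name__ == "__main__":
    for alpha in (0.2, 0.5, 1.0):
        print(alpha, ggx_ndf(1.0, alpha), projected_area(alpha))
```

Lower `alpha` values concentrate the distribution around the surface normal (a shinier surface), while higher values spread reflections out over more directions.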

The second paper shows off a more efficient method of rendering glittery surfaces in a 3D environment. According to Intel, simulating glittery surfaces is an "open challenge." With this new method, however, the average number of glitter particles visible in each pixel can be taken into account. That way, the GPU only needs to render the amount of glitter that is actually visible to the eye.

Finally, a third paper, Markov Chain Mixture Models for Real-Time Direct Illumination, presents a more efficient method of constructing photon trajectories in different illumination scenarios. The explanation is very complex, but the end result is a more efficient technique for rendering complex direct illumination in real time.

These three techniques obviously aren't going to guarantee that integrated GPUs will be able to run path-tracing smoothly. But they are aimed at improving the core aspects of path tracing, including ray tracing, shading, and sampling, which will help improve real-time path tracing performance on integrated GPUs (as well as discrete GPUs), according to Intel.

Getting real-time light simulation to work effectively on integrated GPUs would be a huge milestone. Even though real-time ray tracing has technically been around since 2018, it still takes a massive amount of processing power to run. Most of the world's best GPUs still can't run most modern AAA games at 60 FPS at high resolutions with ray tracing enabled. If Intel can get path tracing to work well on its own integrated graphics solutions, it would take the tech mainstream and make light simulation easier to run on discrete GPUs as well.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.


Actually, the MOST important point to be included is that Intel is working on some of these neural tools that will enable real-time path tracing with 70% - 90% of the compression of native path tracing renderers.

At the same time the neural rendering technique will also offer better performance, due to the compression. Intel has already discussed this in the "Path Tracing a Trillion Triangles" session.

Their method achieves 70%-95% compression compared to "vanilla" path tracing.

Amdlova

I believe Intel will show the middle finger to AMD and Nvidia on next gen graphics. Ray tracing and raw power.

hotaru.hino

Metal Messiah. said: Intel is working on some of these neural tools that will enable real-time path tracing with 70% - 90% of the compression of native path tracing renderers.

I looked into this more since 70-90% compression implies either a huge amount of redundancy or a huge amount of data being thrown away, or both. And neither makes sense.

So looking into it some more, my best guess is the actual data being presented to the renderer is just enough input parameters to feed an AI to generate the rest of the geometry. And as long as you're not seeding the AI with random data all the time and they're using the same exact model, it should spit out the same result.

bit_user

Metal Messiah. said: Intel has already discussed this in the "Path Tracing a Trillion Triangles" session.

I saw that same link on another tech site which covered this story. It doesn't seem to link to the session mentioned, nor can I find it from any of the links on that page. Not only that, I can't find it anywhere on their site, nor on the linked YouTube channel.

I think they got that link from Intel's article. My best guess is that it's planned to be one of the sessions at Siggraph 2023. I can't be sure, because they haven't published the 2023 Syllabus.

BTW, that's the official site of the classic text:

Good book ...and actually not that expensive, if you price it per page or per kg.

; )

TerryLaze

Metal Messiah. said: Actually, the MOST important point to be included is that, Intel is working on some of these neural tools that will enable real-time path tracing with 70% - 90% of the compression of native path tracing renderers.

At the same time the neural rendering technique will also offer better performance, due to the compression. Intel has already discussed this in the "Path Tracing a Trillion Triangles" session.

Their method achieves 70%-95% compression compared to "vanilla" path tracing.

hotaru.hino said: I looked into this more since 70-90% compression implies either a huge amount of redundancy or a huge amount of data being thrown away, or both. And neither makes sense.

So looking into it some more, my best guess is the actual data being presented to the renderer is just enough input parameters to feed an AI to generate the rest of the geometry. And as long as you're not seeding the AI with random data all the time and they're using the same exact model, it should spit out the same result.

If you look at how zip and rar show compression ratios, then that 70-95% means that they compress the data by 5-30%.
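The two readings of "70-95% compression" debated above can be made concrete with a little arithmetic. The sketch below is illustrative only: the function and convention names are made up here, and which convention Intel's figure uses is not established by this thread.

```python
def compressed_size(original: float, percent: float, convention: str) -> float:
    """Return the compressed size implied by a quoted percentage.

    convention == "space_saving": percent is the share of data removed.
    convention == "size_ratio":   percent is compressed size / original size,
                                  the reading TerryLaze attributes to archivers.
    """
    if convention == "space_saving":
        return original * (100 - percent) / 100
    if convention == "size_ratio":
        return original * percent / 100
    raise ValueError(f"unknown convention: {convention}")


if __name__ == "__main__":
    original_mb = 100  # hypothetical asset size in MB
    for pct in (70, 95):
        saving = compressed_size(original_mb, pct, "space_saving")
        ratio = compressed_size(original_mb, pct, "size_ratio")
        print(f"{pct}% as space saving -> {saving} MB left; "
              f"as size ratio -> {ratio} MB left")
```

Under the space-saving reading a 100 MB asset shrinks to between 5 and 30 MB; under the size-ratio reading it only shrinks to between 70 and 95 MB, which is the 5-30% reduction mentioned in the post.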

bit_user

Amdlova said: I believe Intel will show the middle finger to AMD and Nvidia on next gen graphics. Ray tracing and raw power.

Battlemage isn't rumored to be huge. So, it won't contend for the high-end. It should be more efficient than Alchemist, and therefore more competitive in the mid-range and entry-level.

bit_user

hotaru.hino said: I looked into this more since 70-90% compression implies either a huge amount of redundancy or a huge amount of data being thrown away, or both. And neither makes sense.

Just think of it like image compression or video compression. Yes, there's a lot of redundancy, but the real reason they can achieve such high compression ratios is that they're willing to accept lossy compression.

hotaru.hino said: So looking into it some more, my best guess is the actual data being presented to the renderer is just enough input parameters to feed an AI to generate the rest of the geometry.

No, this isn't about compressing geometry - it's for approximating lighting, reflection, refraction. You're much less likely to notice approximations there than in geometry. Heck, conventional game engines are full of hacks for approximating those sorts of things with a lot less accuracy than what they're proposing.

hotaru.hino

bit_user said: No, this isn't about compressing geometry - it's for approximating lighting, reflection, refraction. You're much less likely to notice approximations there than in geometry. Heck, conventional game engines are full of hacks for approximating those sorts of things with a lot less accuracy than what they're proposing.

The only place I'm seeing the 70-95% compression is in this page

And the only section where the value is mentioned is in here, which talks about geometry.

A versatile toolbox for real-time graphics ready to be unpacked

One of the oldest challenges and a holy grail of rendering is a method with constant rendering cost per pixel, regardless of the underlying scene complexity. While GPUs have allowed for acceleration and efficiency improvements in many parts of rendering algorithms, their limited amount of onboard memory can limit practical rendering of complex scenes, making compact high-detail representations increasingly important.

With our work, we expand the toolbox for compressed storage of high-detail geometry and appearances that seamlessly integrate with classic computer graphics algorithms. We note that neural rendering techniques typically fulfill the goal of uniform convergence, uniform computation, and uniform storage across a wide range of visual complexity and scales. With their good aggregation capabilities, they avoid both unnecessary sampling in pixels with simple content and excessive latency for complex pixels, containing, for example, vegetation or hair. Previously, this uniform cost lay outside practical ranges for scalable real-time graphics. With our neural level of detail representations, we achieve compression rates of 70–95% compared to classic source representations, while also improving quality over previous work, at interactive to real-time framerates with increasing distance at which the LoD technique is applied.

If AI is in play here, then this tells me they're using it to reduce a complex model into just enough data that an AI can use and fill in the gaps as needed. So sure, this could imply lossy compression since the AI may never generate an exact copy of an original, ground truth model. But at the same time, if this is used to create other kinds of geometry in the same class (e.g., a tree) by simply tweaking some parameters, then is it really data (de)compression at that point?

I mean, I can run Stable Diffusion to create way more data than the 5-6GB or so model used to run it.

Or, to generalize this even further, if I have an algorithm that takes a 32-bit seed and spits out a 256x256 maze, and assuming each cell is a byte, is this a 16384:1 compression system?
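The arithmetic in that question can be checked with a short sketch. The generator below is a hypothetical stand-in that fills a grid with random wall/open cells rather than building a proper maze; the names and the one-byte-per-cell layout follow the post's assumptions, not any real tool.

```python
import random

WIDTH = HEIGHT = 256   # grid dimensions from the post
SEED_BYTES = 4         # a 32-bit seed


def generate_grid(seed: int) -> bytes:
    """Deterministically expand a 32-bit seed into WIDTH*HEIGHT one-byte cells.

    Each cell is 0 (open) or 1 (wall). This is not a real maze algorithm,
    only a deterministic seed-to-grid function for illustration.
    """
    if not 0 <= seed < 2**32:
        raise ValueError("seed must fit in 32 bits")
    rng = random.Random(seed)
    return bytes(rng.getrandbits(1) for _ in range(WIDTH * HEIGHT))


grid_bytes = WIDTH * HEIGHT             # 65536 bytes at one byte per cell
nominal_ratio = grid_bytes // SEED_BYTES

# Same seed and same generator give the same grid every time.
deterministic = generate_grid(1234) == generate_grid(1234)

# A 32-bit seed can select at most 2**32 grids, far fewer than the
# 2**65536 wall/open patterns that exist for this grid size.
reachable_grids = 2**32

if __name__ == "__main__":
    print(nominal_ratio, deterministic, reachable_grids)
```

The nominal ratio only describes the generator's output size relative to its input. Whether that counts as compression depends on whether a given, pre-existing grid can be mapped back to a seed, which is the point the next reply makes.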

ET3D

hotaru.hino said: Or more to generalize this even further, if I have an algorithm that takes a 32-bit seed and spits out a 256x256 maze, and assuming each cell is a byte, is this a 16384:1 compression system?

No, but if you get a 256x256 maze and can find a 32-bit seed that generates it, then it's 16384:1 compression.

I'm not sure what you misunderstand, because "just enough data that an AI can use and fill in the gaps as needed" is pretty much spot on for AI compression. It doesn't imply lossy at all (even though that could be the case). You could have the AI regenerate the data and keep in addition the exact difference from the source (possibly compressed in another manner) and then get an exact copy. You could of course not correct and use what the AI generated, and then you'd indeed get something lossy, just a lot more efficient for the quality than other compression methods.
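The "regenerate, then store the exact difference" idea described above is ordinary predictive coding and can be sketched in a few lines. Everything here is an illustrative assumption: `predict` is a trivial deterministic ramp standing in for a learned model, the source data is synthetic, and `zlib` stands in for "compressed in another manner".

```python
import random
import zlib


def predict(n: int) -> bytes:
    """Hypothetical stand-in for an AI generator: a deterministic ramp."""
    return bytes((i // 16) % 256 for i in range(n))


def encode(source: bytes) -> bytes:
    """Store only the compressed difference between source and prediction."""
    pred = predict(len(source))
    residual = bytes((s - p) % 256 for s, p in zip(source, pred))
    return zlib.compress(residual)


def decode(payload: bytes, n: int) -> bytes:
    """Rebuild the exact source from the prediction plus the stored residual."""
    residual = zlib.decompress(payload)
    pred = predict(n)
    return bytes((p + r) % 256 for p, r in zip(pred, residual))


# Synthetic source: the prediction plus small random errors.
rng = random.Random(0)
n = 4096
source = bytes((p + rng.choice((-1, 0, 0, 0, 1))) % 256 for p in predict(n))

payload = encode(source)
restored = decode(payload, n)   # lossless: prediction + residual
lossy = predict(n)              # lossy: prediction only, no correction

if __name__ == "__main__":
    print(len(source), len(payload), restored == source, lossy == source)
```

Keeping the residual gives an exact copy at the cost of extra storage; dropping it gives the lossy variant, where the output is only as good as the generator.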

Yup, I don't understand the underlying tech. I'm glad Intel is doing what AMD promised, ray tracing for everyone. (is it correct here? I don't know the difference between ray/path tracing)