Nvidia's AI Tech Can Turn Standard Video Into a Slow-Motion Showcase

Nvidia has developed a method to convert standard video recordings into detailed slow-motion video clips. The company trained a neural network to fill in the missing frames and simulate ultra-high framerate video.

Nvidia is always looking for new ways to demonstrate the power of its GPUs in deep-learning scenarios, and the company’s latest demo has our attention. Nvidia today revealed that it developed a method to create high-quality slow-motion video from low-framerate source material using a trained neural network. The team of researchers that developed the technique plans to present its findings at the annual Computer Vision and Pattern Recognition (CVPR) conference in Salt Lake City, Utah, on June 21.

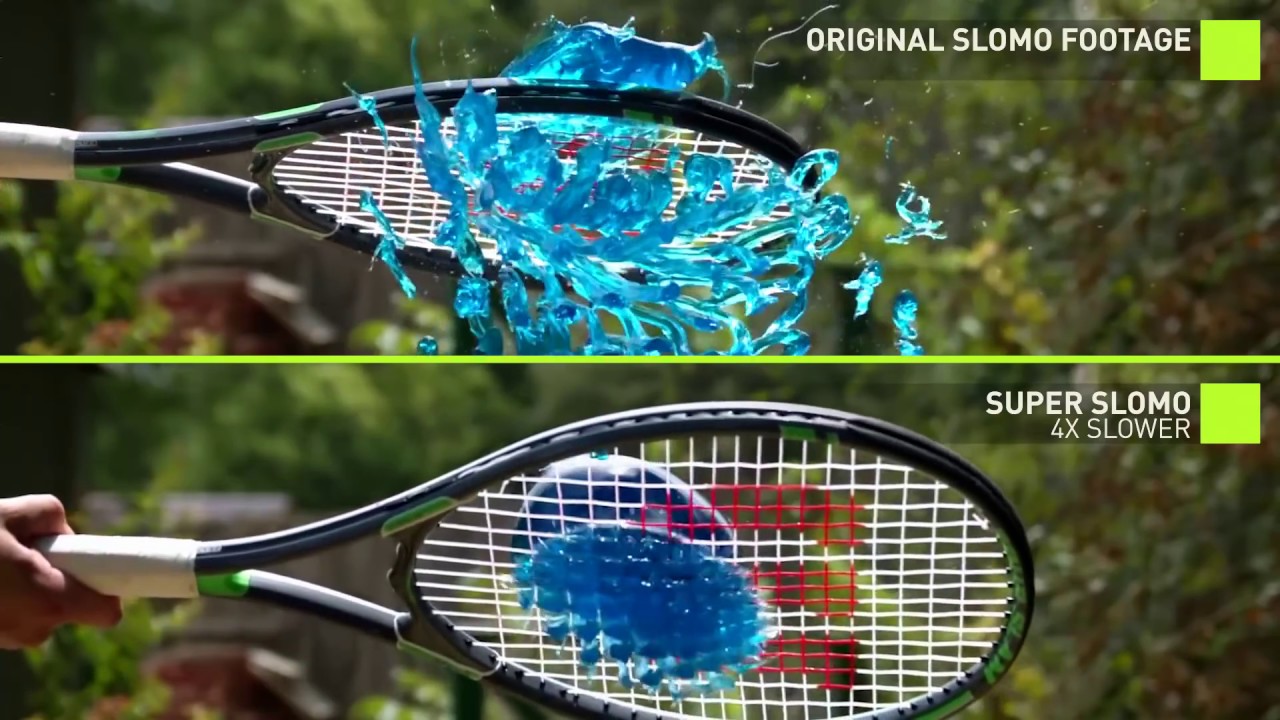

Nvidia’s new AI-powered Super SloMo video system can analyze the existing frames of a 30-fps (or better) source video and automatically generate frames to fill in the gaps to produce smooth slow-motion video.

To train the system, a team of AI researchers at Nvidia ran “over 11,000 videos of everyday and sports activities shot at 240 frames-per-second” through the cuDNN-accelerated PyTorch deep learning framework, powered by an array of Tesla V100 GPUs. The result is a prediction model that can interpret motion in video sequences and generate the intermediate frames needed to reduce the playback speed without causing jittery motion.

“Our method can generate multiple intermediate frames that are spatially and temporally coherent,” the researchers said. “Our multi-frame approach consistently outperforms state-of-the-art single frame methods.”

To reduce the playback speed of a 30-fps video by a factor of four, the AI system would need to generate 90 additional frames for each second of video: 120 frames are needed in total, and only 30 of them exist in the source. It’s hard to believe that a neural network could accurately predict that many missing frames, but that’s just the tip of the iceberg.
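The frame count follows from simple arithmetic, and a few lines of Python make it easy to check for other slowdown factors. This is only a back-of-the-envelope sketch, not anything from Nvidia's system: the function names are hypothetical, and it assumes the slowed clip plays back at the same framerate as the source.

```python
def frames_to_generate(source_fps: int, slowdown: int) -> int:
    """Extra frames needed per second of source footage.

    Assumption: the slowed clip plays back at source_fps, so one second
    of source footage must stretch to `slowdown` seconds of output.
    """
    if source_fps <= 0 or slowdown < 1:
        raise ValueError("source_fps must be positive and slowdown at least 1")
    total_frames = source_fps * slowdown   # frames needed after slowing down
    return total_frames - source_fps       # frames that do not exist yet


def frames_per_gap(slowdown: int) -> int:
    """Generated frames inserted between each pair of original frames."""
    if slowdown < 1:
        raise ValueError("slowdown must be at least 1")
    return slowdown - 1


if __name__ == "__main__":
    # 30-fps source slowed by a factor of four
    print(frames_to_generate(30, 4))  # 90
    print(frames_per_gap(4))          # 3
```

In other words, a 4x slowdown means three of every four frames in the final clip are synthesized rather than recorded.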

Nvidia’s researchers also demonstrated that their AI system could increase the framerate of existing slow-motion video to reduce its playback speed further. The team ran a handful of slow-motion video clips from The Slow Mo Guys YouTube channel through the AI slow-motion system to create ultra-slow-motion video clips that retain the detail and smoothness of the original clips.

Impressive visuals aside, Nvidia’s system can convert existing video into slow-motion video, but it’s not a substitute for a real slow-motion camera. The clips that the system produced from The Slow Mo Guys’ videos look crisp and clear, but that’s because of the source material. Examples of standard-framerate video reduced to slow motion highlight the downside of such a technique. True slow-motion video enables you to see the details of the scene with high precision and make precise scientific measurements. But standard video slowed down using AI doesn’t allow you to see the finer details of each frame. Also, AI-generated frames don't represent actual recorded reality, so they can't take the place of true high-framerate footage for many research tasks.

Nvidia’s AI-generated Super SloMo video system isn’t yet publicly available, and the company gave no indication as to when it would be made public.

Kevin Carbotte is a contributing writer for Tom's Hardware who primarily covers VR and AR hardware. He has been writing for us for more than four years.

bit_user Motion interpolation is a hard problem that's pretty well-suited to AI. I wouldn't use it for slow-mo, so much as framerate conversion (i.e. converting between 24, 25, 30, 50, and 60 fps) and motion smoothing, like that featured in many TVs.

You could even use it to extrapolate frames, in VR or videogames, when frames are late leaving the rendering pipeline.

Co BIY Pretty soon this tech will allow the easy "Photoshopping" of videos to include that which never happened.

You will not be able to believe your eyes.

redgarl People are missing the point that this is not intended for any kind of video smoothing, it is intended for visual prediction.

Basically Nvidia AI car technology is having a huge issue. It is relating on video feed entirely. What Nvidia wants to do is introducing prediction capability for self-driving car. By analyzing cars behaviors feeds, they can predict how an autonomous car can react.

It is something necessary on the short term, but it doesn't solve the problem at all.

pensive69 '...By analyzing cars behaviors feeds, they can predict how an autonomous car can react....'

i'd add a suggestion to the auton vehicle types.

also remember they need to focus on what other human operated vehicles are doing and how they react.

the auton vehicle isn't out there alone.

maybe they also need a auton vehicle social network or neural network to communicate how they are reacting and manage any "bad actors".

bit_user

> redgarl said: People are missing the point that this is not intended for any kind of video smoothing, it is intended for visual prediction.
>
> Basically Nvidia AI car technology is having a huge issue. It is relating on video feed entirely. What Nvidia wants to do is introducing prediction capability for self-driving car. By analyzing cars behaviors feeds, they can predict how an autonomous car can react.
>
> It is something necessary on the short term, but it doesn't solve the problem at all.

Except it's a method for video interpolation - not extrapolation.

And nowhere does the article, their blog post, or the research paper reference autonomous driving.

I'm not sure you realize just how many places AI is starting to show up. Nvidia is involved in lots of AI research that has nothing to do with autonomous driving.

BTW, if you're going to make factual-sounding posts from your imagination, it would probably be a good idea to start them with "I wonder if...". And if it is well-sourced information, then cite your sources.

bit_user

> pensive69 said: maybe they also need a auton vehicle social network ... to communicate how they are reacting and manage any "bad actors".

Yes, inter-vehicular communication networks are a real thing. Look it up.

stdragon That's amazing! I wonder if this can already improve on the technique used in TVs to interpolate 30 FPS to 60 without creating the dreaded "soap opera" effect.

bit_user

> stdragon said: That's amazing! I wonder if this can already improve on the technique used in TVs to interpolate 30 FPS to 60 without creating the dreaded "soap opera" effect.

Um, I think the "soap opera effect" is inherent in high-framerate video. It's entirely a subconscious association people have with the juddery 24 fps of film and the smooth 60i of soap operas. Leave your motion interpolater on, for a while, and the effect will naturally disappear. Then, you'll miss the motion interpolation whenever you don't have it.

https://en.wikipedia.org/wiki/Motion_interpolation#Soap_opera_effect

For me, the improvement in visual clarity of using motion interpolation is a huge win. The only negative is the artifacts, most of which Nvidia's solution could hopefully eliminate.

See also:

https://en.wikipedia.org/wiki/Display_motion_blur