TSMC's N3 And Intel's Tiled Design Could Enable Monstrous iGPUs

Arrow Lake projected to offer Xe GPU with up to 384 EUs.

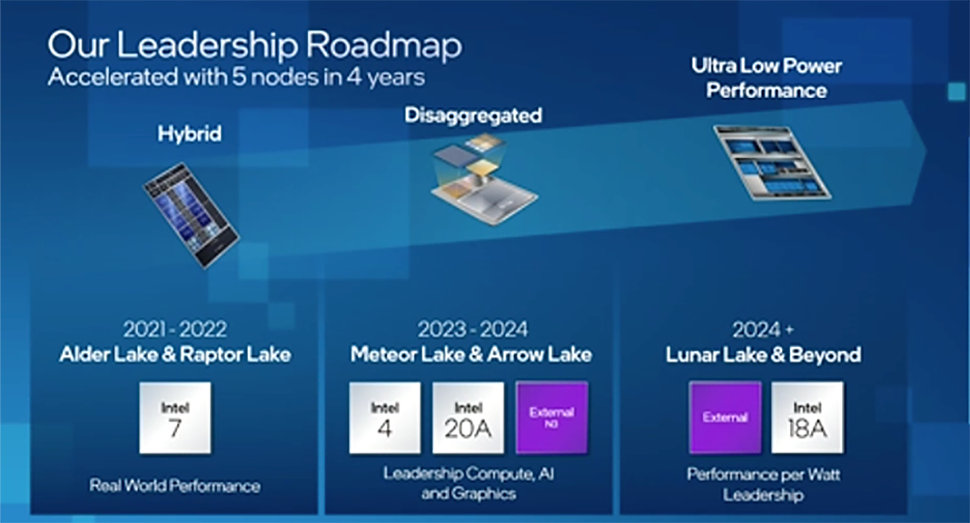

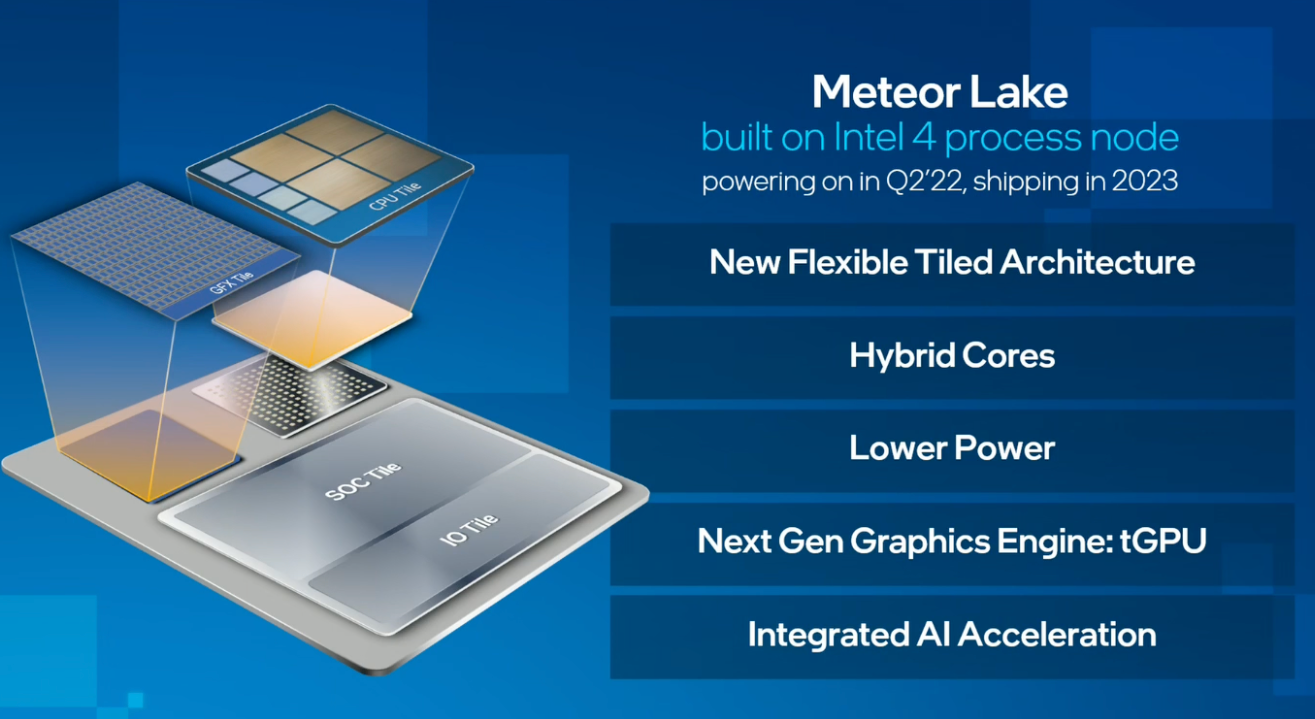

A multi-chiplet tiled design gives Intel a lot of freedom regarding transistor count, performance, and features for its upcoming CPUs. Intel aims to enhance general-purpose and graphics performance with its Meteor Lake and Arrow Lake processors (both codenames), due in 2023 and 2024, respectively. Apparently, equipping its CPUs with monstrous integrated GPUs (iGPUs) is part of Intel's master plan. Within two years, Intel is rumored to at least quadruple the performance of its iGPUs.

Intel has consistently increased the iGPU performance of its processors for several years now. The company's Ivy Bridge chips arrived ten years ago, featuring an iGPU with up to 16 execution units (EUs). By contrast, Intel's highest-performing Tiger Lake (and Alder Lake) processors feature a considerably more advanced Xe iGPU with up to 96 EUs. Intel does not integrate bigger iGPUs into its processors today because they would make dies large, and large dies can mean high development costs, high production costs, and potentially poor yields. With tiled designs, Intel will reduce the die size of each individual component, which should lower development costs and, in theory, ensure decent yields.
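The yield argument can be made concrete with the textbook first-order Poisson die-yield model, Y = exp(-D0 * A), where D0 is the defect density and A is the die area. This is a sketch, not Intel's or TSMC's actual yield data: the defect density (0.1 defects/cm²), the 200 mm² die, and the four-way split below are hypothetical values chosen only for illustration. The point is that when a die is split into tiles that can be tested before packaging, a single defect scraps a much smaller piece of silicon.

```python
import math

def poisson_yield(area_mm2: float, defects_per_cm2: float) -> float:
    """First-order Poisson die yield: fraction of dies with zero defects."""
    area_cm2 = area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return math.exp(-defects_per_cm2 * area_cm2)

# Hypothetical inputs, for illustration only (not real foundry data).
D0 = 0.1              # defects per cm^2
MONOLITHIC_MM2 = 200  # one big die carrying a large iGPU
TILES = 4             # the same silicon split into four equal tiles

mono = poisson_yield(MONOLITHIC_MM2, D0)
tile = poisson_yield(MONOLITHIC_MM2 / TILES, D0)

# With known-good-die testing, defective tiles are discarded individually,
# so the share of wafer area ending up in sellable parts tracks the
# per-tile yield rather than the monolithic yield.
print(f"monolithic 200 mm^2 die yield: {mono:.3f}")  # 0.819
print(f"single 50 mm^2 tile yield:     {tile:.3f}")  # 0.951
```

The model ignores packaging yield and the extra area spent on die-to-die interfaces, both of which work against tiling.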

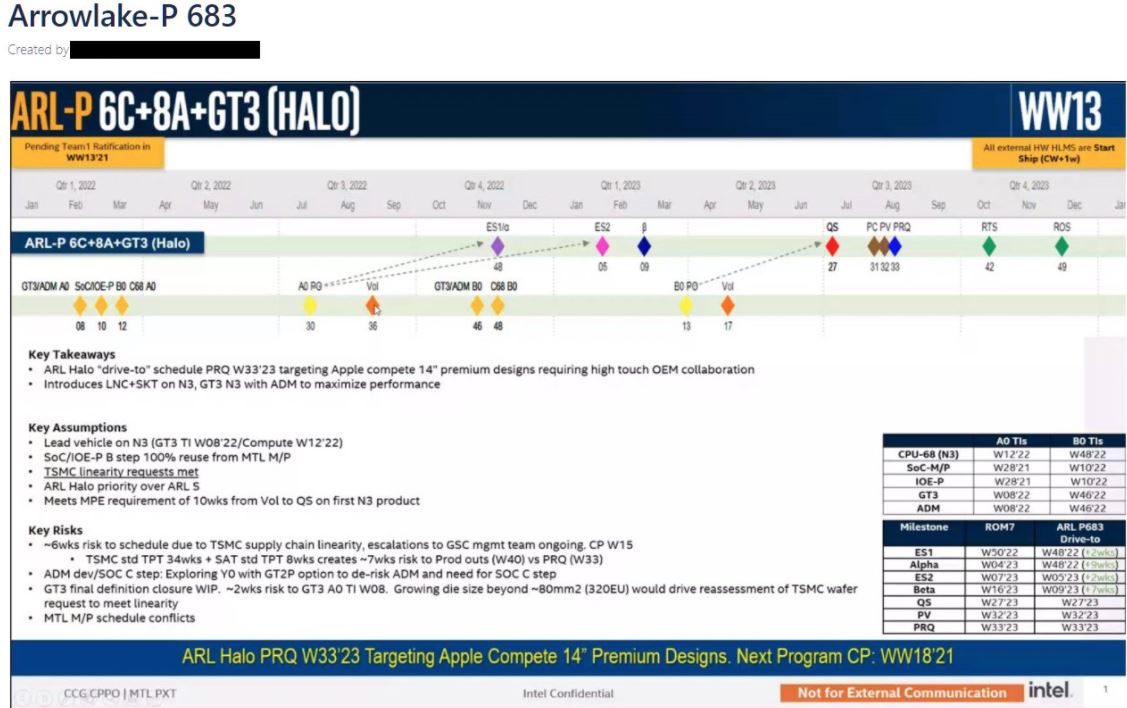

As reported, Meteor Lake and Arrow Lake processors will place their iGPUs on a separate tile produced by TSMC on its N3 fabrication process, which provides some additional design flexibility. With Arrow Lake, Intel is rumored to equip its iGPU with either 320 or 384 EUs (corresponding to 2,560 or 3,072 stream processors, assuming that Intel's architecture is not completely revamped in two years). The figure depends on the source: AdoredTV reports 320 EUs, while another source claims that Intel's plans are now bolder and include an iGPU with 384 EUs.
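The stream-processor figures follow from simple multiplication, assuming each EU keeps the eight FP32 ALUs of today's Xe-LP EU (the same "not completely revamped" assumption stated above). A minimal sketch:

```python
ALUS_PER_EU = 8      # Xe-LP: eight FP32 ALUs per EU (assumed unchanged)
TIGER_LAKE_EUS = 96  # today's largest Xe iGPU

def stream_processors(eus: int, alus_per_eu: int = ALUS_PER_EU) -> int:
    """Convert an EU count into an ALU ("stream processor") count."""
    return eus * alus_per_eu

for eus in (TIGER_LAKE_EUS, 320, 384):
    ratio = eus / TIGER_LAKE_EUS
    print(f"{eus} EUs -> {stream_processors(eus)} stream processors "
          f"({ratio:.2f}x Tiger Lake's EU count)")
# 96 EUs -> 768 stream processors (1.00x Tiger Lake's EU count)
# 320 EUs -> 2560 stream processors (3.33x Tiger Lake's EU count)
# 384 EUs -> 3072 stream processors (4.00x Tiger Lake's EU count)
```

EU count is only a proxy: real performance also depends on clocks, memory bandwidth, and any architectural changes.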

At this point, it is hard to say who is right and who is wrong, since Intel's plans are not public (and Intel does not comment on unreleased products). However, at least two sources claim that Intel plans to dramatically increase the performance of its iGPUs, and such an increase would be consistent with Intel's multi-year integrated graphics strategy. Still, since the plans are not official, they could change.

Radically increasing the performance of its integrated GPUs will give Intel's highly integrated Meteor Lake and Arrow Lake processors an edge over entry-level discrete offerings from AMD and Nvidia. As a result, this move could allow the chip giant to increase its per-CPU prices. Furthermore, these upcoming CPUs will be more competitive against Apple's forthcoming M-series systems-on-chip for MacBook Pro laptops, which feature high-end integrated GPUs.

Making the graphics tiles of Meteor Lake and Arrow Lake CPUs on TSMC's N3 node (or the N3E node, given its improved process window) will enable Intel to learn how to design GPUs using this manufacturing technology. Therefore, it could transition its standalone graphics processors to this fabrication process fairly quickly, which would give the company a strategic advantage over rivals.

Another advantage of using TSMC's N3/N3E for graphics will be the ability to offer high-performance iGPUs in market segments currently dominated by cheap discrete GPUs like AMD's Navi 24 or Nvidia's GA107/GP107/GP108.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


InvalidError

Depending on how much of a premium Intel is going to ask for big-IGP CPU variants, we may be one step closer to sub-$300 GPUs becoming obsolete.

helper800

I long for the day that I can put in a CPU with integrated graphics that gives mid-tier or higher discrete performance from the previous-gen cards. That would surely light a fire under AMD and Nvidia's butts on the low end.

hotaru.hino

The problem with pushing higher performing iGPUs though is the memory subsystem has to keep up, otherwise VRAM has to be baked into the part. For example, the RTX 3050 has a memory bandwidth of 224 GB/sec. If we want something similar on an iGPU, with DDR5-6400 (rated for 51.2 GB/sec), you'd need at least a quad-channel memory subsystem.

Not to mention the additional power/heat/etc.
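The bandwidth arithmetic in this comment can be checked with peak bandwidth = transfer rate × bus width. The sketch below assumes a 64-bit channel (one DDR5 DIMM, i.e. two 32-bit subchannels) and the desktop RTX 3050's 128-bit GDDR6 bus at 14 Gbps per pin, which yields the quoted 224 GB/sec. Four such DDR5 channels reach 204.8 GB/sec, so strictly matching the RTX 3050 would take five. These are theoretical peaks; sustained bandwidth is lower in practice.

```python
import math

def peak_bandwidth_gbs(mega_transfers_per_s: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * bytes_per_transfer / 1000

ddr5_channel = peak_bandwidth_gbs(6400, 64)  # one 64-bit DDR5-6400 channel
rtx3050 = peak_bandwidth_gbs(14000, 128)     # 128-bit GDDR6 at 14 Gbps per pin

channels_needed = math.ceil(rtx3050 / ddr5_channel)

print(f"DDR5-6400, 64-bit channel: {ddr5_channel:.1f} GB/s")      # 51.2
print(f"RTX 3050 (128-bit GDDR6):  {rtx3050:.1f} GB/s")           # 224.0
print(f"four DDR5 channels:        {4 * ddr5_channel:.1f} GB/s")  # 204.8
print(f"channels to match:         {channels_needed}")            # 5
```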

helper800

hotaru.hino said: The problem with pushing higher performing iGPUs though is the memory subsystem has to keep up, otherwise VRAM has to be baked into the part. For example, the RTX 3050 has a memory bandwidth of 224 GB/sec. If we want something similar on an iGPU, with DDR5-6400 (rated for 51.2 GB/sec), you'd need at least a quad-channel memory subsystem.

Not to mention the additional power/heat/etc.

Not as simple as adding a 16GB DDR6 module on the side of the CPU socket, is it? Well, quad-channel memory in a desktop without a gigantic price premium is something we have not seen move into the mainstream yet. I honestly don't know why, but I would assume the extra motherboard cost and the memory-subsystem development for the CPU are hard to add cost-effectively. Now, with the DDR5 generation having the memory controller on the sticks themselves, does that eliminate some of that issue?

InvalidError

hotaru.hino said: The problem with pushing higher performing iGPUs though is the memory subsystem has to keep up, otherwise VRAM has to be baked into the part.

Not a problem: Intel can simply reuse the same memory tiles they are using in Ponte Vecchio or some future iteration thereof. An easy 4-8GB of VRAM with 500+GB/s of bandwidth there.

helper800 said: Now with the DDR5 generation having the memory controller on the sticks themselves does that eliminate some of that issue?

Huh? No. The memory controller is still in the CPU. What has moved from the motherboard to the DIMMs is the memory VRM. The other thing new with DDR5 is that DIMMs also have a command buffer chip, so the CPU's memory controller only needs to drive one chip per DIMM for commands instead of driving the fan-out to every memory chip, reducing the bus load by up to 16X. The extra buffer chip is part of the reason for DDR5's increased latency.

helper800

InvalidError said: Huh? No. The memory controller is still in the CPU. What has moved from the motherboard to the DIMMs is the memory VRM.

Ahh, I was mistaken. The shiny new RAM version moved some stuff around, and I must have misread that part.

hotaru.hino

InvalidError said: Not a problem: Intel can simply reuse the same memory tiles they are using in Ponte Vecchio or some future iteration thereof. An easy 4-8GB of VRAM with 500+GB/s of bandwidth there.

Cue developers or something whining about how they can't access that for CPU-related stuff because they paired the part up with a high-end GPU and they want "super ultra fast RAM".

spongiemaster

helper800 said: I long for the day that I can put in a CPU with integrated graphics that gives mid-tier or higher discrete performance from the previous-gen cards. That would surely light a fire under AMD and Nvidia's butts on the low end.

Judging by the current market, the only thing such a move would persuade AMD and Nvidia to do is abandon that part of the market completely and focus on releasing even more expensive tiers at the top to see how far they can push it. They'd probably be happy not to have to release a stripped-down sub-$300 GPU and instead send all their silicon to high-end GPUs and enterprise products.

helper800

spongiemaster said: Judging by the current market, the only thing such a move would persuade AMD and Nvidia to do is abandon that part of the market completely and focus on releasing even more expensive tiers at the top to see how far they can push it. They'd probably be happy not to have to release a stripped-down sub-$300 GPU and instead send all their silicon to high-end GPUs and enterprise products.

No, I think they would have to unload their not-up-to-snuff silicon into the low-end market regardless; it would just see increased competition and thus lower pricing to match.

InvalidError

hotaru.hino said: Cue developers or something whining about how they can't access that for CPU-related stuff because they paired the part up with a high-end GPU and they want "super ultra fast RAM".

CPUs don't generally need all that much memory bandwidth, since each core has a much larger amount of L2/L3 cache to work with than GPUs do. If your algorithm has no data locality (cannot work from cache), then you get screwed by memory latency no matter how much memory bandwidth you have.
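The locality point in the last comment can be illustrated with a toy cache model. The sketch below assumes a 32 KiB fully associative LRU cache with 64-byte lines and 8-byte elements; these parameters are illustrative and do not model any particular Intel or AMD core. Streaming through an array hits on seven of every eight accesses, because each miss brings in a whole line, while random reads over an 8 MiB array almost always miss (a hit rate well under one percent), so nearly every access pays the trip to DRAM.

```python
import random
from collections import OrderedDict

LINE_BYTES = 64           # assumed cache-line size
ELEM_BYTES = 8            # assumed element size (one 64-bit value)
CACHE_BYTES = 32 * 1024   # assumed cache capacity (toy value)

def hit_rate(addresses, cache_bytes=CACHE_BYTES):
    """Fraction of accesses that hit in a fully associative LRU cache."""
    capacity = cache_bytes // LINE_BYTES  # number of lines the cache holds
    cache = OrderedDict()                 # keys are line numbers, oldest first
    hits = 0
    for addr in addresses:
        line = addr // LINE_BYTES
        if line in cache:
            hits += 1
            cache.move_to_end(line)       # mark as most recently used
        else:
            cache[line] = None
            if len(cache) > capacity:
                cache.popitem(last=False) # evict the least recently used line
    return hits / len(addresses)

def sequential(n):
    """Byte addresses of a linear pass over n elements."""
    return [i * ELEM_BYTES for i in range(n)]

def random_reads(n, array_elems, seed=0):
    """Byte addresses of n uniformly random element reads."""
    rng = random.Random(seed)
    return [rng.randrange(array_elems) * ELEM_BYTES for _ in range(n)]

print(f"sequential: {hit_rate(sequential(100_000)):.3f}")  # 0.875
print(f"random:     {hit_rate(random_reads(100_000, 1 << 20)):.3f}")
```

Real CPUs add set associativity, several cache levels, and hardware prefetchers, which make streaming even cheaper; random access over a large working set stays latency-bound.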