IBM intros Telum II processor — 5.5GHz chip with onboard DPU claimed to be up to 70% faster

IBM's new processor brings AI to mainframes.

IBM has announced its Telum II processor, which has a built-in AI accelerator and is aimed at next-generation IBM Z mainframes that handle both mission-critical tasks and AI workloads. According to an email we received from IBM, the new processor can improve performance "by up to 70% across key system components" compared to the original Telum, released in 2021.

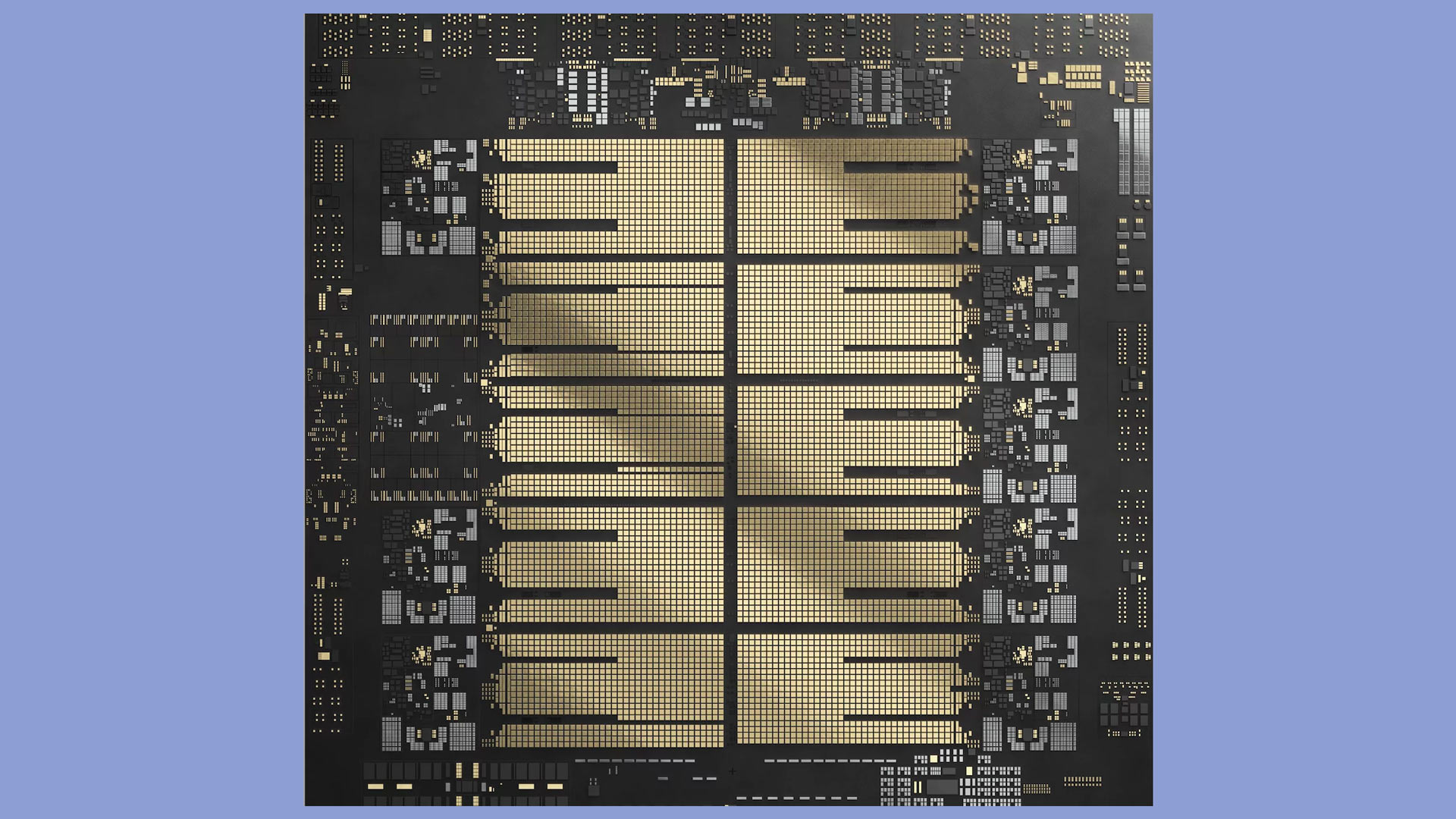

The Telum II processor packs eight high-performance cores operating at 5.5GHz, with improved branch prediction, store writeback, and address translation. Each core has 36MB of L2 cache, and on-chip cache capacity is up 40% over its predecessor. The CPU also supports virtual L3 and L4 caches expanding to 360MB and 2.88GB, respectively. A key feature of Telum II is its improved AI accelerator, which delivers four times the computational power of its predecessor, reaching 24 trillion operations per second (TOPS) with INT8 precision. The accelerator's architecture is optimized for handling AI workloads in real time with low latency. In addition, Telum II has a built-in DPU for faster transaction processing. Telum II is made on Samsung's 5HPP process technology and contains 43 billion transistors.

System-level improvements in Telum II allow each AI accelerator within a processor drawer to receive tasks from any of the eight cores, ensuring balanced workloads and maximizing the available 192 TOPS per drawer across all accelerators when fully configured.
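The per-drawer figure can be checked against the per-chip figure with a little arithmetic. The sketch below uses only the numbers quoted above and assumes the drawer total is a plain sum of identical accelerators; the variable names are illustrative, and the predecessor figure is merely what the "four times" claim implies, not a number IBM supplied here.

```python
# Figures quoted in the article.
TOPS_PER_ACCELERATOR = 24   # INT8 TOPS for one Telum II on-chip AI accelerator
DRAWER_TOPS = 192           # INT8 TOPS for a fully configured processor drawer
SPEEDUP_OVER_TELUM = 4      # "four times the computational power of its predecessor"

# Derived values. Assumption: the drawer total is a simple sum of
# identical accelerators, one per processor.
accelerators_per_drawer = DRAWER_TOPS // TOPS_PER_ACCELERATOR
implied_telum_tops = TOPS_PER_ACCELERATOR / SPEEDUP_OVER_TELUM

print(f"Accelerators per drawer: {accelerators_per_drawer}")
print(f"Implied throughput of the original Telum accelerator: {implied_telum_tops} TOPS")
```

Under that assumption, 192 TOPS corresponds to eight accelerators per drawer, and the fourfold claim implies roughly 6 TOPS for the original Telum's accelerator.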

In addition to Telum II, IBM introduced its new Spyre AI accelerator add-in-card developed in collaboration with IBM Research and IBM. This processor contains 32 AI accelerator cores and shares architectural similarities with the AI accelerator in Telum II. The Spyre Accelerator can be integrated into the I/O subsystem of IBM Z through PCIe connections to boost the system's AI processing power. Spyre packs 26 billion transistors and is made on Samsung's 5LPE production node.

Both the Telum II processor and the Spyre Accelerator are designed to support ensemble AI methods, which involve using multiple AI models to improve the accuracy and performance of tasks. An example of this is in fraud detection, where combining traditional neural networks with large language models (LLMs) can significantly enhance the detection of suspicious activities, according to IBM.
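To make the ensemble idea concrete, here is a minimal sketch of one common way to combine two models: a weighted average of their scores. It is an illustration only, not IBM's method. The function names, the 0-to-1 score scale, the weight, and the threshold are all hypothetical.

```python
def ensemble_fraud_score(nn_score: float, llm_score: float, nn_weight: float = 0.5) -> float:
    """Blend two fraud scores (each assumed to lie in [0, 1]) by weighted average.

    nn_score  -- hypothetical score from a traditional neural network
    llm_score -- hypothetical score from a large language model
    nn_weight -- share of the final score given to the neural network
    """
    if not 0.0 <= nn_weight <= 1.0:
        raise ValueError("nn_weight must be between 0 and 1")
    return nn_weight * nn_score + (1.0 - nn_weight) * llm_score


def is_suspicious(nn_score: float, llm_score: float, threshold: float = 0.5) -> bool:
    """Flag a transaction when the blended score reaches the (hypothetical) threshold."""
    return ensemble_fraud_score(nn_score, llm_score) >= threshold


# Example: the two models disagree, and the blend decides.
print(is_suspicious(0.9, 0.7))  # both models lean toward fraud
print(is_suspicious(0.1, 0.2))  # both models lean toward legitimate
```

A real deployment would more likely run the fast model on every transaction and call the larger model only for borderline cases, but the blending step would look similar.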

Both the Telum II processor and the Spyre Accelerator will be available in 2025, though IBM has not specified whether that means early or late in the year.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

jp7189

24 TOPs int8 seems really low. Why bother wasting the transistors on that when any add-in accelerator would be many times that performance? E.g. an H100 is over 3,000 int8 TOPs.

gg83

jp7189 said: 24 TOPs int8 seems really low. Why bother wasting the transistors on that when any add-in accelerator would be many times that performance? E.g. an H100 is over 3,000 int8 TOPs.

I think IBM has seen the future and they have a master plan.

Blastomonas

Seems weak compared to other offerings. Not sure what the appeal is.

Also don't see much value in quoting performance compared to its former gen. 70% improvement on crap is not an improvement. Besides, after 3 years I would expect at least as much.

bit_user

I really have to wonder how long the mainframe market is going to sustain critical mass to keep funding the development of these custom CPUs.

jp7189 said: 24 TOPs int8 seems really low. Why bother wasting the transistors on that when any add-in accelerator would be many times that performance? E.g. an H100 is over 3,000 int8 TOPs.

I think it's because some of IBM's customers want AI capability with mainframe reliability. However, you're right that it's still very limiting. These things are way too expensive to achieve GPU-level performance through scaling, so this level of performance feels more like a tease than anything real.

bit_user

Blastomonas said: Seems weak compared to other offerings. Not sure what the appeal is.

The appeal is for businesses that are already locked into the mainframe ecosystem or who need the zero-downtime reliability. In some sectors & countries, there might even be regulatory requirements forcing them to use mainframes.

NinoPino

@Author The following sentence has something strange: "IBM introduced its new Spyre AI accelerator add-in-card developed in collaboration with IBM Research and IBM." I suppose it should end with "...IBM Research."

NinoPino

jp7189 said: 24 TOPs int8 seems really low. Why bother wasting the transistors on that when any add-in accelerator would be many times that performance? E.g. an H100 is over 3,000 int8 TOPs.

It is 24x8 = 192 TOPs. Not so low imho.

And considering the fact that any core can redirect the AI work to any accelerator, this means that it can work as 8x24, 1x192, 2x96, 24+168, and so on. Great flexibility.

JRStern

This is mainframe architecture?

What is a DPU - some kind of smart controller?

Ask me about old 360 models, a bit of 370s, but I haven't really looked at IBM architectures since before Y2K.

gg83

NinoPino said: @Author The following sentence has something strange: "IBM introduced its new Spyre AI accelerator add-in-card developed in collaboration with IBM Research and IBM." I suppose it should end with "...IBM Research."

IBM research collaboration with IBM Research team and IBM's own IBM research department.