Power Consumption on Intel Core i9-13900KS

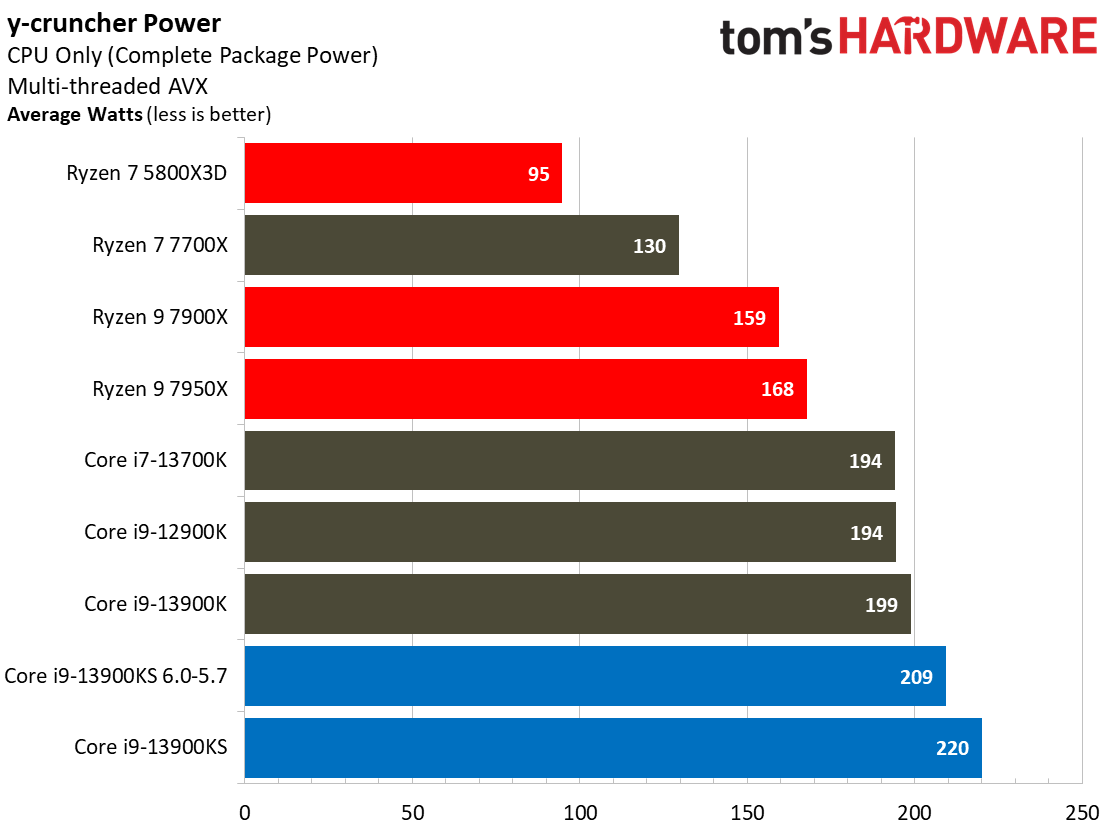

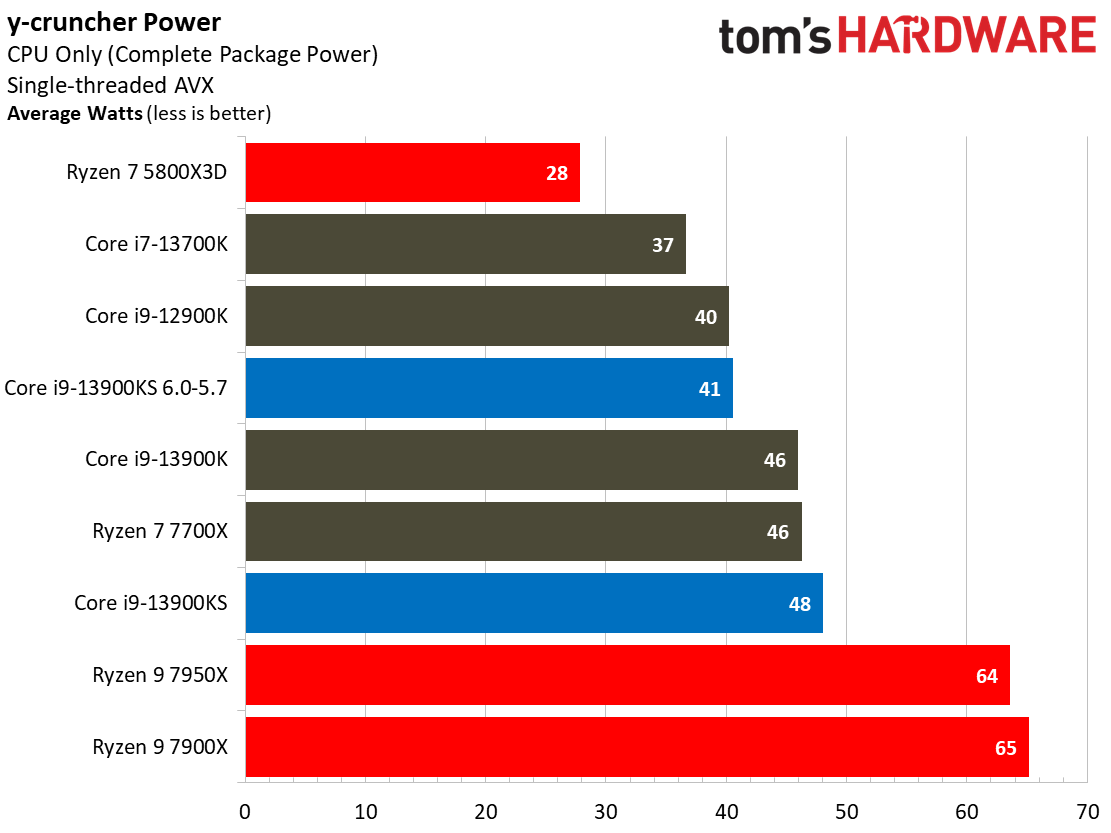

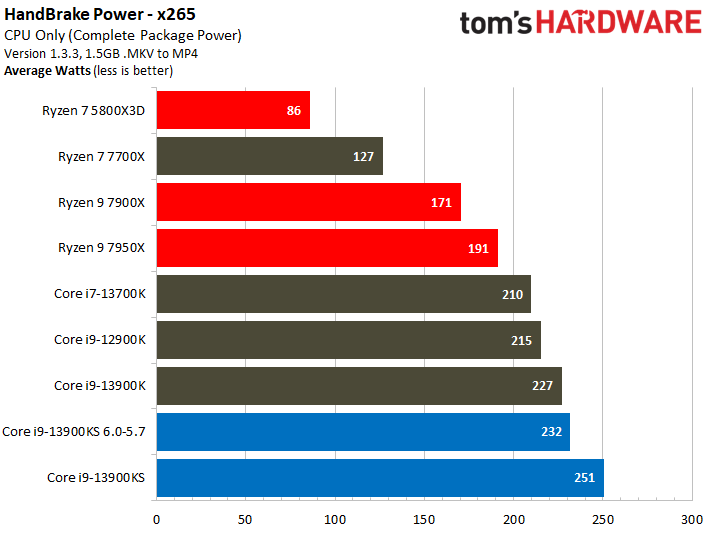

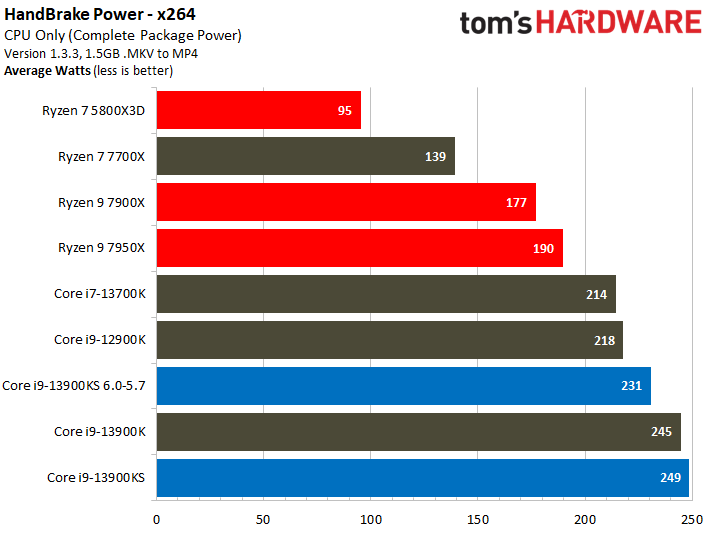

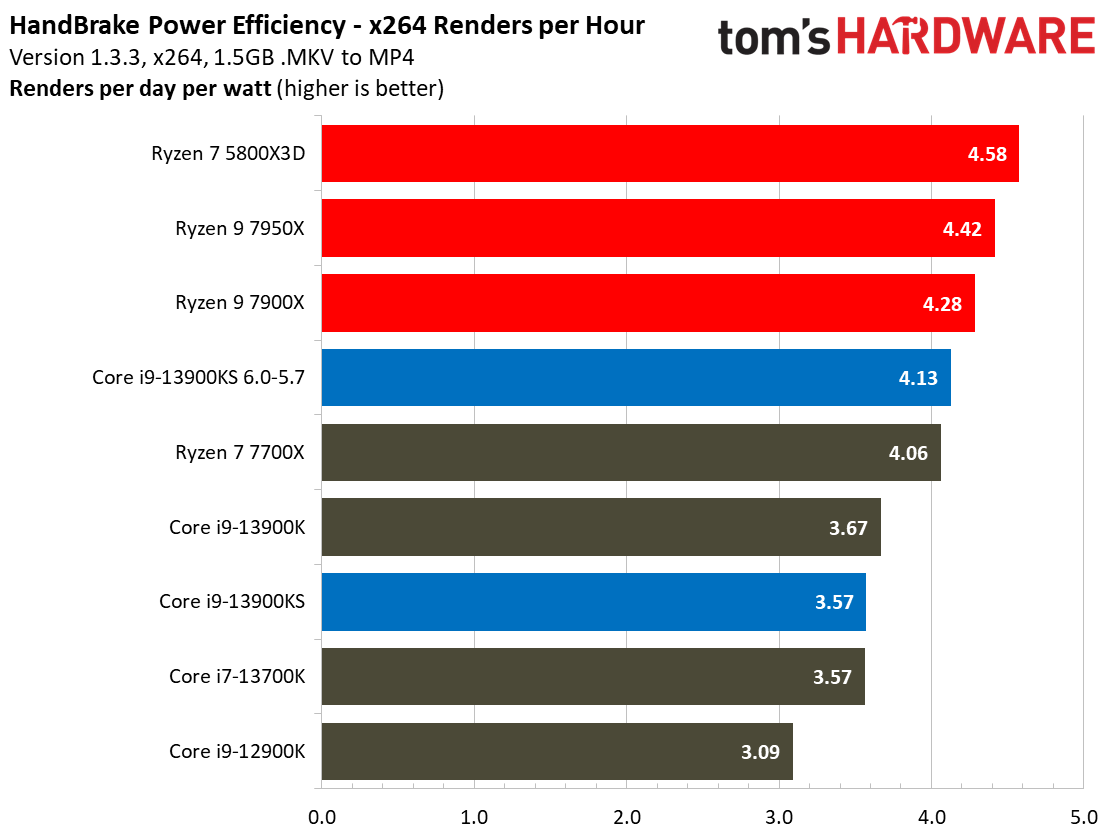

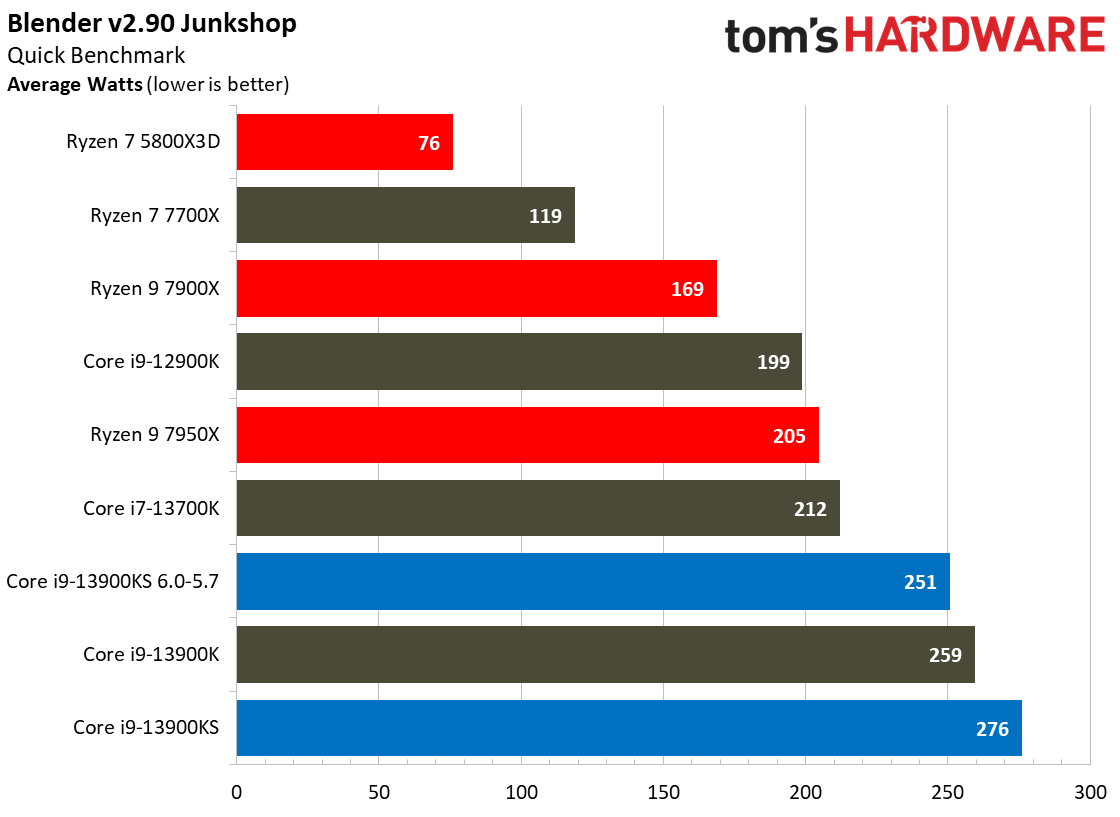

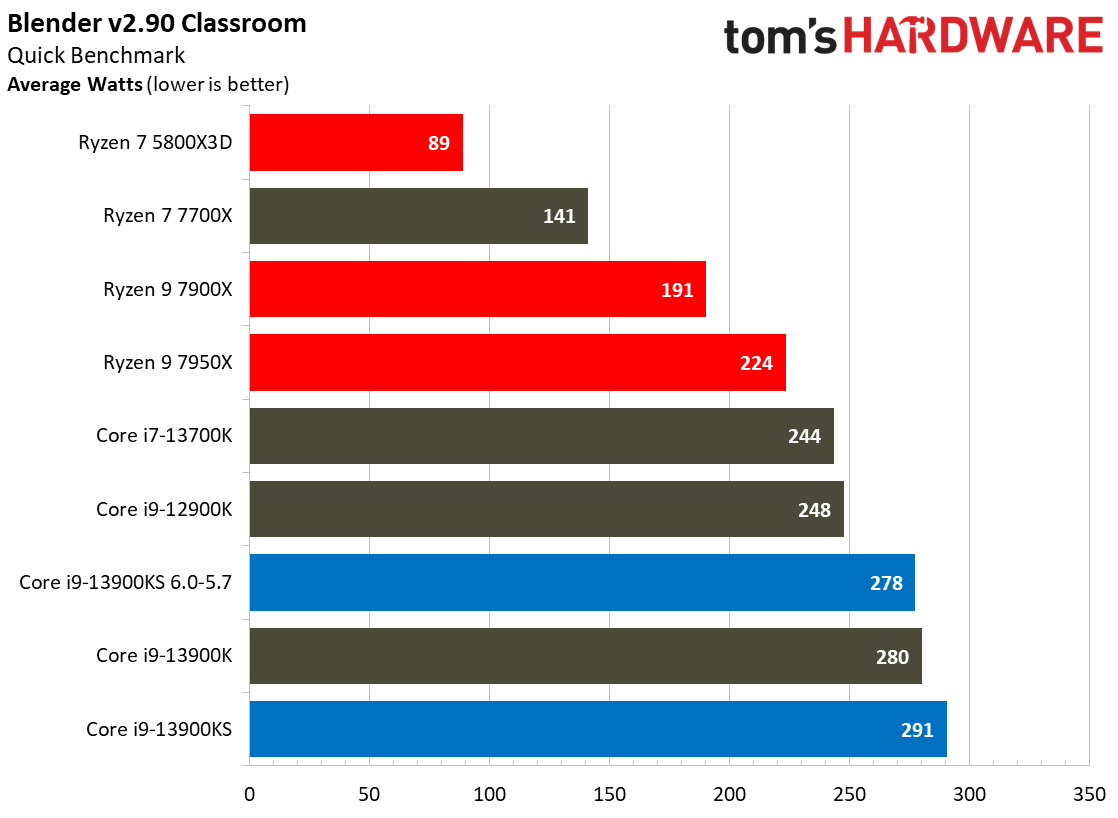

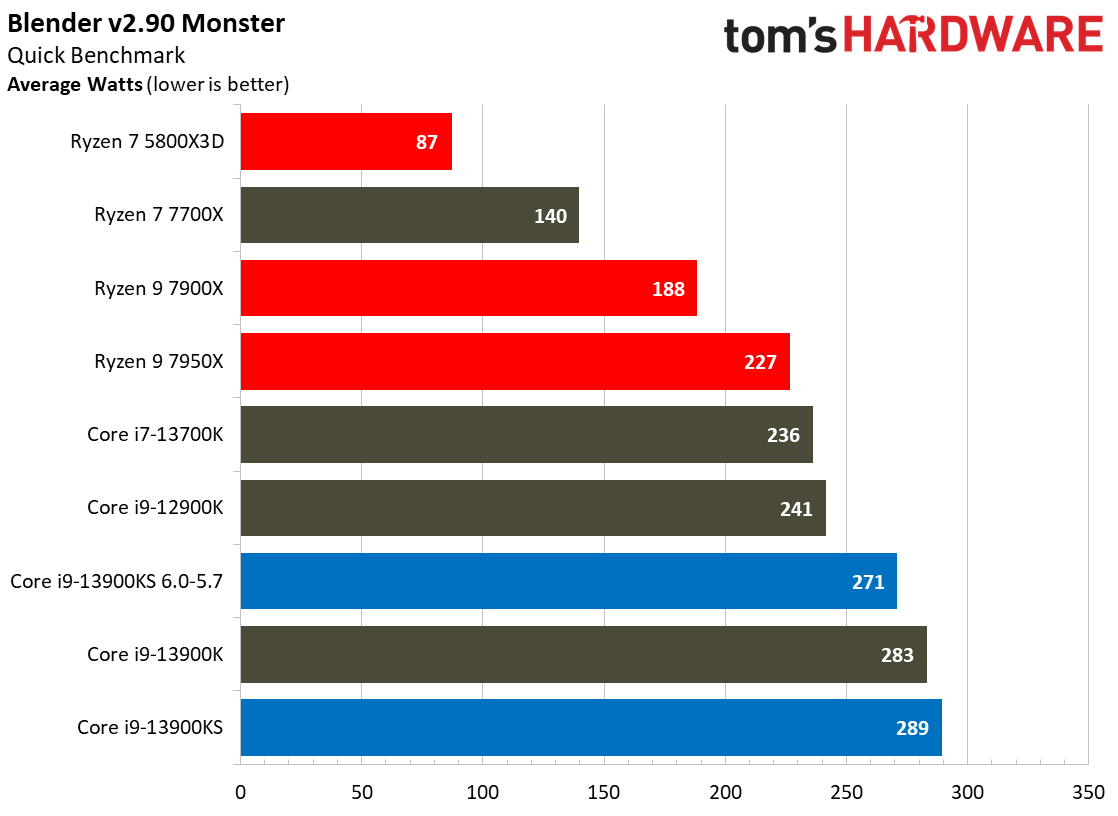

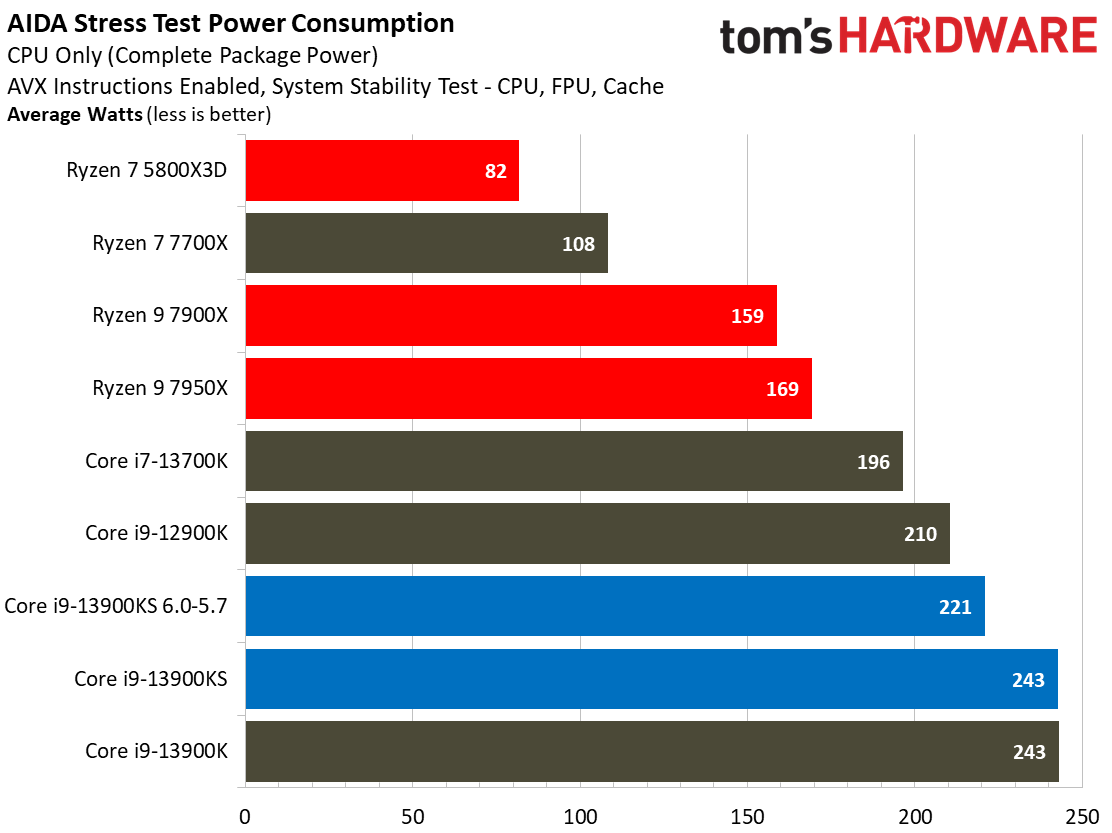

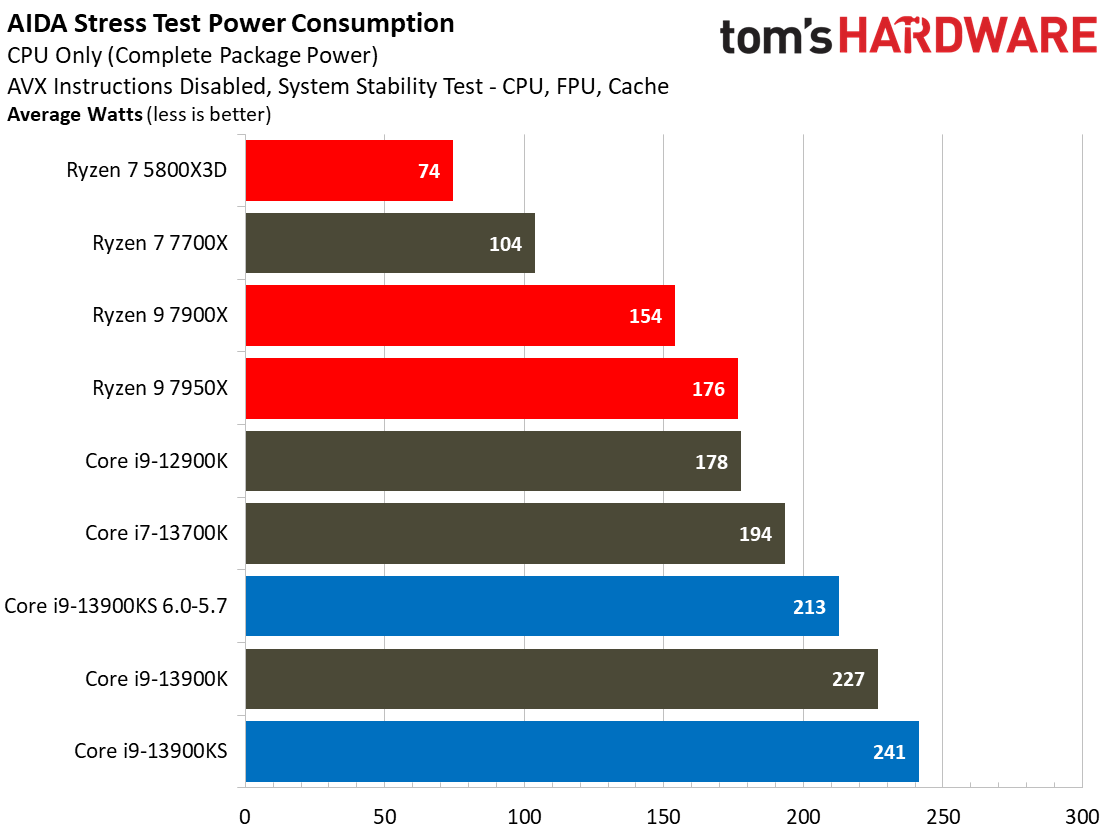

These power measurements show that the Core i9-13900KS will rarely reach its 320W power limit with standard applications — there simply isn't enough thermal headroom due to the difficulty of dissipating this amount of heat from such a small area (thermal density).

More exotic cooling, such as custom water loops or sub-ambient cooling, would likely allow the chip to pull more power within its 100°C thermal limit, albeit for little practical performance gain.

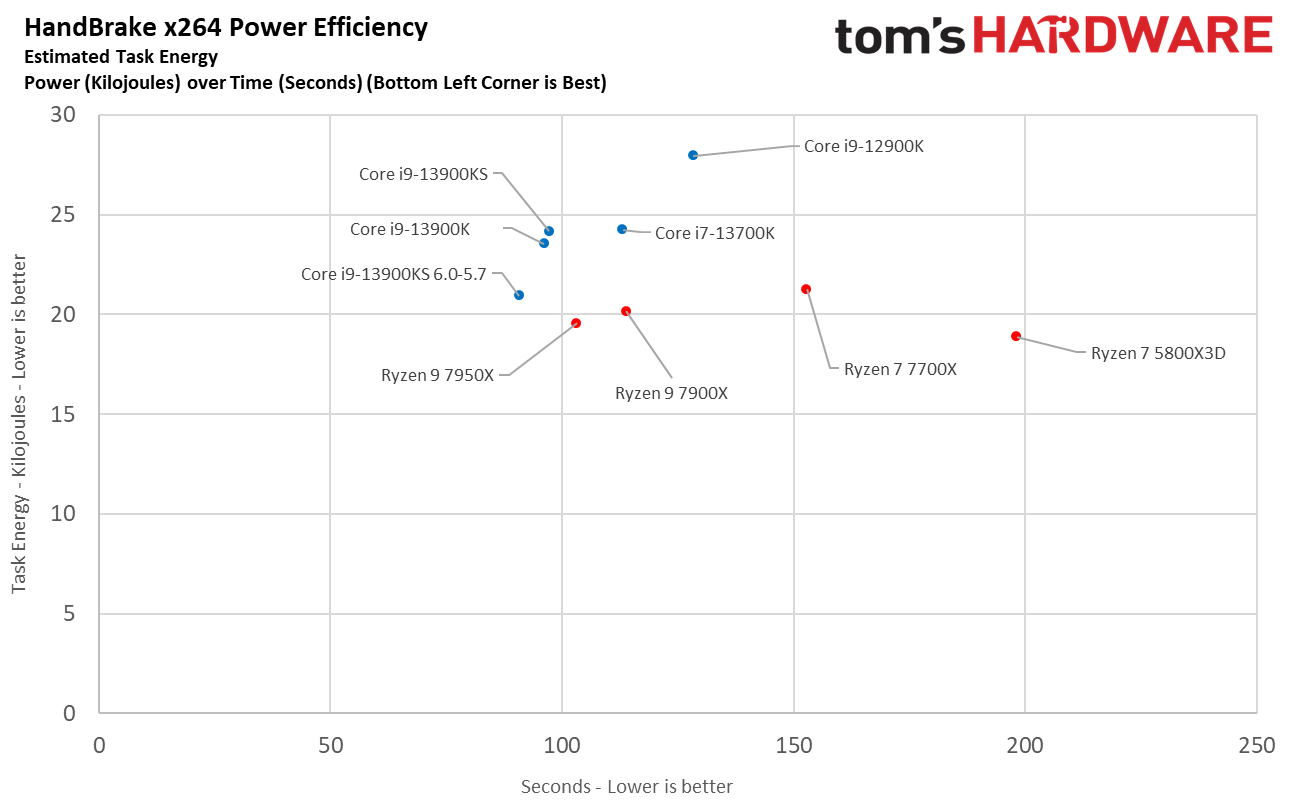

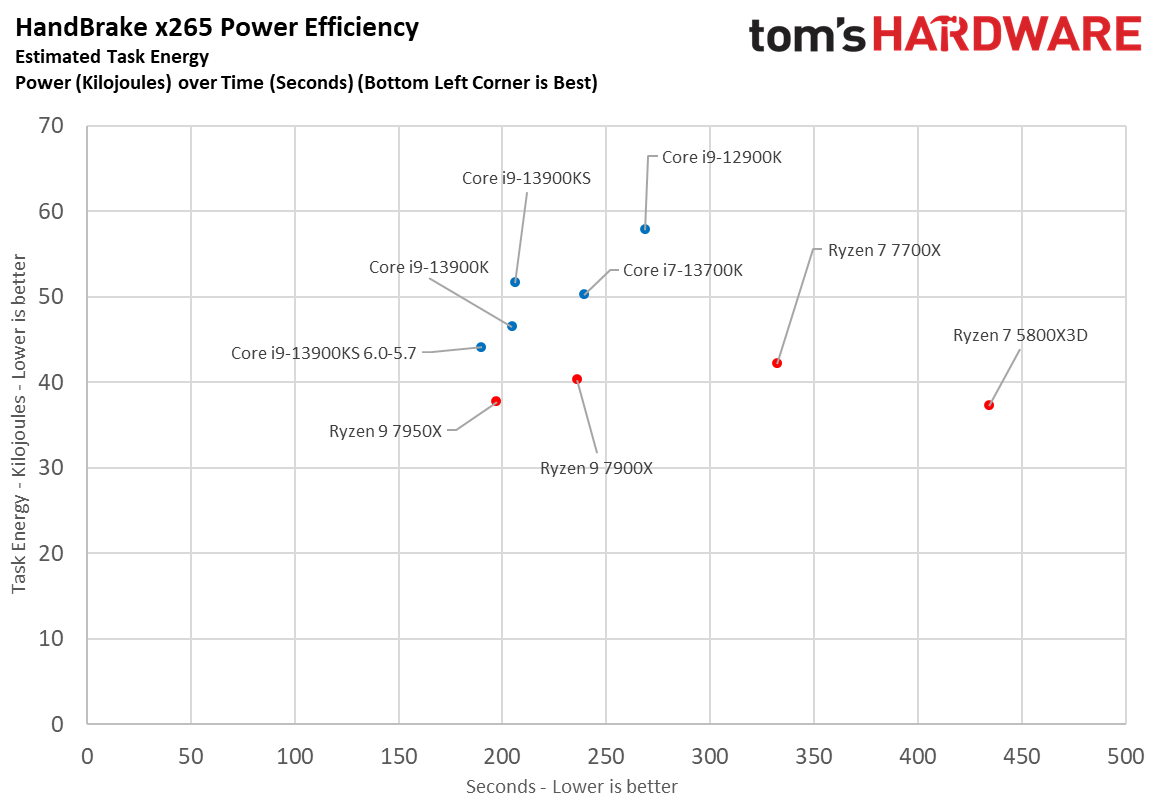

Here we take a slightly different look at power consumption by calculating the cumulative energy required to complete the x264 and x265 HandBrake workloads. We plot this 'task energy' value in kilojoules on the left (vertical) axis of the chart.
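As a rough illustration of how a task energy figure can be derived, the sketch below integrates sampled package power over a run with the trapezoidal rule and converts joules to kilojoules. The function name `task_energy_kj`, the one-second sampling interval, and the sample values are hypothetical; this is not our actual logging pipeline.

```python
def task_energy_kj(timestamps_s, power_w):
    """Integrate sampled power (watts) over time (seconds) with the trapezoidal rule.

    Returns energy in kilojoules (1 kJ = 1,000 J = 1,000 W*s).
    """
    if len(timestamps_s) != len(power_w):
        raise ValueError("timestamps and power samples must have the same length")
    energy_j = 0.0
    for i in range(1, len(timestamps_s)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        # Average the two neighboring samples over the interval (trapezoid area).
        energy_j += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return energy_j / 1000.0


# Hypothetical example: a constant 250 W draw for 60 seconds, sampled once per second.
times = list(range(61))  # 0..60 s
watts = [250.0] * len(times)
print(task_energy_kj(times, watts))  # 15.0
```

Because the workload is fixed, a chip that finishes sooner at the same average power accumulates less energy, which is why both axes matter on the chart.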

These workloads consist of a fixed amount of work, so we can plot task energy against the time required to finish the job (bottom axis), which shows both efficiency and performance on a single chart.

Remember that faster compute times and lower task energy are both ideal, so the processors that fall closest to the bottom-left corner of the chart are the best.
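One way to formalize "closest to the bottom-left corner" is to keep only the chips that no other chip matches or beats on both axes at once (a Pareto front). The sketch below does that for (time, energy) pairs; the `Result` type, the `pareto_front` helper, and the CPU names and numbers are placeholders for illustration, not results from this review.

```python
from typing import NamedTuple


class Result(NamedTuple):
    name: str
    time_s: float     # time to finish the fixed workload (lower is better)
    energy_kj: float  # task energy for the workload (lower is better)


def pareto_front(results):
    """Return results not dominated by any other result.

    A result is dominated if another result is no worse on both time and
    energy and strictly better on at least one of them.
    """
    front = []
    for r in results:
        dominated = any(
            o.time_s <= r.time_s and o.energy_kj <= r.energy_kj
            and (o.time_s < r.time_s or o.energy_kj < r.energy_kj)
            for o in results
        )
        if not dominated:
            front.append(r)
    # Sort fastest-first so the front reads left to right, like the chart.
    return sorted(front, key=lambda r: r.time_s)


# Placeholder data for illustration only.
sample = [
    Result("CPU A", 100.0, 20.0),
    Result("CPU B", 90.0, 25.0),
    Result("CPU C", 110.0, 30.0),  # slower and hungrier than CPU A
]
print([r.name for r in pareto_front(sample)])  # ['CPU B', 'CPU A']
```

Chips on the front represent different trade-offs (faster versus more frugal); anything off the front is beaten outright by at least one other chip.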

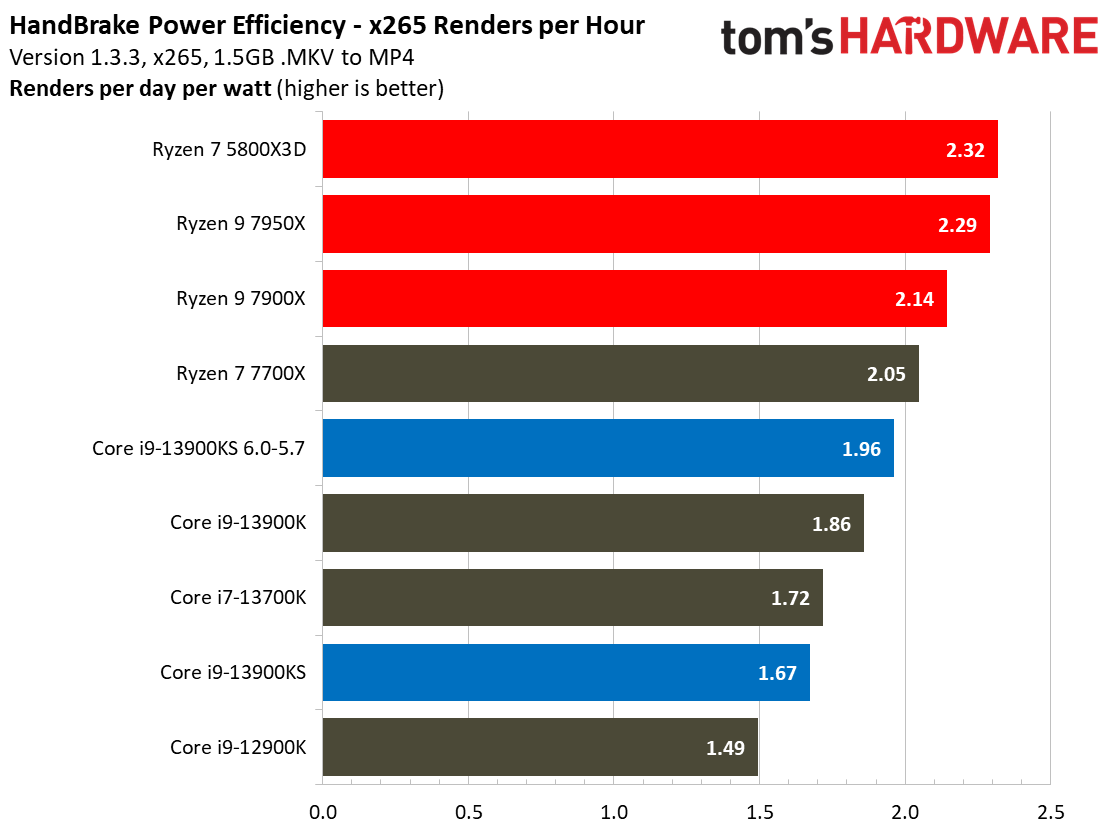

The 13900KS draws significantly more power than the 13900K in these tests, but that doesn't deliver much of a performance gain: increasing power consumption when the chip is already near the top of the voltage/frequency curve is an incredibly inefficient way to gain very little extra performance.
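The standard first-order model for CMOS dynamic power helps explain why the last few hundred megahertz are so expensive. It is an approximation that ignores leakage, and the symbols are generic rather than measured values for this chip:

$$
P_{\text{dyn}} \approx \alpha \, C \, V^{2} \, f
$$

Here $\alpha$ is the activity factor, $C$ the switched capacitance, $V$ the core voltage, and $f$ the clock frequency. Near the top of the voltage/frequency curve, each frequency step also needs a voltage increase, so power grows roughly with $V^{2} f$ while performance grows at best linearly with $f$. For example, a 5% clock increase that requires 5% more voltage raises dynamic power by a factor of about $1.05^{3} \approx 1.16$, or roughly 16%.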

Intel Core i9-13900KS Overclocking and Test Setup

- Intel Core i9-13900KS @ 6.0/5.7 GHz: Turbo multipliers set to 6 GHz on two p-cores and 5.7 GHz all-p-core, 4.4 GHz e-core, 1.29V vCore, DDR5-6800 XMP 3.0

- Intel Core i9-13900K @ 5.6 GHz: 5.6 GHz all-p-core, 4.4 GHz e-core, 1.32V vCore, DDR5-6800 XMP 3.0

- AMD PBO Configs: Precision Boost Overdrive (Motherboard), Scalar 10X, DDR5-6000 EXPO

We used DDR5 memory for all chip testing in this article. Notably, if you opt for DDR4 with the Raptor Lake processors, you'll lose a few percentage points of performance on average, based on our previous testing. The overclocked Ryzen configs (marked as 'PBO' in the charts) use DDR5-6000 memory, Precision Boost Overdrive, and a Scalar 10X setting.
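For context on the memory settings, theoretical peak bandwidth scales directly with the transfer rate: a DDR4 or DDR5 DIMM has a 64-bit (8-byte) data path per channel. The sketch below assumes a dual-channel configuration, as on the consumer LGA1700, AM5, and AM4 platforms used here; the helper name `peak_bandwidth_gbs` is hypothetical. Peak bandwidth is only an upper bound, and application performance rarely scales linearly with it.

```python
def peak_bandwidth_gbs(transfer_rate_mts, channels=2, bytes_per_transfer=8):
    """Theoretical peak bandwidth in GB/s (decimal, 1 GB = 1e9 bytes).

    transfer_rate_mts: the number in a 'DDR5-6000' style label, in megatransfers/s.
    bytes_per_transfer: 8 bytes for a 64-bit DIMM channel.
    """
    return transfer_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


for label, rate in [("DDR4-3200", 3200), ("DDR5-5600", 5600), ("DDR5-6800", 6800)]:
    print(f"{label}: {peak_bandwidth_gbs(rate):.1f} GB/s")
# DDR4-3200: 51.2 GB/s
# DDR5-5600: 89.6 GB/s
# DDR5-6800: 108.8 GB/s
```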

We overclocked the Core i9-13900KS via turbo multipliers to 6 GHz on two p-cores and 5.7 GHz when more than two cores are active, while dialing in a 4.4 GHz all-core overclock on the e-cores. The chip only required a 1.29V vCore to sustain these frequencies. You can see the heat output and more overclocking details in our stress tests in the thermal section on the prior page.
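On Intel desktop chips, the core clock is the base clock (BCLK, nominally 100 MHz) multiplied by a ratio, and turbo ratios can be set per number of active cores. The sketch below models the overclock described above as a lookup from active p-core count to target frequency, assuming the stock 100 MHz BCLK. The table and function names are hypothetical and do not correspond to any BIOS setting names.

```python
BCLK_MHZ = 100.0  # assumed stock base clock

# Hypothetical per-active-core turbo ratio table for the overclock described above:
# 60x for one or two active p-cores, 57x when more than two are active.
P_CORE_RATIOS = {1: 60, 2: 60}
ALL_CORE_RATIO = 57
E_CORE_RATIO = 44  # 4.4 GHz all-core on the e-cores


def p_core_freq_ghz(active_cores):
    """Return the target p-core clock in GHz for a given number of active p-cores."""
    if active_cores < 1:
        raise ValueError("at least one core must be active")
    ratio = P_CORE_RATIOS.get(active_cores, ALL_CORE_RATIO)
    return ratio * BCLK_MHZ / 1000.0


for n in (1, 2, 3, 8):
    print(n, "active p-cores:", p_core_freq_ghz(n), "GHz")
print("e-cores:", E_CORE_RATIO * BCLK_MHZ / 1000.0, "GHz")
```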

We didn't use Intel's "Extreme Power Profile" for our stock Intel tests; instead, we stuck with our standard policy of allowing the motherboard to exceed the recommended power limits, provided the chip remains within warrantied operating conditions. This means our power settings exceed even the 'Extreme' recommendations. Almost all enthusiast-class motherboards ignore the power limits by default anyway, so our completely removed power limits reflect the out-of-box experience. Naturally, these lifted power limits equate to more power consumption and, thus, more heat.
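To show what removing the power limits changes, here is a deliberately simplified model of Intel's two-level power limits: a long-term limit (PL1) checked against an exponentially weighted moving average of package power with time constant tau, and a short-term cap (PL2). Real firmware behavior is more complex; the function name and the example limit values are hypothetical. Setting both limits to infinity reproduces the unlimited, out-of-box motherboard behavior described above.

```python
import math


def simulate_package_power(requested_w, pl1_w, pl2_w, tau_s, dt_s=1.0):
    """Simplified PL1/PL2 model (an assumption, not Intel's exact algorithm).

    Each step, the chip may draw up to PL2 while the moving average of
    delivered power is below PL1; otherwise it is clamped to PL1.
    """
    alpha = dt_s / tau_s  # EWMA smoothing factor for one time step
    ewma = 0.0
    delivered = []
    for req in requested_w:
        cap = pl2_w if ewma < pl1_w else pl1_w
        p = min(req, cap)
        ewma += alpha * (p - ewma)
        delivered.append(p)
    return delivered


load = [300.0] * 100  # a hypothetical sustained 300 W request for 100 s

limited = simulate_package_power(load, pl1_w=100.0, pl2_w=200.0, tau_s=10.0)
unlimited = simulate_package_power(load, pl1_w=math.inf, pl2_w=math.inf, tau_s=10.0)

print(limited[:8])        # boosts at PL2 briefly, then settles at PL1
print(unlimited == load)  # True: no clamping at all
```

With limits in place, sustained power falls back to PL1 once the moving average catches up; with both limits lifted, the chip draws whatever the workload and cooling allow, which is why heat output rises.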

Microsoft recently advised gamers to disable several security features to boost gaming performance. As such, we disabled Secure Boot, virtualization support, and fTPM/PTT for maximum performance. You can find further hardware details in the table below.

| Component | Configuration |
|---|---|
| Intel Socket 1700 DDR5 (Z790) | Core i9-13900KS, i9-13900K, i7-13700K, i5-13600K |
| Motherboard | MSI MPG Z790 Carbon WiFi |
| RAM | G.Skill Trident Z5 RGB DDR5-6800 (Stock: DDR5-5600; OC: XMP DDR5-6800) |
| AMD Socket AM5 (X670E) | Ryzen 9 7950X, Ryzen 9 7900X, Ryzen 7 7700X |
| Motherboard | ASRock X670E Taichi |
| RAM | G.Skill Trident Z5 Neo DDR5-6000 (Stock: DDR5-5200; OC/PBO: DDR5-6000) |
| AMD Socket AM4 (X570) | Ryzen 9 5950X, 5900X, 5700X, 5600X, 5800X3D |
| Motherboard | MSI MEG X570 Godlike |
| RAM | 2x 8GB Trident Z Royal DDR4-3600 (Stock: DDR4-3200; OC/PBO: DDR4-3800) |
| All Systems | 2TB Sabrent Rocket 4 Plus, Silverstone ST1100-TI, Open Benchtable, Arctic MX-4 TIM, Windows 11 Pro |
| Gaming GPU | Asus RTX 4090 ROG Strix OC |
| ProViz GPU | Gigabyte GeForce RTX 3090 Eagle |
| Application GPU | Nvidia GeForce RTX 2080 Ti FE |
| Cooling | Corsair H115i, Corsair H150i |
| Overclocking note | All configurations with overclocked memory also have tuned core frequencies and/or lifted power limits. |

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

Brian D Smith: Less 'overclocking' and more 'underclocking' articles, please.

That would be helpful for the ever-growing segment that does NOT need the testosterone rush of having the 'fastest' ... and wants more info on logical underclocking to... well, do things like get the most out of a CPU without the burden of water-cooling, its maintenance, and the chance of screwing up their expensive systems.

These CPUs and new systems would be flying off the shelves much faster than they are if only people did not have to take such measures for all the heat they generate. It's practically gone from being able to 'fry an egg' on a CPU to 'roasting a pig'. :(

bit_user: Seems like the article got a new comment thread, somehow. The original thread was:

https://forums.tomshardware.com/threads/intel-core-i9-13900ks-review-the-worlds-first-6-ghz-320w-cpu.3794179/

I'm guessing that's because it had previously been classified as a News article and is now tagged as a Review.

bit_user: Thanks for the thorough review, @PaulAlcorn!

Some of the benchmarks are so oddly lopsided in Intel's favor that I think it'd be interesting to run them in a VM and trap the CPUID instruction. Then, have it mis-report the CPU as a Genuine Intel of some Skylake-X vintage (because it also had AVX-512) and see if you get better performance than the default behavior.

For the benchmarks that favor AMD, you could try disabling AVX-512, to see if that's why.

Whatever the reason, it would be really interesting to know why some benchmarks so heavily favor one CPU family or another. I'd bet AMD and Intel are both doing this sort of competitive analysis in their respective labs.
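To make the concern concrete, this is the kind of dispatch logic the proposed CPUID experiment would expose: a vendor-gated dispatcher takes the fast path only for a "GenuineIntel" vendor string, while a feature-based one looks only at instruction-set support. Both functions and the code-path names are hypothetical illustrations, not code from any real library or benchmark.

```python
def vendor_gated_dispatch(vendor, features):
    """Pick a code path, but only trust feature flags on one vendor (the suspect pattern)."""
    if vendor == "GenuineIntel" and "avx512f" in features:
        return "avx512_path"
    if vendor == "GenuineIntel" and "avx2" in features:
        return "avx2_path"
    return "generic_path"  # every other vendor falls through here


def feature_based_dispatch(vendor, features):
    """Pick a code path from instruction-set support alone; the vendor is ignored."""
    if "avx512f" in features:
        return "avx512_path"
    if "avx2" in features:
        return "avx2_path"
    return "generic_path"


zen4 = ("AuthenticAMD", {"avx2", "avx512f"})
print(vendor_gated_dispatch(*zen4))   # generic_path
print(feature_based_dispatch(*zen4))  # avx512_path
# Spoofing the vendor string, as in the proposed VM experiment, flips the result:
print(vendor_gated_dispatch("GenuineIntel", zen4[1]))  # avx512_path
```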

letmepicyou (replying to bit_user):

Well, we've seen video card manufacturers code drivers to give inflated benchmark results in the past. Is it so outlandish to think Intel or AMD might make alterations to their microcode or architecture in favor of high benchmark scores rather than overall speed?

bit_user (replying to letmepicyou):

Optimizing the microcode for specific benchmarks is risky, because you don't know that it won't blow up in your face with some other workload that becomes popular in the next year.

That said, I was wondering whether AMD tuned its branch predictor on things like 7-zip's decompression algorithm, or if it just happens to work especially well on it.

To be clear, what I'm most concerned about is that some software is rigged to work well on Intel CPUs (or AMD, though less likely). Intel has done this before in some of its first-party libraries (Math Kernel Library, IIRC). And yes, we've seen games use libraries that effectively do the same thing for GPUs (who can forget when Nvidia had a big lead in tessellation performance?).

hotaru251: Intel: "We need a faster chip."

eng 1: what if we make it hotter & uncontrollably force power into it?

eng 2: what if we try something else that doesn't involve guzzling power as the answer?

Intel: eng 1, you're a genius!

bit_user (replying to hotaru251):

Part of the problem might be Intel's manufacturing node. That could limit the solution space for delivering competitive performance, especially when the chip also needs to be profitable. Recall that Intel 7 is not EUV, while TSMC has been using EUV since N7.

froggx (replying to Brian D Smith):

Intel has, at least once in the past, disabled the ability to undervolt. Look up the "Plundervolt" vulnerability. Basically, around 7th- and 8th-gen CPUs, it was discovered that under very specific conditions most users would never encounter, undervolting allowed some kind of exploit. The solution: push a Windows update preventing the CPU from being set below stock voltage. I have a Kaby Lake in a laptop that was undervolted by a good 0.2V, which knocked a good 10°C off temps. One day it started running hotter and, surprise! I can still overvolt it just fine, though; I guess that's what matters for laptops. Essentially, as useful as undervolting can be, Intel doesn't see it as worthwhile compared to "security."

TerryLaze (replying to bit_user):

Being able to withstand higher extremes is a sign of better manufacturing, not worse.

Intel CPUs can take a huge amount of W and also of Vcore without blowing up, these are signs of quality.

TSMC getting better is how AMD was able to double the W in this generation.

You don't have to push it just because it is pushable.

Video: https://www.youtube.com/watch?v=jv3uZ5Vlnng