Tesla scraps custom Dojo wafer-level processor initiative, dismantles team — Musk to lean on Nvidia and AMD more

Set to increase reliance on AMD and Nvidia hardware for now.

Tesla's custom Dojo wafer-level processor has always been an ambitious and promising hardware project, but despite early success with the company's bespoke chip, Musk has continued to use Nvidia GPUs alongside Dojo. Now the Dojo effort itself is reportedly coming to an end: Tesla has decided to dismantle its Dojo supercomputer program, reassign its remaining staff to other computing projects, and turn more heavily to outside technology providers such as AMD and Nvidia, according to Bloomberg. Tesla has not formally confirmed the Dojo shutdown.

Elon Musk, chief executive of Tesla, recently ordered the Dojo effort to be wound down entirely, according to the report. As a result, Peter Bannon, the head of the Dojo project at Tesla, is reportedly set to leave the company. About 20 members of the team have already left in recent weeks to join DensityAI, a startup created by former Tesla executives, according to Bloomberg. If the report is accurate, those who remain will be moved to other data center and computing roles within Tesla.

The company reportedly plans to deepen its partnerships with major suppliers of AI processors for data centers: Nvidia will keep supplying GPUs to Tesla, but Tesla will increase its reliance on AMD. TSMC will produce the AI5 processor for next-generation Tesla vehicles starting in 2025, whereas Samsung Foundry will produce its successor, the AI6 processor, sometime towards the end of the decade.

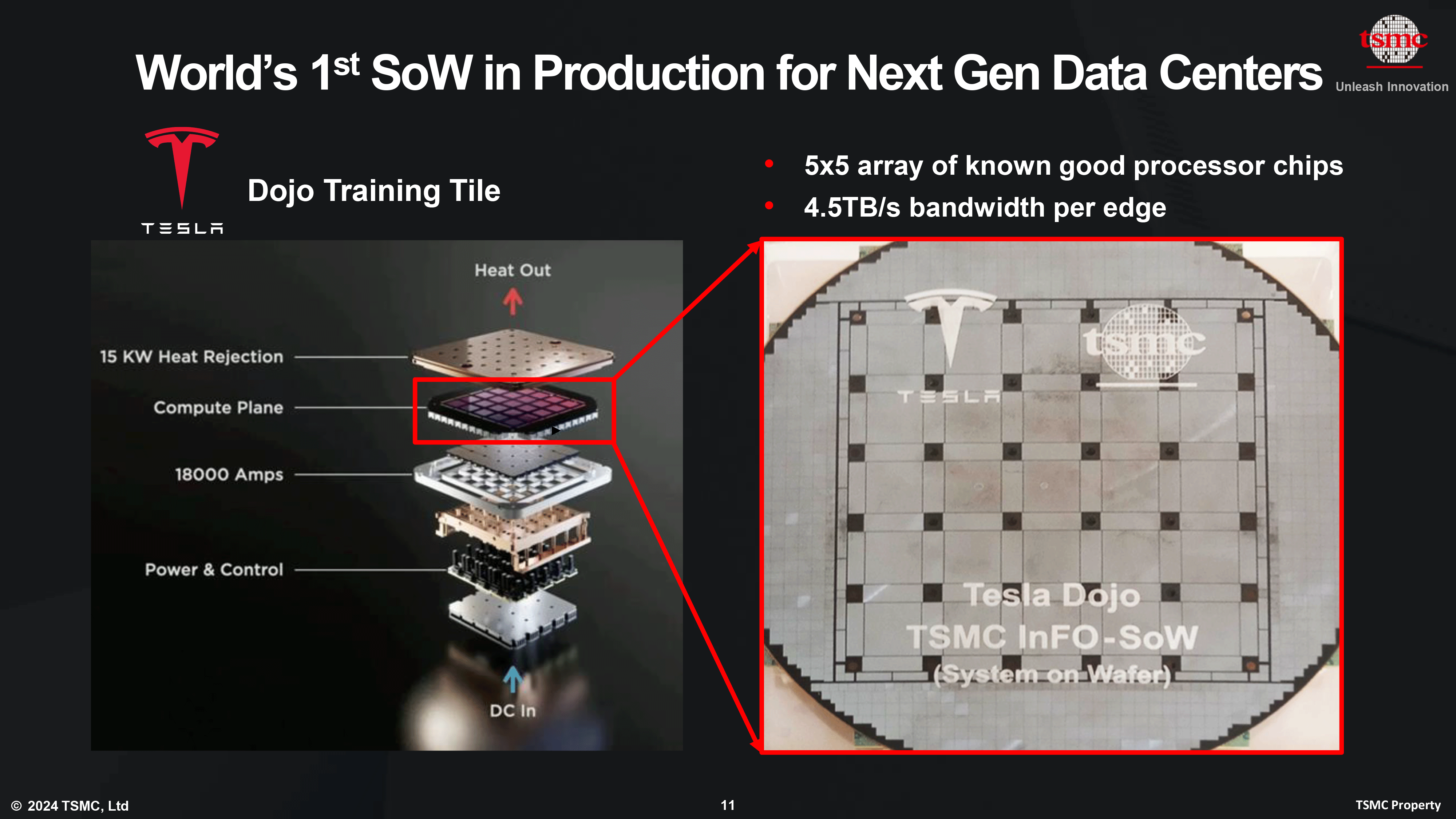

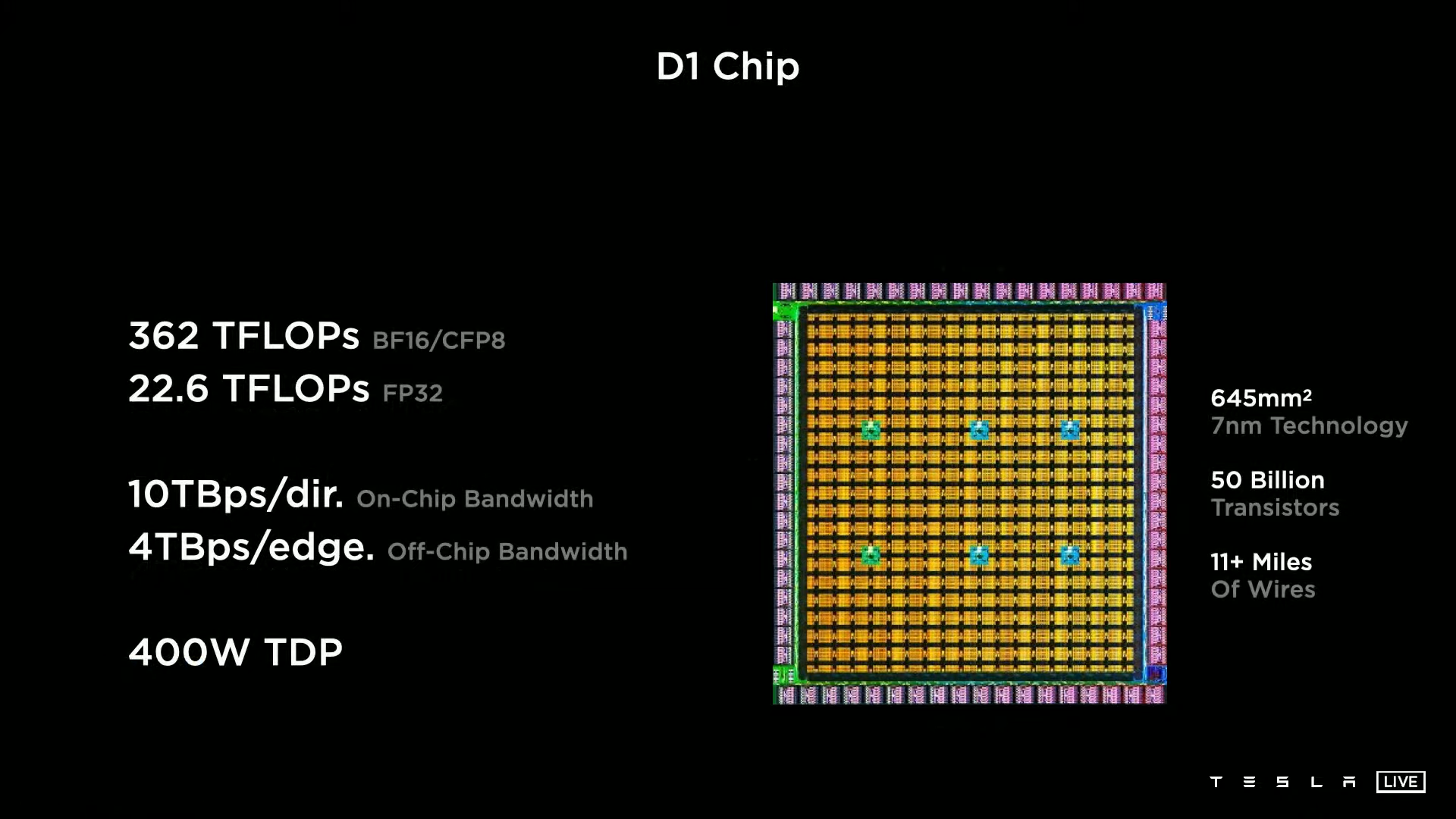

Tesla officially started its Dojo supercomputer project in 2021 and attempted to build wafer-scale processors for AI training. The company planned to use its own cluster built on its own proprietary hardware to train AI powering the full self-driving (FSD) capability of its cars and the Optimus humanoid robot. However, Tesla never completely relied on Dojo supercomputers and used third-party hardware as well.

"We are pursuing the dual path of Nvidia and Dojo," Musk said at an earnings call in 2023. "But I would think of Dojo as a long shot. It is a long shot worth taking because the payoff is potentially very high."

However, Dojo had its own limitations when it came to memory capacity, and servers based on a wafer-scale processor were hard to produce as they used a lot of proprietary components. In fact, the roll-out of Dojo 2 hardware has been pretty slow, and the company expected to have a cluster equivalent to 100,000 of Nvidia's H100 GPUs up and running in 2026. In other words, Tesla would only reach in 2026 the scale that xAI's Colossus had already reached in fall 2024, a gap of two years. Recently, Musk implied that he would like Tesla cars' hardware and Dojo supercomputer hardware to run on the same architecture.

"I think about Dojo 3 and the AI6 as the first [converged architecture designs]," Musk said in a July 23 earnings call (via Investing.com). "It seems like intuitively, we want to try to find convergence there where it is basically the same chip that is used where we use, say, two of them in a car or an Optimus and maybe a larger number on a [server] board, a kind of 5 - 12 on a board or something like that. […] That sort of seems like intuitively the sensible way to go."


If Tesla follows Musk's direction, it will continue developing its own hardware for both edge devices and data centers, this time based on a converged architecture that avoids relying on exotic design decisions and proprietary components. Then again, this has not been formally confirmed yet.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

autobahn

DOJO wafer got DOGE'ed! I wonder what this means for a company like Cerebras that is working on this same tech. Is there something flawed with the approach of one giant wafer?

Notton

autobahn said: Is there something flawed with the approach of one giant wafer?

Yeah, pretty much everything.

It's using the entire wafer, so there's no yield rate. Instead it's failure rate, or how much of the silicon can be used.

It's not just the print, but how well it's bonded to a substrate.

How to deliver power, how to keep it running cool, how to prevent damage from warping when heated.

I'm sure there's more, but bigger chips are generally troublesome compared to small chips. It's why AMD went with chiplets, which worked fine for CPUs/APUs but failed for GPUs.

Bikki

Elon's reasoning is like this:

- Dojo can't match Nvidia hardware and software any time soon

- The cost of developing this is through the roof, and Tesla is not big enough for it

- The consumer-facing Tesla doesn't need to be a server chip giant

- The inference chip is good because nobody produces a better edge vision inference chip at the moment, so it will be the sole focus for now

Bikki

Notton said: Yeah, pretty much everything.

Very insightful, thank you. I still think the main reason this got the axe is development / usability. (Looking at Cerebras, they're still going strong.) With very strong alternative hardware for training (Nvidia) and low usage (only 100k H100s deployed for Tesla vs. 500k for xAI), the sky-high development cost is just not worth it.

abufrejoval

I've been expecting this for more than two years now, and for two main reasons:

Scale: while DOJO's variable precision design seemed to make it much more generic than many more popular ones, the whole thing really was only made for a single in-house job at Tesla. And no single job can sustain the cost of current chip designs: you not only need to sell outside, you need many product shoulders to lean on. I wonder how the latter will turn out for Cerebras, but I can't see them lasting for many generations, either.

Musk's fixation on vision-only and rejection of sensor fusion: yes, humans don't have LIDAR or radar, but we still do sensor fusion with sound, inner-ear velocimeters, our skin and even our stomachs. And of course we carry a vastly richer model of the physical world within us than can be recorded through cameras alone. Babies may have a richer understanding of physics than DOJO ever did.

Too bad for the environment that those chips will go into a landfill without delivering much use, but at least the science seems to have found another place to grow.

pointa2b

fiyz said: Isn't it great being a CEO, and spending other people's money on failed ventures?

He's out there doing things and taking risks, and more often than not they are successful (hence his net worth).

wussupi83

Sad to see only because it would've been nice to see Nvidia have a bit of competition. But I understand this may have been a niche application anyway. Glad to see Tesla is open to adopting more AMD hardware though. Hopefully their efforts help bring more hardware and software alternatives to the market.