Nvidia's business model hinges on profitable AI use cases, says SK Group boss [Updated]

Industry concerned over the imbalance between AI development costs and revenues generated.

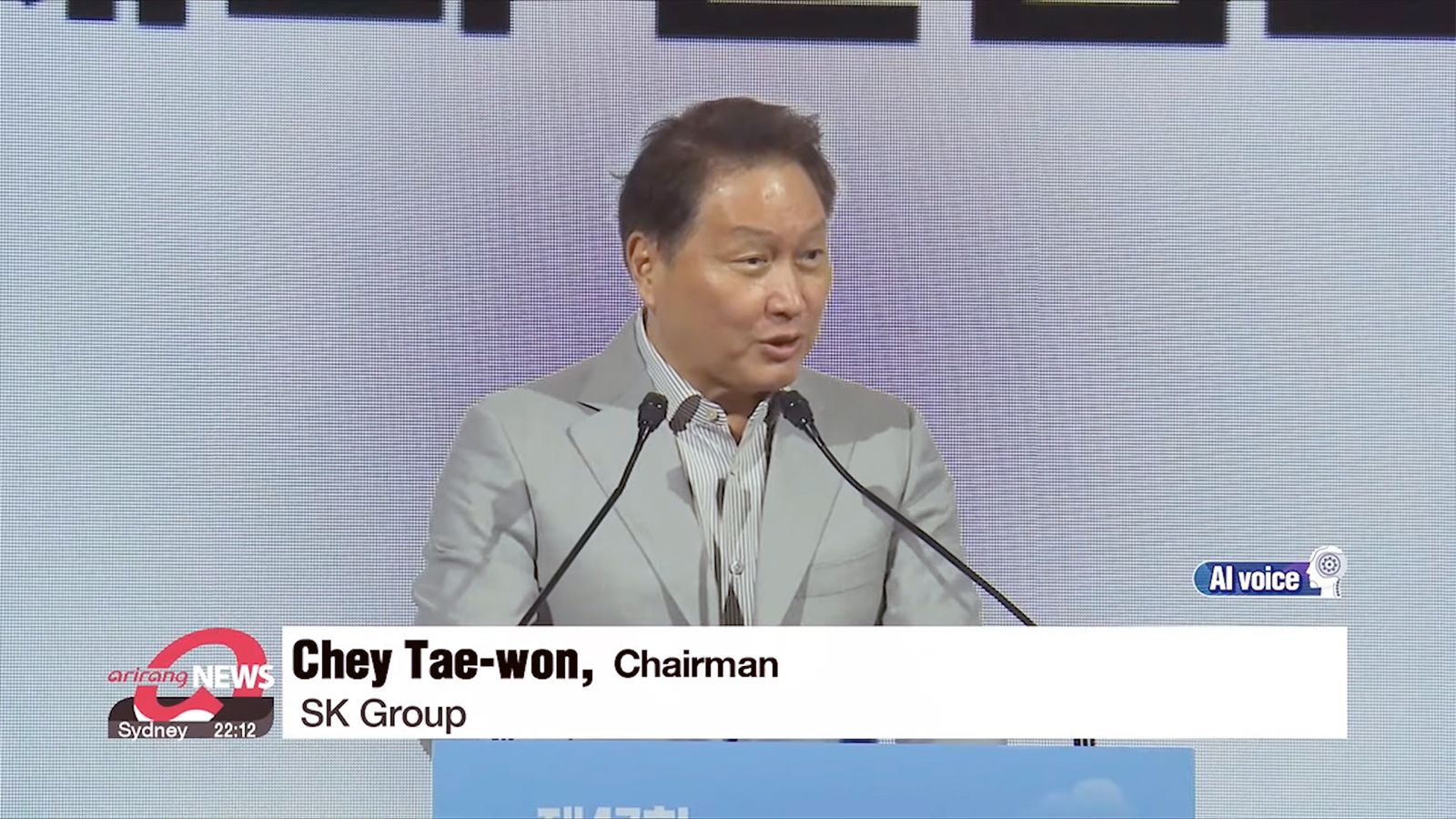

EDIT 7/23/2024 7:30am PT: SK hynix informed us that the source material misquoted Chey Tae-won. We have adjusted the text below to reflect the correction.

Amended article:

Chey Tae-won, chairman of the Korea Chamber of Commerce and Industry (KCCI) and SK Group, told attendees at the recent 47th KCCI Jeju Forum that the current AI boom is similar to the California Gold Rush of the mid-1800s. Hence, Nvidia's commanding lead hinges on profitable AI use cases.

Chey expects that AI chip maker Nvidia will continue to be among the top corporations by capitalization over the next three years, much like how pickax and jeans makers prospered during the peak of the gold rush. However, "When there was no more gold, the sellers became unable to sell pickaxes," the Korea Times quotes Chey as saying during the forum. He continued, "Without making money, the AI boom could vanish, just as the gold rush disappeared."

Nvidia became the world's most valuable company last month, propelled by its record sales of data center GPUs in 2023. While its stock price has already experienced some market correction, it is still the third most valuable corporation in the world. With no signs that AI development is slowing down, we can expect Nvidia to retain its lead for the foreseeable future.

However, the cost of training the next generation of LLMs is increasing exponentially. Anthropic CEO Dario Amodei said that AI models currently undergoing training cost $1 billion, with $100 billion models expected to arrive as early as 2025. The increasing costs of AI development have Goldman Sachs concerned, with some of its staff saying that AI is overhyped and asking whether the massive investments in it will ever pay off.

We believe that AI is now a part of our reality, but unless businesses find a profitable use for this tool, the AI race could turn into a bubble that implodes. If that happens, investment in the specialized hardware designed to run these workloads will take a nosedive, potentially taking Nvidia with it.

Nevertheless, we do not expect Team Green to fold just because of this scenario. After all, AI wasn't even around when Jensen Huang thought of Nvidia. The company could always fall back on the gaming industry as its primary customer base, and its cards will always find use in other applications.

The real threat to Nvidia would be its competitors. Although AMD makes some decent GPUs, it lags in supersampling technology, with some considering FSR 3.0 to still be miles behind DLSS 3.5. It is quickly catching up, though, and Intel is also making inroads in the GPU market with its Intel Arc GPUs and XeSS supersampling technology.

Individuals and institutions will keep on buying Nvidia's products as long as it has a product that delivers unparalleled performance — but its competitors are not asleep at the wheel. And with AI companies like Microsoft, Amazon, Google, and OpenAI investing in their own hardware research, there might come a time when Nvidia will be left in the dust for AI acceleration.

Jowi Morales is a tech enthusiast with years of experience working in the industry. He’s been writing with several tech publications since 2021, where he’s been interested in tech hardware and consumer electronics.

ET3D

NVIDIA's business model is likely to fall apart in any case. AI inference, and even training, can be done more efficiently than with a general purpose accelerator, and is easier to create. Although NVIDIA has a head start, it might not be able to hold it against the competition.

bit_user

> ET3D said: NVIDIA's business model is likely to fall apart in any case. AI inference, and even training, can be done more efficiently than with a general purpose accelerator, and is easier to create.

Certainly not more efficiently. You get greater efficiency by going in the direction of specialization, not generalization.

> ET3D said: Although NVIDIA has a head start, it might not be able to hold it against the competition.

I'm waiting for them to drop features like fp64, which you really don't need for the vast majority of AI use cases. They could also cut way back on fp32. If they streamlined their current architecture to be better tuned specifically for AI, I think they shouldn't have much trouble remaining competitive. The only remaining question is whether they could maintain such substantial margins.

bit_user

> The article said: AI wasn't even around when Jensen Huang thought of Nvidia.

Are you serious, bro? Not only does the field of AI go back to the 1940's (most prominently highlighted in the writings of Alan Turing), but even the earliest work on artificial neural networks dates back about that far!

https://en.wikipedia.org/wiki/History_of_artificial_intelligence

By 1982, Hopfield nets were drawing new interest to digital artificial neural networks. That was some 10 years before Nvidia's founding.

Yeah, people didn't start talking about harnessing graphics hardware for other computational problems until the late 1990's (a practice that came to be known as GPGPU), and therefore Nvidia's founders wouldn't even have considered it, but that's a far narrower claim than AI being some recent invention.

Wow, that was sure a whopper!

Pierce2623

> bit_user said: Certainly not more efficiently. You get greater efficiency by going in the direction of specialization, not generalization.
>
> I'm waiting for them to drop features like fp64, which you really don't need for the vast majority of AI use cases. They could also cut way back on fp32. If they streamlined their current architecture to be better tuned specifically for AI, I think they shouldn't have much trouble remaining competitive. The only remaining question is whether they could maintain such substantial margins.

You do realize he literally said the small matrix math accelerators they’re adding to everything these days are MORE efficient than a GPU not less, right?

Pierce2623

While it’s not nearly as profitable as commercial accelerators, Nvidia can always fall back on owning 80% of the discrete GPU market. When Nvidia will really be in trouble is when x86 systems go mostly in the APU direction. I mean who would buy a $500 GPU and a $300 CPU over a $500-$600 APU that’s competitive on both fronts?

bit_user

> Pierce2623 said: You do realize he literally said the small matrix math accelerators they’re adding to everything these days are MORE efficient than a GPU not less, right?

Who said? I don't see that phrase in either the article or the comments.

Also, I disagree with that assertion. I've looked at AMX benchmarks, if that's what you've got in mind, but go ahead and show us what you've got.

ThomasKinsley

I'm hearing mixed signals on this topic. On the one hand, consumer AI is supposedly a hot new market with phones supporting AI garnering increased sales. On the other hand, these sales increases still pale in comparison to pre-covid phone sales. I would characterize this as wildly successful for businesses with only mild interest among consumers.

watzupken

> ET3D said: NVIDIA's business model is likely to fall apart in any case. AI inference, and even training, can be done more efficiently than with a general purpose accelerator, and is easier to create. Although NVIDIA has a head start, it might not be able to hold it against the competition.

While I am not a fan of Nvidia, I feel if AI continues to boom, Nvidia will continue to thrive. You can tell from retail consumer's behavior where AMD hardware may offer a better bang for buck, but people tend to prefer to pay more for a lesser performant product just because of RT, DLSS, and mainly the perception that Nvidia products are better. So competition will chip some sales away, but I feel most people and companies will stick with Nvidia.

Having said that, the comment from the SK group boss is common sense. At this point, the AI master race is going on, but the real question is how they will be monetizing AI services, and how receptive will consumers be to a paying model? Again, OpenAI/ChatGPT became widely popular because it's free. Switching to a paying model (and likely one that is not cheap), is going to mean that only a minority of people will be willing to use the paid for service. But will that be enough to recoup the sunk and running cost of training and maintaining? I think it is not a matter of whether the AI bubble will implode, but rather when.

bit_user

> watzupken said: Switching to a paying model (and likely one that is not cheap), is going to mean that only a minority of people will be willing to use the paid for service.

A lot of companies are already paying for it. They have an incentive not to use free services, because they want to protect their IP from leaking out, as well as preventing their IP from becoming tainted by external IP being fed to their employees via AI. So, this requires models that are trained on carefully vetted material, as well as a guarantee not to use queries or other metadata in training of models shared with other customers.

> watzupken said: But will that be enough to recoup the sunk and running cost of training and maintaining? I think it is not a matter of whether the AI bubble will implode, but rather when.

Corporate users pay for lots of services. Office suite, email, cloud storage, github, bookkeeping software, customer relationship tools, etc. The real question is how much value they can derive from it, because that will ultimately determine how much they're willing to pay.

thestryker

I think it's mostly just a situation for as the market exists nvidia is significantly overvalued as part of the current AI bubble. The question is certainly whether monetization is figured out or the bubble bursts. Nvidia making moves with the Grace line ought to also keep them going even if the AI rush collapses as they should be able to be used for HPC.

Of course there's also the dominance in consumer discrete graphics as it stands today, but we'll see what Strix Halo ends up looking like.

Nvidia has had to deal with two crypto booms so I'm sure management is doing everything they can to minimize risk here. Just a wild guess but I'd assume their biggest additional cost right now is just in sheer volume of hardware manufacturing and that they're not doing a ton of additional hiring.