SIPEW 2008: All About Benchmarking

Scheduling in Server Farms

Open vs. Closed Systems

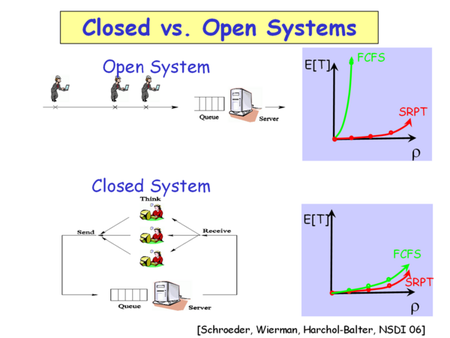

In the next step, Harchol-Balter looked at task dispatching in server farms, where routing becomes an important issue. For these large scale applications, it is very important to first think about the workload type that your system will be taking on. Her speech now dealt with the difference between open and closed systems. In an open system, your solution will be available to work on tasks that are very much predictable; think of a web server or web server farm. In such a case, it makes a lot of sense to analyze and determine the ideal scheduling and dispatching strategy in an effort to minimize response time and maximize performance. It is called an open system because there can be different tasks from various sources, and there will be different results. However, a closed system dispatches tasks that are less predictable, because they result from the results of prior workloads, and an analysis of tasks is used to determine further processing.

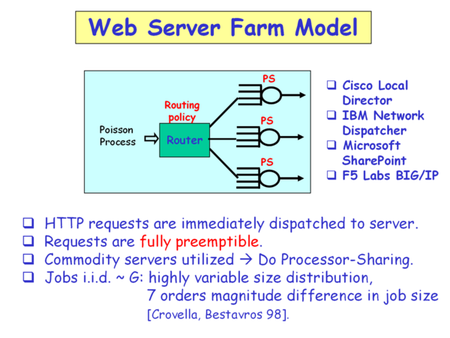

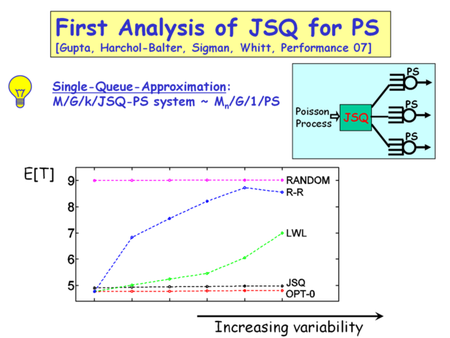

In the following example, a web server farm is used to create an environment in which incoming tasks are distributed to systems. All the servers operate based on preemptive processor sharing, just as most of the multitasking operating systems work today. The main question now is how to distribute the workload to the servers in an effort to get the shortest response times, and hence the best performance. In this example, the solution deciding on the routing policy (see image below) needs to be aware of the workload to be able to make a decision on the scheduling technique (see below).

A Poisson process, as used in this example, is a “stochastic process in which events occur continuously and independently of one another. A well-known example is radioactive decay of atoms.” (Text source: Wikipedia). HTTP requests are classified as Poisson processes. Will there be performance differences according to various scheduling techniques?

Task Scheduling Policies for Server Farms

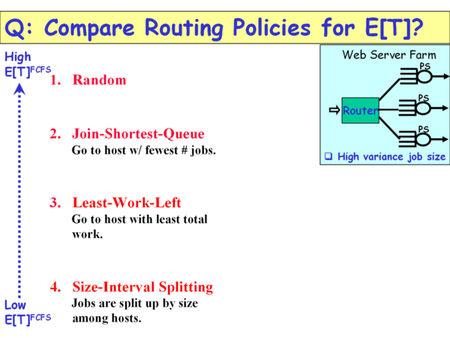

Harchol-Balter discusses four possible task scheduling techniques:

- RandomIncoming tasks are assigned to a random system with no other policy behind.

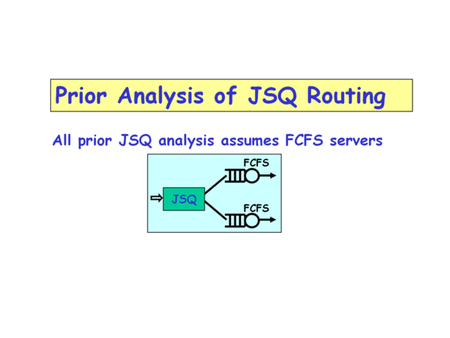

- Join-Shortest-Queue In this case, tasks are forwarded to the server that has the fewest jobs to process. This technique requires a prior analysis of the server system and usually also assumes a first-come, first-served policy.

- Least-Work-LeftThis is similar to join-shortest-queue, but actually considers the task that has to be processed. The systems with the least actual workload will receive the next jobs first. Here, jobs are typically ordered for dispatching by workload.

- Size Interval SplittingThis approach distributes workload evenly to all available clients, which creates a good workload balance across your servers, but increases response time.

The entire topic of scheduling has been discussed for more than 40 years, and it is obvious that there are various strategies that may or may not be suitable for individual requirements. The most important parameter certainly is the workload itself, and a detailed analysis of closed as opposed to open systems. The selection of proper policies and hardware comes in a second step.

Get Tom's Hardware's best news and in-depth reviews, straight to your inbox.

Current page: Scheduling in Server Farms

Prev Page Scheduling for Individual Servers Next Page Power Efficiency Testing with SPECpower_ssj2008