Scientists share design so you can make your own 3D-printable 'eFlesh' for robots: affordable, easy-to-produce, and highly tactile robot sensor grips can be printed at home

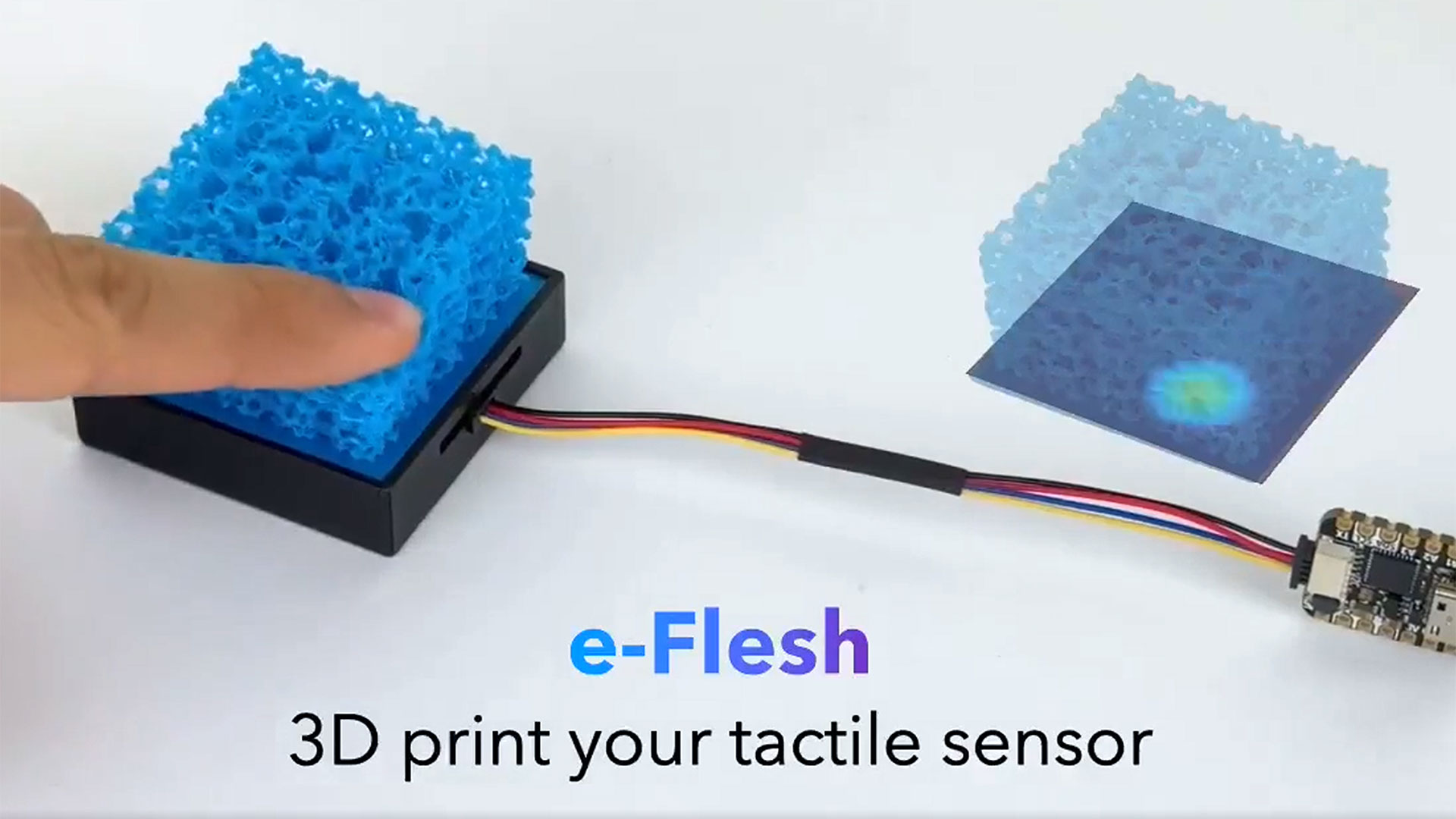

'eFlesh' is touted as a low-cost magnetic tactile sensor, and videos show it being produced on a Bambu Lab X1E 3D printer.

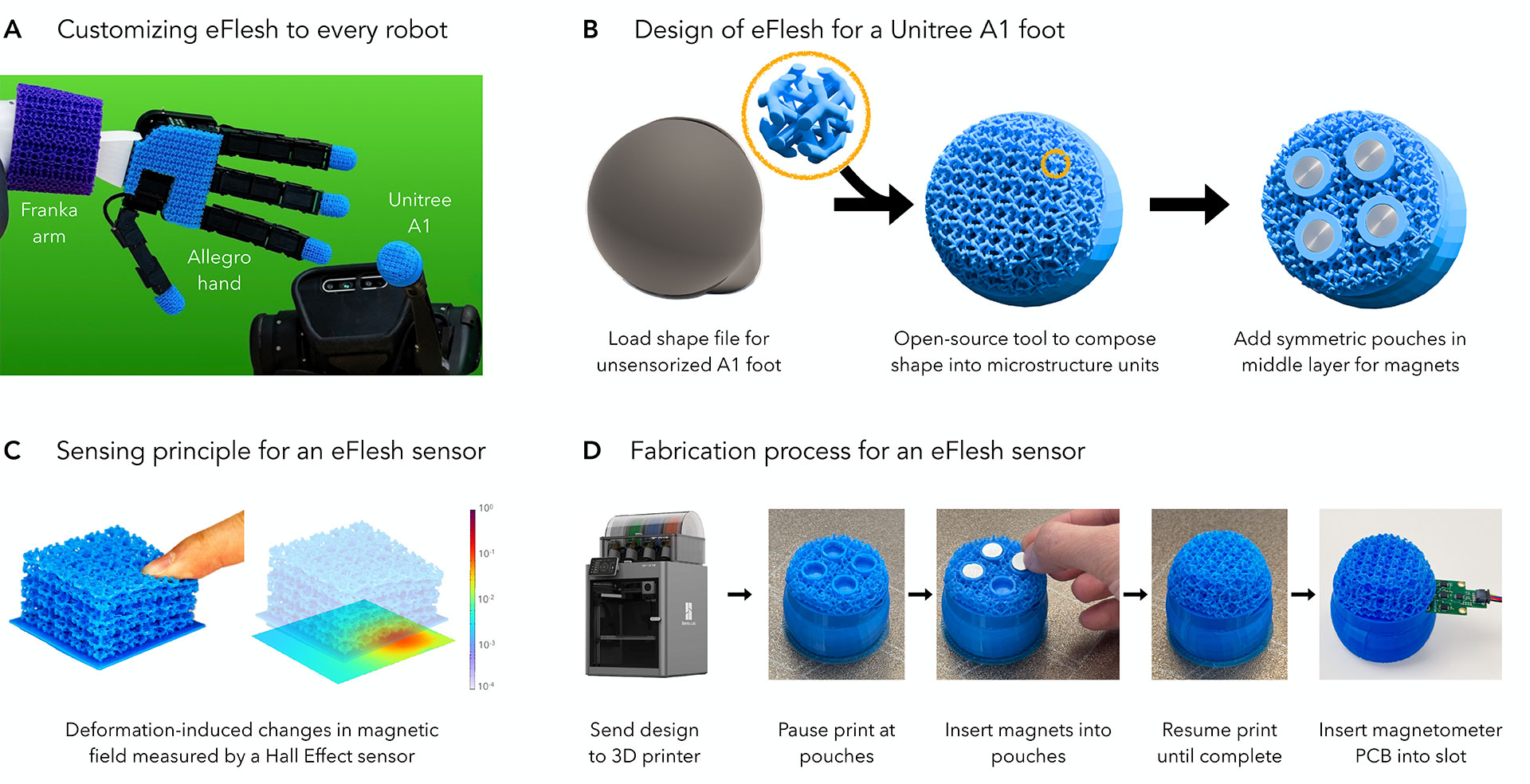

The macabre-sounding 3D-printable 'eFlesh' has a very practical purpose: helping robotic sensors and grips work well with a wide range of objects in unstructured environments. Thanks to the work and generosity of the researchers behind it, you can download the design and print it yourself. A group of New York University scientists has showcased eFlesh in compelling action, responding to touch and firmly but gently gripping and manipulating objects such as (presumably uncooked) eggs, USB devices, and fluffy plushies. The scientists have even shared fabrication details, 3D files, trained models, and code so you can make your own eFlesh devices.

In the video embedded above, we see that eFlesh is quick and easy to print. 3D printer watchers will notice that the scientists used a Bambu Lab X1E 3D printer for the square sensor-grip example. Other videos show that the TPU print was paused about half an inch above the bed so that a grid of magnets, key to the sensor's functionality, could be inserted.

The scientists behind this innovation say that “Building an eFlesh sensor requires only four components: a hobbyist 3D printer, off-the-shelf magnets (costing less than $5), a simple CAD model of the desired shape, and a magnetometer circuit board.” To promote broad access to the technology, they have released an open-source design, including the 3D-printable STL files and supporting software. There’s even a CAD-to-eFlesh STL conversion tool available.

Sensing, moving, and manipulating irregular or fragile objects has long been a conundrum for robot designers. That this solution is accessible, and purportedly cheap and effective, makes it all the more welcome. Specifically, the New York University scientists say that eFlesh has “contact localization accuracy of 0.5 mm, with force prediction errors of 0.27 N along the z-axis and 0.12 N in the x/y-plane.”

Some canny coding is behind the “learning-based slip detection model that generalizes to unseen objects with 95% accuracy.” The developers of eFlesh also describe “visuotactile control policies that improve manipulation performance by 40% over vision-only baselines.” These capabilities are demonstrated in precise tasks such as plug insertion with a robotic arm and credit card swiping.

So, these scientists may have just democratized tactile robotic sensing and manipulation. Shame about the name, though.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.