SRC and Stanford Enable Chip Pattern Etching for 14nm

In a project sponsored by the Semiconductor Research Corporation (SRC), researchers at Stanford University claim to have solved one of the major semiconductor manufacturing problems standing in the way of further scaling.

Stanford scientists successfully demonstrated a new directed self-assembly (DSA) process not just for regular test patterns, but also for the irregular patterns required to manufacture smaller semiconductors. It was the first time this next-generation process was used to create contact hole patterns at 22 nm, and the scientists claim the technique will enable pattern etching for next-generation chips down to 14 nm.

"This is the first time that the critical contact holes have been placed with DSA for standard cell libraries of VLSI chips. The result is a composed pattern of real circuits, not just test structures," said Philip Wong, the lead researcher at Stanford for the SRC-guided research. "This irregular solution for DSA also allows you to heal imperfections in the pattern and maintain higher resolution and finer features on the wafer than by any other viable alternative."

The research group also noted that the process is much more environmentally friendly, as a "healthier" solvent, propylene glycol monomethyl ether acetate (PGMEA), is used for the coating and etching process.

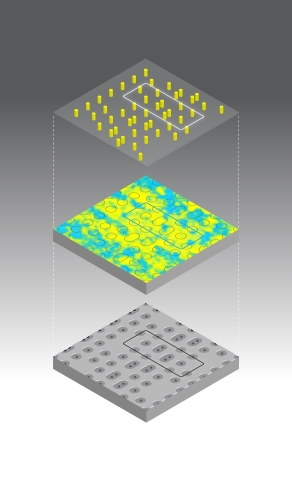

Leveraging the new DSA process, the researchers manufactured chips by covering a wafer surface with a block copolymer film and using "common" lithographic techniques to carve structures into the wafer surface, creating a pattern of irregularly placed "indentations." These indentations are used as templates "to guide movement of molecules of the block copolymer into self-assembled configurations." According to the researchers, the shape and size of these templates can be modified, which allows the distance between holes to be reduced further than current techniques permit.


Douglas Perry was a freelance writer for Tom's Hardware covering semiconductors, storage technology, quantum computing, and processor power delivery. He has authored several books and is currently an editor for The Oregonian/OregonLive.


IClassStriker: I don't get it. They are making smaller and smaller chips even though they could improve on the current ones. I would be fine if they still made 32nm dies but added more cores and higher clock speeds. Most computers have sufficient cooling anyway.

IndignantSkeptic: It's just amazing how many times scientists can keep Moore's law going. It helps make me think that Dr. Aubrey de Grey may be correct about the unbelievable future of biotechnology.

IClass, the world is moving to smaller components because of smaller form factors and mobility. If everyone still did all their computing on desktops, I would totally agree with you. A well-done 32nm process in a chassis with sufficient cooling would do well for a powerhouse system (at least 8 cores, dual graphics, etc.). To get that level of performance out of a mobile platform, they will need to go to 14nm. I have no problem with that as long as they also build a 14nm system with 32-64 cores that runs at 5+ GHz :).


bak0n: Well then hurry and get me my 14nm GPU that doesn't require an extra power connector!!!111221!

robot_army: IClass, smaller dies mean companies can add more transistors, for either additional cores or higher performance per clock. This allows companies to maintain profit margins and price points; more silicon would mean more cost to consumers!

zzz_b: @IClassStriker The problem is the localized heat on the chips, which can't be dissipated.


mpioca, replying to IClassStriker:

1. Lower power consumption --> cheaper operation, lower operating heat.
2. Less material needed for production --> cheaper products.

It's basically a no-brainer to choose the smaller die.

CaedenV, replying to IClassStriker:

1) To compete with ARM on the low end, Intel NEEDS to get smaller parts in order to lower material, heat, and battery costs. Sure, they could cram a lot more transistors into a CPU on 32nm before running into heat issues, but someone needs to pay for developing the small-process tech for Atom and other extremely low-power CPUs that compete with ARM. Intel decided long ago that desktop CPUs would pave the way, because desktop users do not mind paying more for the product, while devices that use Atom are extremely price-sensitive. Imagine how power-efficient a 22nm Atom would be! On a 32nm process they are down to 3.5W TDP, and they run well below that under a normal load. But they are not on 22nm because it is cheaper to make them in the old fabs.

2) More cores do not help 90+% of the people who use a computer. Two cores are enough for web browsing and media consumption (hell, you can even game pretty decently on a dual-core). Civilian applications tend to use only 1-2 cores, and heavy applications have a hard time using more than 4. If you need more than 4 cores, there are other solutions (SBE, Xeon) that can give you many more cores, as well as dual-CPU configurations (I think the new Xeon CPUs can even do quad configurations). So if you need more cores, there are solutions for you, but all the cores in the world are not going to help you one bit until software takes advantage of them, so other solutions must be found.

3) It is cheaper and easier to shrink the die than to modify the instruction set (though that is always happening as well). Once we hit the 8-12nm wall of CPU die shrinks, we will begin to see major changes to code, to how code is processed, and a complete revolution in the x86 architecture and instructions. We will also begin to see 3D/stacked CPU designs and other more creative approaches to streamlining things. But we are still several years away from that.