Baidu Upgrades Cloud Services With Nvidia Tesla P40 GPUs And Deep Learning Platform

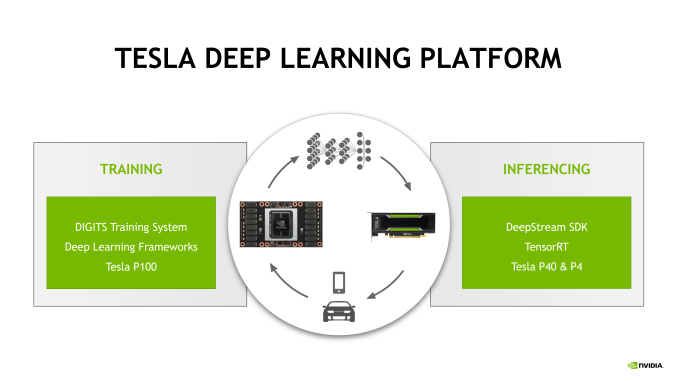

Nvidia announced that Baidu, the biggest search engine in China, now uses the company’s deep learning software platform as well as the latest Pascal-based Tesla P40 GPUs for both training and inference.

Baidu Modernizes Cloud Platform

Baidu, like other companies interested in machine learning, has been using Nvidia’s GPUs for many years. Now the company appears to be moving to the latest Pascal-based GPUs to take advantage of their increased performance and efficiency.

“Our partnership with NVIDIA has long provided Baidu with a competitive advantage,” said Shiming Yin, vice president and general manager of Baidu Cloud Computing. “Baidu Cloud Service powered by NVIDIA’s deep learning software and Pascal GPUs will help our customers accelerate their deep learning training and inference, resulting in faster time to market for a new generation of intelligent products and applications,” he added.

As a search engine and cloud services company, Baidu seems to have realized that machine learning is critical to its success. It is therefore continuing to invest heavily in keeping its hardware platforms up to date, as well as in machine learning research to improve its services.

Tesla P40

Baidu bought some Tesla P40 “inference GPUs,” but Nvidia has said before that they can also be used for training neural networks. The P40 is Nvidia’s highest-performing inference chip right now, due to its support for 8-bit integer computation.
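To illustrate why 8-bit integer support matters for inference, here is a minimal sketch of an INT8 dot product: floating-point weights and activations are mapped to small integers, multiplied and accumulated as integers, then scaled back. It is not Nvidia's implementation. The function names `quantize` and `int8_dot` and the simple symmetric scaling scheme are assumptions made for illustration, and the inputs are assumed to contain at least one non-zero value.

```python
def quantize(values, scale):
    """Map floats to integers in the signed 8-bit range [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def int8_dot(weights, activations):
    """Approximate a float dot product using 8-bit integer operands."""
    # Symmetric per-vector scales (an illustrative choice, not a vendor scheme).
    w_scale = max(abs(w) for w in weights) / 127
    a_scale = max(abs(a) for a in activations) / 127
    qw = quantize(weights, w_scale)
    qa = quantize(activations, a_scale)
    # Multiply and accumulate in integers, as INT8 hardware paths do.
    acc = sum(w * a for w, a in zip(qw, qa))
    # Rescale the integer accumulator back to a float result.
    return acc * w_scale * a_scale


weights = [0.5, -1.0, 0.25]
activations = [2.0, 1.0, -4.0]
exact = sum(w * a for w, a in zip(weights, activations))
approx = int8_dot(weights, activations)
print(exact, approx)
```

The integer result is close to the floating-point one but not identical. A small loss of precision is usually tolerable for inference, which is why 8-bit arithmetic is associated with inference rather than training.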

Nvidia recently pitted the Tesla P40 against Google’s own inference chip, the Tensor Processing Unit (TPU). The GPU did well against the TPU in at least one metric: inference with sub-10ms latency.

This can be an important metric: one reason the “AI on the edge” trend is growing is that machine learning over the internet has too much latency. However, as discussed previously, it’s not the only metric that matters, and performance per watt is often the most important metric in data centers.

However, Nvidia has many customers with many different needs, who use its GPUs for applications such as natural language processing, traffic analysis, intelligent customer service, personalized recommendations, and video understanding. This is also why even Google continues to use GPUs where they make the most sense. Nvidia also counts other major cloud services companies among its customers, including Amazon, Microsoft, IBM, and Alibaba, all of which use machine learning to enhance their services in different ways.

Lucian Armasu is a Contributing Writer for Tom's Hardware US. He covers software news and the issues surrounding privacy and security.

-

Matt1685 Why are you mentioning the TPU? As far as I know it's not available for anyone to use outside Google except as part of a Google service (such as Google's translation service).