Nvidia FCAT VR: Benchmarking Performance In Real-World Games

Unfortunately, the tools we normally use for testing graphics cards, such as Fraps, PresentMon, and OCAT, are not suitable for collecting performance data in virtual reality through HTC’s Vive or Oculus’ Rift. They can measure the frames that the game engine generates, but they miss everything that happens afterward in the VR runtime before those frames appear in the head-mounted display (HMD). VRMark and VRScore get us part-way there with synthetic measurements, but we're usually more interested in making real-world comparisons.

Enter FCAT VR, which is conceptually similar to the original FCAT that we wrote about in Challenging FPS: Testing SLI And CrossFire Using Video Capture. This time, however, Nvidia is out to give enthusiasts a way to test their hardware in real-world VR apps, compile meaningful information, and present it without having to know their way around Perl.

What exactly are we looking for here? Nvidia does a pretty stellar job describing the VR pipeline and where things can go wrong, so we’ll borrow from the company’s explanation:

“Today’s leading high-end VR headsets, the Oculus Rift and HTC Vive, both refresh their screen at a fixed interval, 90 Hz, which equates to one frame every ~11.1ms. V-sync is enabled to prevent tearing, which can cause major discomfort to the user.

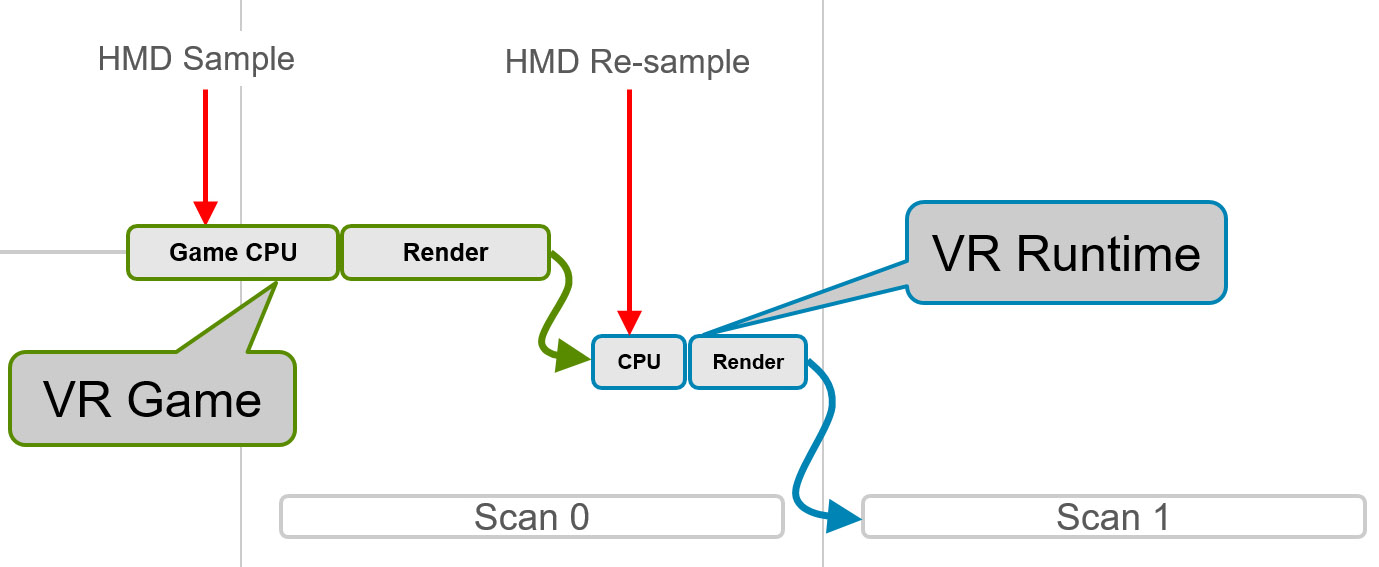

The mechanism for delivering frames can be divided into two parts: the VR game and the VR runtime. When timing requirements are satisfied and the process works correctly, the following sequence is observed:

- The VR game samples the current headset position sensor and updates the camera position to correctly track a user’s head position.

- The game then establishes a graphics frame, and the GPU renders the new frame to a texture (not the final display).

- The VR runtime reads the new texture, modifies it, and generates a final image that is displayed on the headset display. Two interesting modifications include color correction and lens correction, but the work done by the VR runtime can be much more elaborate.

The following figure shows what this looks like in a timing chart.

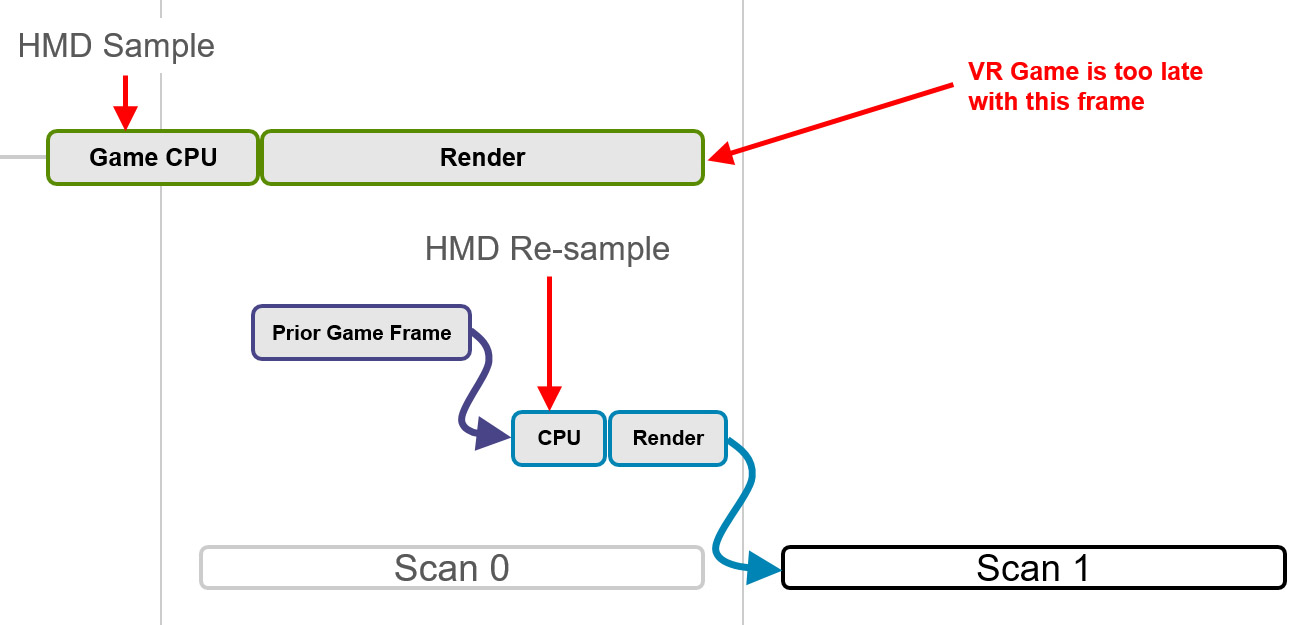

The job of the runtime becomes significantly more complex if the time to generate a frame exceeds the refresh interval. In that case, the total elapsed time for the combined VR game and VR runtime is too long, and the frame will not be ready to display at the beginning of the next scan.

In this case, the HMD would typically redisplay the prior rendered frame from the runtime, but for VR that experience is unacceptable because repeating an old frame on a headset display ignores head motion and results in a poor user experience.

Runtimes use a variety of techniques to improve this, including algorithms that synthesize a new frame rather than repeat the old one. Most of the techniques center on the idea of re-projection, which uses the most recent head sensor location input to adjust the old frame to match the current head position. This does not improve the animation embedded in a frame—which will suffer from a lower frame rate and judder—but the fluid experience of head motion is improved.

FCAT VR captures four key performance metrics for Rift and Vive:

- Dropped frames (app miss/app drop)

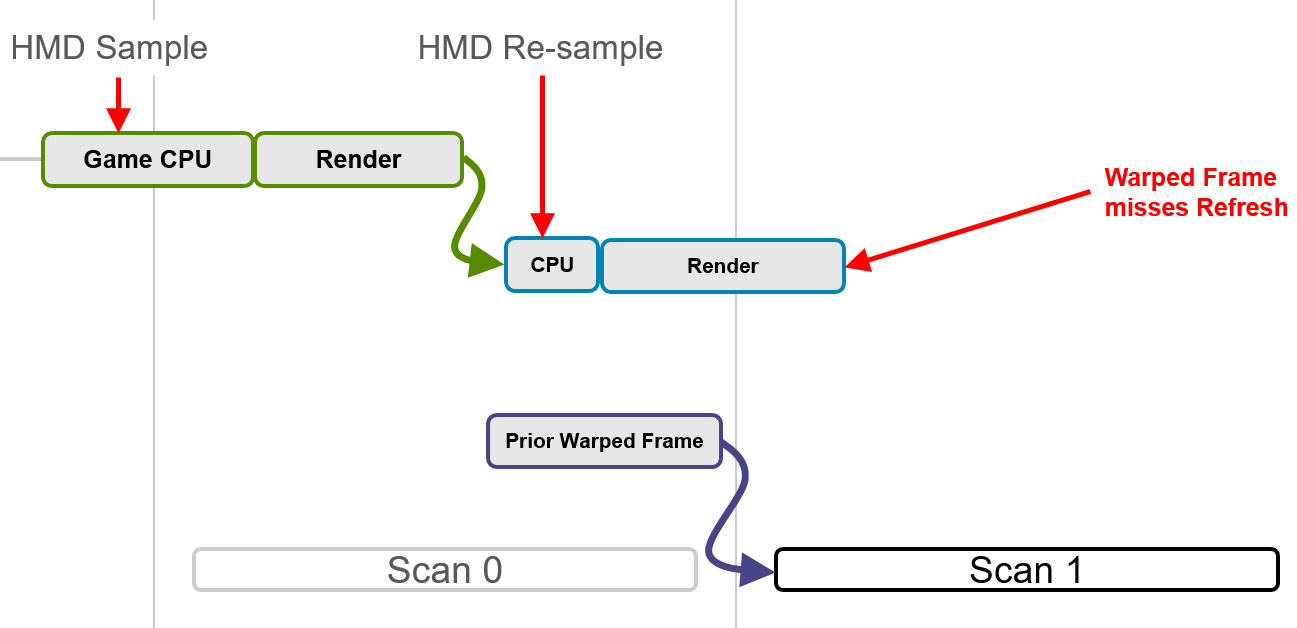

- Warp misses

- In the software version of FCAT, frame time data

- Frames synthesized by asynchronous spacewarp

Whenever the frame rendered by the VR game arrives too late to be displayed in the current refresh interval, a frame drop occurs and causes the game to stutter. Understanding these drops and measuring them provides insight into VR performance.

Warp misses are a more significant issue for the VR experience. A warp miss occurs whenever the runtime fails to produce a new frame (or a re-projected frame) in the current refresh interval. In the preceding figure, a prior warped frame is reshown by the GPU. The user experiences this frozen time as a significant stutter.”
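
To make Nvidia's terminology concrete, here is a minimal Python sketch that labels a single refresh interval using the definitions quoted above. The function name and its two boolean inputs are our own illustrative assumptions; they are not part of FCAT VR or of either runtime's API.

```python
REFRESH_HZ = 90
REFRESH_INTERVAL_MS = 1000.0 / REFRESH_HZ  # roughly 11.1 ms per refresh at 90 Hz


def classify_interval(app_frame_ready: bool, runtime_frame_ready: bool) -> str:
    """Label one refresh interval (hypothetical helper, not an FCAT VR function)."""
    if not runtime_frame_ready:
        # The runtime produced neither a new nor a re-projected frame in time,
        # so a prior warped frame is shown again.
        return "warp miss"
    if not app_frame_ready:
        # The game's frame arrived too late for this interval; the runtime
        # still delivered something, for example a re-projected frame.
        return "dropped frame"
    return "new frame"
```

A warp miss is checked first because it is the worse outcome: the user sees a frozen image regardless of whether the game also missed its deadline.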

FCAT VR: Simplifying Testing With A Local GUI

FCAT VR reads the performance information that Oculus’ runtime logs to Event Tracing for Windows (ETW). Per the FCAT documentation, the following events are recorded, with times in milliseconds:

- Game Start: Timestamp when Game starts preparing frame

- Game Complete CPU/GPU: Timestamp when Game has prepared frame (all CPU-side work is done), and then another timestamp when frame is finished on GPU

- Queue Ahead: Amount of queue ahead that was allowed for the frame

- Runtime Sample: Timestamp for warp start. Usually a fixed amount of time before v-sync

- Runtime Complete: Warp finished on GPU

- V-sync: V-sync interrupt for HMD (Nvidia-only)

There are also integer counters in Nvidia’s output file to report app and warp misses.
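
As a rough illustration of how those timestamps relate to one another, the sketch below stores one frame's events and derives a few durations from them. The class and field names are hypothetical and do not reflect the column names in Nvidia's output file; the sample values are made up.

```python
from dataclasses import dataclass


@dataclass
class FrameEvents:
    """One frame's timestamps in milliseconds (hypothetical field names)."""
    game_start: float         # game starts preparing the frame
    game_complete_cpu: float  # all CPU-side work is done
    game_complete_gpu: float  # frame is finished on the GPU
    runtime_sample: float     # warp start
    runtime_complete: float   # warp finished on the GPU

    def app_cpu_ms(self) -> float:
        # Time the game spent on CPU-side preparation.
        return self.game_complete_cpu - self.game_start

    def app_total_ms(self) -> float:
        # Time from the game starting the frame to the GPU finishing it.
        return self.game_complete_gpu - self.game_start

    def warp_ms(self) -> float:
        # Time the runtime spent warping the frame.
        return self.runtime_complete - self.runtime_sample


# Made-up sample values, for illustration only.
frame = FrameEvents(0.0, 3.0, 8.5, 9.0, 10.0)
```

With these sample numbers, the game finishes at 8.5 ms and the warp at 10.0 ms, so the frame fits inside an ~11.1 ms refresh interval.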

Testing on a Vive is similar, except that FCAT ties into an API exposed by SteamVR to generate its timestamps. The list of captured events is different (longer, in fact). However, we come up with the same performance statistics.

Here's what's especially cool about Nvidia's software tool: because it's fed granular timing information from ETW and SteamVR, we can calculate what Nvidia calls unconstrained frame rate, which is the performance we would have seen were it not for the 90 Hz refresh rate. This is particularly powerful because it lets us estimate headroom based on how long each render takes.

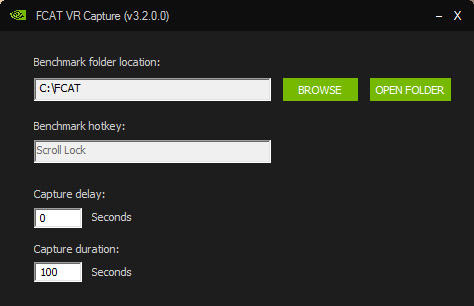
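
One plausible way to turn render times into an unconstrained frame rate and a headroom estimate is sketched below. Nvidia's exact formula isn't given here, so treat the averaging approach, the function names, and the sample render times as our assumptions.

```python
REFRESH_HZ = 90
REFRESH_INTERVAL_MS = 1000.0 / REFRESH_HZ  # roughly 11.1 ms


def unconstrained_fps(render_times_ms):
    """Frame rate implied by the mean render time, ignoring the 90 Hz cap."""
    mean_ms = sum(render_times_ms) / len(render_times_ms)
    return 1000.0 / mean_ms


def headroom_fraction(render_times_ms):
    """Share of the refresh interval left unused by the mean render time."""
    mean_ms = sum(render_times_ms) / len(render_times_ms)
    return 1.0 - mean_ms / REFRESH_INTERVAL_MS


# Made-up render times in milliseconds, for illustration only.
render_times_ms = [8.0, 8.0, 8.0]
fps = unconstrained_fps(render_times_ms)
headroom = headroom_fraction(render_times_ms)
```

A card that needs 8 ms per frame would run at 125 FPS without the display cap, which leaves about 28 percent of each refresh interval spare.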

Meet The VR Capture Utility

Nvidia's tool consists of a simple UI that lets you specify a log file destination and a benchmarking hotkey. You fire up the capture utility and then launch your VR application. A red bar on the right side of the HMD tells you that FCAT Capture is running, but idle. Hitting Scroll Lock (the only hotkey supported) turns the bar green until you press it again, ending the run. Nvidia also adds options for capture delay and capture duration, making it easy to control how tests start and stop.

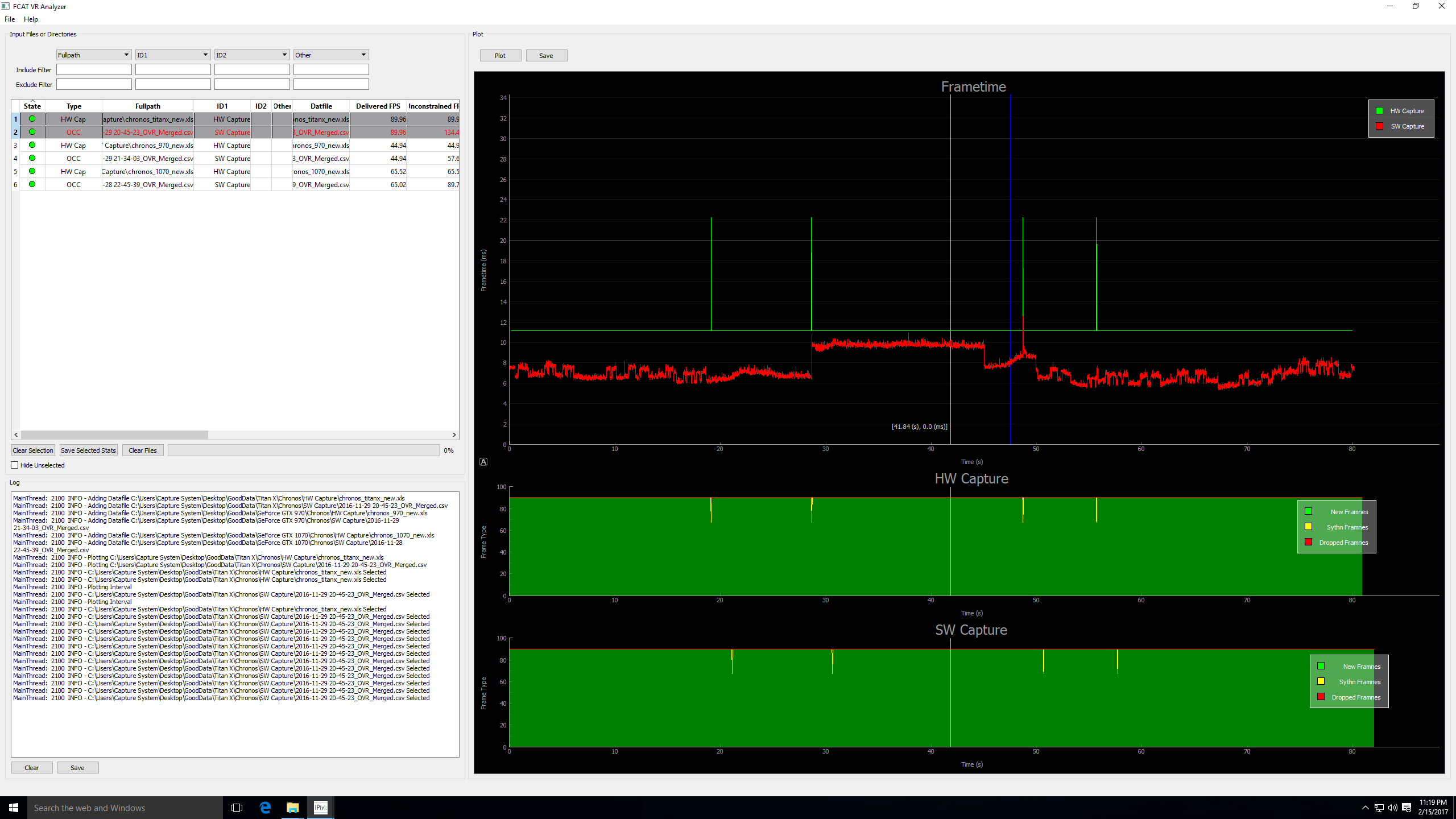

In an effort to encourage broader adoption of FCAT VR, Nvidia created a Python-based GUI into which we'll be able to drag log files and create charts much more easily.

This VR Analyzer conveys:

- The delivered frame rate, or what actually shows up on-screen. If you have a perfect run, you'll see 90 FPS. If your card spends half of its time at 90 and half of its time at 45 FPS, this field averages out to 67.5 FPS.

- The unconstrained frame rate, or what you'd see if it weren't for the 90 Hz display interval.

- Refresh intervals, or 90 times the benchmark's duration in seconds.

- New frames

- Dropped frames (app misses)

- Frames synthesized by asynchronous spacewarp

- Warp misses

- Average frame time
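
The two derived fields in that list can be reproduced with simple arithmetic. The sketch below assumes that delivered frame rate counts new frames per second, which matches the 67.5 FPS example above; the function name and inputs are hypothetical and not taken from the VR Analyzer.

```python
REFRESH_HZ = 90  # refresh rate of the Rift and Vive


def summarize_run(duration_s, new_frames):
    """Derive refresh intervals and delivered frame rate for one run."""
    return {
        # 90 refreshes per second for the length of the benchmark.
        "refresh_intervals": round(REFRESH_HZ * duration_s),
        # Assumption: delivered frame rate = new frames per second.
        "delivered_fps": new_frames / duration_s,
    }


# Half the run at 90 FPS and half at 45 FPS: 10 s * 90 + 10 s * 45 = 1350 frames.
summary = summarize_run(duration_s=20.0, new_frames=1350)
```

For this 20-second run, the sketch reports 1800 refresh intervals and a delivered frame rate of 67.5 FPS.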

All of that data paints an interesting picture of what's happening on-screen, complementing the subjective observations we relied on previously for VR testing. We expect to do a lot more work with FCAT VR in the next couple of weeks, so let us know if there are specific experiments you'd like to see run!