Nvidia Lifts Some Video Encoding Limitations from Consumer GPUs

Nvidia quietly boosts the video encoding capabilities of its GPUs.

Nvidia has quietly removed some of the concurrent video encoding limitations from its consumer graphics processing units, which can now encode up to five simultaneous streams. The move may make life easier for video enthusiasts, but Nvidia's data center-grade and professional GPUs will keep an edge over consumer products, because Nvidia now does not restrict the number of concurrent sessions on them at all. Naturally, encoding speed can suffer as more simultaneous encodes run.

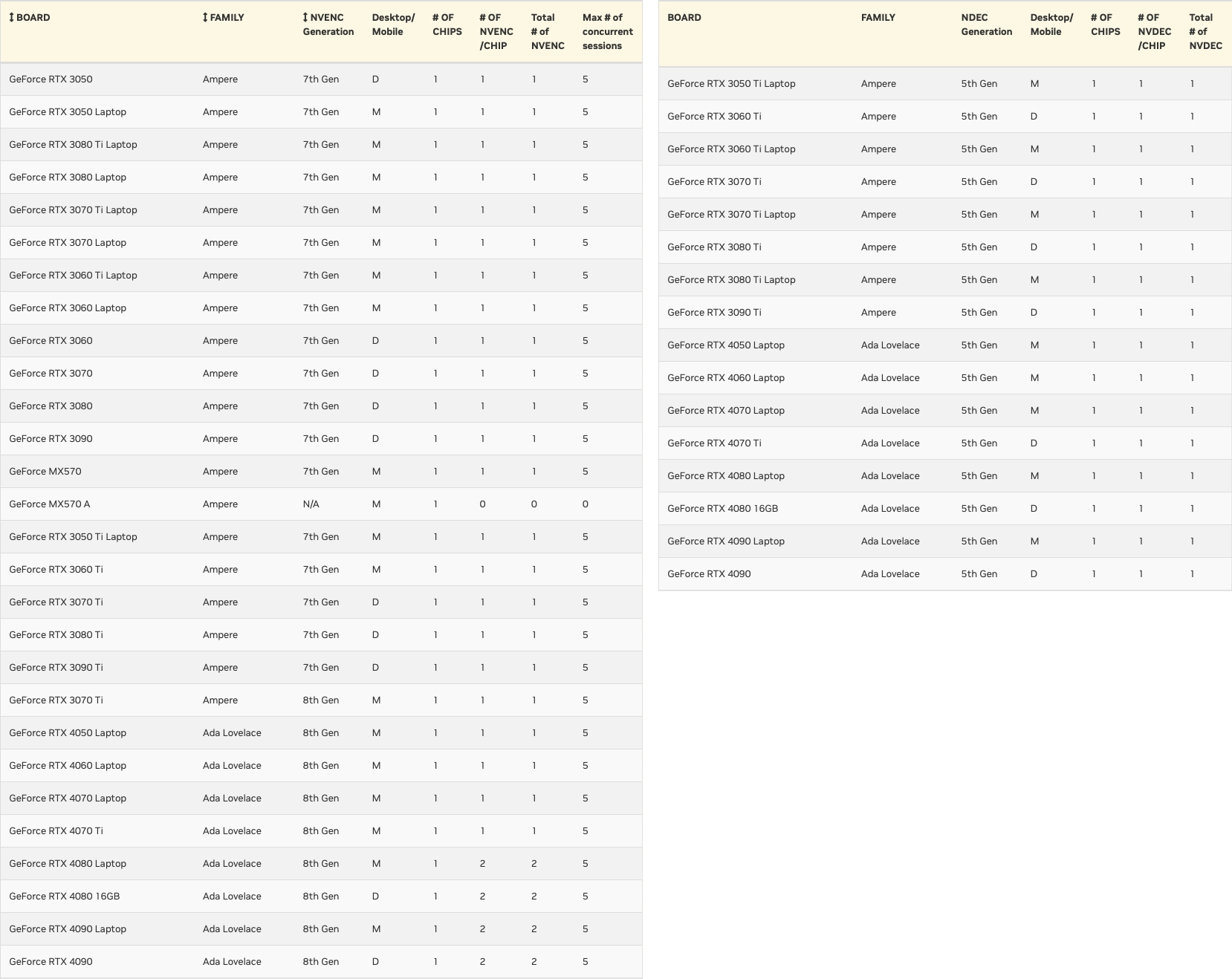

Nvidia has increased the number of concurrent NVENC encodes on consumer GPUs from three to five, according to the company's own Video Encode and Decode GPU Support Matrix. The change applies to dozens of products based on the Maxwell 2nd Gen, Pascal, Turing, Ampere, and Ada Lovelace microarchitectures (except for some MX-series products) released over roughly the last eight years.

The number of concurrent NVDEC decodes isn't restricted, though if you need real-time decoding, there is a practical limit to how many streams can be handled at once. Nvidia provides a table showing the relative decode speeds of various GPUs by codec, though it doesn't indicate the resolution of those streams. Still, even second-generation Maxwell GPUs could decode at over 400 fps, enough for at least six simultaneous streams (presumably 1080p), assuming you don't run out of VRAM.
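As a rough check on that arithmetic, here is a minimal Python sketch that divides a GPU's aggregate decode throughput by the frame rate each stream must sustain. The per-stream frame rates are assumptions, since Nvidia's table does not state them; 60 fps is the rate at which 400 fps works out to six streams. Like the estimate above, it ignores VRAM, resolution, and scheduling overhead.

```python
def max_realtime_streams(decode_fps: float, stream_fps: float) -> int:
    """Estimate how many streams can be decoded in real time.

    decode_fps: aggregate decode throughput of the GPU, in frames per second.
    stream_fps: frame rate each stream must sustain to keep up in real time
        (an assumption; Nvidia's table does not specify it).
    Ignores VRAM limits, resolution differences, and scheduling overhead.
    """
    if stream_fps <= 0:
        raise ValueError("stream_fps must be positive")
    # Only whole streams count: a partial stream would fall behind real time.
    return int(decode_fps // stream_fps)


# 400 fps is the second-gen Maxwell figure; 60 and 30 fps are assumed stream rates.
for fps in (60, 30):
    print(f"{fps} fps streams: {max_realtime_streams(400, fps)}")
```

At an assumed 60 fps per stream this prints six, in line with the "at least six" figure; at an assumed 30 fps the same throughput covers 13 streams.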

The change does not affect the number of NVENC and NVDEC hardware units activated in Nvidia's consumer GPUs. For example, Nvidia's latest AD102 graphics processor, based on the Ada Lovelace architecture, features three NVENC hardware encoders and three NVDEC decoders. All three are enabled on Nvidia's RTX 6000 Ada and L40 boards for workstations and data centers, but only two are active on the consumer-grade GeForce RTX 4090. The AD103 and AD104 each have two NVENC and four NVDEC units, but GeForce cards only enable two of the NVDEC units.

Historically, Nvidia has restricted the number of concurrent encoding sessions on all of its graphics cards. Consumer-oriented GeForce boards supported up to three simultaneous NVENC video encoding sessions while Nvidia's workstation and data center solutions running the same silicon and aimed at ProViz, video streaming services, games streaming services, and virtual desktop infrastructure (VDI) could support 11–17 concurrent NVENC sessions depending on the quality and hardware. It turned out a couple of years ago that Nvidia's restrictions could be removed by a relatively simple hack.

But Nvidia's stance on NVENC and NVDEC limitations has evidently changed somewhat. Consumer GPUs now support up to five concurrent NVENC encode sessions. Workstation-grade and data center-grade boards have no restrictions, and the actual number of concurrent sessions depends on the hardware's capabilities, the codec, and the video quality settings.
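In practice, the usable session count is whichever is lower: the driver's cap or what the encoder hardware can sustain for a given codec and quality. A minimal Python sketch of that rule, assuming `None` stands for "no driver cap" (as described for workstation and data center boards). The hardware figure of 10 is hypothetical: it is a reader's estimate for 4K-to-1080p transcodes on an RTX 3060 from the comments, not an Nvidia number.

```python
from typing import Optional


def effective_sessions(driver_cap: Optional[int], hardware_capacity: int) -> int:
    """Return how many concurrent NVENC sessions can actually run.

    driver_cap: session limit enforced by the driver, or None if uncapped.
    hardware_capacity: sessions the encoder hardware can sustain for a given
        codec, resolution, and quality preset (a workload-dependent estimate).
    """
    if driver_cap is None:
        return hardware_capacity  # uncapped: the hardware is the only limit
    return min(driver_cap, hardware_capacity)


HARDWARE = 10  # hypothetical: reader estimate for 4K-to-1080p on an RTX 3060
print(effective_sessions(3, HARDWARE))     # 3, old GeForce cap
print(effective_sessions(5, HARDWARE))     # 5, new GeForce cap
print(effective_sessions(None, HARDWARE))  # 10, same hardware with no cap
```

Under these assumptions, raising the cap from three to five still leaves half of the estimated hardware capacity unused.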


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


Giroro: This makes yesterday's news that the "RTX 4000 SFF Ada Generation" only supports 2 NVENC streams even more confusing, unless that news was about the number of NVENC/NVDEC hardware codecs, not the max number of streams.

Nvidia's motivation to increase the limit probably stems from the fact that Windows' generally unused gaming/stream recording features usually camp on one of the NVENC streams, while OBS needs 2 streams if you want to run a live stream and record game footage at the same time.

So maybe they finally realized that they are selling "ultimate game streaming" cards that need to be hacked/fully unlocked to actually run a game stream.

Knowing Nvidia, step 2 will be to prevent their most-influential celebrity customers from unlocking full use of the hardware built into their "bargain bin" $2000 RTX 4090.

Step 3: maybe they'll start bricking hacked cards when they think they can get away with it.

AtrociKitty: This is an improvement, but 5 streams is still artificially limiting most cards. Here's a nice tool to show how many streams various Nvidia cards can actually handle.

In addition to streaming, many people use Nvidia cards for transcoding work in home media servers. I have an RTX 3060 12GB in mine, which can theoretically handle 10 simultaneous 4K to 1080p transcodes per the tool above. That means the increased limit of 5 still cuts performance in half.

Unlocking this full NVENC performance is really easy, because it's only a driver limitation (you don't need to "hack" the card). I'm not going to repost the instructions here, but the entire process is patching a single DLL. I get that Nvidia wants to maintain market segmentation, but it's still dumb that they're doing it in such an artificial way when the hardware is capable of much more.

Giroro: Possible correction: Nvidia's Decode matrix does not list a maximum number of sessions for Decode, so I'm unsure whether they limit the number of sessions for decoding.

ThisIsMe: Likely they realized how limited their consumer cards and some pro cards were in this area compared to the Intel A-series cards. Those are less expensive, produce nearly identical quality (+/- depending on various options), are close in performance, and have no artificial limits.

Some of the crazy video streaming servers that have been built with Intel cards are wild.

trebory6: So I made an account just to point this out, but if you google it, there are driver patches on GitHub that remove the encoding session limitations on consumer Nvidia cards.

The patch gets removed with every driver update, so you have to re-apply it every time you update the driver. It might void warranties, but if you're unconcerned with that and need the unrestricted video processing power, then there you go.

Personally, I think artificially restricting the hardware that I paid for is extremely anti-consumer and a very dirty and greedy tactic by a company, so I have no qualms sharing this information. It's not even for safety or card integrity purposes, but literally just for monetary gain, so people will pay more for professional cards.

JarredWaltonGPU:

Giroro said: Possible correction: Nvidia's Decode matrix does not list a maximum number of sessions for Decode, so I'm unsure whether they limit the number of sessions for decoding.

Edited this out now. I'm not sure where Anton got the "one decoding session max" idea from. All you have to do is open up eight YouTube windows to see that's not true. ¯\_(ツ)_/¯

Giroro:

JarredWaltonGPU said: Edited this out now. I'm not sure where Anton got the "one decoding session max" idea from. All you have to do is open up eight YouTube windows to see that's not true. ¯\_(ツ)_/¯

In fairness, it's easy to conflate the number of hardware blocks with the number of sessions. I do it occasionally too.

RedBear87:

trebory6 said: So I made an account just to point this out, but if you google it, there are driver patches on GitHub that remove the encoding session limitations on consumer Nvidia cards

Unless the page was quietly updated later, this was mentioned in the article ("It turned out a couple of years ago that Nvidia's restrictions could be removed by a relatively simple hack."), with a link pointing to an older Tom's Hardware article that links to that GitHub patch.

On another note, the link to the Video Encode and Decode GPU Support Matrix doesn't work on my end; it says "Bad Merchant" even in a browser with no add-ons or customisations activated. And I'm just nitpicking here, but technically this update was also carried out for at least a few 1st Gen Maxwell cards (actually, I think it's all of the first-generation Maxwell cards that had the NVENC module in the first place), not just 2nd Gen Maxwell as mentioned in the article.