120 Gbps Thunderbolt 5 and more PCIe 5.0 lanes coming to Intel's Arrow Lake desktop CPUs, Barlow Ridge controller debuts

Also more PCIe 5.0 lanes.

Arrow Lake desktop CPUs will apparently support Thunderbolt 5, a first for desktop platforms, according to leaked internal Intel documents posted by @yuuki_ans, a leaker with an impeccable track record. Although the hardware leaker has since deleted the post that contained screenshots of the documents, we saved them prior to their deletion.

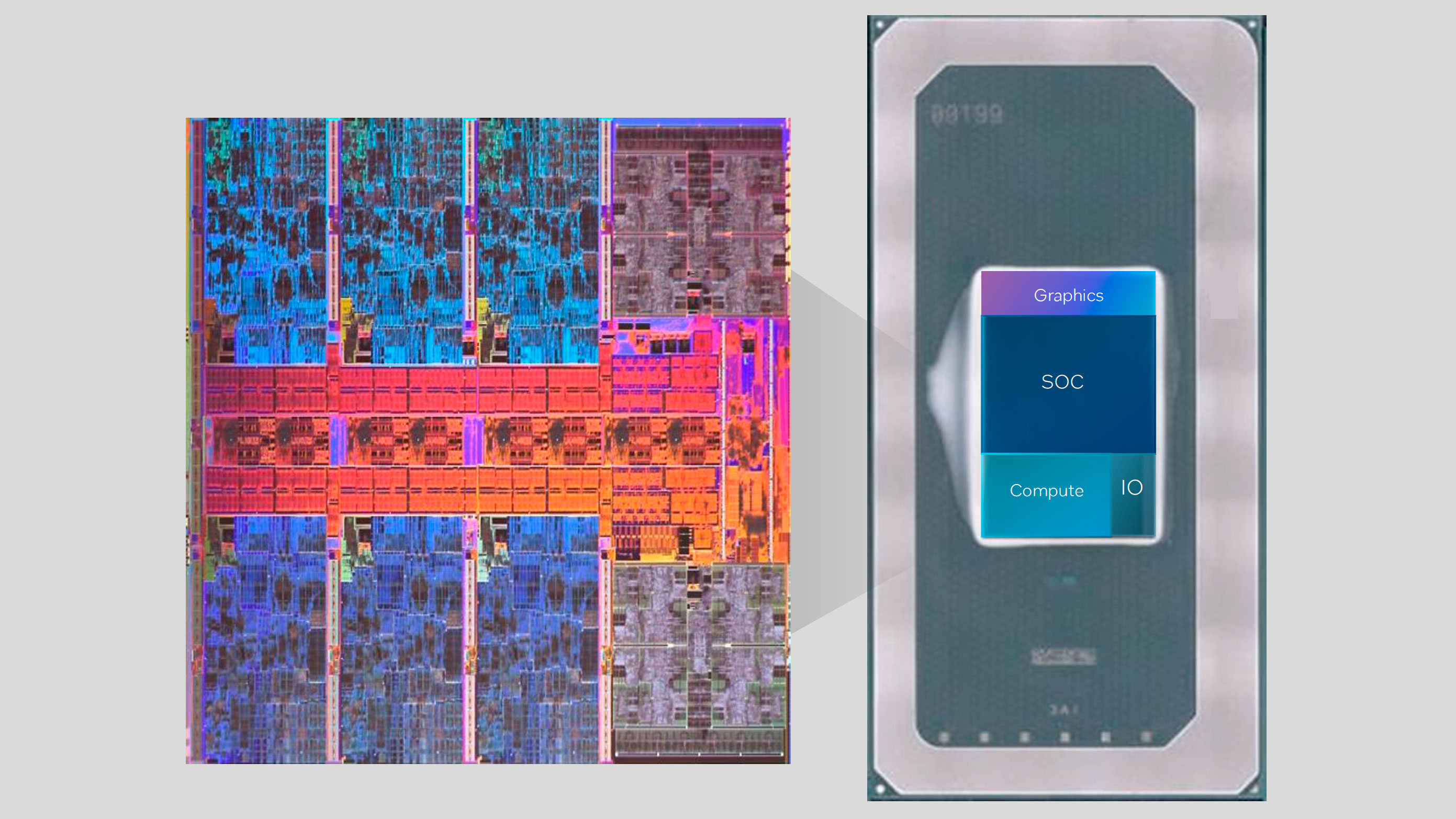

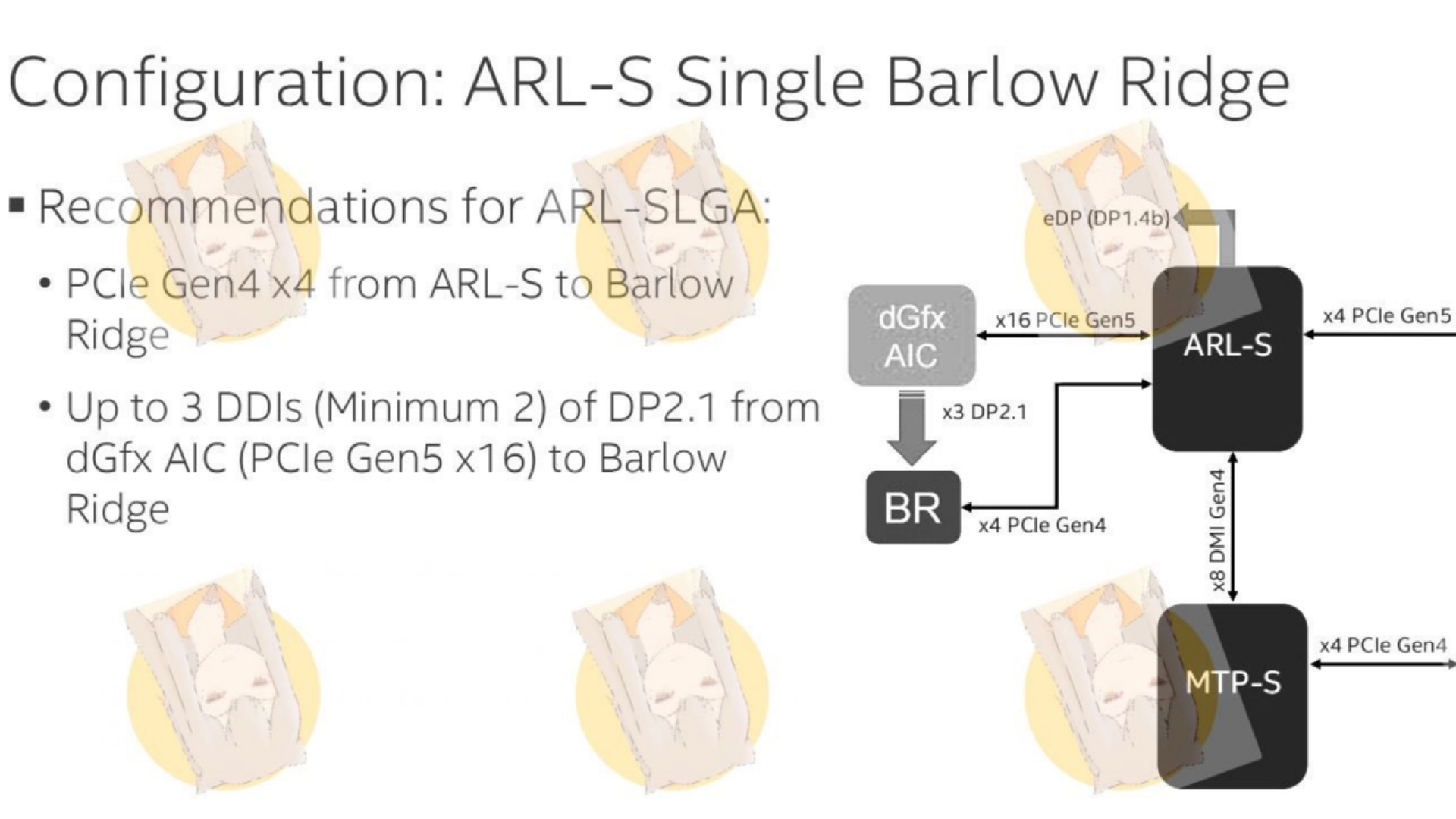

The leaked documents included a look at the PCIe lane map for Arrow Lake, which explicitly mentions Barlow Ridge, the Thunderbolt 5 controller that Intel introduced last September. Barlow Ridge connects to the host over four PCIe 4.0 lanes and offers 80Gbps of Thunderbolt bandwidth in both directions, twice that of both Thunderbolt 3 and Thunderbolt 4. Additionally, Barlow Ridge can do 120Gbps in one direction and 40Gbps in the other for special circumstances where that would improve performance.
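As a rough check on how a PCIe 4.0 x4 host link compares with the Thunderbolt 5 link rates quoted above, here is a minimal sketch. It assumes 128b/130b line encoding (used by PCIe 3.0 through 5.0) and ignores all other protocol overhead; the function name `pcie_gbps` and the rate table are illustrative and do not come from the leaked documents.

```python
# Per-lane transfer rates in GT/s for PCIe generations that use 128b/130b encoding.
PCIE_GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}


def pcie_gbps(gen: int, lanes: int) -> float:
    """Nominal one-direction throughput in Gbps after 128b/130b line encoding.

    Assumption: packet and protocol overhead beyond line encoding is ignored.
    """
    return PCIE_GT_PER_LANE[gen] * lanes * 128 / 130


if __name__ == "__main__":
    host_link = pcie_gbps(4, 4)
    print(f"PCIe 4.0 x4: {host_link:.2f} Gbps ({host_link / 8:.2f} GB/s) per direction")
    # Compare against the Thunderbolt 5 link rates mentioned in the article.
    for tb5_rate in (80, 120):
        print(f"TB5 {tb5_rate} Gbps link is {tb5_rate / host_link:.2f}x the host link")
```

Under these assumptions, a PCIe 4.0 x4 link tops out at roughly 63Gbps per direction, so the 80Gbps and 120Gbps figures presumably describe the Thunderbolt link as a whole (which also carries display and USB traffic) rather than PCIe data throughput alone.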

This will be the first time Thunderbolt 5 comes to desktops and the second generation of CPUs it appears in. Currently, Thunderbolt 5 is only present in certain Raptor Lake HX-equipped laptops where manufacturers have opted to install a Barlow Ridge chip. It's not completely clear if Barlow Ridge will be integrated into Arrow Lake CPUs themselves, or will be featured on certain motherboards.

The PCIe lane map also indicates that Intel has increased the number of PCIe 5.0 lanes, bringing the total up to 20. This is an important number because a GPU uses 16 lanes and an SSD uses four, which means Arrow Lake desktops can fully populate all PCIe lanes on a GPU and an SSD at the same time. Current-generation Raptor Lake CPUs offer only 16 PCIe 5.0 lanes plus four PCIe 4.0 lanes.

When a user installs both a GPU and a PCIe 5.0 SSD into a 12th, 13th, or 14th Gen PC, the two parts are forced to share the 16 PCIe 5.0 lanes. The SSD gets the full four lanes it needs, while the GPU gets just eight of the 12 remaining lanes, with four going unused (or used by another PCIe 5.0 SSD). With PCIe 5.0 GPUs on the horizon and newer generations of PCIe 5.0 SSDs coming out steadily, it's good timing for Intel to finally launch a platform that can give the full number of lanes to both GPUs and SSDs.
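The lane arithmetic above can be sketched as follows. This is a simplified model, not how any specific motherboard routes lanes: it assumes each PCIe 5.0 SSD takes four lanes from the CPU's PCIe 5.0 pool and that the GPU then trains at the widest power-of-two width that still fits. The function name and parameters are hypothetical; the lane counts (16 for Raptor Lake, 20 for Arrow Lake) are the ones given in the article.

```python
def gpu_width_after_ssds(gen5_lanes: int, gen5_ssds: int,
                         ssd_width: int = 4, gpu_max: int = 16) -> int:
    """Widest power-of-two GPU link that fits after the Gen5 SSDs take their lanes.

    Assumption: real bifurcation options (for example x8/x8 or x8/x4/x4)
    are approximated by halving the GPU width until it fits.
    """
    remaining = gen5_lanes - gen5_ssds * ssd_width
    width = gpu_max
    while width > 0 and width > remaining:
        width //= 2  # x16 -> x8 -> x4 -> x2 -> x1 -> 0 (no lanes left)
    return width


if __name__ == "__main__":
    platforms = (("Raptor Lake", 16), ("Arrow Lake (per leak)", 20))
    for name, lanes in platforms:
        width = gpu_width_after_ssds(lanes, gen5_ssds=1)
        print(f"{name}: GPU runs at x{width} alongside one PCIe 5.0 SSD")
```

Under these assumptions, a 16-lane pool leaves the GPU at x8 once a single PCIe 5.0 SSD is attached (and a second SSD fits in the leftover four lanes), while a 20-lane pool keeps the GPU at x16.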


Matthew Connatser is a freelancing writer for Tom's Hardware US. He writes articles about CPUs, GPUs, SSDs, and computers in general.


Ogotai

yep.. still not enough..

when you add hardware, and lanes are shared, or something else loses lanes, like the gpu... there are issues. to use the hardware better, there needs to be enough lanes for everything

suryasans

In the PC market, few devices can take this massive bandwidth; among them are NVMe-to-Thunderbolt 5 adapters and external dGPU enclosures for Thunderbolt 5.

Notton

> Ogotai said:
> yep.. still not enough..
>
> when you add hardware, and lanes are shared, or something else loses lanes, like the gpu... there are issues. to use the hardware better, there needs to be enough lanes for everything

get a HEDT then?

16x 5.0 lanes is overkill for current GPUs. These GPUs take up 3 to 4 slots when desktop cases only feature up to 7 slots anyways.

Like what are you trying to run? 7x Apex storage X21's?

cyrusfox

> Ogotai said:
> yep.. still not enough..
>
> when you add hardware, and lanes are shared, or something else loses lanes, like the gpu... there are issues. to use the hardware better, there needs to be enough lanes for everything

For the desktop space, I see limited utility and agree with your sentiment, but it is 2x to 3x what TB3/4 achieved, and for mobile, it's a great leap forward for bandwidth and improved applications of this technology (Docks, eGPU, Storage/networking). It helps remove proprietary standards like those currently in use (e.g. ROG xg mobile connector which has 8x lanes pcie gen 4 so 128gbps compared to the 120gbps TB5 can offer).

USB-C spec is going to get more and more complicated

Ogotai

> Notton said:
> get a HEDT then?
>
> 16x 5.0 lanes is overkill for current GPUs. These GPUs take up 3 to 4 slots when desktop cases only feature up to 7 slots anyways.
>
> Like what are you trying to run? 7x Apex storage X21's?

ive looked, thread ripper, too expensive.

you are forgetting mobo makers are adding more and more m.2 slots, usb ports. while you may not need them, for my main comp, gpu, sound card, sata drives, and a raid card, pcie lanes quickly run out...

cyrus, wasnt talking about TB, i was referring to the " more pcie lanes " part.

ThomasKinsley

TB is a great standard and should become a mainstream solution. I don't know if it's industry fees that's holding it back, but it's a shame that it's taking so long.

abufrejoval

"Desktop" with 20 PCIe lanes?

That's no desktop, but basically a high-end mobile part being shoved into that range.

That might make sense to Intel, because it either allows them to drop a die variant or sell the older desktop chips as HEDT. Because AFAIK those are 28 lane devices on 12-14th Gen Intel and Zen 3-4 AMD.

On desktops GPUs don't need to share any of their 16 lanes with an SSD, because they have 4 extra lanes just for that.

On mobile parts and APUs it's pretty much as you describe. And while those make for great desktops too, they aren't in the same class.

So is this just a simple mistake or a sign of Intel trying to devaluate the good old PC middle class into using entry level hardware?

No amount of TB5 oversubscribing a PCIe v4 DMI uplink would wash away that marketing stunt, which Intel has previously pulled with PCIe v3 slots below a DMI link at v2 speeds...

TJ Hooker

> abufrejoval said:
> "Desktop" with 20 PCIe lanes?
>
> That's no desktop, but basically a high-end mobile part being shoved into that range.
>
> That might make sense to Intel, because it either allows them to drop a die variant or sell the older desktop chips as HEDT. Because AFAIK those are 28 lane devices on 12-14th Gen Intel and Zen 3-4 AMD.

The 20 lanes mentioned in this article are just those directly connected to the CPU, not including those provided through the chipset. Intel has never supported more than 20 direct CPU lanes on a mainstream platform, and AMD tops out at 24 (since AM4 anyway, don't remember what it was like pre-Zen). Unless you count those that are reserved for special purposes like connecting to the chipset, in which case Arrow Lake S would have 32 based on what's shown in this article.

bit_user

Hold my beer...

> Barlow Ridge is equipped with four PCIe 4.0 lanes, giving it 80Gbps worth of bandwidth in both directions, twice that of both Thunderbolt 3 and Thunderbolt 4. Additionally, Barlow Ridge can do 120Gbps in one direction and 40Gbps in another for special circumstances where that would improve performance.

Um... so PCIe 4.0 x4 has a nominal throughput of 7.88 GB/s, in each direction. That translates to just 63.04 Gbps. You don't get 80 Gbps (uni) out of that, and for darn sure don't get 120!

> It's not completely clear if Barlow Ridge will be integrated into Arrow Lake CPUs themselves, or will be featured on certain motherboards.

I think the fact that it has its own codename and is connected via PCIe pretty clearly tells us it's a discrete part.

> cyrusfox said:
> It helps remove proprietary standards like those currently in use (e.g. ROG xg mobile connector which has 8x lanes pcie gen 4 so 128gbps compared to the 120gbps TB5 can offer).

I hope you don't need all of those Gbps, then.

** takes a sip **

bit_user

> Ogotai said:
> ive looked, thread ripper, too expensive.

Mainstream sockets have enough bandwidth. The solution to the lane-count problem could be just a handful of motherboards with a mainstream socket and a PCIe switch attached to the PCIe 5.0 x16 link. Its 63 GB/s of bandwidth provides plenty to go around, since you'd never have all devices trying to use 100% of their share at the exact same time.