AMD enhances multi-GPU support in latest ROCm update: up to four RX or Pro GPUs supported, official support added for Pro W7900 Dual Slot

The new update also includes beta support for Microsoft's Windows Subsystem for Linux

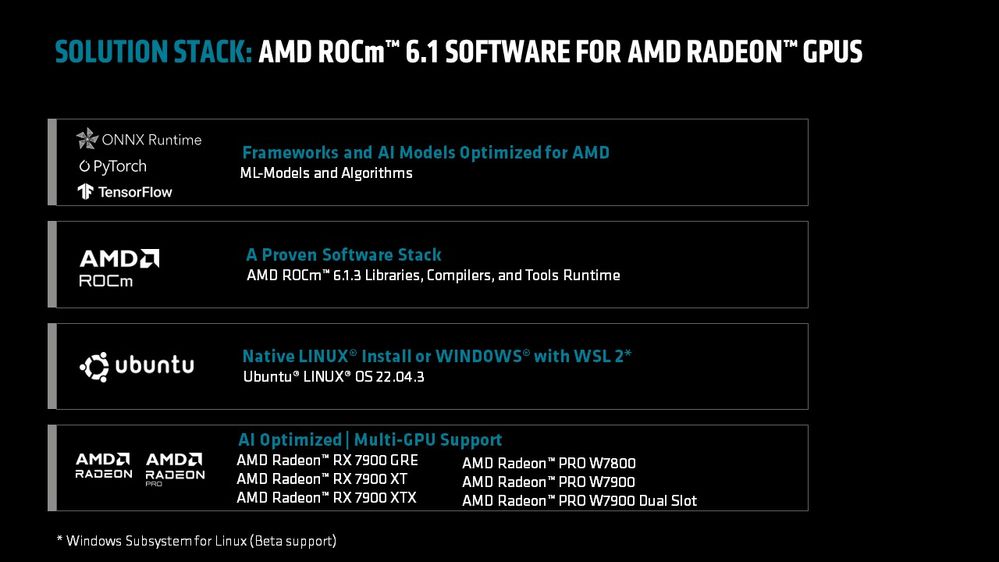

AMD has released ROCm 6.1.3, an update to its open-source software stack that focuses mainly on multi-GPU support. The release adds support for up to four qualified GPUs in a single machine, beta support for Windows Subsystem for Linux, and qualification of the TensorFlow framework.

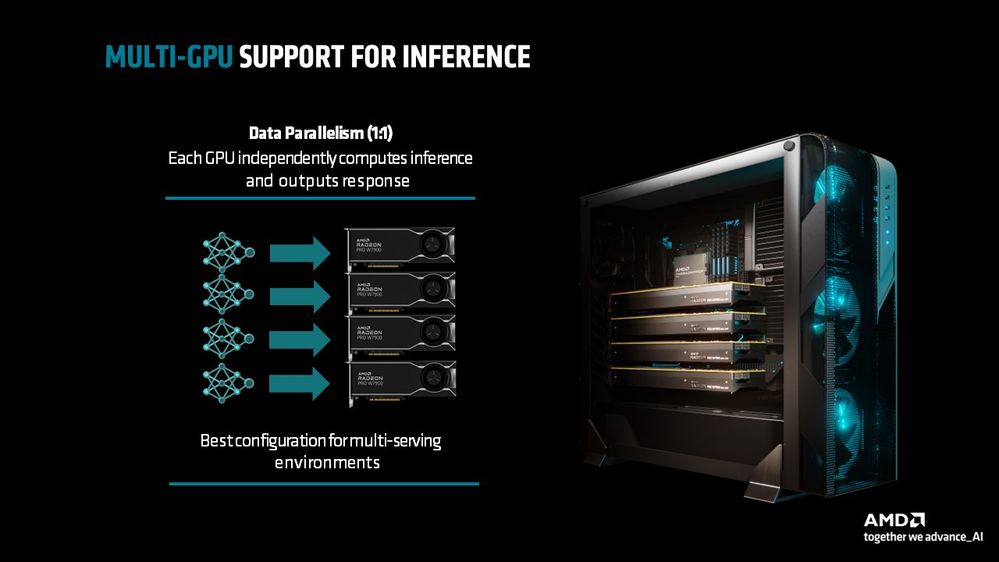

The headline change is the expanded multi-GPU support: ROCm users can now spread workloads across up to four GPUs in one system to cut processing time. Surprisingly, the qualified list includes not only some of AMD's Pro-series cards but also a few of the company's gaming-oriented RX-series GPUs.

The GPUs officially supported in ROCm's multi-GPU configurations are the Radeon RX 7900 XTX, RX 7900 XT, and RX 7900 GRE on the consumer side, and the Radeon Pro W7800, Pro W7900, and the all-new Pro W7900 Dual Slot on the workstation side.

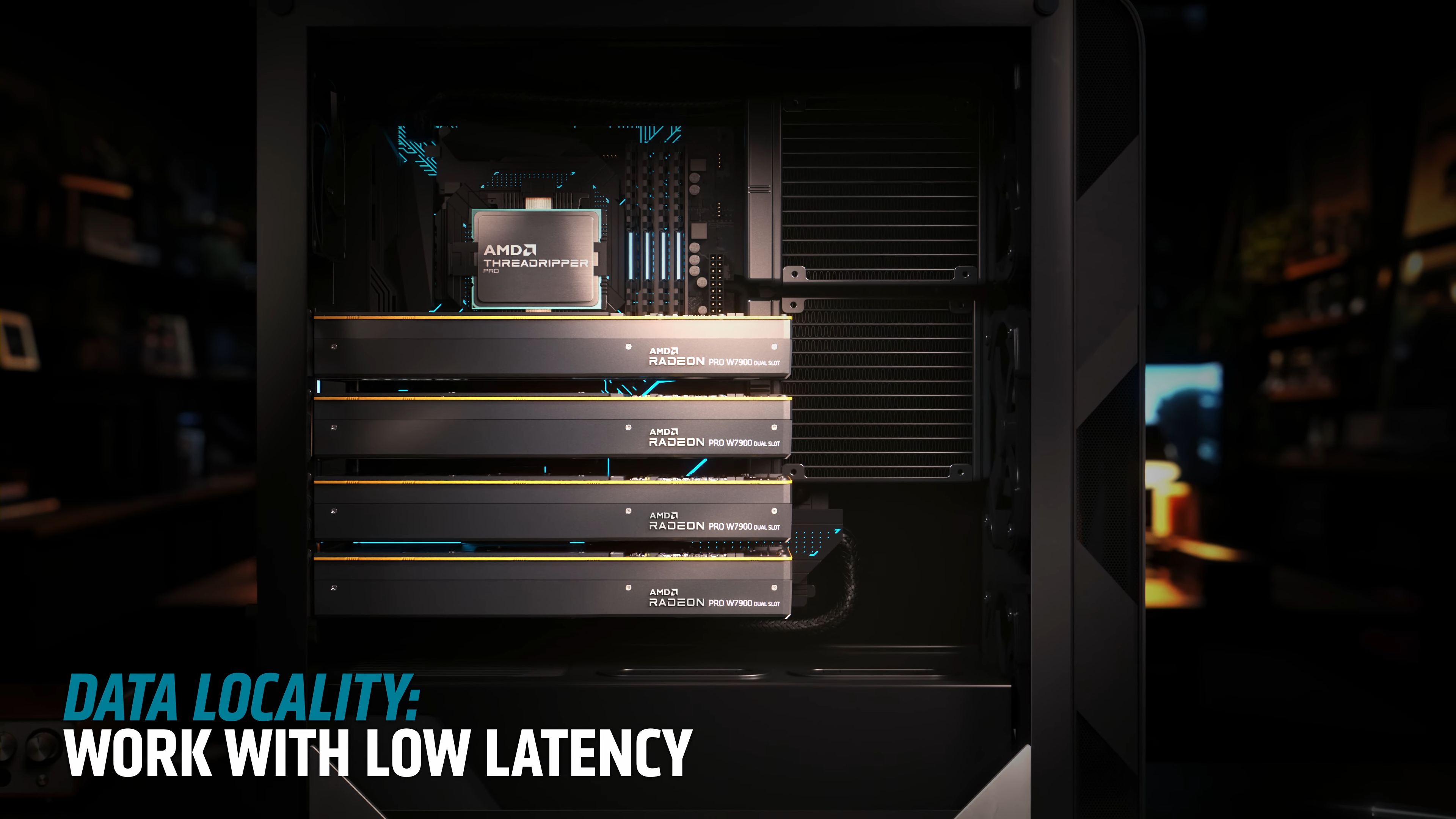

The enhanced multi-GPU support is aimed at building scalable AI desktops for hardware-accelerated AI workflows. GPUs are the cornerstone of high-performance AI, so putting up to four high-end Radeon GPUs in a single workstation can significantly increase a system's AI performance over a single GPU, provided the workload scales well across cards.

ROCm 6.1.3 also adds official support for the dual-slot variant of AMD's W7900 workstation GPU. For full details about the card, you can check out our previous coverage. In short, the new GPU is a slimmer version of the original triple-slot W7900, with a dual-slot blower-style cooler. The best part is that AMD made no compromises on specs: the W7900 Dual Slot uses the exact same fully enabled Navi 31 die as its triple-slot counterpart.

The W7900 Dual Slot was designed primarily with AMD's new ROCm update in mind. Its dual-slot design lets users fit up to four W7900 Dual Slot GPUs in a single machine.

Microsoft's Windows Subsystem for Linux (WSL 2) is now supported in beta with ROCm 6.1.3. This lets Windows machines use AMD's ROCm software stack from a Linux environment running inside Windows. It extends what WSL can do and lets users run more Linux software on Windows without needing a dedicated Linux installation.


Last but not least, AMD has announced qualification of the TensorFlow framework with ROCm 6.1.3. This expands AMD's AI software support, which already includes PyTorch and ONNX. TensorFlow is an open-source framework for building machine learning models and deploying them across a wide range of environments.

Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

-

oofdragon

Where is CrossFire??? They surely can make it in 2024, since they did it in 2014 and 2004? The 8800 XT will be around a 7900 XT for $500, they say; well, make it CF and it will beat the 4090, no problem.

oofdragon

I've always been AMD, but next gen I want 4090 performance at 1440p and/or at least 1.3x 4090 performance at 4K. I hope they make it somehow or I'll have to go Nvidia 😭

abufrejoval

oofdragon said: "Where is CrossFire??? They surely can make it in 2024, since they did it in 2014 and 2004? The 8800 XT will be around a 7900 XT for $500, they say; well, make it CF and it will beat the 4090, no problem."

In both cases, the big issue is the extra headaches and diminishing returns from the extra GPUs.

It's like baking a cake or building a house: adding another person doesn't always double the output or halve the time. Add 5, 500 or 5,000 people and there's a good chance no cake or house will ever get finished, unless your problem suffers little from Amdahl's law and your process is redesigned to exploit that.
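A minimal sketch of the Amdahl's-law point above, assuming a workload with a fixed parallel fraction `p` and zero communication cost (the function name `amdahl_speedup` and the 90% figure are illustrative, not from the comment). Even in this ideal case, speedup is capped at 1 / (1 - p) no matter how many workers are added:

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Ideal speedup under Amdahl's law: S(n) = 1 / ((1 - p) + p / n).

    Assumes the parallel part divides perfectly across workers and that
    coordinating them costs nothing, which real multi-GPU setups never achieve.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    p = 0.9  # hypothetical: 90% of the work parallelizes
    for n in (1, 2, 4, 5, 500, 5000):
        print(f"{n:>5} workers: {amdahl_speedup(p, n):.2f}x")
    print(f"ceiling: {1.0 / (1.0 - p):.2f}x")
```

With p = 0.9, four workers give roughly 3.1x and even 5,000 workers stay under 10x. Because this model leaves out communication overhead, it is optimistic: once every added worker also adds coordination cost, total throughput can actually fall.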

It's very hard to actually gain from the extra GPUs, because communicating between GPUs at PCIe speeds instead of through local VRAM is like going from sending an in-house e-mail to hand-writing and hand-delivering a letter as soon as it needs to reach someone in the next building.
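To put rough numbers on the analogy, here is a back-of-the-envelope sketch. It assumes theoretical PCIe link rates (16 GT/s per lane for PCIe 4.0, 128b/130b encoding, protocol overhead ignored) and a 960 GB/s memory bandwidth for the RX 7900 XTX, taken as an assumption from the card's published spec; the helper names are illustrative:

```python
def pcie_bandwidth_gb_s(gt_per_s: float, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 3.0+ link in GB/s.

    Each lane moves gt_per_s gigatransfers per second; 128b/130b encoding
    leaves 128 payload bits out of every 130, and 8 bits make a byte.
    """
    return gt_per_s * lanes * (128 / 130) / 8


def transfer_ms(gigabytes: float, bandwidth_gb_s: float) -> float:
    """Time in milliseconds to move `gigabytes` at a sustained bandwidth."""
    return gigabytes / bandwidth_gb_s * 1000.0


PCIE4_X16 = pcie_bandwidth_gb_s(16.0, 16)  # about 31.5 GB/s
VRAM_7900_XTX = 960.0  # GB/s, assumed from the card's spec sheet

if __name__ == "__main__":
    payload_gb = 1.0  # hypothetical 1 GB of weights or activations
    print(f"PCIe 4.0 x16: {transfer_ms(payload_gb, PCIE4_X16):.1f} ms")
    print(f"local VRAM:   {transfer_ms(payload_gb, VRAM_7900_XTX):.2f} ms")
    print(f"ratio:        {VRAM_7900_XTX / PCIE4_X16:.0f}x")
```

Even on paper, before protocol overhead, moving data to the neighboring card is roughly 30 times slower than reading it from local memory.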

In some cases, like mixture-of-experts models, there are natural borders you can exploit. I've also experimented with an RTX 4070 and an RTX 4090 for the likes of Llama-2, because they were the only cards I could fit into a single workstation. Some frameworks give you fine control over which layers of the network to load on which card, so you can exploit the places where layers are less connected.
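As a rough illustration of that kind of manual split, the sketch below gives each GPU one contiguous block of layers in proportion to its VRAM. `split_layers` is a hypothetical helper, not any particular framework's API, and it assumes every layer is the same size and ignores the KV cache and embeddings. The 40-layer count matches Llama-2 13B; 12 GB and 24 GB are the RTX 4070 and RTX 4090 capacities:

```python
from typing import List, Tuple


def split_layers(num_layers: int, vram_gb: List[float]) -> List[Tuple[int, int]]:
    """Assign each GPU a contiguous half-open layer range [start, end).

    Layer counts are proportional to each GPU's VRAM; the largest-remainder
    method makes the counts add up exactly to num_layers.
    """
    if num_layers < 0 or not vram_gb or any(v <= 0 for v in vram_gb):
        raise ValueError("need a non-negative layer count and positive VRAM sizes")
    total = sum(vram_gb)
    quotas = [num_layers * v / total for v in vram_gb]
    counts = [int(q) for q in quotas]  # floor, since quotas are non-negative
    leftover = num_layers - sum(counts)
    # Hand the remaining layers to the GPUs with the largest fractional parts.
    by_remainder = sorted(
        range(len(quotas)), key=lambda i: quotas[i] - counts[i], reverse=True
    )
    for i in by_remainder[:leftover]:
        counts[i] += 1
    ranges = []
    start = 0
    for count in counts:
        ranges.append((start, start + count))
        start += count
    return ranges


if __name__ == "__main__":
    for gpu, (start, end) in enumerate(split_layers(40, [12.0, 24.0])):
        print(f"GPU {gpu}: layers {start}..{end - 1}")
```

Keeping each GPU's layers contiguous means only the hidden state passed between adjacent layers has to cross the PCIe link, once per boundary, which is why choosing the split points matters more than the raw compute on each card.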

But in most cases it just meant that token rates dropped to the 5 tokens/s you also get with pure CPU inference, because that's simply what an LLM on normal DRAM and PCIe 4.0 x16 will give you, no matter how much compute you put into the pile.
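The reasoning behind that ceiling can be sketched with a simple model: during single-stream decoding, an LLM reads roughly all of its weights once per generated token, so per-token time is the sum of bytes divided by bandwidth over every memory tier the weights sit in. The figures are assumptions for illustration only: a hypothetical 35 GB model, 1,008 GB/s for RTX 4090 VRAM and 83.2 GB/s for dual-channel DDR5-5200, all theoretical peaks, with compute time ignored:

```python
from typing import Iterable, Tuple


def decode_tokens_per_s(placements: Iterable[Tuple[float, float]]) -> float:
    """Upper bound on decode speed for a memory-bandwidth-bound LLM.

    placements: (gigabytes_of_weights, bandwidth_gb_s) for each memory tier.
    Assumes every weight is read once per token and compute is free, so the
    per-token time is the sum of bytes / bandwidth across tiers.
    """
    seconds_per_token = sum(gb / bw for gb, bw in placements)
    if seconds_per_token <= 0:
        raise ValueError("need at least some weights to read")
    return 1.0 / seconds_per_token


VRAM_4090 = 1008.0  # GB/s, assumed RTX 4090 spec
DDR5_DUAL = 83.2    # GB/s, assumed dual-channel DDR5-5200

if __name__ == "__main__":
    model_gb = 35.0  # hypothetical model size
    all_vram = decode_tokens_per_s([(model_gb, VRAM_4090)])
    mixed = decode_tokens_per_s([(24.0, VRAM_4090), (11.0, DDR5_DUAL)])
    all_dram = decode_tokens_per_s([(model_gb, DDR5_DUAL)])
    print(f"if it all fit in VRAM:   {all_vram:.1f} tok/s")
    print(f"24 GB VRAM + 11 GB DRAM: {mixed:.1f} tok/s")
    print(f"all in DRAM:             {all_dram:.1f} tok/s")
```

In this toy model, moving about a third of the weights off the GPU drops the bound from roughly 29 to roughly 6 tokens/s, and adding compute changes nothing, because compute never enters the formula.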

ML models or workloads need to be designed around very specific splits to suffer the least from a memory space that may be logically unified but is effectively partitioned by tight bottlenecks. So far that's a very manual job, and the result doesn't even port to a slightly different setup elsewhere.

systemBuilder_49

Except AMD is so stingy with PCIe lanes (only 24 on the 1000X-9000X CPUs) that this feature is USELESS to all but Threadripper customers. Nice one, AMD, democratizing AI for nothing but their richest customers!

LabRat 891

oofdragon said: "Where is CrossFire??? They surely can make it in 2024, since they did it in 2014 and 2004? The 8800 XT will be around a 7900 XT for $500, they say; well, make it CF and it will beat the 4090, no problem."

I recommend you take a look:

nuj4JOe7Tak

'CrossFire' is long gone now. Multi-GPU (mGPU) only works in Vulkan/DX12 titles that support it.

However, AMD has already figured out how to 'bond' GPUs together over Infinity Fabric. The feature simply hasn't been offered in the consumer space.

I may be incorrect, but I believe Infinity Fabric inter-GPU communication is involved in ROCm multi-GPU, too.

Amdlova

oofdragon said: "I've always been AMD, but next gen I want 4090 performance at 1440p and/or at least 1.3x 4090 performance at 4K. I hope they make it somehow or I'll have to go Nvidia 😭"

I gave up on AMD... I took the worst card from Nvidia, the infamous RTX 4060 Ti 16GB :)

Don't wait; go team green.

Get one cheap before the new cards come out.

DS426

systemBuilder_49 said: "Except AMD is so stingy with PCIe lanes (only 24 on the 1000X-9000X CPUs) that this feature is USELESS to all but Threadripper customers. Nice one, AMD, democratizing AI for nothing but their richest customers!"

Entry-level Threadripper (7960X) is not terribly expensive (~$1,400) and gives 88 usable PCIe lanes on the TRX50 platform. A quad 7900 XTX system will probably need that much CPU anyway, depending on the AI workload. We're still talking about performant, relatively low-cost AI systems here, not full-blown EPYC or Xeon servers.

BTW, AM4 had 24 lanes, but AM5 has 28, and they are PCIe 5.0 capable. I'm not sure the 7900 XTX needs more than PCIe 4.0 x8 bandwidth (maybe a small bottleneck?), so at least a dual-GPU setup seems feasible to me, since a minimum of 16 lanes are dedicated to PCIe slots.
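A quick lane-budget sketch of the two platforms being compared. It assumes the slot lanes are split evenly and that each GPU gets a power-of-two link width capped at x16 (real boards only offer specific bifurcation options), and it runs everything at PCIe 4.0 because the RX 7900 XTX is a PCIe 4.0 card; `per_gpu_link` is an illustrative helper:

```python
from typing import Tuple


def per_gpu_link(slot_lanes: int, num_gpus: int, gt_per_s: float = 16.0) -> Tuple[int, float]:
    """Return (lanes per GPU, theoretical GB/s per GPU) for an even split.

    Link width is rounded down to a power of two (x1, x2, x4, x8, x16) and
    capped at x16; bandwidth uses 128b/130b encoding (PCIe 3.0 and newer).
    """
    if num_gpus < 1 or slot_lanes < num_gpus:
        raise ValueError("need at least one lane per GPU")
    lanes = min(16, slot_lanes // num_gpus)
    lanes = 1 << (lanes.bit_length() - 1)  # largest power of two <= lanes
    return lanes, gt_per_s * lanes * (128 / 130) / 8


if __name__ == "__main__":
    # AM5: 16 lanes for the graphics slots; TRX50 with a 7960X: 88 usable lanes.
    for platform, lanes in (("AM5", 16), ("TRX50", 88)):
        for gpus in (2, 4):
            width, bw = per_gpu_link(lanes, gpus)
            print(f"{platform}, {gpus} GPUs: x{width} each, ~{bw:.1f} GB/s per GPU")
```

Under these assumptions, a dual-GPU AM5 build gets x8 per card (about 15.8 GB/s at PCIe 4.0) and four cards drop to x4, while TRX50 can run all four at x16 and still have 24 lanes left for storage and other devices.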

DS426

Amdlova said: "I gave up on AMD... I took the worst card from Nvidia, the infamous RTX 4060 Ti 16GB :) Don't wait; go team green. Get one cheap before the new cards come out."

Coming from an RX 6700 XT? Why?

Amdlova

DS426 said: "Coming from an RX 6700 XT? Why?"

My RX 6700 XT died playing Helldivers 2. It lived 13 months, and there was no RMA for me.

abufrejoval

systemBuilder_49 said: "Except AMD is so stingy with PCIe lanes (only 24 on the 1000X-9000X CPUs) that this feature is USELESS to all but Threadripper customers. Nice one, AMD, democratizing AI for nothing but their richest customers!"

Lanes come at a cost, actually a huge cost in terms of die area and power consumption.

AMD gives you options: Make do with 16 lanes on APUs, 24-28 lanes on "desktop" SoCs and plenty more with Threadripper and EPYC.

Not everyone wants to pay extra for extra lanes on lower tier SoCs.

And some may be able to make do without having the full complement of lanes for every GPU: in GPU mining a single lane was good enough while with LLMs even 64 PCIe v5 lanes may still be too slow to be useful.

In theory you could even use PCIe switches, which is what these ASMedia chips are, too.

Whether you're stingy with your money or AMD is stingy on the lanes is a difference in perspective that complaining cannot bridge.