RAID card delivers 128TB of NVMe storage at 28 GB/s speeds — HighPoint SSD7749M2 houses up to 16 M.2 2280 SSDs

128 TB of flash in a desktop workstation? Possible, says HighPoint.

HighPoint has introduced its new SSD7749M2 RAID card, which can house up to 16 M.2 SSDs and put up to 128 TB of flash memory into a typical desktop workstation. The card offers sequential read/write performance of up to 28 GB/s over a PCIe Gen4 x16 interface. These are massive specifications, and they come at a hefty price.

HighPoint's SSD7749M2 RAID card relies on the company's 'x48 lane PCIe Switching Technology,' presumably a very advanced PCIe switch (or several PCIe switches) that allocates 16 lanes of upstream bandwidth to the host platform and provides each SSD with two lanes of dedicated downstream bandwidth. According to HighPoint, this configuration preserves signal integrity, minimizes latency, and enables the SSD7749M2 to reach real-world transfer speeds of up to 28 GB/s.
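The lane and bandwidth figures above can be sanity-checked with some quick arithmetic. The following is a minimal sketch, not HighPoint's own math: the drive count, lane widths, 28 GB/s figure, and 128 TB capacity come from the article, while the per-lane rate is derived from the PCIe 4.0 signaling rate (16 GT/s with 128b/130b encoding) and ignores protocol overhead. The function names `lane_gb_per_s` and `card_budget` are hypothetical.

```python
# Rough PCIe lane and bandwidth budget for a 16-drive switch-based card.
# Article figures: 16 drives, x2 per drive, x16 upstream, 28 GB/s, 128 TB.
# The per-lane rate below is an assumption derived from the PCIe 4.0
# signaling rate; it ignores packet/protocol overhead, so real-world
# throughput will be lower than these ceilings.

GEN4_GT_PER_S = 16.0      # PCIe 4.0 raw signaling rate per lane (GT/s)
ENCODING = 128 / 130      # 128b/130b line-encoding efficiency


def lane_gb_per_s(gt_per_s: float = GEN4_GT_PER_S) -> float:
    """Usable GB/s per lane after line encoding (before protocol overhead)."""
    return gt_per_s * ENCODING / 8


def card_budget(drives=16, lanes_per_drive=2, upstream_lanes=16,
                claimed_gb_per_s=28.0, total_tb=128):
    """Hypothetical helper: lane count, link ceilings, and per-drive shares."""
    per_lane = lane_gb_per_s()
    upstream = upstream_lanes * per_lane
    downstream = drives * lanes_per_drive * per_lane
    return {
        "total_lanes": upstream_lanes + drives * lanes_per_drive,
        "upstream_gb_per_s": upstream,
        "downstream_gb_per_s": downstream,
        "oversubscription": downstream / upstream,
        "claimed_link_utilization": claimed_gb_per_s / upstream,
        "tb_per_drive": total_tb / drives,
        "claimed_gb_per_s_per_drive": claimed_gb_per_s / drives,
    }


if __name__ == "__main__":
    for key, value in card_budget().items():
        print(f"{key}: {value:.2f}")
```

Under these assumptions, 16 upstream lanes plus 32 downstream lanes add up to the 48 lanes in HighPoint's branding, the x16 Gen4 uplink tops out at roughly 31.5 GB/s (so the 28 GB/s claim is about 89% of the link ceiling), and reaching 128 TB implies 8 TB per M.2 drive.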

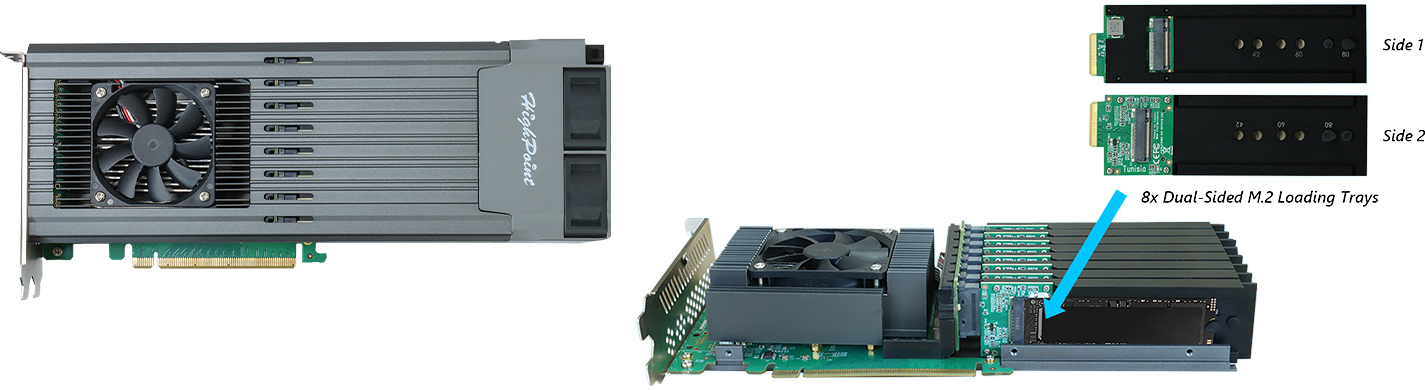

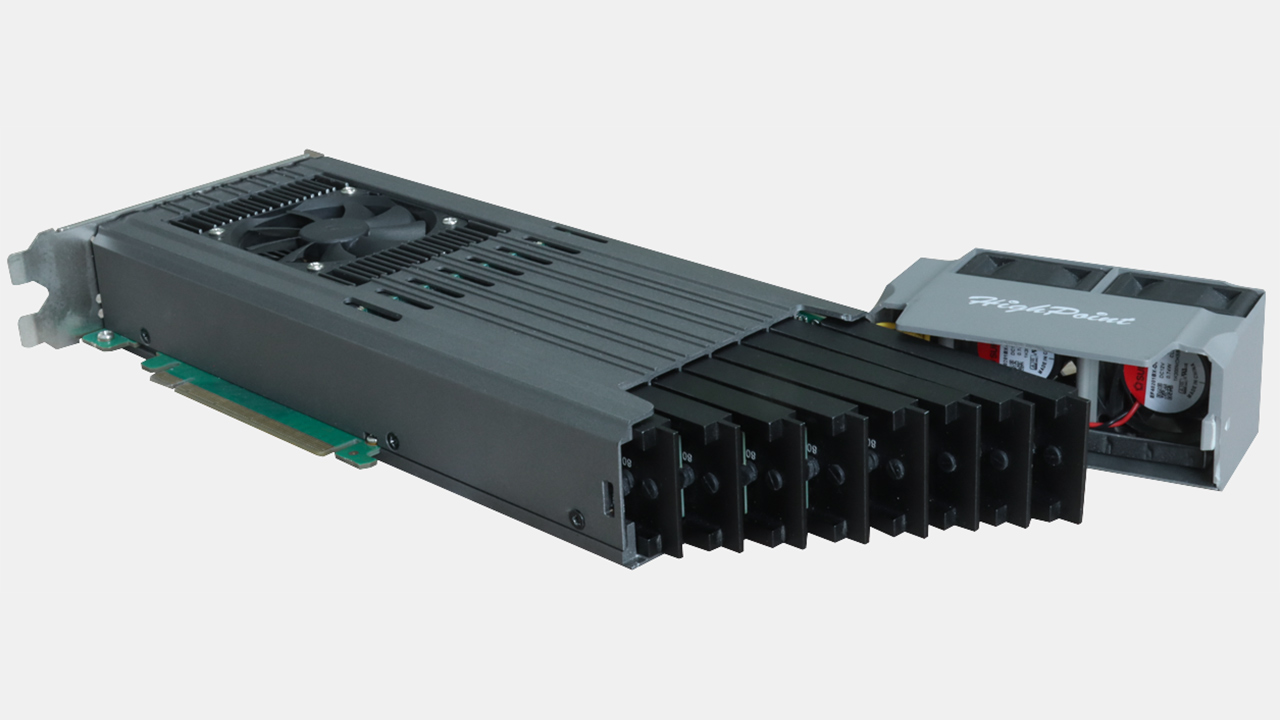

A notable design feature of the SSD7749M2 is dual-sided, vertically aligned M.2 trays, which can hold up to 16 M.2-2280 form-factor drives on a single side of the PCB. This opens doors to installing an SSD7749M2-based storage subsystem into regular desktop PCs and simplifies servicing. The beauty of such alignment is that these SSDs can carry a heatspreader to ensure consistent performance; the downside is that it limits compatibility to a small number of drives. HighPoint recommends Samsung 990 Pro or Sabrent Rocket 4 Plus drives.

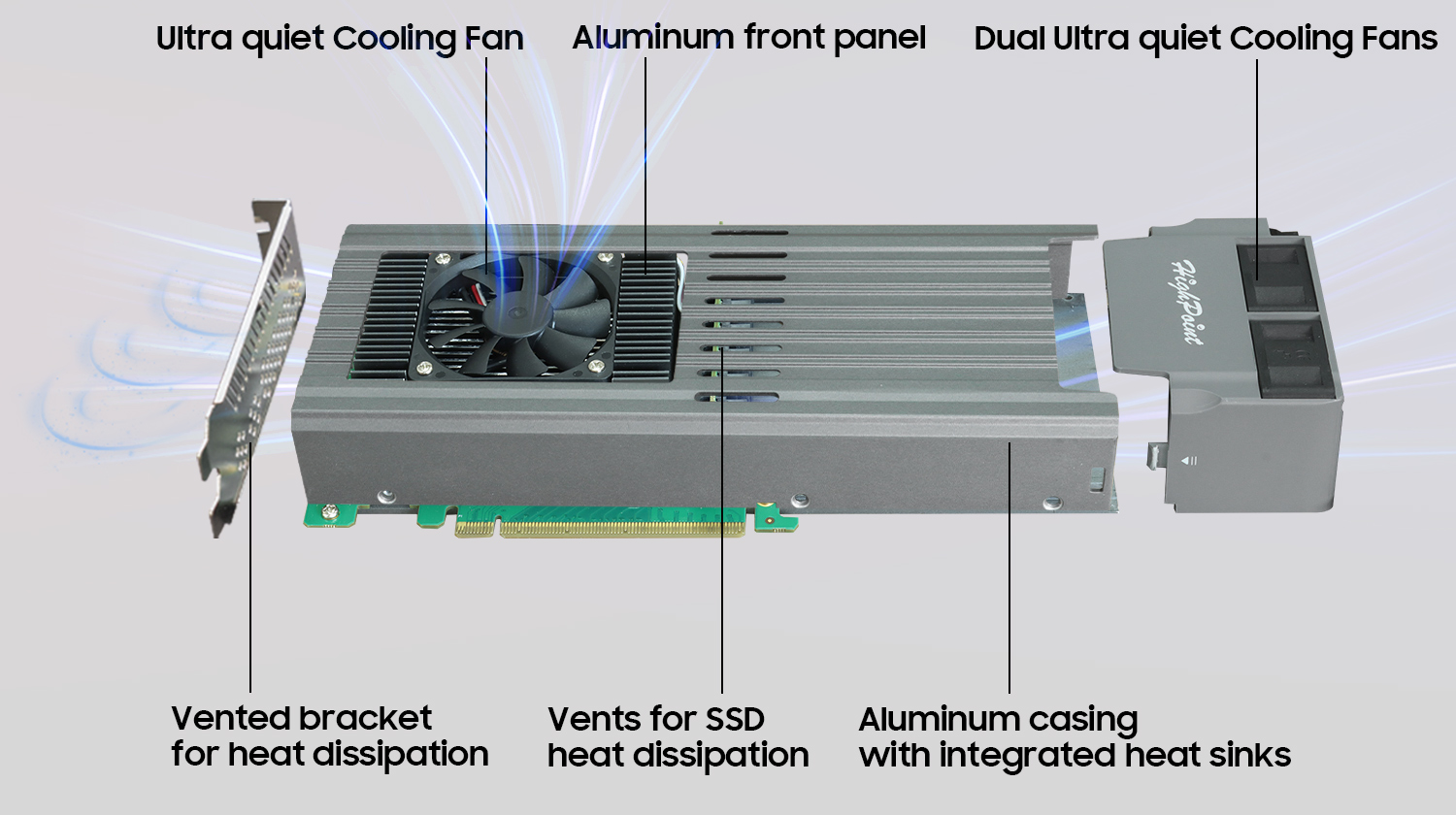

Speaking of cooling in general, the SSD7749M2 RAID card comes with an active cooling system for its PCIe switch (or switches?) and two high-pressure fans to cool down the drives. This, of course, means that the storage subsystem is probably loud, but then again, if you need 128 TB of NAND memory at 28 GB/s, some trade-offs are to be expected.

The SSD7749M2 is not exactly aimed at gaming desktops but rather at workstations and servers. The device features an advanced NVMe storage management and monitoring suite that allows administrators to configure and maintain the platform's NVMe ecosystem. It treats RAID arrays as single physical disks for versatile use as application drives, scratch disks, data archiving, or hosting bootable OSs and virtualization platforms.

Additionally, the SSD7749M2 is secured by the Gen4 Data Security Suite, which offers SafeStorage encryption and a hardware-secure boot to prevent unauthorized access and protect against malicious code during system boot-up. This ensures security even if the physical disks are lost or stolen.

The SSD7749M2, HighPoint's RAID add-in card (AIC), will start shipping in late August 2024 with a base model priced at $1999. Additional configurations, which include pre-installed SSDs like the Sabrent Rocket 4 Plus and Samsung 990 Pro, will be available, although their pricing has not yet been disclosed.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.


abufrejoval

I want to hit myself over the head without end for not thinking about this form factor myself: it solves so many issues at once in a very elegant manner!

Turning things 90° somehow seems extra hard for someone who grew up with Lego, while thinking general modularity is never a problem...

Of course the price of PCIe switches is still putting this way beyond what I'd be willing to pay when CPUs with an IOD with more capabilities and power cost less and deliver more.

Darryl_Fallon_222

What is supposed to be so "advanced" about that PCIe v4 switch?

There are quite a few PCIe v5 chips with plenty more lanes that could double that bandwidth easily.

Or more. The whole thing could be done with all PCIe v5 lanes, with a full 4 lanes for each M.2 stick.

Look at Microchip's portfolio.

Sure, those aren't cheap, but neither is this card.

This thing is literally just a friggin PCIe switch chip with a vent and a couple connectors.

abufrejoval

Quinn2_Santini3 said: What is supposed to be so "advanced" about that PCIe v4 switch?

The strategic purchase spree that allowed Avago/Broadcom to create an effective monopoly on PCIe switching and raise the price of commodity items far beyond SoCs that can do much more, I guess.

twotwotwo

A chaotic motherboard design would be to support bifurcation to the point you can connect 16 SSDs as PCIe 4.0 x1 devices; I wonder where things would break if you tried to do that.

As is, mobos with bifurcation + the four-drive cards + PCIe 4 SSDs having turned into the less expensive not-cutting-edge option => some pretty wild and economical-for-what-they-are setups are possible.

Rob1C

Beaten handily by Apex's 1/3rd more expensive cards, with 3x the warranty, more speed, more capacity, and lower latency. A PCIe 5 version is available soon, 50M IOPS.

abufrejoval

twotwotwo said: A chaotic motherboard design would be to support bifurcation to the point you can connect 16 SSDs as PCIe 4.0 x1 devices; I wonder where things would break if you tried to do that.

As is, mobos with bifurcation + the four-drive cards + PCIe 4 SSDs having turned into the less expensive not-cutting-edge option => some pretty wild and economical-for-what-they-are setups are possible.

I believe rigs with nearly all lanes exposed as x1 slots were a somewhat popular niche during GPU crypto-mining, very little "chaos" about them, just an option that perfectly conforms to PCIe standards.

Now would that break things? There is a good chance of that, because vendors often just test the most common use cases, not everything permissible by a standard.

So I'd hazard that a system with 16 M.2 SSDs connected via x1 might actually fail to boot, even if it really should.

But once you've got Linux running off SATA or USB, there is no reason why this shouldn't just work. And with a 4-lane PCIe slot often left for networking on standard PC boards, a 25Gbit Ethernet NIC on a PCIe v3 PC otherwise destined for the scrap yard could recycle a bunch of older NVMe drives. With a more modern base, 40Gbit might make sense, too.

For building leading-edge new stuff this isn't very attractive, I'd say. For getting some use out of stuff that's fallen behind in speed and capacity on its own, there may be potential, if time, effort and electricity happen to be cheap around you.

jalyst

Rob1C said: Beaten handily by Apex's 1/3rd more expensive cards, with 3x the warranty, more speed, more capacity, and lower latency. A PCIe 5 version is available soon, 50M IOPS.

Link???