AMD Radeon RX 570 4GB Review


Asus Strix RX 570 OC

Asus’ updated model sports a boost frequency of 1300 MHz and a memory clock rate of 1750 MHz. Both specifications represent minor steps up from the RX 470's 1270 MHz core and 1650 MHz GDDR5.
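To put "minor steps up" into numbers, a quick calculation using only the four clock rates quoted above gives the relative gains. The function name is ours and purely illustrative.

```python
def percent_gain(old_mhz: float, new_mhz: float) -> float:
    """Relative increase from old_mhz to new_mhz, in percent."""
    return (new_mhz - old_mhz) / old_mhz * 100.0

# Clock rates quoted in the text (MHz).
core_gain = percent_gain(1270, 1300)    # RX 470 core -> RX 570 boost
memory_gain = percent_gain(1650, 1750)  # RX 470 GDDR5 -> RX 570 GDDR5

print(f"Core clock:   +{core_gain:.1f}%")
print(f"Memory clock: +{memory_gain:.1f}%")
```

That works out to roughly 2.4 percent on the core and 6.1 percent on the memory.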

Unfortunately, the Strix RX 470 OC wasn't able to consistently maintain its rated frequencies due to its modest cooling solution and board layout. The question we're looking to answer today is whether the RX 570-based version changes anything. Naturally, we start by taking Asus' card completely apart to examine it.

Specifications

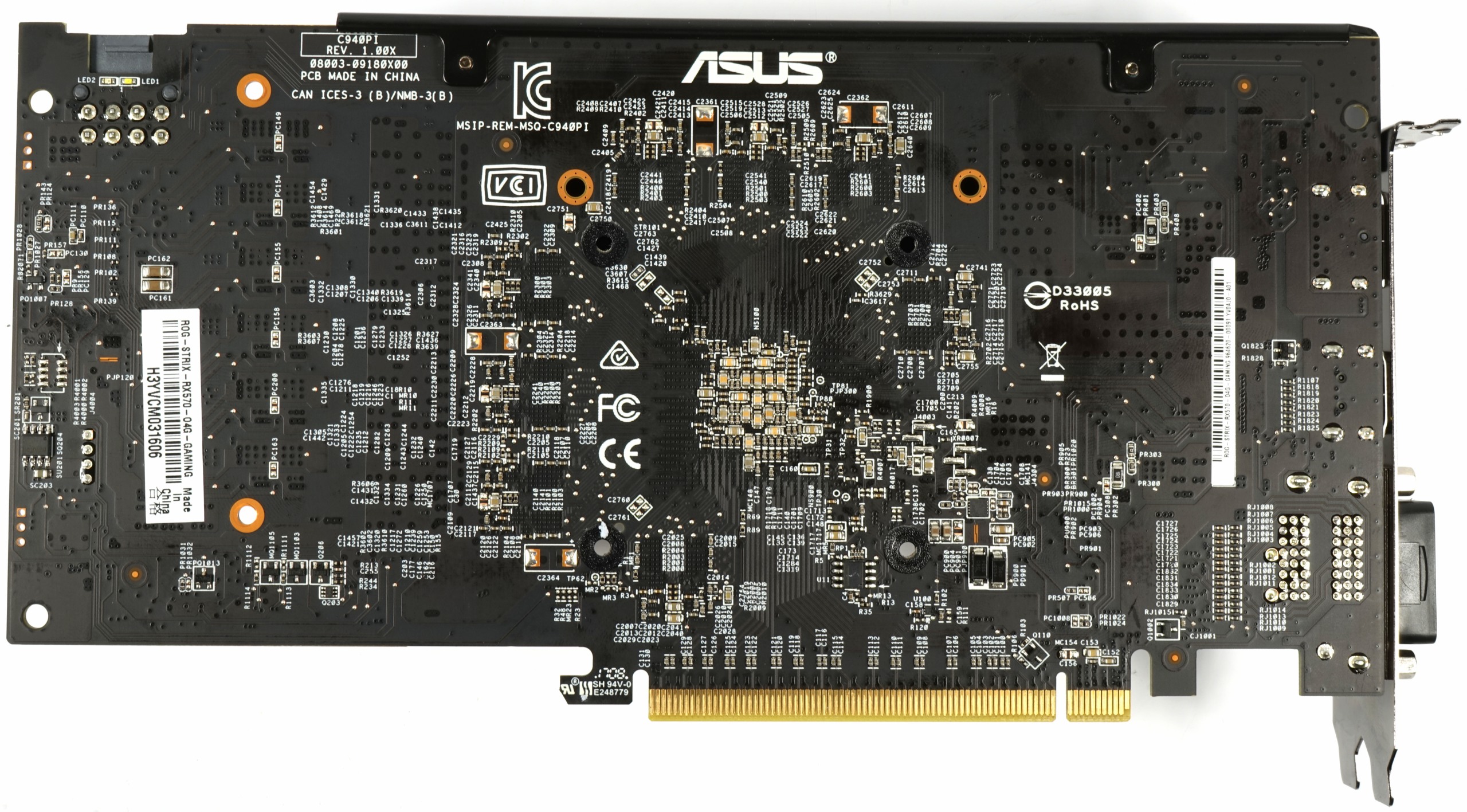

The Strix RX 570 OC looks fairly mundane and feels as mainstream as its price would indicate. A black plastic shroud covers the heat sink underneath, though metal fins are still visible through the fan's many blades. For less than $200, we expect practicality to take priority over gaudy extras, so the plain look comes as no surprise.

Asus' card weighs in at just 658g. From the outer edge of its slot bracket to the end of the cooler, the Strix RX 570 OC measures 24.2cm. Its height, from the motherboard slot's top edge to the protruding shroud, is 12.2cm. A depth of 3.5cm makes this a smaller dual-slot card. However, roughly 0.2cm of that measurement is attributable to a stabilization frame, which could affect compatibility with large CPU coolers or mini-ITX-based form factors.

Looking down from the top, we see the exposed heat sink, an illuminated ROG logo, and the eight-pin power connector.

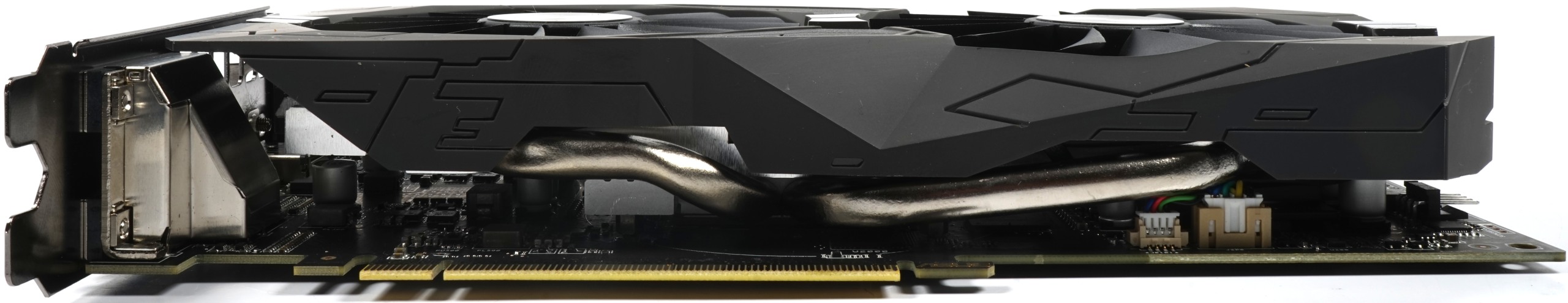

The bottom reveals two 6mm heat pipes made of nickel-plated composite material, along with more of the heat sink.

The cooler’s fins are arranged horizontally. Both ends of the card are left at least somewhat open to encourage air to pass through. Neither side allows for unobstructed flow, though, particularly since the slot bracket sports not just one, but two DVI-D connectors. A single DVI output would probably have been enough to accommodate gamers with older monitors, and we would have preferred more exhaust area over the second DVI port. Asus also exposes one HDMI 2.0 and one DisplayPort 1.4-ready connector.


Voltage Supply & Board Layout

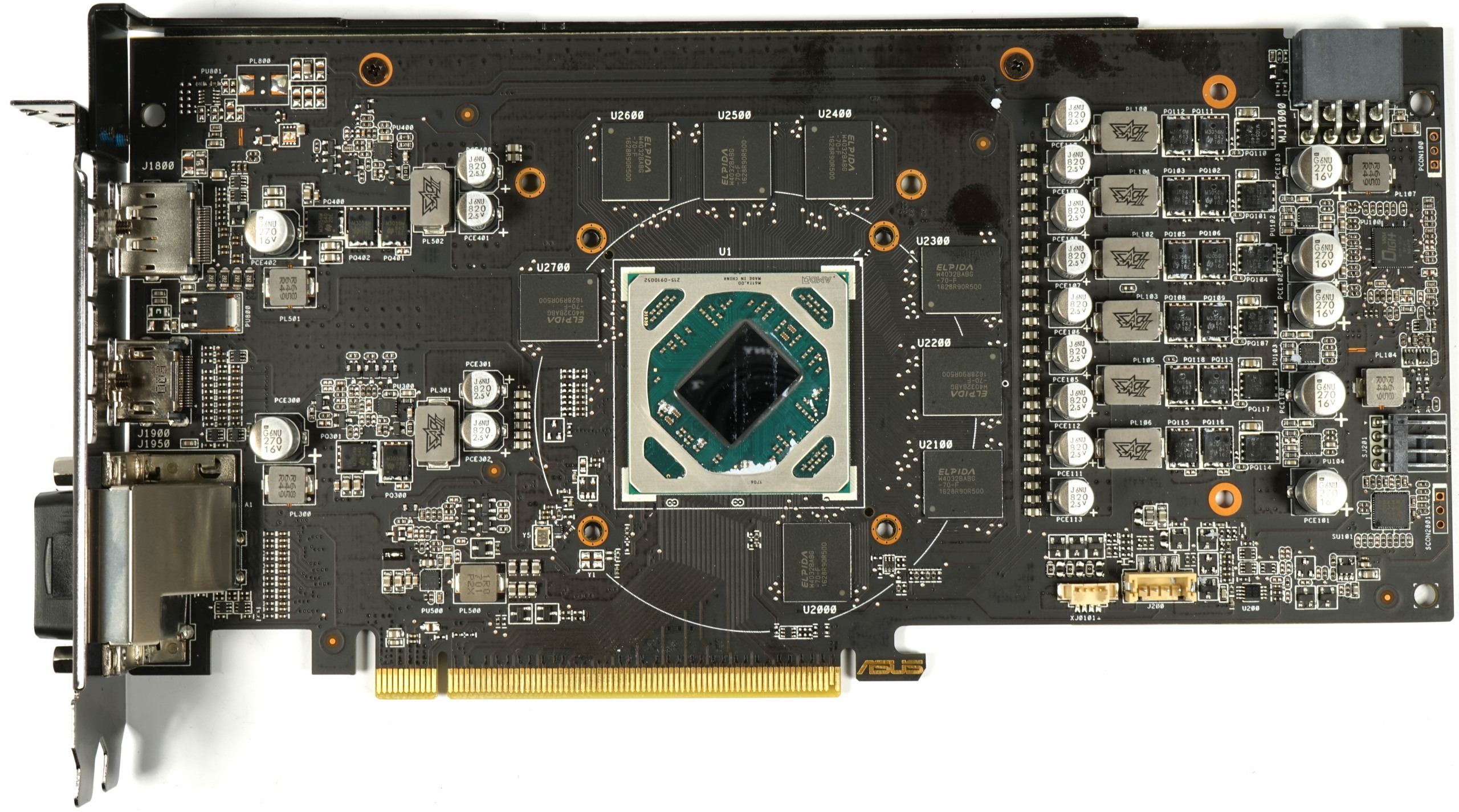

Asus' Strix RX 570 OC employs the company's own multi-layer PCB, and it's quite a bit different from the Strix RX 470 OC. To begin, the voltage supply moved to the board's right side, which makes sense when we consider the hot-spots those components create.

International Rectifier's ASP1300 PWM controller is labeled Digi+ for Asus. We might not have this particular component's specifications, but we can clearly see that there aren't six phases driving the GPU, but rather three.

The three phases rely on IR3598 dual drivers, each of which can be interleaved to drive two pairs of MOSFETs. This is known as phase doubling, even though each driver is simply splitting a single PWM signal. The idea is to create a voltage regulator with a high phase count while saving space and, ultimately, cost.
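As a rough illustration of the idea, the sketch below routes the pulses of one PWM signal alternately to two outputs. It is not a model of the IR3598's actual internals, which we don't have documentation for. The function name and the way pulses are represented (a list of start times) are assumptions made for the example.

```python
from typing import List, Tuple

def double_phase(pwm_pulses: List[float]) -> Tuple[List[float], List[float]]:
    """Split one PWM pulse train into two interleaved trains.

    pwm_pulses holds the start time of each pulse (arbitrary units).
    Pulses at even indices go to output A, pulses at odd indices go
    to output B, so each output sees half of the incoming pulses.
    """
    out_a = pwm_pulses[0::2]
    out_b = pwm_pulses[1::2]
    return out_a, out_b

# Three controller phases, each split in two, feed six power stages.
controller_phases = 3
power_stages = controller_phases * 2

# Hypothetical pulse train: one controller phase firing once per time unit.
pulses = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0]
out_a, out_b = double_phase(pulses)

print("Power stages:", power_stages)
print("Output A pulses:", out_a)
print("Output B pulses:", out_b)
```

Each output ends up switching at half the rate of the incoming signal, which is why the board can look like a six-phase design while the controller only generates three PWM signals.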

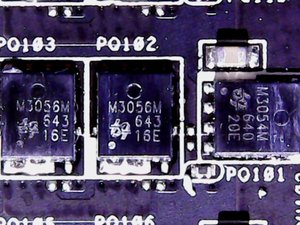

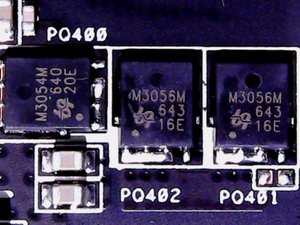

There’s one M3054 N-channel MOSFET for the high side and two M3056 N-channel MOSFETs for the low side, all of which come from UBIQ. The corresponding capped ferrite coils are labeled SAP II (Super Alloy Power), and they should help reduce coil whine across this card's temperature range.

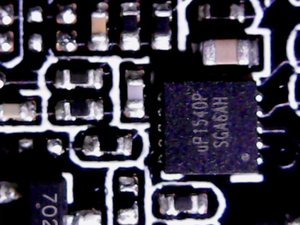

The memory’s voltage supply is realized through uPI Semiconductor's uP1540, a buck converter with synchronous rectification. This component also contains a bootstrap diode and the MOSFETs' gate driver. It’s not listed on uPI's website because it's an OEM-only product, though.

The MOSFETs are the same as what we found for the GPU's VR, though Asus looks to balance their load by powering memory through the motherboard slot.

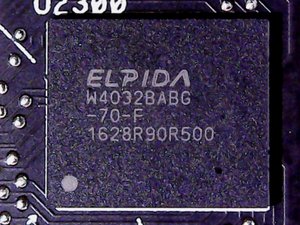

The Strix RX 570 OC's memory modules are supplied by Elpida (now Micron). Eight W4032BABG-70-F modules each offer 4Gb of density (16x 256Mb), for 4GB in total, with a maximum rated clock rate of 1750 MHz. These particular memory modules don’t have a lot of overclocking headroom, especially since they aren’t actively cooled. In the past, they didn't deal well with temperatures beyond their rated specifications.
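The memory figures can be checked with a few lines of arithmetic. The sketch below takes the module count, the 16x 256Mb organization, and the 1750 MHz clock from the text. The 32-bit interface per module and the four data transfers per clock are general GDDR5 characteristics that we're assuming here, not numbers from Asus' spec sheet.

```python
# Figures quoted in the text.
MODULES = 8
BLOCKS_PER_MODULE = 16      # the "16x" in "16x 256Mb"
MBIT_PER_BLOCK = 256
CLOCK_MHZ = 1750

# Assumptions (typical GDDR5 behavior, not taken from the article).
INTERFACE_BITS_PER_MODULE = 32
TRANSFERS_PER_CLOCK = 4

density_gbit = BLOCKS_PER_MODULE * MBIT_PER_BLOCK / 1024   # per module
capacity_gbyte = MODULES * density_gbit / 8                # whole card
bus_width_bits = MODULES * INTERFACE_BITS_PER_MODULE
data_rate_mtps = CLOCK_MHZ * TRANSFERS_PER_CLOCK           # per pin, MT/s
bandwidth_gbyte_s = data_rate_mtps * bus_width_bits / 8 / 1000

print(f"Density per module: {density_gbit:.0f} Gb")
print(f"Total capacity:     {capacity_gbyte:.0f} GB")
print(f"Bus width:          {bus_width_bits} bits")
print(f"Peak bandwidth:     {bandwidth_gbyte_s:.0f} GB/s")
```

Under those assumptions, the eight modules add up to the card's 4GB, and the rated clock corresponds to a theoretical peak of 224 GB/s.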

Asus likes its undocumented OEM parts; the ITE8915 is a controller and monitoring chip with little available information for us to dig into.

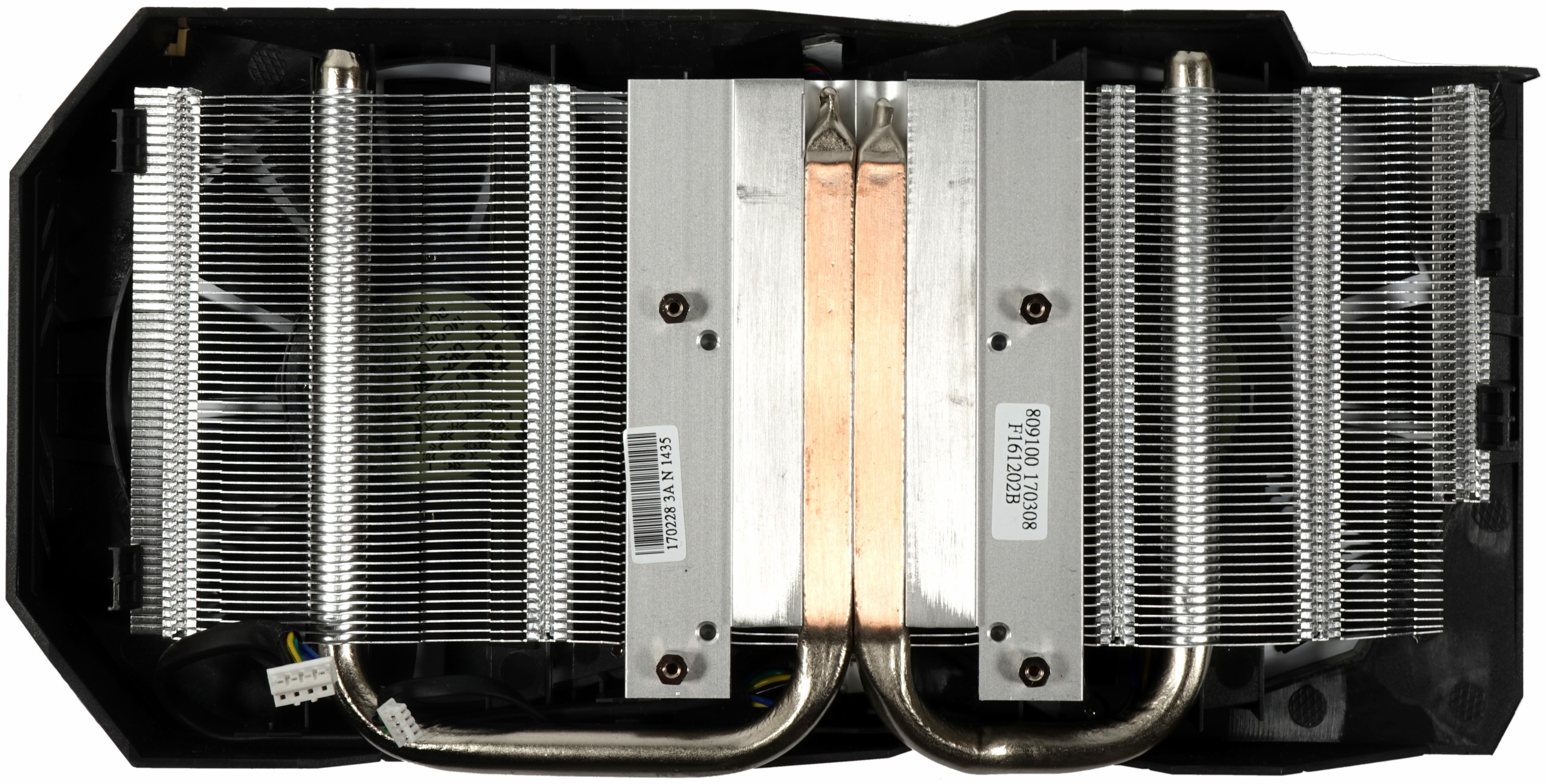

Cooling Solution

In spite of its large fans, the thermal solution seems small. The fans themselves have a diameter of 95mm, are made up of 13 blades, and run at a maximum speed of 2500 RPM. They're designed to deliver static and direct air pressure. They don’t just serve the cooler’s main body, but also have to provide airflow to the components underneath.

The fans' primary target on the board is the VRM's heat sink, a small black strip of extruded aluminum. It’s separated from the MOSFETs by a thermal pad and held in place by screws.

There’s no backplate to help with cooling, and the memory modules sitting on top of the PCB make do with air blowing over them as well.

The cooler weighs in at 340g. It sports two 6mm heat pipes made of nickel-plated composite material, which are flattened and pressed into the aluminum sink. This design is supposed to provide direct contact with the GPU. But marketing aside, it's primarily a cost-cutting measure.

Our measurements on an open bench table and in a closed case will show if Asus' smaller cooler can handle the waste heat generated by Ellesmere and the components surrounding it.


Igor Wallossek wrote a wide variety of hardware articles for Tom's Hardware, with a strong focus on technical analysis and in-depth reviews. His contributions have spanned a broad spectrum of PC components, including GPUs, CPUs, workstations, and PC builds. His insightful articles provide readers with detailed knowledge to make informed decisions in the ever-evolving tech landscape.

-

shrapnel_indie Again...

Confusion caused by re-branding existing hardware

Yet the exact same issue exists for the uninformed between the same gen GTX 1060 models (3GB and 6GB) which also differ in the available functioning parts of the GPU... There wasn't a big deal made about that, yet there seems to be with the Radeons. -

nzalog 19583803 said: Again...

Confusion caused by re-branding existing hardware

Yet the exact same issue exists for the uninformed between the same gen GTX 1060 models (3GB and 6GB) which also differ in the available functioning parts of the GPU... There wasn't a big deal made about that, yet there seems to be with the Radeons.

Uhh that's not quite the same. I get that your red hat might be on a little tight but RX570 and RX580 sound like a completely new gen card. Not a slightly overclocked RX470 and RX480. I was excited until I read into the actual specs. -

AndrewJacksonZA So basically it boils down to how much more it will cost for an RX570 over an RX470 for a 5%-10% improvement in performance.

Thanks for your efforts Igor, we appreciate it. :-) -

AndrewJacksonZA 19583990 said: if they only supported CUDA, i'll go definitively for it .. :(

Out of interest, what do you need CUDA support for?

-

josetesan For the sake of comparison,

see http://navoshta.com/cpu-vs-gpu/

According to amazon specs, g2.2xlarge does offer a gtx680/gtx770GPU, so , as you can see, speed increase is amazing !

Besides, i'd like a good gaming card . -

Roland Of Gilead 18 pages for that Final Conclusion. These 'new' cards from AMD are a joke. Cynical for AMD. For those that have zero or very little technical savvy, they will purchase these. For the more discerned among us, this is a non-story. C'mon AMD, give us something to cheer about!!! not being the 'also rans' who gave us good cards, and then re-released the same card the following year. Sick of this crap. -

AndrewJacksonZA 19584028 said: Machine Learning

Hooray for open standards like CUDA! /s

(Sorry, closed systems like that are a pet peeve of mine.) -

dstarr3 I keep wanting to do an AMD-based budget build, but... well, they just don't ever make anything that I feel is competitive. If eventually the price on this dropped to more like 1050 Ti prices, then absolutely, killer bang for the buck. But at the MSRP of $200, I'd rather spend just a little bit more and go for a 1060 6GB.

And in terms of CPUs, I'd like to see what budget Ryzen chips AMD can come up with before I pull the trigger. i3s don't have the core count, so AMD's already ahead, but their budget lineup is getting a bit long in the tooth right now.

Really, it's just not a compelling time to buy just about anything right now.