
As you'd expect, the packaging and features on the PowerColor RX 6400 ITX are about as budget as budget can get. You get a brown cardboard box with a few specs and other details, and inside, the card itself is wrapped in an anti-static bag. There's no padding or protection for the card, though ours arrived without incident and works fine.

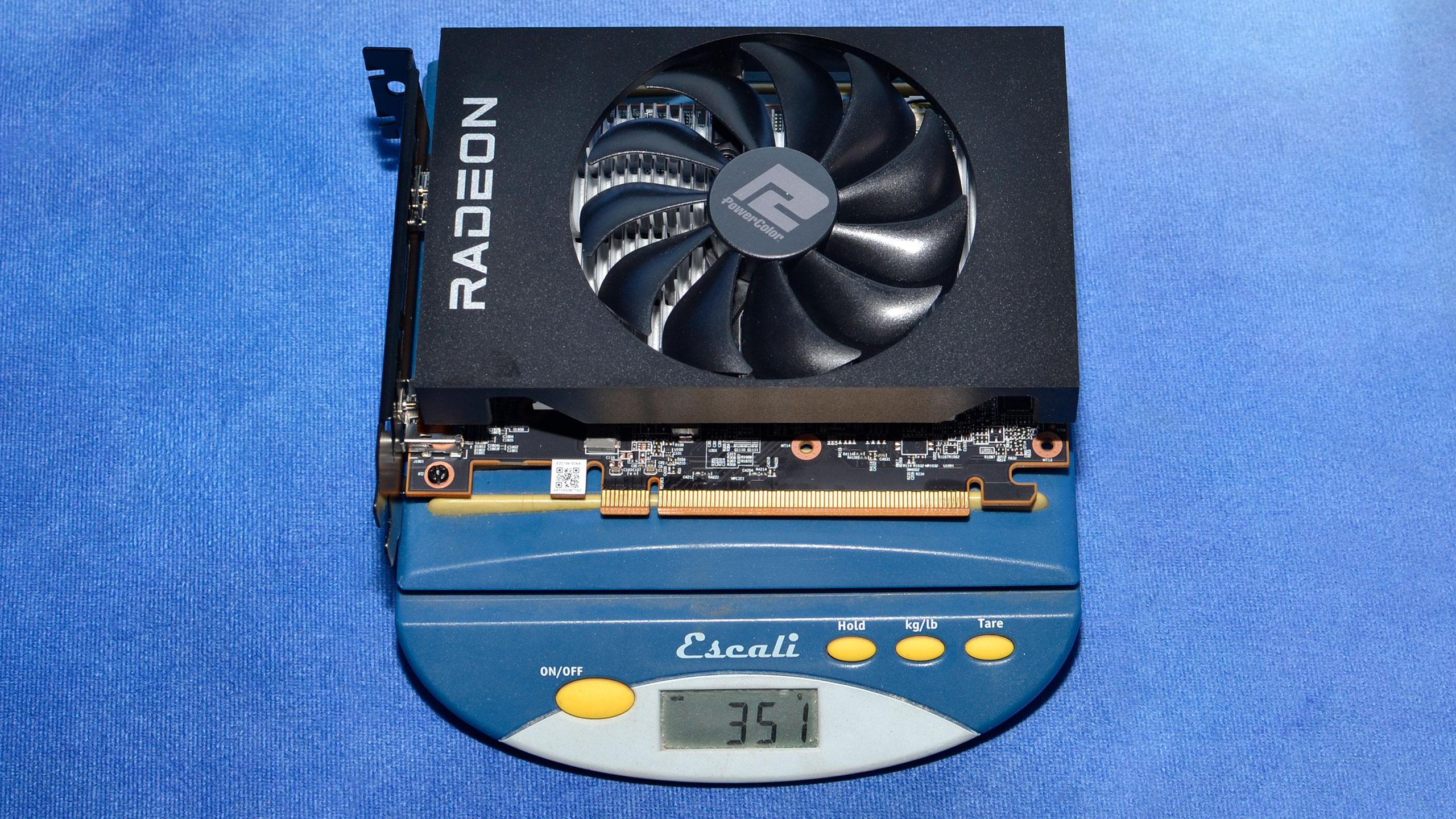

The RX 6400 is also a featherweight compared to typical cards like the PowerColor RX 6650 XT. It tips the scale at just 351g, making it one of the lightest cards we've had in-house in recent years, and half-height models would be even lighter. That also means the heatsink likely isn't a very high-quality model, and we saw slightly higher thermals as a result, though nothing we'd really worry about.

Dimensions for the card are 165x125x40mm, basically as long as your PCIe x16 slot, as tall as the expansion slot, and nothing more, though it is a dual-slot cooler. We bought the dual-slot card just because we wanted the theoretically best possible experience with the RX 6400. A half-height card with a tiny 40-50mm fan should probably perform just as well, given the low TBP, but we wanted to be sure.

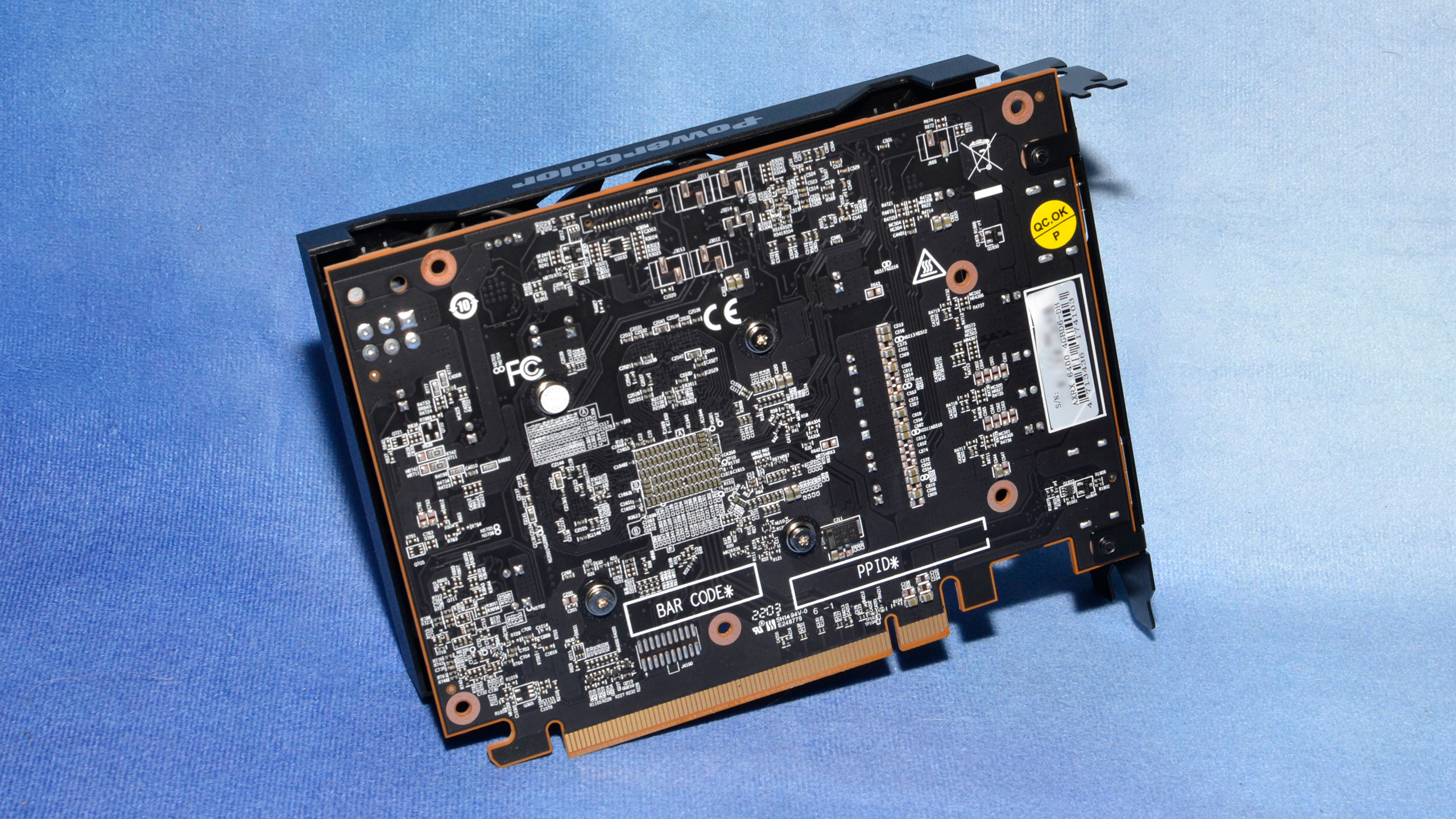

This is a barebones card with no lighting of any form, a good fit for cases that don't have windows or otherwise try to show off the PC hardware. The fan is a 90mm model with 11 blades, a 4-pin connector, and three mounting screws.

As noted earlier, there's no factory overclock or any ability for end-users to overclock the RX 6400, at least for now. So the PowerColor card in that sense is a fully reference configuration, with a 53W TBP (typical board power) and a 2321 MHz boost clock.

The 4GB GDDR6 comes clocked at 16Gbps, and the 64-bit interface means the GPU only has 128 GB/s of bandwidth, though AMD lists the 16MB Infinity Cache bandwidth at 208 GB/s. That cache bandwidth will help a lot more at lower settings that are less likely to exceed the cache capacity, as we saw with the RX 6500 XT.
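For reference, the 128 GB/s figure falls straight out of the memory specs: per-pin data rate times bus width, divided by eight bits per byte. Here's a minimal sketch of that arithmetic. The function and constant names are ours, purely for illustration, and the 208 GB/s cache figure is simply the number AMD lists.

```python
def memory_bandwidth_gbs(data_rate_gbps: float, bus_width_bits: int) -> float:
    """Peak memory bandwidth in GB/s.

    data_rate_gbps: per-pin data rate in gigabits per second (16 for this card's GDDR6)
    bus_width_bits: memory interface width in bits (64 for the RX 6400)
    """
    # Bits per second across the whole bus, converted to bytes per second.
    return data_rate_gbps * bus_width_bits / 8


# Figure AMD lists for the 16MB Infinity Cache, taken from the text above.
INFINITY_CACHE_GBS = 208.0

rx6400_gbs = memory_bandwidth_gbs(16, 64)
cache_ratio = INFINITY_CACHE_GBS / rx6400_gbs

print(f"RX 6400 GDDR6 bandwidth: {rx6400_gbs:.0f} GB/s")
print(f"Listed cache bandwidth is {cache_ratio:.3f}x the GDDR6 bandwidth")
```

In other words, the listed cache bandwidth is a bit over 1.6 times what the GDDR6 can deliver, which only helps when the working set actually fits in the 16MB cache.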


Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.


King_V

If ever there was a GPU that strode forth, and boldly declared "Meh," this is it. I will, however, grant it points for its performance/watt, relative to its competitors.

I would be very surprised if the price held up where it is, though. Then again, a quick look at PC Part Picker for a GDDR5 version of the GT 1030 is showing a single passively cooled model for $90 directly from Asus, and the rest at $114 and higher. The horrible DDR4 version is just as pricey, which elicits a big DoubleYoo Tee Eff?

Ouch.

JarredWaltonGPU

King_V said: If ever there was a GPU that strode forth, and boldly declared "Meh," this is it. I will, however, grant it points for its performance/watt, relative to its competitors.

I would be very surprised if the price held up where it is, though. Then again, a quick look at PC Part Picker for a GDDR5 version of the GT 1030 is showing a single passively cooled model for $90 directly from Asus, and the rest at $114 and higher. The horrible DDR4 version is just as pricey, which elicits a big DoubleYoo Tee Eff?

Ouch.

Just waiting for the GTX 1630 to arrive... It should give a little bit more meh to the GTX 16-series, because Nvidia can't let AMD run unchecked in the meh market segment of graphics cards! LOL

GPU price tiers:

Enthusiast/Extreme

High-end

Mainstream/Midrange

Budget/Entry-Level

Meh

Liquidrider

King_V said: If ever there was a GPU that strode forth, and boldly declared "Meh," this is it. I will, however, grant it points for its performance/watt, relative to its competitors.

I would be very surprised if the price held up where it is, though. Then again, a quick look at PC Part Picker for a GDDR5 version of the GT 1030 is showing a single passively cooled model for $90 directly from Asus, and the rest at $114 and higher. The horrible DDR4 version is just as pricey, which elicits a big DoubleYoo Tee Eff?

Ouch.

I can think of another GPU that strode forth and boldly declared Meh this is it.

Do you mean competitors like Intel's ARC A380 which was just released in China only and cost more than AMD 6400 and is slower?

Unlike Intel, however, at least AMD didn't build a bunch of hype around the 6400.

JarredWaltonGPU

Liquidrider said: I can think of another GPU that strode forth and boldly declared Meh this is it.

Do you mean competitors like Intel's ARC A380 which was just released in China only and cost more than AMD 6400 and is slower?

Unlike Intel, however, at least AMD didn't build a bunch of hype around the 6400.

I'm not sure the A380 actually costs more than the RX 6400, and it has 2GB more VRAM, much better codec support... but questionable drivers at present. Yeah, it's not great, and the China-only business does not inspire any confidence in me whatsoever. But the theoretical price of the A380 is supposed to be under $150 as I understand things. And if Intel ever wants to be a real player in the GPU space, it absolutely has to fix the driver situation, which is something it knows and is working on. I'm pretty sure a big part of the delayed US launch is to give the driver teams three extra months of debugging and fixing. We'll find out in the next two months... But yes, I'm looking to be underwhelmed by first generation Arc performance. I'm also very hopeful that Intel will keep iterating and actually close the gap with AMD and Nvidia over time, because it would be great to have a third serious player in the GPU market.

shady28

It's pretty disappointing that the lower end of the market, around $150 MSRP, hasn't really moved in performance since the 1650 was released in Feb of 2019.

Yes, 2 1/2 years and there is really no movement here. This wasn't always the case; the 750 Ti (2014) and 1050 Ti (2016) were great cards for their time that sucked people into PC gaming for a fairly low price.

This failure to seed the market, so to speak, may backfire in coming years.


-Fran-

Thanks a lot for the review. This card actually had a lot of potential (much like the 6500XT) to come and save the day for a lot of people, but they both fell so darn flat it wasn't even funny. It's like one of those bad movies that is so bad it's good, but in this case, the movie was just bad... At least you can decode the movie, right? Heh.

Anyway, I wish they'd pack a bit more features for <75W cards to justify them being half width for slim cases. I still have my case waiting for that one card that is worthy of going into it. Ah, the dreams and hopes burned, haha.

Regards.

King_V

I don't think it's actually terrible for that niche it's supposed to cover... you need something in a system that doesn't have a PCIe connector, with the option of also having a low-profile, single-slot version.

And, when I say that, I mean to include that the cooler itself is only single-slot height.

After all, as Jarred said:

AMD's Radeon RX 6400 is geared for a very specific niche. If you happen to fall into that niche, go ahead and add 1.5 stars to our score and pick one up.

I agree that, even for what it is, it most certainly is overpriced. Then again, I seem to recall R7 250E/7750 cards that were single-slot-low-profile designs costing more than their normal sized counterparts. Likewise, it was difficult (impossible?) to find a 750Ti that fit that form factor at all (I was looking to squeeze something into a Dell Inspiron 3647 Small Desktop). They tended to be priced a little higher probably because of the cold calculation of "a captive audience with very few options."

If a 1630 comes out, I can't imagine it being a contender. The 1650 and 6400 trade blows, with the 1650 dominating once the details are cranked up. The 1630 would probably be out of the running.

That leaves the real contest for New Meh to the 6400 and the A380. Assuming that the A380 allows for no-PCIe, and offers low-profile-single-slot solutions. At 75W, I don't think that's going to be possible.

Best case, A380 vs 6400 becomes the Battle For Meh!™ . . . but I'm starting to suspect that even the A380 won't be able to - it'll require physically larger cards/coolers, and, similar to the 1650, most will require a PCIe connector.

That'll put it at A380 vs 1650, which the Intel card is going to lose, at a performance level, though likely win easily in the price/performance aspect.

(edit: grammar/clarity)

TerryLaze

JarredWaltonGPU said: I'm pretty sure a big part of the delayed US launch is to give the driver teams three extra months of debugging and fixing. We'll find out in the next two months... But yes, I'm looking to be underwhelmed by first generation Arc performance. I'm also very hopeful that Intel will keep iterating and actually close the gap with AMD and Nvidia over time, because it would be great to have a third serious player in the GPU market.

We have to assume that AMD and Nvidia have a decades-long head start in software and hardware IP for making games run better, and three months is not going to make a difference for Intel in closing that gap.

Intel has to focus on the future, and that's what they are doing: they provide all the tools and info to developers, have Arc integrated into Unity and Unreal, and focus on future games being specifically optimized for their cards.

It's still not a sure bet by any means that the cards will perform well in the future, but at least Intel has the groundwork laid out.

https://www.intel.com/content/www/us/en/developer/topic-technology/gamedev/overview.html

thisisaname

Liquidrider said: I can think of another GPU that strode forth and boldly declared Meh this is it.

Do you mean competitors like Intel's ARC A380 which was just released in China only and cost more than AMD 6400 and is slower?

Unlike Intel, however, at least AMD didn't build a bunch of hype around the 6400.

Loved the sub heading on the articles, harsh but true :giggle:

magbarn

JarredWaltonGPU said: I'm not sure the A380 actually costs more than the RX 6400, and it has 2GB more VRAM, much better codec support... but questionable drivers at present. Yeah, it's not great, and the China-only business does not inspire any confidence in me whatsoever. But the theoretical price of the A380 is supposed to be under $150 as I understand things. And if Intel ever wants to be a real player in the GPU space, it absolutely has to fix the driver situation, which is something it knows and is working on. I'm pretty sure a big part of the delayed US launch is to give the driver teams three extra months of debugging and fixing. We'll find out in the next two months... But yes, I'm looking to be underwhelmed by first generation Arc performance. I'm also very hopeful that Intel will keep iterating and actually close the gap with AMD and Nvidia over time, because it would be great to have a third serious player in the GPU market.

Too bad for Intel as the train has already left the station. Knowing Intel, if Arc is a dud, they'll fire the whole team and we'll be back to the duopoly again. If they had just started 6 months earlier, Intel would've been seen as a savior of the GPU market. Here's hoping that they give their GPU division at least 3 generations to catch up and get sizable market share.