Early Verdict

The X299 Taichi’s CPU-fed M.2 slot is likely the biggest competitive advantage of the three LGA 2066 motherboards tested thus far. Though the way its extra M.2 slot is connected precludes SLI when used with Kaby Lake-X, the X299 Taichi is cheap enough to offset the cost of a Skylake-X CPU upgrade.

Pros

- Great overclocking
- Good efficiency
- Dual Gigabit Ethernet plus Wi-Fi
- Three M.2 NVMe slots, one fed directly by the CPU

Cons

- Wi-Fi module is a lower-end model than those on competing boards
- No SLI support with Kaby Lake-X


Features & Specifications

The launch of Intel’s X299 platform hasn’t been smooth. Motherboard manufacturers were still receiving important core firmware updates right up to launch day. The two boards we used in our launch-day reviews were at least stable, but we still had to go back and retest them before comparing their new results against today’s test subject, ASRock’s X299 Taichi. Let's see what progress has been made in three weeks.

Specifications

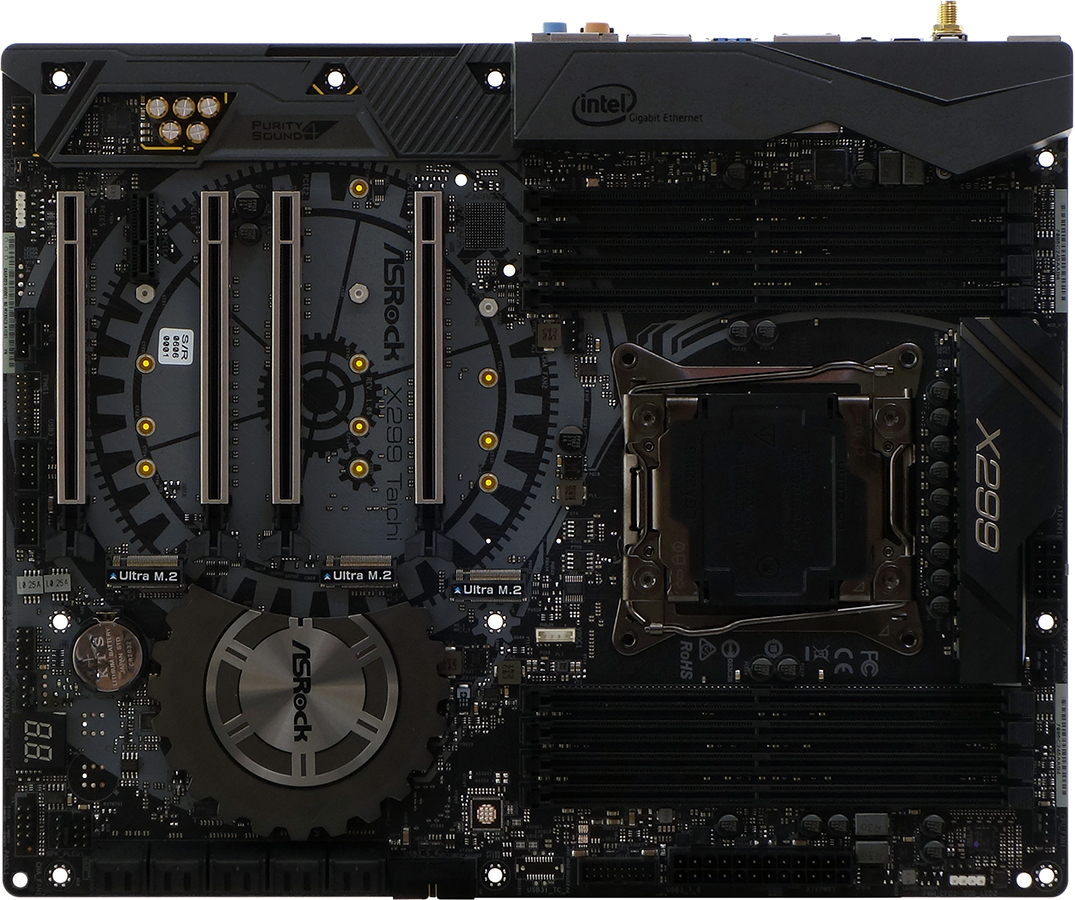

The first thing we noticed on the X299 Taichi spec sheet was this motherboard's paucity of resource sharing. SATA-based M.2 drives still steal SATA ports, but interest in those drives has quickly diminished in the age of NVMe. A closer look reveals that the X299 Taichi has one more M.2 slot than its competitors, and that slot comes with a stealthy share: because it is fed by the CPU’s PCIe controller, a PCIe-based (e.g., NVMe) drive installed there forces the graphics card slots into x8/x0/x4/x0 mode when paired with a 16-lane (Kaby Lake-X) processor. On the plus side, the Taichi is also the first X299 motherboard we've tested that sidesteps the bandwidth-sharing bottleneck of the chipset’s DMI link by wiring an M.2 slot directly to the CPU.
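To put rough numbers on that DMI bottleneck, here is a back-of-the-envelope sketch. It assumes DMI 3.0 behaves like a PCIe 3.0 x4 link and counts only 128b/130b line-encoding overhead, so real throughput will be lower (and the DMI also carries SATA, USB, and network traffic). The two 3.0 GB/s drives are hypothetical figures, not measurements from this review, and the function names are illustrative.

```python
# Back-of-the-envelope PCIe 3.0 / DMI 3.0 bandwidth (sketch; counts only
# 128b/130b line encoding, so real-world throughput is lower).

PCIE3_GT_PER_LANE = 8.0           # PCIe 3.0 signaling rate, gigatransfers/s per lane
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding


def pcie3_gb_per_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe 3.0 link in GB/s."""
    return lanes * PCIE3_GT_PER_LANE * ENCODING_EFFICIENCY / 8  # 8 bits per byte


# Assumption: treat DMI 3.0 as equivalent to a PCIe 3.0 x4 link.
DMI3_GB_PER_S = pcie3_gb_per_s(4)


def dmi_load_ratio(drive_speeds_gb_per_s: list[float]) -> float:
    """Combined demand of chipset-attached drives vs. DMI capacity (>1.0 means they contend)."""
    return sum(drive_speeds_gb_per_s) / DMI3_GB_PER_S


if __name__ == "__main__":
    # Hypothetical: two chipset-attached NVMe drives each reading ~3.0 GB/s at the same time.
    print(f"DMI 3.0 ceiling: {DMI3_GB_PER_S:.2f} GB/s")
    print(f"Load ratio for two drives: {dmi_load_ratio([3.0, 3.0]):.2f}")
    # A CPU-fed x4 M.2 slot gets its own link of the same width, outside the DMI.
    print(f"CPU-fed x4 M.2 link: {pcie3_gb_per_s(4):.2f} GB/s")
```

The point of the comparison: a drive in the CPU-fed slot has a full x4 link to itself, while every chipset-attached device shares a single link of the same width.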

Like the two previously tested X299 models, ASRock’s Taichi has two 10Gb/s USB 3.1 ports on the back (one with a Type-C interface), CLR_CMOS and firmware flashing buttons, and cable connectors for a Wi-Fi controller. Matching the Asus configuration, the X299 Taichi's dual Gigabit Ethernet ports edge ahead of the single port on our MSI sample. Unfortunately, ASRock’s chosen 433 Mb/s Wi-Fi controller has the lowest rated bandwidth of the three samples we've reviewed.

Every X299 motherboard we’ve seen was designed to handle the oddity of Intel adding a CPU with a 16-lane PCIe controller (we're talking about Kaby Lake-X) to a platform built to benefit from the extra pathways of 44-lane and 28-lane processors. The 28-lane option makes sense because it allows 3-way SLI (which requires at least eight lanes per card) while leaving four lanes for a PCIe-based storage controller. We’ve already detailed how ASRock maintained that storage focus by feeding its first M.2 slot with CPU lanes, but it’s also important to remember that this configuration rules out 2-way SLI on those same 16-lane processors: with four of the 16 lanes going to the M.2 slot, the graphics slots are left with x8/x4, and the second card falls short of the eight-lane minimum.
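The lane arithmetic behind those SLI limits can be sketched as a simple budget check. This is a simplification under stated assumptions: it treats the CPU's lanes as one pool, reserves four for the CPU-fed M.2 slot, and applies the eight-lanes-per-card SLI minimum noted above. It does not model ASRock's actual PCIe switch layout or the fixed x16/x8/x4 slot widths, and the function names are hypothetical.

```python
# Simplified CPU lane budget for multi-GPU setups (sketch; not ASRock's actual
# PCIe switch topology). Lanes wired to the CPU-fed M.2 slot are treated as
# unavailable to graphics.

SLI_MIN_LANES_PER_CARD = 8  # SLI minimum cited in the review


def graphics_lanes(cpu_lanes: int, m2_lanes: int = 4) -> int:
    """CPU lanes left for graphics after reserving lanes for a CPU-fed M.2 slot."""
    return max(cpu_lanes - m2_lanes, 0)


def multi_gpu_fits(cpu_lanes: int, cards: int, m2_lanes: int = 4,
                   min_lanes: int = SLI_MIN_LANES_PER_CARD) -> bool:
    """True if every card can get at least `min_lanes` from the remaining lanes."""
    return cards * min_lanes <= graphics_lanes(cpu_lanes, m2_lanes)


if __name__ == "__main__":
    cpus = {"Kaby Lake-X": 16, "Skylake-X (28-lane)": 28, "Skylake-X (44-lane)": 44}
    for name, lanes in cpus.items():
        for cards in (2, 3):
            ok = multi_gpu_fits(lanes, cards)
            print(f"{name}: {cards}-way SLI alongside CPU-fed M.2 -> {'fits' if ok else 'no'}")
```

With 16 lanes, 12 remain after the M.2 reservation, matching the x8/x4 split, so two cards cannot both reach x8. With 28 lanes, 24 remain, exactly enough for three x8 cards.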

Conversely, Asus' X299 Prime excluded the 3-way SLI option when using 28-lane processors, and didn’t even include CPU-based M.2. While Kaby Lake-X owners may question why ASRock didn’t add even more PCIe switches to allow the option of disabling the first M.2 slot while retaining basic SLI compatibility, a better question might be why anyone would spend the extra $100 to put Kaby Lake on X299, rather than stick with Z270.

Basic CrossFire and SLI compatibility is handled by the first and third metal-reinforced slots, providing two empty slots between cards to allow the use of oversized graphics card coolers. While ASRock claims that CrossFire isn’t possible with 16-lane processors, we’ve never seen AMD exclude an x8/x4 configuration for two cards.

The X299 Taichi supports two RGB strips at its upper front and rearward bottom edges, two USB 3.0 front-panel cables at its front edge, two USB 2.0 front-panel cables at its bottom edge, four 4-pin fans along its bottom and top edges, a fifth 4-pin fan between the CPU socket and upper PCIe slot, a VROC (RAID for PCIe storage) module between its upper M.2 connector and nearby DIMMs, a Thunderbolt add-in card, and 10 SATA drives. All 10 SATA ports point forward to keep connectors under the forward edge of long expansion cards, as does the second USB 3.0 header above them. Users also have access to a two-digit POST code display, but the dual BIOS ICs are not manually selectable. Builders who love cases with poorly specified front-panel cables will probably hate the X299 Taichi, as there are always a few cases on the market with FP-Audio cables that are too short to reach this motherboard's traditional bottom-rear-corner header position.


Two empty BGA pads appear to be for a 10GbE controller and a front-panel USB 3.1 controller, the latter guess bolstered by the missing Type-C lead located between the two USB 3.0 front-panel headers. We can’t wait to see the more advanced motherboard that will presumably be based on this circuit board!

We no longer expect to see a large cable bundle on high-end motherboards, now that M.2 drives have taken over primary storage duty. ASRock includes four SATA cables for your storage drives, along with an HB-style SLI bridge, a 3-way SLI bridge, two Wi-Fi antennas, an I/O shield, drivers, and documentation.


-

You overclocked 10/20 @ 4.4GHz and then you complain about heat? You people are insane, seriously...

-

Speaking of ASRock boards, in my experience they are the best. I am waiting for the Extreme series X299 boards, like the Extreme4, 6, and 10. I still have an ASRock X79 Extreme4 and an ASRock X99 Extreme4, they have been rocking awesome since day 1, and I paid just $170 for both (on sale). Again, speaking of Skylake-X: lower that BCLK down to 100MHz, set the multiplier to 20x, and lower your voltage (or in fact use the default voltage), and do not worry about heat. As I said in the previous article, pushing 10/20 this high goes beyond reasonable. If you guys snatch a 6/12 Skylake-X, you can set that one to 4.5/4.6GHz and you will not see heat issues.
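For anyone following the BCLK advice, core frequency is simply base clock multiplied by the CPU ratio. A minimal sketch, with illustrative function names. Taken literally, 100MHz × 20 works out to 2.0GHz, well under the 3.3GHz base clock quoted for the i9-7900X in this thread, so the ratio in the comment may have been meant loosely.

```python
import math


def core_mhz(bclk_mhz: float, ratio: int) -> float:
    """Core clock in MHz = base clock (BCLK) x CPU ratio (multiplier)."""
    return bclk_mhz * ratio


def ratio_needed(target_mhz: float, bclk_mhz: float = 100.0) -> int:
    """Smallest whole ratio that reaches at least target_mhz at the given BCLK."""
    return math.ceil(target_mhz / bclk_mhz)


if __name__ == "__main__":
    print(core_mhz(100, 20))   # the ratio suggested in the comment
    print(ratio_needed(4400))  # ratio for the 4.4GHz overclock being discussed
    print(ratio_needed(4600))  # ratio for a 4.6GHz target
```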

-

Crashman

Does this sound like a complaint or an observation?

The X299 Taichi falls between the two previously tested X299 motherboards in power consumption, as reported by Intel’s Extreme Tuning Utility. It’s closer to the Asus sample, though the heat measured at its voltage regulator is noticeably higher than on either competitor.

Relax, it's a beautiful day somewhere

I'd be happy with 4.6 GHz 8-cores, 4.4 GHz 10-cores, and 4.2 GHz 12-cores. It looks like we're on our way :)

-

Thom457

To someone not fully versed in what an i9-7900X is, you'd never realize that the base clock speed of this $1000 CPU is 3.3GHz. One might surmise that the i9-7900X is defective because it won't run all its cores at 4.3GHz without heat issues. To someone who isn't obsessed with finding a way to run these CPUs at full load under unrealistic loads (lab-rat-only kinds of loads), all the issues with "heat" and throttling paint kind of a false picture here... I'm not an Intel kind of guy, but this obsession tends to fall on both camps, and since Intel typically overclocks better, the obsession is found there more than on the AMD side. Can anyone really see the difference between 120 and 160 FPS? I can see the dollar difference readily.

I clearly remember all the overclocking issues with the Ivy Bridge parts, the first-generation die shrink from 32nm to 22nm. Push the cores beyond what they were rated for and heat and voltage spikes were the rule, because the smaller die couldn't shed heat to the heat spreader the way the 32nm parts could. My Devil's Canyon was the result of optimizing that problem in the second revision of the 22nm parts. My non-overclocked DC, running at its stock 4.0GHz on water, never needs to clock up all its cores on anything I can do in the practical world. On water it will naturally overclock better than on air, but most of the time it only clocks up one or two cores in normal use, because outside of artificial means there is just no real-world need for all four cores to run at even 4.0GHz.

Anyone who needs to overclock their equipment to these extremes, I hope, has deep pockets or a sugar daddy with deep pockets. To some, it seems, the base clock rates of these CPUs are treated like speed limits that the majority ignore most of the time. I thought, and still think, that the $336.00 I paid for my quad-core Devil's Canyon was a lot of money. When you add in all the supporting expenses, which tend to be locked in generationally too, blowing the CPU a year or two down the road doesn't just mean paying an outrageous amount for a replacement CPU, if you can even find a new-in-box one; it likely means paying for a complete new motherboard, memory, and a newer-model CPU, because that turns out to be a better investment than buying rare and expensive older CPU models. For those whose obsessions aren't restricted by money, none of this matters, I understand.

I've been an enthusiast in this field since the Apple II days and not once have I abused my equipment in the vain pursuit of a meaningful increase in performance at the expense of the life span of the equipment. My time and money are valuable to me.

If you "need" a ten-core CPU to run beyond what it is rated for, I'd hope there's a commercial payback for doing that. Various commercial and government interests can afford to buy by the thousands and apply cooling mechanisms that dwarf anything available on the consumer side of the equation. Do this in the multi-CPU server world and you void the warranty and are on your own. No one does that, because blowing out a CPU and having to explain that to the money men isn't a career-advancing move. That's why server parts aren't unlocked. Average CPU utilization was 3% in my server farm before virtualization came along and promised to solve that problem. That some CPUs do in fact hit 100% now and then for limited periods gets lost in the drive to raise CPU utilization and lower equipment cost, mostly by buying fewer CPUs. When net application performance declines under load while CPU utilization rises, explaining that to the VM warriors is about as effective as explaining to consumers that pushing your equipment beyond its design specs isn't going to buy you anything in the real world, other than having to replace your system years before it needs to be.

I saw the same madness when the 8-core Intel Extreme came out. If you couldn't get all its cores to run at 4.5GHz, it seemed you were somehow being cheated. That it had a base frequency of 3.0GHz got lost in all the noise. That its Xeon version ran at 3.2GHz was apparently lost on many.

We all want something for nothing at times... With CPU performance, some will never be satisfied with anything offered. That's human nature. Just as a matter of practical concern: will all the cores on this $1000.00 CPU at over 4.0GHz provide a better gaming experience than my ancient $336.00 Devil's Canyon at 4.0GHz, all else being equal? Will the minute difference in FPS be detectable by the human eye?

As another has said, worrying about heat at 4.3GHz with this CPU model is insanity. It feeds a beast that can never be satisfied. The thermal limits of silicon at 14nm are there for everyone to see. I still remember the debates about which was faster: the 6502 running at 2MHz or the Z80 at 5.0MHz. It didn't matter to me, because my Z80A ran at 8MHz...on static RAM, no less. The S-100 system with 16KB of memory was a secondary heater for the house.

Next year Intel and AMD will bring out something faster, and the year after, and the year after that, but for some nothing will ever be fast enough. That's the human condition. I value my time and money. I don't need to feed the beast here. There's little practical value in these kinds of articles and testing. The 10-core i9-7900X, rushed to production, has issues running at 4.3GHz vs. its stock 3.3GHz... Who would have thought?

Just saying...

-

the nerd 389

Could you check the VRM temperatures as well? Specifically, if the caps on a 13-phase VRM with 105C/5k caps exceed 60C, then there is likely a 1-year reliability issue. Above 50C, there's likely a 2-year reliability issue.

-

Crashman

It doesn't quite work out that way. To begin with, the BEST reason for desktop users to step up from Z270 to X299 is to get more PCIe. The fact that this doesn't jibe with Kaby Lake-X just makes Kaby Lake-X a poor product choice.

Then you're stuck looking only at Skylake-X: the 28 lanes of the two mid-tier models are probably good enough for most enthusiasts. The extra cores? If you need the extra lanes, I hope you want the extra cores as well.

But the maximum way to test THE BOARDS is with a 44-lane CPU. And then you're getting extra cores again, which are useful for testing the limits of the voltage regulator.

LGA 2066 doesn't offer a 6C/12T CPU with 44 lanes and extra overclocking capability. Such a mythical beast might be the best fit for the majority of HEDT users, but since it doesn't exist we're just going to test boards as close to their limits as we can afford.

-

Crashman

I haven't plugged in a Voltage Resistor Module since Pentium Pro :D I'm just nitpicking over naming conventions at this point. The thermistor is wedged between the chokes and MOSFET sink in the charts shown.
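The capacitor-life thresholds raised in this exchange can be sanity-checked against rules of thumb that capacitor makers commonly publish: for wet aluminum electrolytics, expected life roughly doubles for every 10°C below the rated temperature, and for conductive-polymer parts a 10× gain per 20°C is often quoted. The sketch below applies both. It assumes the temperature passed in is the capacitor's own temperature (including ripple-current self-heating), which a thermistor between the chokes and MOSFET sink does not directly measure, and it ignores other failure mechanisms, so treat the output as a rough estimate rather than a verdict on this board.

```python
HOURS_PER_YEAR = 24 * 365


def life_wet_electrolytic(rated_hours: float, rated_c: float, temp_c: float) -> float:
    """10-degree rule: expected life doubles for every 10 C below the rated temperature."""
    return rated_hours * 2 ** ((rated_c - temp_c) / 10)


def life_polymer(rated_hours: float, rated_c: float, temp_c: float) -> float:
    """Commonly quoted polymer rule: expected life grows 10x for every 20 C below rating."""
    return rated_hours * 10 ** ((rated_c - temp_c) / 20)


if __name__ == "__main__":
    # Temperatures from the discussion; ratings are the 105C/5k and 105C/10k parts mentioned.
    for rated in (5_000, 10_000):
        for temp in (50, 60):
            wet = life_wet_electrolytic(rated, 105, temp) / HOURS_PER_YEAR
            poly = life_polymer(rated, 105, temp) / HOURS_PER_YEAR
            print(f"105C/{rated}h at {temp}C: wet ~{wet:.1f} yr, polymer ~{poly:.1f} yr")
```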

-

the nerd 389

The caps are much more likely to fail than the MOSFETs in my experience. They're more accessible than the chokes. The ones on that board appear to be 160 uF, 6.3V caps for the VRMs. Is there any way to check their temps and if they're 105C/5k models or 105C/10k?

-

Crashman

Marked FP12K 73CJ 561 6.3. I should probably get an infrared thermometer :D
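Part of that marking can be read with the common three-digit value convention: the first two digits are significant figures and the third is a power-of-ten multiplier. Assuming this part follows that convention with microfarads as the unit (typical of polymer capacitors, but an assumption here), "561" would mean 560 µF rather than the 160 µF estimated earlier in the thread, and "6.3" would be the 6.3V rating. The other fields (FP12K, 73CJ) are not decoded, and the function name is illustrative.

```python
def decode_value_code(code: str) -> int:
    """Decode a three-digit value code: two significant digits, then a power-of-ten multiplier."""
    if len(code) != 3 or not code.isdigit():
        raise ValueError(f"expected three digits, got {code!r}")
    return int(code[:2]) * 10 ** int(code[2])


if __name__ == "__main__":
    # Assumption: on this part the code is in microfarads, as is common on polymer caps.
    print(f"561 -> {decode_value_code('561')} uF")
```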

-

drajitsh

Tom raises some valid points, but Crashman gives a good answer. My take is that temperatures matter in two situations even without overclocking:

1. Workstation use, especially when cost constraints rule out Skylake-SP and various accelerators (or if your workload cannot be GPU-accelerated).

2. High ambient temperatures, if you cannot or do not want to use below-ambient cooling.