Facebook deploys the Steam Deck's Linux scheduler across its data centers: Valve's low-latency scheduler proves a strong fit for Meta's server workloads

The scheduler was originally developed to prevent dropped frames on Valve's Steam Deck

When Meta went looking for a better Linux CPU scheduler for its massive server fleet, it didn't start in the data center. Instead, it started with a handheld gaming PC. In a recent technical talk at the Linux Plumbers Conference in Tokyo, Meta engineers detailed how they've been deploying SCX-LAVD, a low-latency Linux scheduler originally developed for Valve's Steam Deck, across production servers running everything from messaging backends to caching services. The surprising conclusion: a scheduler designed to keep games responsive under load turns out to be an excellent fit for large-scale data center workloads, too.

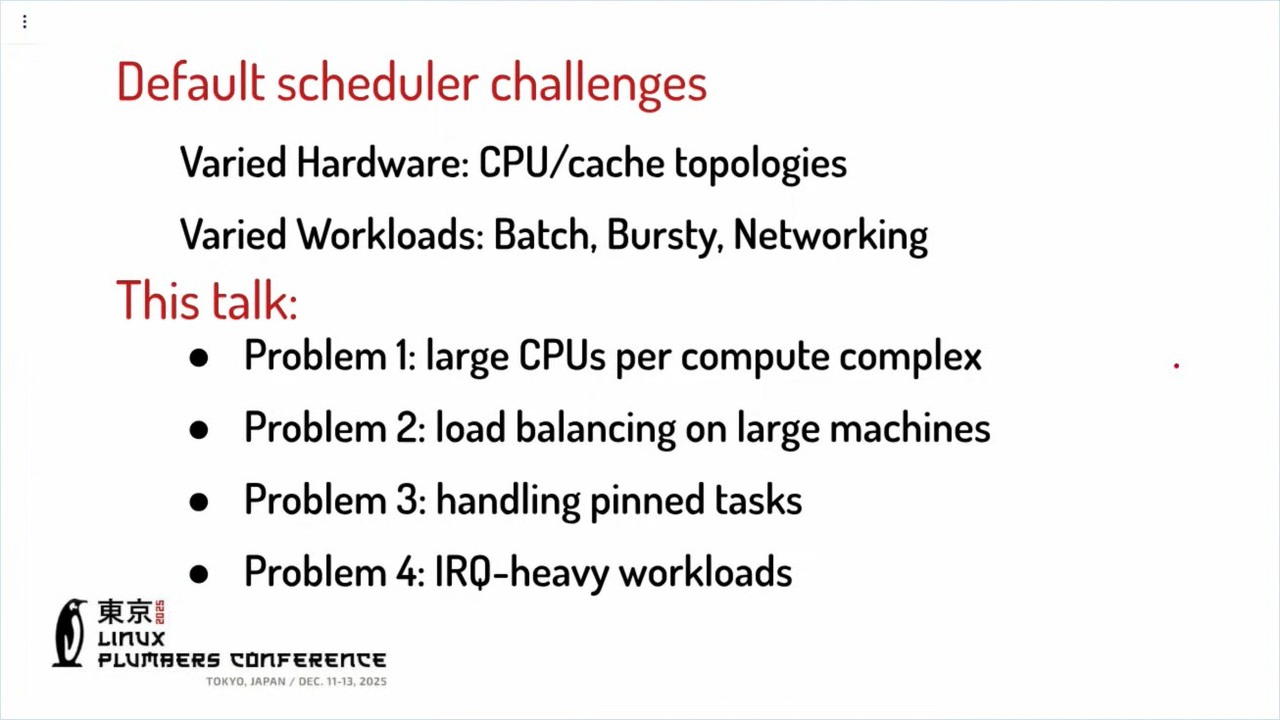

At a high level, a CPU scheduler decides which programs get to run on what CPU cores, and when. Linux's default scheduler has to work everywhere — phones, laptops, desktops, servers — which makes it extremely conservative. Meta's challenge is different: it runs enormous machines with hundreds of CPU cores, wildly varied workloads, and most critically, strict latency targets. In that environment, "good enough everywhere" often isn't good enough. Rather than building a custom scheduler for every service, Meta wanted something closer to a fleet-wide default, a "one size fits most" scheduler that could adapt automatically without hand-tuned configuration. That's where SCX-LAVD came in.

SCX-LAVD is built on sched_ext, a relatively new Linux framework (merged in kernel 6.12) that lets alternative schedulers, written as BPF programs, be loaded into a running kernel without patching or rebuilding it. In simple terms, sched_ext lets companies experiment with different scheduling strategies safely and incrementally, instead of forking Linux or maintaining massive patch sets. If a custom scheduler misbehaves, the kernel simply falls back to its default scheduler.

LAVD stands for Latency-Aware Virtual Deadline, and the name largely explains how it works. Instead of relying on static priorities or manual hints, the scheduler continuously observes how tasks behave (how often they sleep, wake, and block) and uses that to estimate which ones are latency-sensitive. Those tasks get earlier "virtual deadlines," which improves their chances of running promptly when the system is busy.
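To make the idea concrete, here is a minimal toy sketch in Python, assuming a made-up scoring rule: tasks that wake and block often, with short CPU bursts, score as more latency-critical and receive an earlier virtual deadline, and an earliest-deadline-first queue then decides the run order. The metric names, the `log1p` scoring formula, and `BASE_SLICE_MS` are all hypothetical illustrations. This is not LAVD's actual formula, which lives in BPF C code inside the kernel.

```python
import heapq
import math
from dataclasses import dataclass


@dataclass
class TaskStats:
    name: str
    wakeups_per_sec: float  # hypothetical: how often other tasks wake this one
    blocks_per_sec: float   # hypothetical: how often it sleeps or blocks
    avg_runtime_ms: float   # hypothetical: typical CPU burst before it sleeps


BASE_SLICE_MS = 5.0  # hypothetical base offset for a task's virtual deadline


def latency_criticality(t: TaskStats) -> float:
    """Hypothetical score: frequent wake/block cycles with short bursts
    suggest an interactive, latency-sensitive task."""
    # log1p dampens very chatty tasks so they cannot dominate the score.
    return (math.log1p(t.wakeups_per_sec)
            + math.log1p(t.blocks_per_sec)
            - math.log1p(t.avg_runtime_ms))


def virtual_deadline(t: TaskStats, now_ms: float) -> float:
    """More latency-critical tasks get an earlier (smaller) deadline."""
    crit = max(latency_criticality(t), 0.0)  # CPU-bound tasks bottom out at 0
    return now_ms + BASE_SLICE_MS / (1.0 + crit)


def pick_order(tasks, now_ms: float = 0.0):
    """Names in the order an earliest-deadline-first queue would run them."""
    heap = [(virtual_deadline(t, now_ms), t.name) for t in tasks]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]


if __name__ == "__main__":
    tasks = [
        TaskStats("batch-compress", 0.5, 0.5, 200.0),  # long CPU-bound bursts
        TaskStats("render-loop", 120.0, 120.0, 2.0),   # game-style frame loop
        TaskStats("msg-handler", 40.0, 35.0, 1.0),     # request/response server
    ]
    print(pick_order(tasks))
    # → ['render-loop', 'msg-handler', 'batch-compress']
```

The same rule serves both worlds the article describes: a game's frame loop and a messaging server's request handler both show the frequent wake-and-block pattern, so neither needs a hand-assigned priority to jump ahead of a batch job.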

That approach was originally motivated by gaming. On the Steam Deck, missed scheduling deadlines translate into dropped frames, stutter, or sluggish input response. As it happens, in a data center, the same failure modes show up as slow web requests, delayed messages, or missed service-level objectives. Completely different applications, but fundamentally, the same underlying problem.

In the presentation, Meta's engineers described several challenges that emerged when they scaled LAVD up to server-class hardware. On machines with dozens of cores sharing a single scheduling queue, contention became a bottleneck. Pinned tasks, which are threads that can only run on one specific core, caused unnecessary interference. Network-heavy services spent so much time handling interrupts that the scheduler's fairness accounting broke down.

These issues forced changes to how LAVD handled task queues, time slices, and CPU accounting. In several cases, Meta added logic to better preserve cache locality or to compensate for cores overwhelmed by network interrupts, effectively treating them as "slower" CPUs. Crucially, these fixes didn't require per-service configuration or manual priority tagging. That's the core appeal of LAVD for Meta: it adapts based on observed behavior, not hard-coded rules.
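The "slower CPU" treatment of interrupt-heavy cores can be illustrated with a small Python sketch, assuming a simple model in which time spent servicing interrupts is subtracted from a core's capacity and a new task goes to the core with the earliest estimated finish time. `CpuStats`, `irq_fraction`, and the placement rule are hypothetical names and heuristics chosen for illustration. They are not Meta's or LAVD's actual code.

```python
from dataclasses import dataclass


@dataclass
class CpuStats:
    cpu_id: int
    irq_fraction: float    # hypothetical: share of recent time spent in IRQ context (0.0-1.0)
    queued_work_ms: float  # hypothetical: runnable work already waiting on this CPU


def effective_capacity(cpu: CpuStats, nominal: float = 1.0) -> float:
    """Treat time stolen by interrupts as lost capacity: a core spending
    50% of its time on IRQs behaves like a core running at half speed."""
    return nominal * max(0.0, 1.0 - cpu.irq_fraction)


def expected_finish_ms(cpu: CpuStats, task_ms: float) -> float:
    """Estimated wall time until a new task of length task_ms completes here."""
    cap = effective_capacity(cpu)
    if cap == 0.0:
        return float("inf")  # a core fully saturated by interrupts
    return (cpu.queued_work_ms + task_ms) / cap


def pick_cpu(cpus, task_ms: float) -> int:
    """Place the task on the CPU with the earliest estimated finish time."""
    return min(cpus, key=lambda c: expected_finish_ms(c, task_ms)).cpu_id


if __name__ == "__main__":
    cpus = [
        CpuStats(0, irq_fraction=0.50, queued_work_ms=2.0),  # busy NIC-handling core
        CpuStats(1, irq_fraction=0.05, queued_work_ms=4.0),
        CpuStats(2, irq_fraction=0.00, queued_work_ms=6.0),
    ]
    print(pick_cpu(cpus, task_ms=2.0))  # → 1
```

A naive picker that looks only at queue length would choose CPU 0, which has the least queued work, and then watch the task stall behind network interrupts. Discounting capacity for interrupt time steers the task to CPU 1 instead, and it does so from observed behavior rather than per-service configuration.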


The engineers also addressed the obvious concern: does optimizing a scheduler for Meta's servers risk harming its original gaming use case? So far, they say, the changes are either neutral or beneficial for the Steam Deck, and features that don't apply can simply be disabled with kernel flags. Still, they admitted that the experiment is ongoing.

As Linux becomes the common substrate for everything from handheld consoles to hyperscale servers, innovations in one corner of the ecosystem increasingly spill over into others. In this case, the same scheduling logic that helps a $400 gaming PC feel snappy may also help keep billions of messages moving on time. It's a clear demonstration of the power of open-source software, and a salient example of a rising tide lifting all boats.


Zak is a freelance contributor to Tom's Hardware with decades of PC benchmarking experience who has also written for HotHardware and The Tech Report. A modern-day Renaissance man, he may not be an expert on anything, but he knows just a little about nearly everything.