AMD To Develop Semi-Custom Graphics Chip For Intel

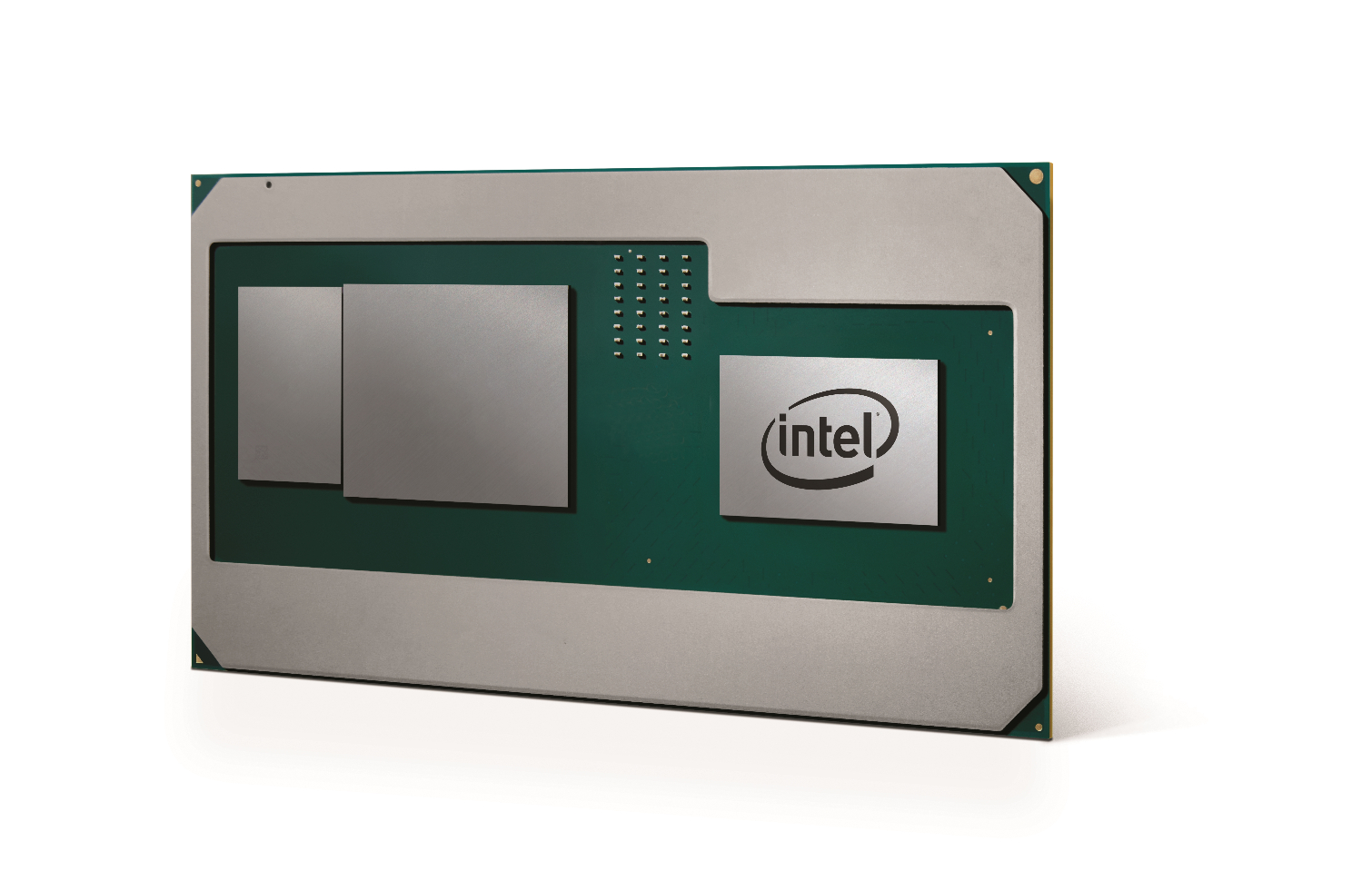

In an interesting turn of events, AMD announced that it's creating a semi-custom GPU for a forthcoming Intel Multi-Chip Package (MCP). AMD's GPU will snap into an eighth-generation Intel processor that utilizes HBM2 memory, a first for a mobile PC, and Intel's EMIB interconnect. Both Intel and AMD confirmed development of the new H-Series processors, which also marks the first use of EMIB technology in a consumer product.

Rumors that AMD is developing GPUs for Intel began with a forum post earlier this year by HardOCP's Kyle Bennett. This led to quite the industry buzz, and a short-term stock gain for AMD, but it was largely dismissed as unfounded. At the time, Intel also issued an official statement claiming that it had not licensed AMD's graphics technology. More recently, AMD has mentioned a big semi-custom chip win in several of its latest earnings calls but declined to name the new customer. It appears to be Intel.

AMD finally released an official statement this morning:

“Our collaboration with Intel expands the installed base for AMD Radeon GPUs and brings to market a differentiated solution for high-performance graphics,” said Scott Herkelman, vice president and general manager, AMD Radeon Technologies Group. “Together, we are offering gamers and content creators the opportunity to have a thinner-and-lighter PC capable of delivering discrete performance-tier graphics experiences in AAA games and content creation applications. This new semi-custom GPU puts the performance and capabilities of Radeon graphics into the hands of an expanded set of enthusiasts who want the best visual experience possible.”

The Wall Street Journal claims the new chip will not compete with AMD's forthcoming Ryzen Mobile processors. AMD hasn't officially confirmed the information, but it seems to be true, given the few details Intel has released about the H-Series design. Intel's H-Series also falls into the 35-45W TDP envelope, while the Ryzen Mobile processors are 15W processors.

Intel also released a statement:

“The new product, which will be part of our 8th Gen Intel Core family, brings together our high-performing Intel Core H-series processor, second generation High Bandwidth Memory (HBM2) and a custom-to-Intel third-party discrete graphics chip from AMD’s Radeon Technologies Group – all in a single processor package.”

Notably, Intel's eighth-generation lineup consists of three separate nodes/architectures: the 14nm+ Kaby Lake-R (refresh), 14nm++ Coffee Lake, and 10nm Cannon Lake. Intel hasn't disclosed which process it will employ with the new processor, but it is likely paired with the forthcoming 10nm Cannon Lake processors that have long been scheduled for release early next year. It's also logical to assume the AMD GPU employs Vega cores, especially given the use of HBM2 and the unique power delivery system (covered below).

Intel has traditionally used Nvidia IP for its graphics solutions through a patent-sharing license, but that agreement expired earlier this year. The agreement allows Intel to use Nvidia's IP in perpetuity, but it only applies to the IP shared during the licensing period. That means Intel doesn't have access to newer Nvidia IP. Intel confirmed to us that its new arrangement with AMD doesn't include licensing of AMD's technology; instead, Intel is merely purchasing finished semi-custom products that AMD fabs with its partners. That means Intel likely isn't abandoning its own internal GPU development programs. Intel also confirmed to us that it approached AMD to begin the project.

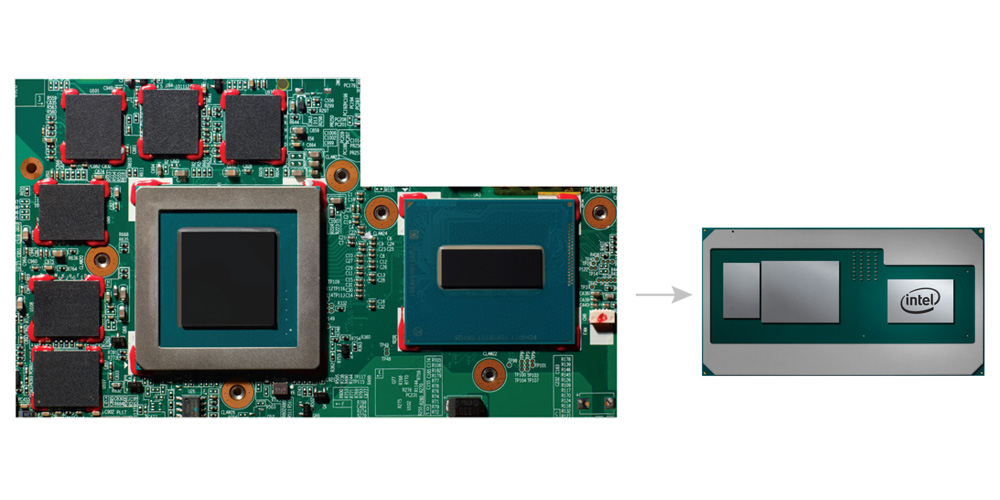

Intel claims the new design will allow for thinner and lighter devices, such as notebooks, 2-in-1s, and mini-desktops, by combining all of the components into a single package. According to the company, the new processor, which will obviously power high-end mobile products, halves the component footprint compared to existing motherboard designs, a real estate savings of 1,900mm². That frees up room for OEMs to slim down their devices while also bulking up the thermal solution. Increased thermal dissipation is a key enabler for high-power mobile devices because it allows the device to operate at a higher level of performance for a longer duration.

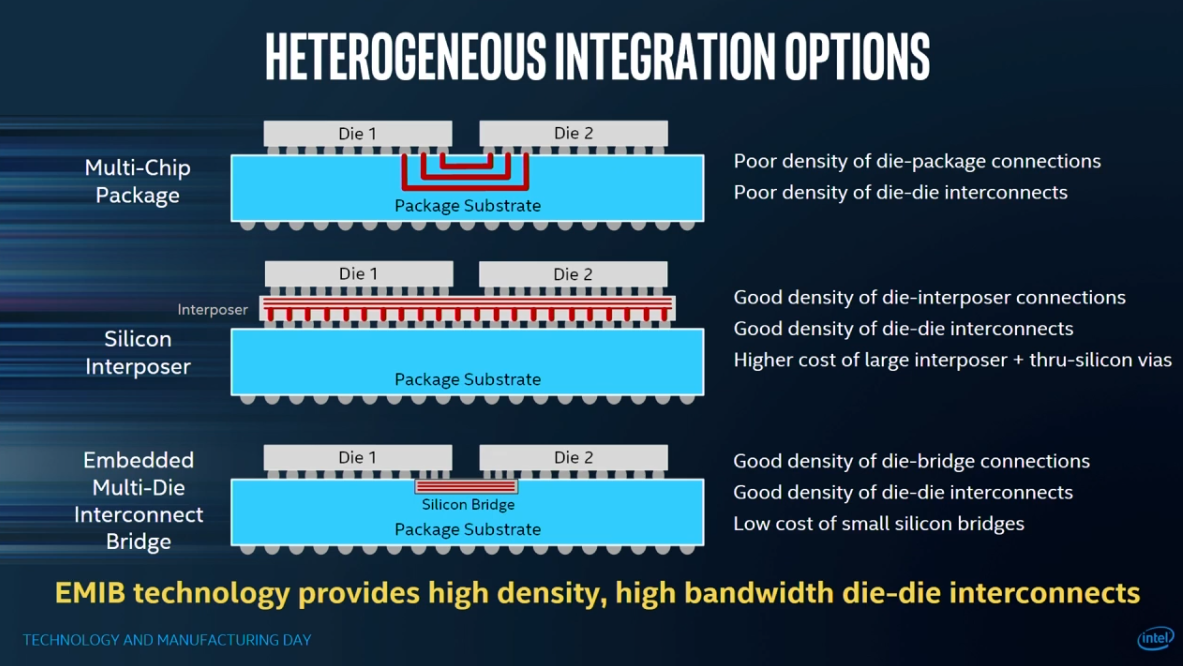

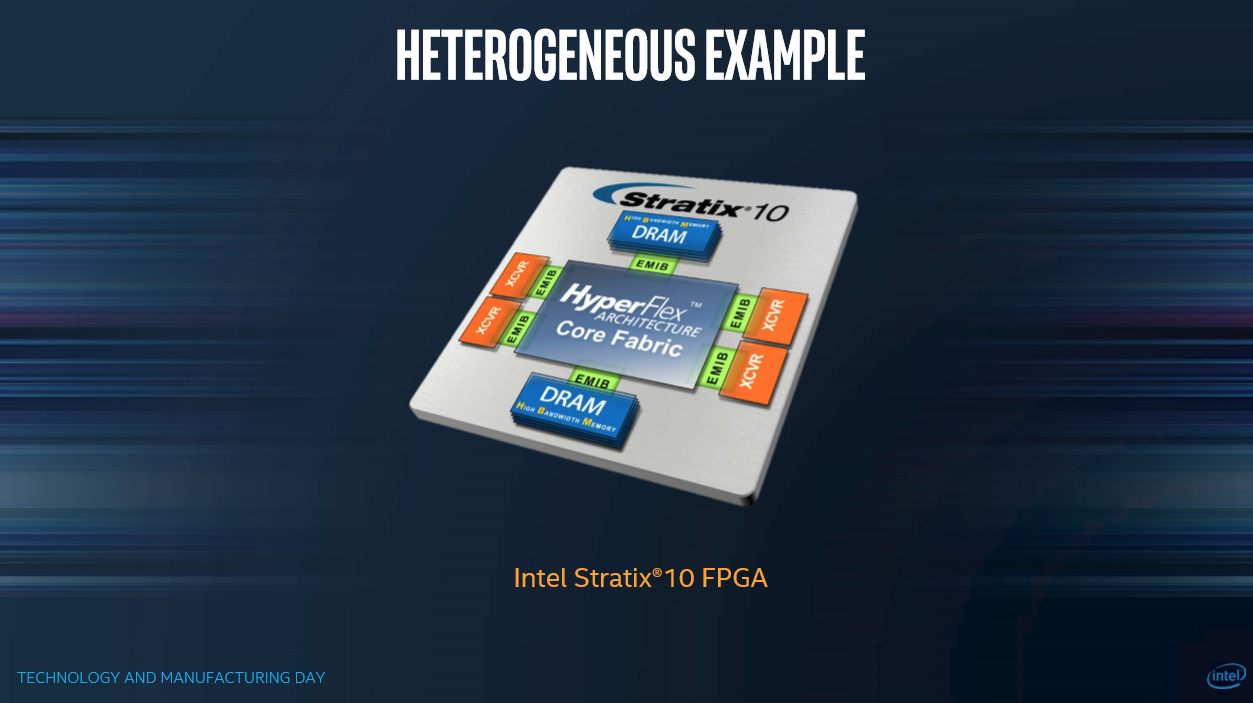

Intel gave us the details of its new EMIB (Embedded Multi-Die Interconnect Bridge) earlier this year at the Hot Chips conference. Our Hot Chips 2017: Intel Deep Dives Into EMIB article covers all of the details of the new technology. In a nutshell, EMIB is an interconnect that ties multiple discrete chips together into a single heterogeneous package. The connection consists of a small silicon bridge, or several of them, embedded into the package substrate.

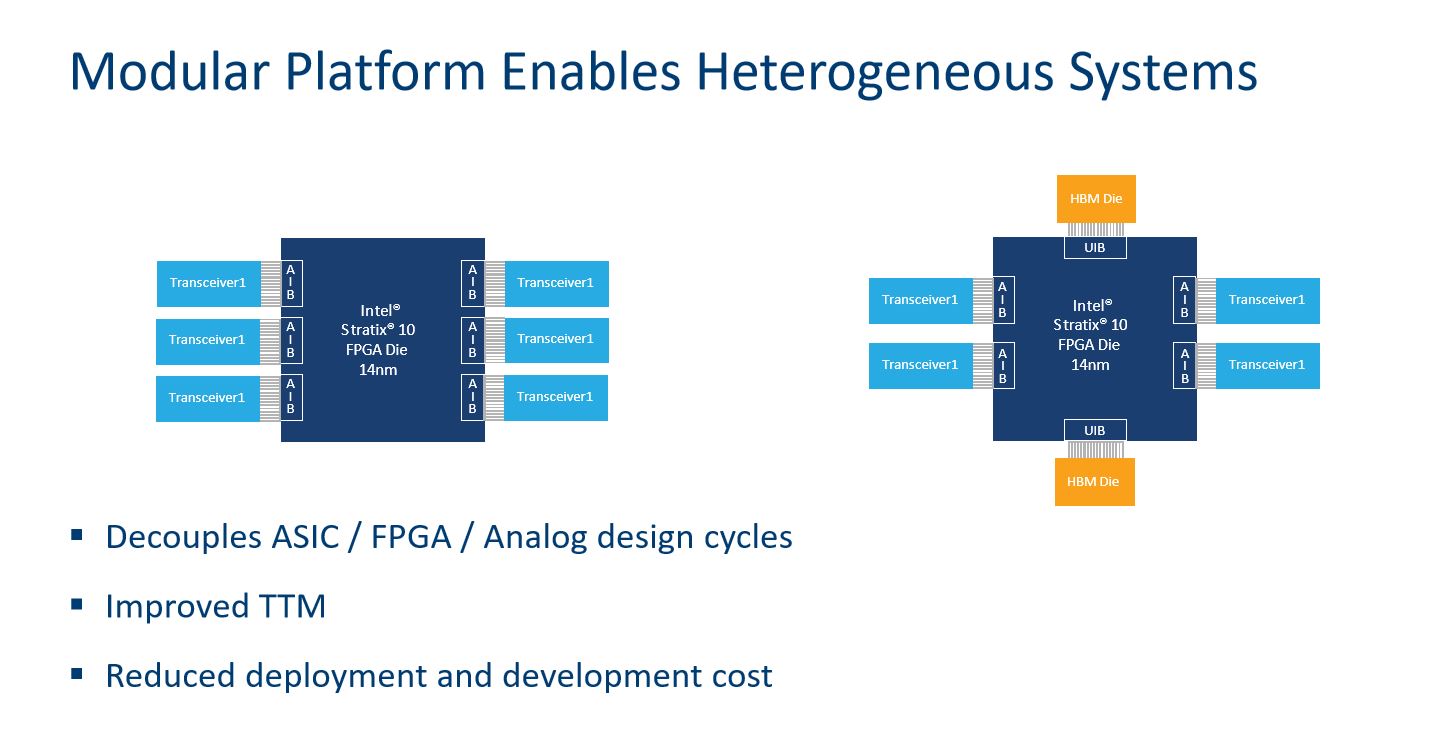

Intel designed the technology to tie its chips together with "chiplets," which are small, re-usable, third-party IP building blocks that can be processors, transceivers, memory, GPUs, or other types of components. Intel can mix and match the chiplets, much like Lego blocks, and connect them to its processors to create custom designs for different applications. Above, we see Intel's plans for matching its Stratix FPGAs with several chiplets, but in the case of the new eighth-gen processors, the components will consist of AMD's GPU and several HBM2 packages.

The different components communicate over a quasi-standardized communication protocol, which should allow vendors to protect key portions of their IP.

Intel added its own software drivers to the H-Series processor to manage temperature, power delivery, and performance in real time. This new software allows the processor to dynamically adjust the power delivery ratio between the processor and the GPU based upon the workload, much like AMD's new power delivery subsystem in its Ryzen Mobile products. That will help reduce overall power consumption. HBM2 also offers more performance and lower power consumption than GDDR5 memory, while also consuming less precious real estate.
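Intel hasn't described how its drivers decide the split, so the following is only a minimal sketch of the general idea of sharing one package power budget between a CPU and a GPU. The function name, the proportional-to-demand rule, and the 5W per-component floor are all hypothetical; the 45W total is simply the top of the H-Series TDP envelope mentioned above.

```python
def split_power_budget(total_watts, cpu_demand, gpu_demand, floor_watts=5.0):
    """Split a shared package power budget between CPU and GPU.

    Hypothetical policy (not Intel's actual algorithm): each component is
    guaranteed floor_watts, and the remaining budget is divided in
    proportion to each component's current demand.
    """
    if total_watts < 2 * floor_watts:
        raise ValueError("budget too small to cover both floors")

    spare = total_watts - 2 * floor_watts
    total_demand = cpu_demand + gpu_demand

    # With no demand at all, fall back to an even split.
    cpu_share = 0.5 if total_demand <= 0 else cpu_demand / total_demand

    cpu_watts = floor_watts + spare * cpu_share
    gpu_watts = total_watts - cpu_watts
    return cpu_watts, gpu_watts


# A GPU-heavy workload (demand ratio 1:3) under a 45W package budget.
print(split_power_budget(45.0, 1.0, 3.0))  # (13.75, 31.25)
```

A real implementation would also have to account for temperature and react in real time, as Intel describes, but the sketch shows why a shared budget helps: a GPU-bound game can borrow headroom the CPU isn't using, and vice versa.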

The dawn of the chiplet marks a tremendous shift in the semiconductor industry. The industry is somewhat skeptical of the chiplet concept, largely because it requires competitors to arm their competition, but the Intel and AMD collaboration proves that it can work even between two of the biggest heavyweights in the computing industry, not to mention bitter rivals. Industry watchers have also largely agreed that EMIB would not filter down to the consumer market for several years, but the announcement shows the technology is ready for prime time.

DARPA initially brought the chiplet revolution to the forefront with its CHIPS (Common Heterogeneous Integration and Intellectual Property (IP) Reuse Strategies) initiative, which aims to circumvent the limitations of the waning Moore's Law.

Intel plans to bring the new devices to market early in 2018 through several major OEMs. Neither Intel nor AMD has released any detailed information, such as graphics or compute capabilities, TDP ratings, or HBM2 capacity, but we expect those details to come to light early next year.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.

-

hannibal This seems more like AMD is selling GPUs for Intel devices. So Intel makes the CPU, AMD makes the GPU, and Intel glues these together in its factories.

At first I was thinking that Intel would also make the GPU, with the GPU designed by AMD. That would have been the fastest and most efficient AMD GPU ever, because it would have been made by Intel. But now it seems that GlobalFoundries makes the GPU and Intel makes the rest, so the GPU will suffer the same problems as all AMD products at this moment. The process does not like high frequencies...

-

derekullo In reply to comment 20348421, which said: :( shame on you AMD .. dont give intel GPU advantage ever.

AMD must be in a desperate need for cash.

Just the mentioning of this sent AMD's stock up 8%.

-

Eximo Vega architecture as being produced is designed as a low power chip. It doesn't demonstrate great performance per watt on the high end because it wasn't really meant for that. This is coming from a company that basically swore they weren't going to compete in the high end market a few years ago. Clearly they know where the profits really come from and it isn't big desktop graphics cards.

Should be quite good I would think. Hopefully they make it into a few NUCs, I would buy one just to mess with it.

-

derekullo In reply to Eximo:

I beg to differ on where the profits are.

If AMD can make a graphics card that is more efficient in mining ethereum than a Geforce 1070 they literally won't be able to keep them in stock.

Miners will buy as many as they can produce.

-

AnimeMania I find this interesting because when the graphics processors were part of the CPU they shared the same memory. Now that the graphics processors are separated from the CPU they have their own high speed memory. This should make an impressive leap in laptop graphics performance without increasing thickness.

-

redgarl In reply to derekullo:

Polaris is already huge at mining. Putting out more GPUs for miners is not a good strategy. AMD knows that, and the market will fluctuate depending on the values of these cryptocurrencies. However, AI, Infinity Fabric and EMIB are the future.

Intel just understood how big an idea AMD's Infinity Fabric is. It can significantly reduce the cost of producing complex chips by building them from simpler ones, and it lets you use the best technology available from third parties for different aspects, like the GPU.

This is nothing but good news. It means Intel is trusting AMD's Vega cores to be the best option for its project.

-

madmatt30 It's a good move for AMD, essentially, even taking into account that Intel is their primary 'enemy'.

They get their GPU SoC into Intel low-power laptops and also get their own entire SoC into upcoming laptops running Raven Ridge (which, in all honesty, looks to have blinding specs for the power requirements).

They'll make money on virtually every device sold, whether it's based on an Intel or an AMD CPU.

It's a win-win situation.

-

derekullo In reply to redgarl:

Having an efficient GPU doesn't mean it has to be used for mining.

But if this efficiency translates into more hashes per watt then that is just icing on the cake.

For comparison:

http://www.legitreviews.com/amd-radeon-rx-vega-64-vega-56-ethereum-mining-performance_197049

The hashes per watt picture says it best

0.18 for Geforce 1070

0.13 for Vega 56 and Vega 64

Vega/Polaris is "huge at mining" pulling 32-34 Mh/s but it is also huge at gulping power using 100-160 more watts to achieve the same Mh/s.

-

Martell1977 I think HBM2 and AMD's modular construction are major factors in Intel's decision. Have to remember that Intel showed a lot of interest in Mantle shortly after it came out.