ZeroPoint Technologies AB Secures €2.5 Million for Hardware-Based RAM Data Compression

ZeroPoint Technologies AB, a Swedish startup spun out of Chalmers University of Technology, today announced €2.5 million (just under $3 million) in seed funding for its latest work: Ziptilion, a patented memory technology that promises to double your RAM capacity and bandwidth while also improving power efficiency.

Many of you will remember the RAM-doubling software of the 80s and 90s. Those programs were a big scam at the time, promising buyers double the RAM capacity without a proper hardware upgrade. ZeroPoint now aims to deliver on that promise, but with a completely different, hardware-based approach.

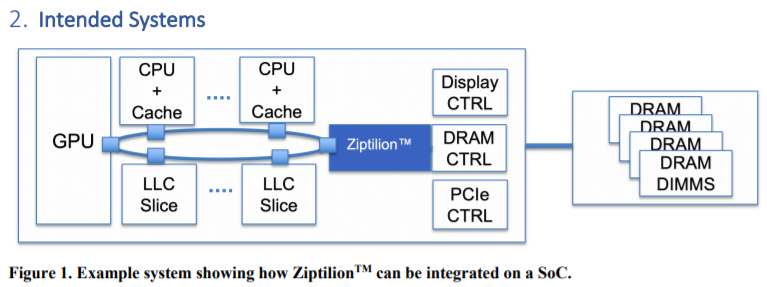

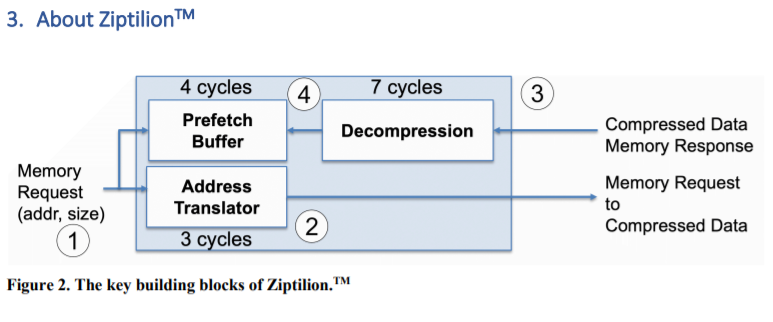

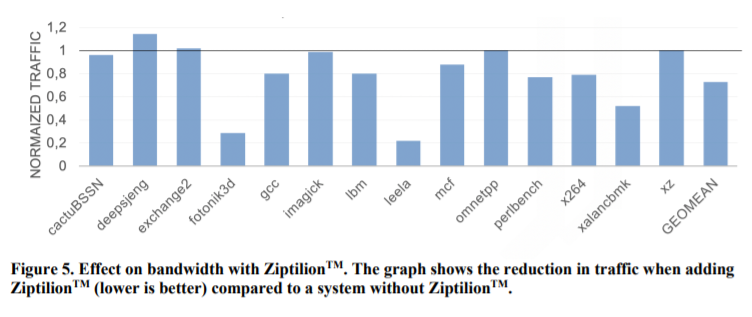

Called Ziptilion, this hardware IP compresses memory data using proprietary compression algorithms, and ZeroPoint claims a 2-3x increase in bandwidth. Chip designers embed the IP into their design, where it works directly with the memory controller and the processor's cache subsystem over the industry-standard AXI SoC interconnect fabric. The company claims its technology compresses memory so efficiently that, in most cases, memory latency is actually reduced, because the IP moves compressed data, and therefore fewer bytes, to and from memory. In extreme cases, latency can increase by roughly 1-100 nanoseconds, but according to the company the gains outweigh the downsides.
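ZeroPoint hasn't published how its algorithms work, so the following is only a toy illustration of cache-line compression in general, not Ziptilion's method. It assumes a 64-byte line treated as eight 64-bit words and applies a simplified base-plus-delta scheme (similar in spirit to academic Base-Delta-Immediate proposals); the function name `compressed_size` is hypothetical. The point is that a compressible line needs fewer bytes on the memory bus, which is where bandwidth and latency savings would come from.

```python
"""Toy base+delta compression of one 64-byte cache line (illustrative only)."""
import struct

LINE_BYTES = 64   # assumed cache-line size
WORD_BYTES = 8    # the line is treated as eight 64-bit words


def compressed_size(line: bytes) -> int:
    """Return the bytes needed to store `line`, or LINE_BYTES if it is kept raw."""
    assert len(line) == LINE_BYTES
    words = struct.unpack("<8Q", line)
    if all(w == 0 for w in words):
        return 1  # all-zero line: a single tag byte is enough
    base = words[0]
    # Try signed deltas of 1, 2 and 4 bytes; use the smallest width that fits every word.
    for delta_bytes in (1, 2, 4):
        limit = 1 << (8 * delta_bytes - 1)  # signed range is [-limit, limit)
        if all(-limit <= w - base < limit for w in words):
            return WORD_BYTES + delta_bytes * len(words)  # one base + one delta per word
    return LINE_BYTES  # incompressible: store the line as-is


if __name__ == "__main__":
    zeros = bytes(LINE_BYTES)
    pointers = struct.pack("<8Q", *(0x7F00_0000_1000 + 16 * i for i in range(8)))
    noisy = bytes((i * 97 + 13) % 256 for i in range(LINE_BYTES))
    for name, line in [("zeros", zeros), ("pointers", pointers), ("noisy", noisy)]:
        size = compressed_size(line)
        print(f"{name:8s} {size:2d} bytes ({LINE_BYTES / size:.1f}x)")
```

A line of nearby pointers shrinks from 64 to 16 bytes here, while pseudo-random bytes stay at 64, which is why real-world gains depend so heavily on the data.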

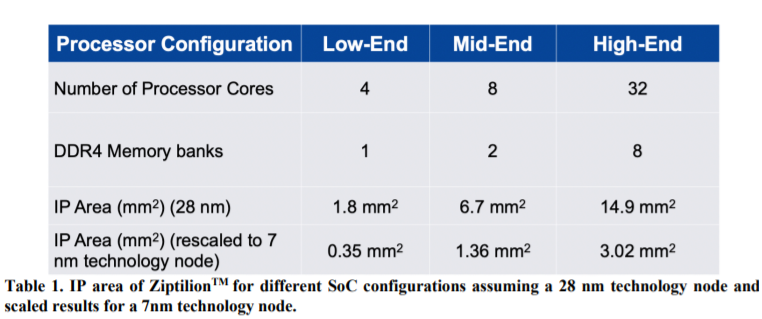

You might be wondering what this IP costs in silicon. According to the company, a design on TSMC's 28nm node implemented the Ziptilion IP on an AXI bus running at 800 MHz with 32 GB/s of bandwidth. For a typical 7nm design with a dual-channel memory controller, the IP would add only 1.36 square millimeters of die area, while a server CPU with an eight-channel memory controller would need an additional 3.02 square millimeters.
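As a rough sanity check on the 7nm figures, the extra area can be divided by the number of memory channels. This assumes, purely for illustration, that the overhead splits evenly per channel, which ZeroPoint did not say; the function name below is made up for the example.

```python
# Back-of-the-envelope arithmetic using only the 7nm figures quoted above.
# Assumption (not from ZeroPoint): the extra area splits evenly across channels.
def area_per_channel(extra_area_mm2: float, channels: int) -> float:
    """Extra die area per memory channel, in square millimeters."""
    return extra_area_mm2 / channels


designs = {
    "dual-channel SoC": (1.36, 2),          # (extra area in mm^2, memory channels)
    "eight-channel server CPU": (3.02, 8),
}

for name, (area_mm2, channels) in designs.items():
    print(f"{name}: {area_per_channel(area_mm2, channels):.2f} mm^2 per channel")
```

Under that assumption, quadrupling the channel count raises the total overhead by only about 2.2x (3.02 / 1.36), so the per-channel cost drops by almost half.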

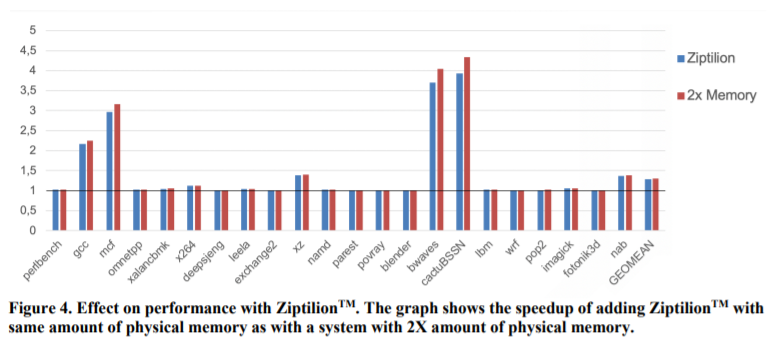

In terms of performance, the Ziptilion whitepaper compares the compression technology against simply adding more RAM. Specifically, it weighs doubling the system's RAM capacity against adding the Ziptilion IP to the SoC, and concludes that the two deliver very similar results: the compressed data effectively doubles your working RAM capacity.

As for applications, the design can be implemented in many forms. SoCs like the one in the Raspberry Pi, as well as smartphone SoCs, could benefit greatly from it, since those systems are limited by the memory they ship with. For example, a smartphone with 8 GB of RAM could effectively behave as if it had up to 16 GB with this IP block.
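The "8 GB behaves like 16 GB" example assumes a 2:1 compression ratio across all data. A simple model shows how that shifts when some data doesn't compress: if a fraction f of the data (by uncompressed size) is incompressible and the rest compresses by a ratio r, each logical gigabyte occupies f + (1 - f)/r gigabytes of DRAM. The function name and the sample fractions are illustrative assumptions, not ZeroPoint figures.

```python
def effective_capacity_gb(physical_gb: float, ratio: float, incompressible_fraction: float) -> float:
    """Logical data that fits in `physical_gb` of DRAM under a simple mixed-compressibility model.

    Assumes `incompressible_fraction` of the data does not compress at all and
    the remainder compresses by `ratio` (e.g. 2.0 for 2:1).
    """
    if ratio < 1 or not 0 <= incompressible_fraction <= 1:
        raise ValueError("ratio must be >= 1 and the fraction must lie in [0, 1]")
    physical_per_logical = incompressible_fraction + (1 - incompressible_fraction) / ratio
    return physical_gb / physical_per_logical


for fraction in (0.0, 0.25, 0.5, 1.0):
    print(f"{fraction:.0%} incompressible: {effective_capacity_gb(8, 2.0, fraction):.1f} GB")
```

With r = 2, the model reaches the full 16 GB only when every byte compresses; with half the data incompressible it drops to about 10.7 GB.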

While we don't know if this technology will ever make it to the mainstream market, it does show promise, unlike the RAM doublers of yesteryear. The people behind the technology have been researching memory compression algorithms for over 15 years, and it looks like the market is finally ready for something like this to be embedded into future designs.


Howardohyea: Very promising on paper, but as with all demonstrations, I'm assuming uptake will take a while. I'm not saying it's bad, just that it might take some time to gain widespread adoption.

Alvar "Miles" Udell: But with DDR5 drastically increasing memory capacities, up to 128GB per DIMM and 2TB per server, will this really see the light of day?

InvalidError, replying to Alvar "Miles" Udell:

Twice as much memory costs roughly twice as much, usually more at the top end. Still, I'm highly skeptical that this stuff will really achieve a 2X effective memory size with less than 100ns of added latency unless you're running applications that handle large amounts of highly compressible data. For an SoC running a UI and games, most of the graphics data is already compressed using image-specific algorithms, so adding a generic compression layer on top would most likely hurt more than it helps.

I'd imagine that having the OS coordinate with the DRAM chips to manage an ever-changing effective memory size, due to variable data compressibility, would be something of a nightmare for the main working memory of a general-purpose computing device, where you cannot make any assumptions whatsoever about overall data compressibility.

With Windows (and likely other modern OSes too) already compressing rarely used pages to reclaim memory and reduce swapfile usage, having in-DRAM compression sounds like unnecessary added complexity.

I doubt this stuff will make it beyond a few niches if it goes anywhere at all.

abufrejoval, replying to InvalidError:

You're obviously not wrong when you argue that there may be data that's hard to compress.

For those cases, the most important thing is that they recognize such data quickly and leave it untouched.
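The "recognize incompressible data and leave it alone" idea can be sketched in software with Python's zlib: compress a page and keep the raw copy whenever the result isn't smaller. This is only an analogy; hardware like Ziptilion would work on much smaller blocks with far cheaper algorithms and has to decide within nanoseconds. The 4 KiB page size and the function names are assumptions.

```python
import os
import zlib

PAGE_SIZE = 4096  # assumed page size for the example


def store_page(page: bytes) -> tuple[bool, bytes]:
    """Return (is_compressed, payload), keeping the page raw if compression doesn't help."""
    packed = zlib.compress(page, 1)  # level 1: favour speed over ratio
    if len(packed) < len(page):
        return True, packed
    # Random, encrypted or already-compressed data stays as-is.
    return False, page


def load_page(is_compressed: bool, payload: bytes) -> bytes:
    """Undo store_page."""
    return zlib.decompress(payload) if is_compressed else payload


if __name__ == "__main__":
    samples = {"zero page": bytes(PAGE_SIZE), "random page": os.urandom(PAGE_SIZE)}
    for name, page in samples.items():
        flag, payload = store_page(page)
        assert load_page(flag, payload) == page  # the round trip must be lossless
        print(f"{name}: compressed={flag}, stored {len(payload)} bytes")
```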

But if there weren't enough data that's still compressible, they wouldn't do it. And I'm really thinking more of code here, which can contain so many zero high-order address bits that ARMv8.3 reuses them for control-flow integrity tags.

From what I understood when I talked to the guys at HiPEAC 2020 in Bologna, they have done their homework and run real-life tests.

These days my concern would be that we also need to have memory encryption and this would obviously need to happen after the compression, but well integrated enough not to add terrible latencies on top.
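Why the ordering matters can be shown with a toy experiment: encrypted data looks random and no longer compresses, while compressing first and then encrypting the smaller result keeps the savings. The XOR keystream built from SHA-256 below is a deliberately insecure stand-in for a real cipher, chosen only because Python's standard library has no AES; the key and sample data are hypothetical.

```python
import hashlib
import zlib


def toy_encrypt(key: bytes, data: bytes) -> bytes:
    """XOR data with a SHA-256 counter-mode keystream (the same call decrypts).

    NOT secure: only a stand-in for a real cipher whose output looks random.
    """
    stream = bytearray()
    counter = 0
    while len(stream) < len(data):
        stream += hashlib.sha256(key + counter.to_bytes(8, "little")).digest()
        counter += 1
    return bytes(a ^ b for a, b in zip(data, stream))


key = b"hypothetical-key"
page = b"mov x0, #0\n" * 372  # 4092 bytes of repetitive, compressible data

compress_then_encrypt = toy_encrypt(key, zlib.compress(page))
encrypt_then_compress = zlib.compress(toy_encrypt(key, page))

# Decryption is the same XOR, so the compress-then-encrypt path round-trips.
assert zlib.decompress(toy_encrypt(key, compress_then_encrypt)) == page

print("original:             ", len(page))
print("compress then encrypt:", len(compress_then_encrypt))
print("encrypt then compress:", len(encrypt_then_compress))
```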

Of course, some serious de-duplication potential might be lost with both, e.g. with standard libraries and large VDI installations.

abufrejoval, replying to Alvar "Miles" Udell:

The reason we see vastly increased memory capacities is that we want to process vastly greater amounts of data: there is real pressure there, and real money is spent on relieving it.

At 2TB, both the energy and the investment cost of the DRAM tend to exceed those of the CPUs. If such an IP block can double the RAM, or halve the number of chips, without turning it all into heat, that's bottom-line money every COO will appreciate.

I wouldn't even mind this in my smartphone or laptop. I can't remember ever having too much RAM...

(Well, actually, there was this PC that took far too long to run its DRAM check when fully loaded with 640KB. That was back when the original IBM PC a) had parity-protected DRAM and b) would check all of it on every cold boot, which took longer than booting PC-DOS from a floppy disk...)

InvalidError, replying to abufrejoval:

If you want to de-duplicate data, you need a sufficiently large data window to find duplicates in the first place. There isn't much time to transfer data and operate on it when your target is less than 100ns of added latency, so the data window will necessarily be quite narrow and de-duplication opportunities quite few.

With encryption on top, it probably lands in "forget it" territory unless you add an extra storage/cache tier between DRAM and whatever media the swap file resides on.
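The window argument can be modeled with a hypothetical deduplicator that only remembers the hashes of the most recent `window` blocks, as a latency-bound hardware unit might. In the sample data the same 1,000 blocks appear twice (think of two VMs loading the same library), so each duplicate sits 1,000 blocks after its original. The function and data are illustrative assumptions.

```python
import hashlib
from collections import deque


def dedup_hits(blocks: list[bytes], window: int) -> int:
    """Count blocks whose content already appeared among the previous `window` blocks."""
    recent = deque(maxlen=window)  # the oldest hash falls out automatically
    hits = 0
    for block in blocks:
        digest = hashlib.sha256(block).digest()
        if digest in recent:
            hits += 1
        recent.append(digest)
    return hits


# 1,000 distinct 64-byte blocks, followed by the same 1,000 blocks again.
blocks = [i.to_bytes(4, "little") * 16 for i in range(1000)] * 2

for window in (8, 64, 1000):
    print(f"window={window:4d}: {dedup_hits(blocks, window)} duplicate blocks found")
```

Small windows find nothing here; only a window spanning the full 1,000-block distance catches every duplicate.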

abufrejoval, replying to InvalidError:

You're right about de-dup: that would only work at the storage level, and with memory encryption that's far too late. That comes from me working too much with RHEL VDO, where (storage) compression and de-duplication are merged into one process.

As for memory compression and encryption: memory encryption is done today even on x86, and the impact seems low enough that people might not even notice. I can barely tell on my 5800U-based notebook or my 5800X-based workstation, both of which support full memory encryption via the BIOS.

Currently, on the client side, the granularity is only at the physical-host level, even though the hardware is the same as on the servers, where EPYC supports it per VM (Intel is just as bad and won't support per-VM encryption on Tiger Lake).

This Swedish IP block would have to sit before the encryption stage, but I don't see why that couldn't be done.

As for memory compression, I mostly keep wondering why it has disappeared in the meantime, because I believe it's been around for a long time, and IBM even sold a Xeon chipset long ago (MXT?) that supported it. But that was at a time when memory controllers weren't yet integrated into the CPUs.

I found this old hot-chip paper on the topic: https://old.hotchips.org/wp-content/uploads/hc_archives/hc12/hc12pres_pdf/IBM-MXTpt1.PDF

Memory compression and encryption must be long-standing mainframe features, because mainframes were clouds before the term "cloud computing" was coined to describe what they had been doing for decades.

I don't see a lot of swapping these days on 2TB machines... but I remember my PDP-11 (DEC Professional 350) doing a lot of swapping, even with some benefit, at a 32K-word maximum segment size.

Ah, and I believe one of my first smartphones, the Samsung Galaxy i-9000, actually swapped to zRAM with one custom ROM to eke a bit more life out of its 512MB of RAM.