Nvidia GeForce GTX Titan 6 GB: GK110 On A Gaming Card

After almost one year of speculation about a flagship gaming card based on something bigger and more complex than GK104, Nvidia is just about ready with its GeForce GTX Titan, based on GK110. Does this monster make sense, or is it simply too expensive?

GPU Boost 2.0: Changing A Technology’s Behavior

GPU Boost is Nvidia’s mechanism for adapting the performance of its graphics cards based on the workloads they encounter. As you probably already know, games place different demands on a GPU’s resources. Historically, clock rates had to be set with the worst-case scenario in mind. But, under “light” loads, performance was left on the table. GPU Boost changes that by monitoring a number of different variables and adjusting clock rates up or down as the readings allow.

In its first iteration, GPU Boost operated within a defined power target—170 W in the case of Nvidia’s GeForce GTX 680. However, the company’s engineers figured out that they could safely exceed that power level, so long as the graphics processor’s temperature was low enough. Therefore, performance could be further optimized.

Practically, GPU Boost 2.0 is different only in that Nvidia is now speeding up its clock rate based on an 80-degree thermal target, rather than a power ceiling. That means you should see higher frequencies and voltages, up to 80 degrees, and within the fan profile you’re willing to tolerate (setting a higher fan speed pushes temperatures lower, yielding more benefit from GPU Boost). It still reacts within roughly 100 ms, so there’s plenty of room for Nvidia to make this feature more responsive in future implementations.

Of course, thermally-dependent adjustments complicate performance testing more than the first version of GPU Boost did. Anything able to nudge GK110’s temperature up or down alters the chip’s clock rate. It’s consequently difficult to achieve consistency from one benchmark run to the next. In a lab setting, the best you can hope for is a steady ambient temperature.
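To make the thermal-target idea concrete, here is a minimal sketch of a boost loop that steps the clock up while the GPU sits below 80 degrees and steps it down once it runs hotter. Nvidia has not published its actual algorithm, so everything here is an assumption for illustration: the function names `next_clock` and `run`, the 13 MHz step size, and the 837 MHz and 1,000 MHz clock bounds are all hypothetical, and the real mechanism also weighs power and voltage.

```python
def next_clock(clock_mhz, temp_c, target_c=80.0, step_mhz=13.0,
               min_mhz=837.0, max_mhz=1000.0):
    """Return the clock for the next polling interval (hypothetical model).

    Below the thermal target the clock rises one step; above it, the clock
    drops one step; exactly at the target it holds. The result is clamped
    to the assumed [min_mhz, max_mhz] range.
    """
    if temp_c < target_c:
        clock_mhz += step_mhz
    elif temp_c > target_c:
        clock_mhz -= step_mhz
    return max(min_mhz, min(max_mhz, clock_mhz))


def run(readings, start_mhz=837.0):
    """Apply next_clock to a sequence of temperature readings (deg C)."""
    clock = start_mhz
    trace = []
    for temp_c in readings:
        clock = next_clock(clock, temp_c)
        trace.append(clock)
    return trace


if __name__ == "__main__":
    # Two cool samples followed by one hot sample.
    print(run([70.0, 70.0, 85.0]))
```

Even this toy version shows why benchmarking gets harder: the same workload produces a different clock trace whenever the temperature readings differ, for example with a warmer room or a slower fan profile.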

Vendor-Sanctioned Overvoltage?

When Nvidia creates the specifications for a product, it targets five years of useful life. Choosing clock rates and voltages is a careful process that must take this period into account. Manually overriding a device’s voltage setting typically causes it to run hotter, which adversely affects longevity. As a result, overclocking is a sensitive subject for most companies: it’s standard practice to actively discourage enthusiasts from tuning hardware aggressively. Even if vendors know guys like us ignore those warnings anyway, they’re at least within their rights to deny support claims on components that fail prematurely due to overclocking.

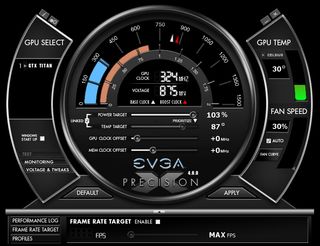

Now that GPU Boost 2.0 is tied to thermal readings, the technology can make sure GK110 doesn’t venture up into a condition that’ll hurt it. So, Nvidia now allows limited voltage increases to improve overclocking headroom, though add-in card manufacturers are free to narrow the range as they see fit. Our reference GeForce GTX Titans default to a 1,162 mV maximum, though EVGA’s Precision X software pushed them as high as 1,200 mV. You are asked to acknowledge the increased risk due to electromigration. However, your warranty shouldn’t be voided.


jaquith Hmm...$1K yeah there will be lines. I'm sure it's sweet.

Better idea, lower all of the prices on the current GTX 600 series by 20%+ and I'd be a happy camper! ;)

Crysis 3 broke my SLI GTX 560's and I need new GPU's...

Trull Dat price... I don't know what they were thinking, tbh.

AMD really has a chance now to come strong in 1 month. We'll see.

tlg The high price OBVIOUSLY is related to low yields, if they could get thousands of those on the market at once then they would price it near the gtx680. This is more like a "nVidia collector's edition" model. Also gives nVidia the chance to claim "fastest single gpu on the planet" for some time.

tlg AMD already said in (a leaked?) teleconference that they will not respond to the TITAN with any card. It's not worth the small market at £1000...

wavebossa "Twelve 2 Gb packages on the front of the card and 12 on the back add up to 6 GB of GDDR5 memory. The .33 ns Samsung parts are rated for up to 6,000 Mb/s, and Nvidia operates them at 1,502 MHz. On a 384-bit aggregate bus, that’s 288.4 GB/s of bandwidth."

12x2 + 12x2 = 6? ...

"That card bears a 300 W TDP and consequently requires two eight-pin power leads."

Shows a picture of a 6pin and an 8pin...

I haven't even gotten past the first page but mistakes like this bug me


wavebossa Nevermind, the 2nd mistake wasn't a mistake. That was my own fail reading.

ilysaml "The Titan isn’t worth $600 more than a Radeon HD 7970 GHz Edition. Two of AMD’s cards are going to be faster and cost less."

My understanding from this is that Titan is just 40-50% faster than HD 7970 GHz Ed that doesn't justify the Extra $1K.

battlecrymoderngearsolid Can't it match GTX 670s in SLI? If yes, then I am sold on this card.

What? Electricity is not cheap in the Philippines.
