Sapphire Toxic HD 7970 GHz Edition Review: Gaming On 6 GB Of GDDR5

Sapphire gives its new flagship graphics card 6 GB of very fast memory, compared to the mere 3 GB on AMD's reference card. Does this give Sapphire's Toxic HD 7970 GHz Edition a real-world speed boost? We connect it to an epic six-screen array to find out.

Temperatures And Fan Speed

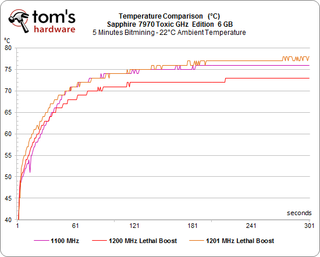

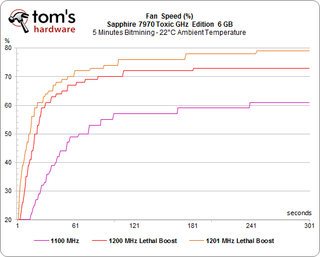

Temperatures under Load (Bitmining)

Sapphire's Toxic HD 7970 GHz Edition 6 GB stays cooler with Lethal Boost enabled than it does at its factory clock rate. This is due to a more aggressive fan profile that’s activated alongside the high frequencies.

The 60-percent duty cycle at 1100 MHz is still within reason, though noise is already creeping up to an uncomfortable level. Lethal Boost triggers a jump to a 73-percent duty cycle, and the static 1201 MHz setting pushes it close to 78 percent.

We have a video of what that sounds like on the next page. But before we recorded it, we already knew that the noise level exceeded an unacceptable 60 dB(A).

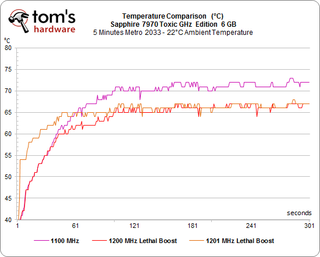

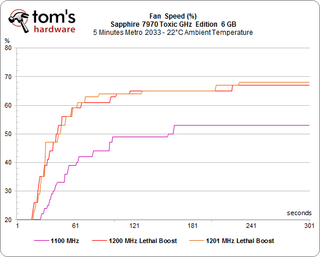

Gaming Temperatures

At its factory clock rate, Sapphire's Toxic HD 7970 GHz Edition 6 GB ran hotter in our gaming workload than it did with the other two profiles, though temperatures remained acceptable.

The two higher-clocked profiles behave similarly, each pairing lower temperatures with a more aggressive cooling profile.


Based on the thermals, acoustics fall right about where we'd expect them. The card's fans are noticeable, but not distracting, at 1100 MHz. The faster clock rates push the fans to an almost-70-percent duty cycle, yielding a noise level that's loud enough to hear even with headphones on.

The noise level videos on the next page provide a better sense of what our results mean in practice. We can already say that activating Lethal Boost creates quite a bit of noise.


Youngmind: The 6 GB of memory might not have much of an effect with only a single card, but I wonder if it will have a larger impact in configurations with more graphics cards, such as tri-CrossFire and quad-CrossFire. If people are willing to spend so much money on monitors, I think they'd be willing to spend a lot of money on tri/quad graphics card configurations.

robthatguyx (replying to Youngmind): I think this would perform much better in TriFire. If one reference 7970 can handle three screens, then three of these could easily eat six screens, in my opinion.

palladin9479 (replying to Youngmind): Seeing as memory contents are copied to each card in both SLI and CFX, you would practically need that much for ridiculously large screen setups. A single card cannot handle the kind of multi-screen setup this was designed for; you need at least two for four screens and three for six screens. The golden rule seems to be two screens per high-end card.

tpi2007 (replying to Youngmind): This.

Quoting BigMack70: Would be very interested in seeing this in CrossFire at crazy resolutions compared to a pair of 3 GB cards in CrossFire, to see if the VRAM helps in that case.

And this.

Tom's Hardware, if you are going to review a graphics card with 6 GB of VRAM, you have to review at least two of them in CrossFire. VRAM is not cumulative, so two regular 3 GB HD 7970s in CrossFire still give you only a 3 GB frame buffer. For high resolutions with multiple monitors, 6 GB might make the difference.

So, are we going to get an update to this review? As it stands, it is useless. Make a review with at least two of these cards and three 30" 1600p monitors. That is the kind of setup someone considering one of these cards will have, and that person won't buy just one card. Cards with 6 GB of VRAM are made to be used at least in pairs. I'm surprised Sapphire didn't tell you that in the first place. In any case, you should have figured it out.

FormatC (replying to tpi2007): Sapphire was unfortunately not able to send two cards. That's annoying, but not our problem. And two of these cards would be deadly for my ears ;)

Reply to tpi2007: Why not go to the uber-extreme and run CrossFireX (four GPUs) with six 2500x1600 monitors, crank AA up to 4x supersampling, and prove it once and for all, set in stone?

freggo (replying to FormatC): Thanks for the review. The noise demo alone helps in making a purchase decision.

No sale!

Does anyone know why no card has been designed to turn off completely (0 W!) when idle, with the system switching to integrated graphics for desktop work or simple tasks?

Then applications like Photoshop, Premiere, or the ever-popular Crysis could "wake up" the card and have the system switch over.

Or are there cards like that?

FormatC: For a noise comparison between overclocked Radeon HD 7970s, take a look at this:

http://www.tomshardware.de/Tahiti-XT2-HD-7970-X-X-Edition,testberichte-241091-6.html

dudewitbow (replying to freggo): I think that has been applied to laptops, but not to desktops. One reason I'd think it's less useful on a desktop is that even if parts of your build are off, the PSU is least efficient near 0% load, so no matter what, you're still going to burn electricity just by having the computer on. All GPUs nowadays have downclocking features when they're not under load (my 7850 downclocks to 300 MHz at idle), but I wouldn't expect cards to go all the way down to 0.
