AMD Radeon RX 5700 XT vs. Nvidia GeForce RTX 2060 Super: $400 GPU Throwdown

Refreshed Turing takes on AMD’s fastest Navi card.

Two of the top contenders for the best graphics card right now are AMD's Radeon RX 5700 XT and Nvidia's GeForce RTX 2060 Super. They're not the fastest GPUs around—or at least, Nvidia's GPU isn't the company's fastest option, as the RTX 2070 through RTX 2080 Ti are all faster—but they're two of the best overall values. Both cards are available for around $400, representing the lower portion of the high-end GPU market, and we're here to declare a winner.

Both the RTX 2060 Super and RX 5700 XT were introduced in the summer of 2019. AMD's card features a Navi 10 GPU with a new RDNA architecture, while Nvidia's card is effectively a refresh of 2018's RTX 2070—the same TU106 GPU with slightly fewer CUDA cores enabled, but with a lower price that makes it the better bargain. $400 is an important price point as well, as going higher up the performance ladder typically brings diminishing returns. You can get great performance at 1440p with high settings, or 1080p ultra, without blowing up your build budget. Similar previous-generation cards like the GTX 970, GTX 1070, R9 390 and RX Vega 56 have all sold well.

You can also step down one rung and look at the Radeon RX 5700 and GeForce RTX 2060, or the Radeon RX 5600 XT if you’re going to stick to 1080p ultra or 1440p medium settings. As for stepping up, the only faster card from AMD right now is the Radeon VII, which is expensive, power hungry and not actually much faster than the RX 5700 XT. If you want something more potent, you'll need to either wait for AMD to release its next-gen Navi 20/21 GPUs — which are supposed to include hardware ray tracing functionality as well — or look at Nvidia's more expensive RTX models. Right now AMD has nothing that can beat the RTX 2070 Super and above.

But let's get back on topic. Which card is actually better, the RTX 2060 Super or the RX 5700 XT? We've put the reference models from both companies against each other in this head-to-head battle. We'll look at features and specifications, drivers and software, performance in games, power and thermals, and finally overall value. Cue the high noon music as the rivals prepare to draw.

Featured Technology

AMD's Radeon RX 5700 XT is the current halo product for the company, using the fully-enabled Navi 10 XT GPU and sporting 2560 processing cores. The reference model has a game clock of 1755 MHz, with a maximum boost clock of 1905 MHz, and factory overclocked cards typically increase the game clock to around 1815 MHz (give or take). Nvidia's GeForce RTX 2060 Super uses the TU106-410-A1 GPU, with 2176 CUDA cores and a conservative boost clock of 1650 MHz. It also includes 34 RT cores for ray tracing calculations and 272 Tensor cores for deep learning computations (i.e., DLSS).

1. Hardware Specifications

| | RX 5700 XT | RTX 2060 Super |
|---|---|---|
| Architecture | Navi 10 | TU106 |
| Process (nm) | 7 | 12 |
| Transistors (Billion) | 10.3 | 10.8 |
| Die size (mm²) | 251 | 445 |
| SMMs / CUs | 40 | 34 |
| GPU Cores | 2560 | 2176 |
| Tensor Cores | N/A | 272 |
| RT Cores | N/A | 34 |
| Turbo Clock (MHz) | 1755 | 1650 |
| VRAM Speed (Gbps) | 14 | 14 |
| VRAM (GB) | 8 | 8 |
| VRAM Bus Width | 256 | 256 |
| ROPs | 64 | 64 |
| TMUs | 160 | 136 |
| GFLOPS (Boost) | 8986 | 7181 |
| Bandwidth (GBps) | 448 | 448 |
| TDP (watts) | 225 | 175 |
| Launch Date | Jul-19 | Jul-19 |

Comparing theoretical performance based on paper specs is typically a fool's errand, as architectural details come into play. Still, on paper the RX 5700 XT has 9.0 TFLOPS and 448 GBps of bandwidth going up against the RTX 2060 Super's 7.2 TFLOPS and 448 GBps. That's a 25 percent advantage for AMD, but (spoiler!) it almost never shows up to that level in games.
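For readers who want to see where those paper numbers come from, here is a minimal sketch that recomputes them from the specifications table. It assumes the usual convention that each GPU core can retire one fused multiply-add (two floating-point operations) per clock; the function names are our own and not part of any vendor tool.

```python
def fp32_gflops(cores: int, clock_mhz: float) -> float:
    """Peak FP32 rate, assuming one FMA (2 FLOPs) per core per clock."""
    return cores * 2 * clock_mhz / 1000.0


def memory_bandwidth_gbps(bus_width_bits: int, speed_gbps: float) -> float:
    """Peak bandwidth in GB/s: bus width times per-pin rate, 8 bits per byte."""
    return bus_width_bits * speed_gbps / 8.0


# Core counts, clocks and memory specs taken from the specifications table.
rx_5700_xt_gflops = fp32_gflops(2560, 1755)
rtx_2060_super_gflops = fp32_gflops(2176, 1650)
shared_bandwidth = memory_bandwidth_gbps(256, 14)

# Relative compute advantage of the AMD card, in percent.
compute_advantage_pct = (rx_5700_xt_gflops / rtx_2060_super_gflops - 1) * 100

print(f"RX 5700 XT:     {rx_5700_xt_gflops:.0f} GFLOPS")
print(f"RTX 2060 Super: {rtx_2060_super_gflops:.0f} GFLOPS")
print(f"Bandwidth:      {shared_bandwidth:.0f} GBps on both cards")
print(f"AMD compute advantage: {compute_advantage_pct:.1f}%")
```

Note that the 25 percent gap applies only to compute throughput. Memory bandwidth is identical on the two cards.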

AMD's RX 5700 XT is also the first 'real' 7nm GPU. We don't really count the Radeon VII, since it was late to the party, based around an old architecture, and overall couldn't match Nvidia's existing cards. TSMC's 7nm finFET process allows AMD to stuff approximately 10.3 billion transistors into the Navi 10 die that measures just 251 mm².


In contrast, the RTX 2060 Super uses TSMC's older 12nm finFET process. It has only a 'few' more transistors, 10.8 billion (that's half a billion more, but not a big difference percentage-wise), yet the die size is 445 mm². That's a 77% larger die for only 5% more transistors. Die size and process technology don't matter directly to the end user, but they can affect power use, cost and even performance.
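To put the die comparison in concrete terms, the following sketch derives the percentage gaps and the resulting transistor density from the figures quoted above. The helper names are hypothetical, and the density values are simple averages over the whole die rather than anything either vendor publishes.

```python
def density_mtr_per_mm2(transistors_billion: float, die_mm2: float) -> float:
    """Average density in millions of transistors per square millimeter."""
    return transistors_billion * 1000.0 / die_mm2


def pct_more(a: float, b: float) -> float:
    """How much larger a is than b, in percent."""
    return (a / b - 1) * 100


# Transistor counts (billions) and die sizes (mm^2) from the article.
navi10_density = density_mtr_per_mm2(10.3, 251)
tu106_density = density_mtr_per_mm2(10.8, 445)

die_area_gap_pct = pct_more(445, 251)      # TU106 die vs. Navi 10 die
transistor_gap_pct = pct_more(10.8, 10.3)  # TU106 count vs. Navi 10 count

print(f"Navi 10 (7nm): {navi10_density:.1f} MTr/mm^2")
print(f"TU106 (12nm):  {tu106_density:.1f} MTr/mm^2")
print(f"TU106 die is {die_area_gap_pct:.0f}% larger "
      f"with {transistor_gap_pct:.0f}% more transistors")
```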

On the memory side of things, both GPUs feature 8GB of GDDR6 RAM clocked at 14 Gbps on a 256-bit bus, giving them the same bandwidth. That doesn't mean the memory subsystems of the GPUs are the same, however. Both companies use various forms of lossless delta color compression to extract more performance from the memory. Details are somewhat limited, though Nvidia's GPUs seem to either compress data better or simply extract more performance from the same memory bandwidth in other ways.

The bigger difference between the GPUs is in support for rendering features, specifically DirectX Raytracing (DXR) and Vulkan-RT. How important is ray tracing in games? Right now, there are only a small number of supported titles, and the ray tracing effects are often relatively small.

Here's the complete list of ray tracing games that were available when we wrote this: Battlefield V, Call of Duty: Modern Warfare, Control, Deliver Us the Moon, Metro Exodus, Quake II RTX, Shadow of the Tomb Raider and Wolfenstein: Youngblood. That's eight games, and another eight should launch in 2020 (Cyberpunk 2077, Doom Eternal, Dying Light 2, MechWarrior 5: Mercenaries, Minecraft RTX, Synced: Off-Planet, Vampire: The Masquerade - Bloodlines 2 and Watch Dogs: Legion). The problem is that so far, we'd only rate the ray tracing effects as truly desirable in Control and maybe Deliver Us the Moon—and even then the performance hit can be large.

That's why Nvidia also has Deep Learning Super Sampling (DLSS) technology, which uses machine learning and massive amounts of training on Nvidia's supercomputers to allow games to render at a lower resolution and then upscale with anti-aliasing detection and removal. DLSS works okay, but it's not perfect. It's also very useful unless you're running an RTX 2080 Ti and playing ray-traced titles at 1080p.

The two competitors also offer adaptive refresh rate technology: AMD FreeSync and Nvidia G-Sync. Nvidia has an advantage now, as it has started to support select 'compatible' adaptive sync displays (aka, FreeSync but without the AMD branding), whereas AMD only works with FreeSync/Adaptive Sync monitors. Nvidia says it has tested hundreds of FreeSync displays, 90 of which are now 'G-Sync Compatible'—including LG's latest OLED 120Hz TVs. Also, Nvidia has added HDMI 2.1 VRR (variable refresh rate) support to its RTX series cards.

Ultimately, despite AMD having an advantage in manufacturing technology, Nvidia's hardware features win out. You may not love the way ray tracing effects look in every game, or the potential performance impact, but at least you have the option to turn them on and try them out. Plus you have the choice between G-Sync and FreeSync / G-Sync Compatible displays.

Winner: Nvidia

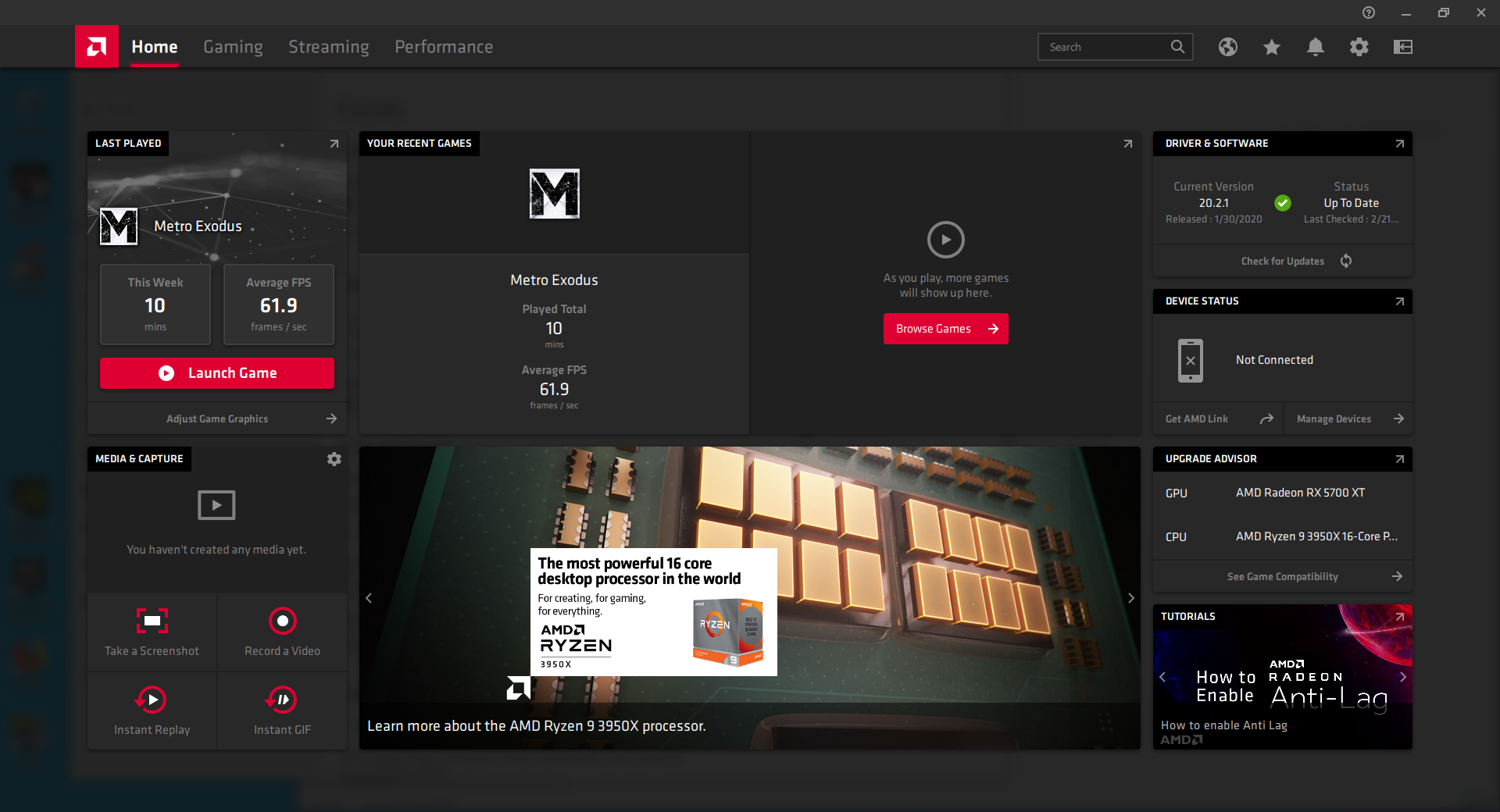

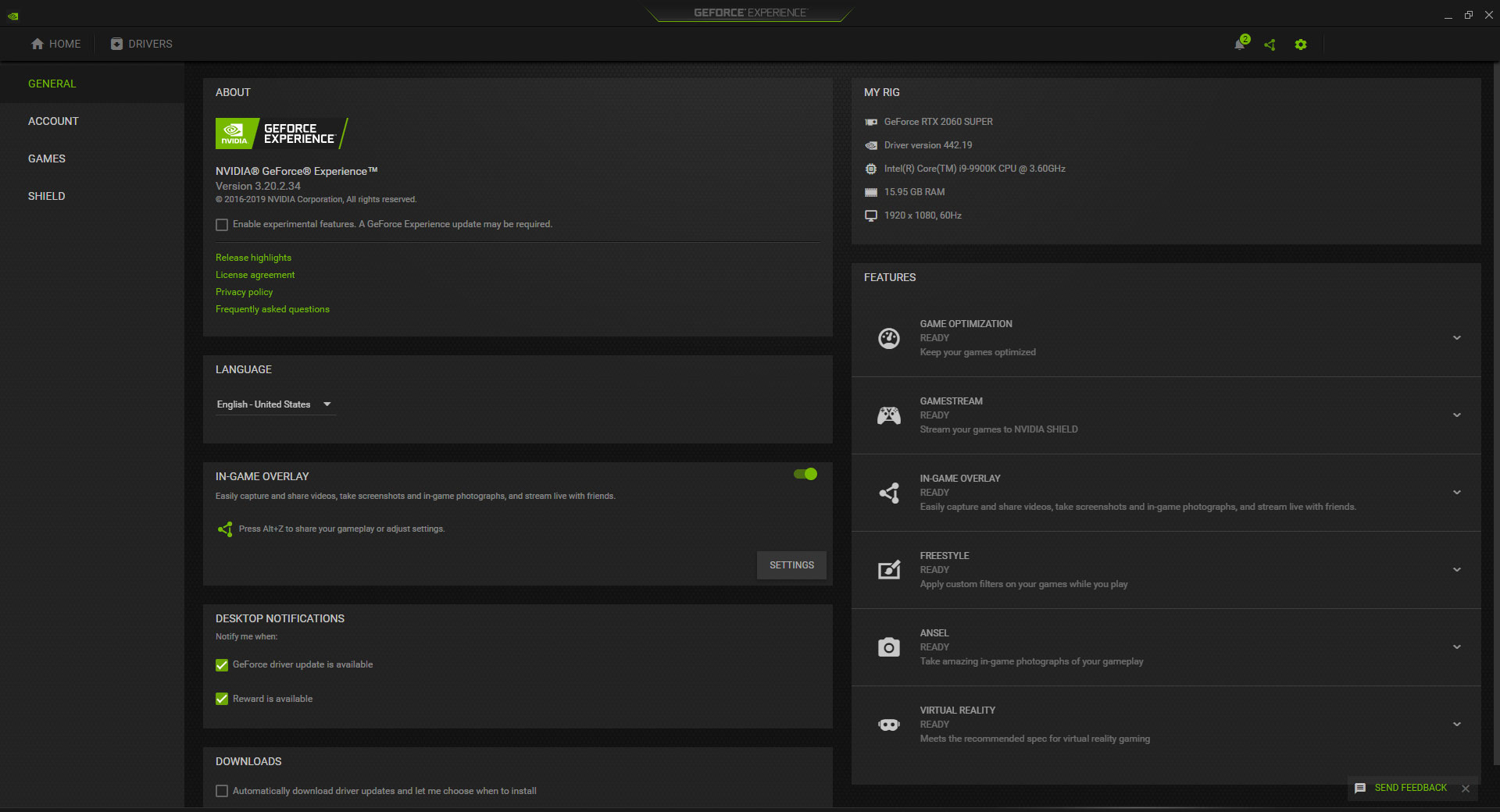

2. Drivers and Software

Both AMD and Nvidia offer full suites of drivers and software. To put things in perspective, the driver download size currently tips the scale at 529 MB for Nvidia and 482 MB for AMD. That's nearly as large as the entirety of Windows XP, just for your GPU drivers and associated software.

AMD's latest Radeon Adrenalin 2020 drivers added a bunch of new features: integer scaling, Game Boost, and a completely revamped UI. AMD claims performance is "up to 12% better" relative to its Adrenalin 2019 drivers, though having tested both it's usually not that big of a jump. There are loads of pre-existing features as well, like Radeon Anti-Lag, Radeon Image Sharpening, Radeon Chill, Enhanced Sync, and the ability to record or stream your gaming sessions. It's almost too much to dig through, but AMD basically gives you everything you need in one package, including the ability to tweak and overclock your graphics card.

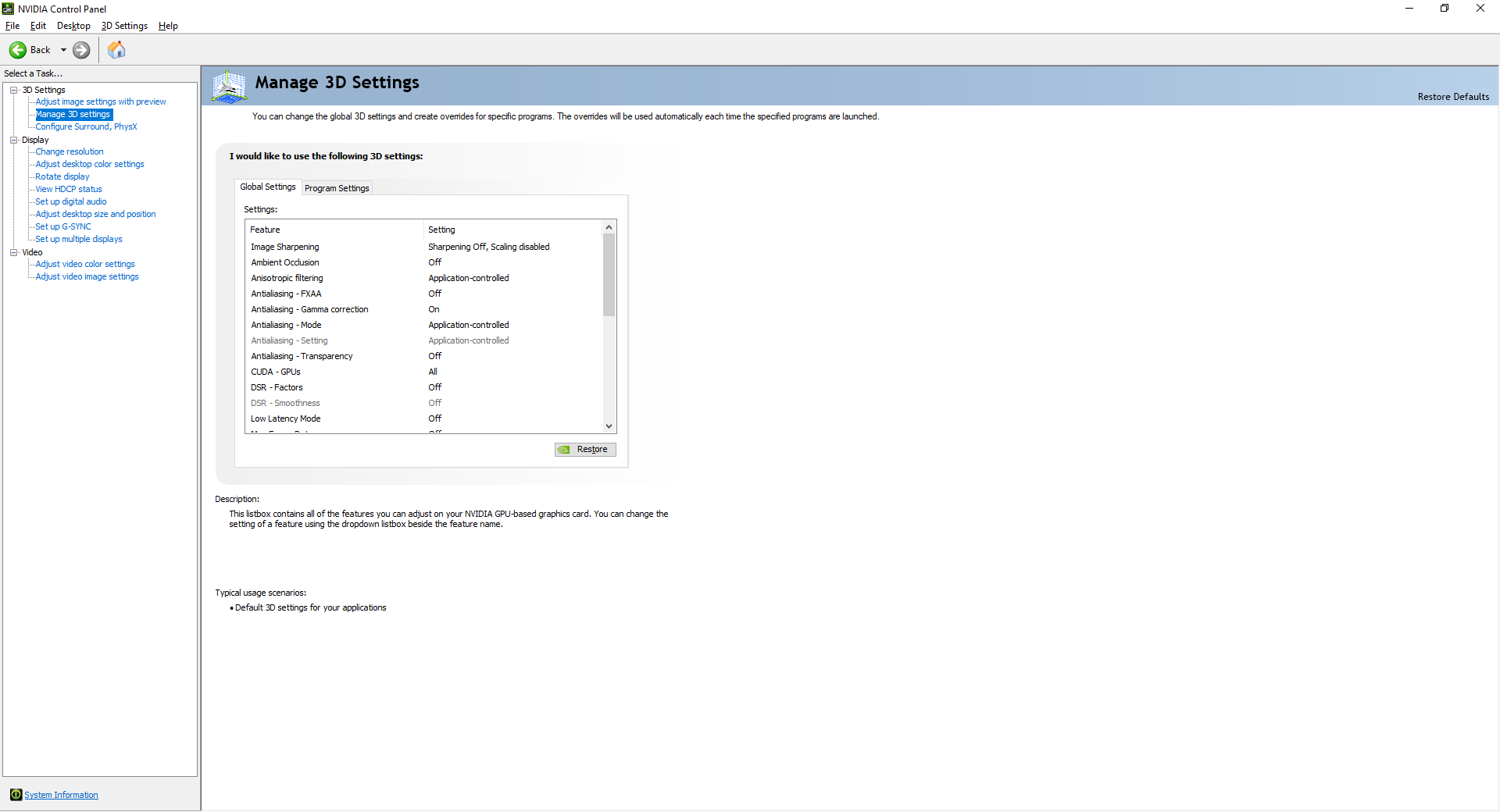

The latest Nvidia 442.19 drivers don't have any major new highlights to discuss, but like AMD the company has been adding features and enhancements for years. Integer scaling is available (on Turing GPUs), and you can adjust 3D settings and video enhancements. But a lot of the extras are within GeForce Experience. That's where you'll find ShadowPlay for streaming and recording your gameplay, hear about driver updates, get recommended settings profiles for most major games, and there's the in-game overlay that allows you to apply filters—including sharpening, select ReShade filters, and more.

There's also no overclocking support built into Nvidia's drivers, but it's not a major loss. Tools like MSI Afterburner and EVGA Precision X1 are readily available for those that want to tinker, and are arguably better than anything AMD or Nvidia are likely to build into their drivers.

We prefer AMD's unified approach, but there's something else we like about AMD's drivers. Generally speaking, the software and drivers discussion ends up being nitpicky. However, there's one thing that we've come to despise about GeForce Experience: the required login and captcha to make things work. We upgrade GPU drivers more frequently than most users, and with a constant stream of new GPUs to test we're more aware of login requirements and captchas than most. Still, it's extremely annoying how frequently GeForce Experience seems to forget login credentials. Please, just make all of that go away, or at least make it optional. We've been data mined enough, thanks.

Frequency and quality of driver updates is a bit harder to quantify. Both companies put out new drivers on a regular basis—AMD had 33 releases in 2019 by our count, and Nvidia had 26 releases (not including non-public drivers). That's 2-3 driver updates per month on average, which some would argue is too many. Both companies also delivered 'game ready' drivers in a timely fashion for all of the major launches, and both had a few hiccups along the way.

Winner: AMD, because logging in to use drivers is a terrible idea

3. Gaming Performance

Unless you're folding protein strands, searching for ET, building an AI to take over the world, mining cryptocurrency or doing some other work, chances are good that you're looking at a graphics card upgrade to play games. We've tested 12 games across multiple resolutions and settings to determine which of these two GPUs ends up being faster. Here are the results:

Average / Minimum FPS

| Game | Setting | RX 5700 XT | RTX 2060 Super | RX 5700 XT vs. RTX 2060 Super |
|---|---|---|---|---|
| 12 Game Average | 1080p Medium | 161.1 / 115.3 | 147.7 / 104.8 | 9% / 10% |
| | 1080p Ultra | 111.1 / 79.9 | 102.1 / 73.5 | 8.8% / 8.8% |
| | 1440p Ultra | 80.6 / 60.9 | 74.5 / 57.2 | 8.2% / 6.4% |
| | 4k Ultra | 45.4 / 35.5 | 42.3 / 33.7 | 7.1% / 5.3% |
| Assassin's Creed Odyssey | 1080p Medium | 101.3 / 77.4 | 105.7 / 77.9 | -4.2% / -0.6% |
| | 1080p Ultra | 73.3 / 55.8 | 66.2 / 50.4 | 10.7% / 10.7% |
| | 1440p Ultra | 56.2 / 44.0 | 53.7 / 41.8 | 4.7% / 5.3% |
| | 4k Ultra | 36.2 / 29.1 | 35.9 / 30.4 | 0.8% / -4.3% |
| Borderlands 3 | 1080p Medium | 168.1 / 121.2 | 138.2 / 97.5 | 21.6% / 24.3% |
| | 1080p Ultra | 95.3 / 74.8 | 77.4 / 62.9 | 23.1% / 18.9% |
| | 1440p Ultra | 67.1 / 55.6 | 55.0 / 46.4 | 22% / 19.8% |
| | 4k Ultra | 36.1 / 30.7 | 30.2 / 26.3 | 19.5% / 16.7% |
| The Division 2 | 1080p Medium | 199.6 / 152.5 | 164.5 / 127.0 | 21.3% / 20.1% |
| | 1080p Ultra | 104.4 / 83.2 | 94.8 / 75.5 | 10.1% / 10.2% |
| | 1440p Ultra | 68.3 / 57.6 | 63.8 / 53.5 | 7.1% / 7.7% |
| | 4k Ultra | 35.3 / 30.6 | 33.4 / 29.4 | 5.7% / 4.1% |
| Far Cry 5 | 1080p Medium | 152.5 / 121.0 | 141.5 / 104.1 | 7.8% / 16.2% |
| | 1080p Epic | 133.8 / 110.1 | 124.6 / 87.6 | 7.4% / 25.7% |
| | 1440p Epic | 100.3 / 85.1 | 88.8 / 73.5 | 13% / 15.8% |
| | 4k Epic | 51.8 / 44.7 | 47.1 / 40.8 | 10% / 9.6% |
| Forza Horizon 4 | 1080p Medium | 218.6 / 172.6 | 181.4 / 143.2 | 20.5% / 20.5% |
| | 1080p Ultra | 166.5 / 141.5 | 137.5 / 116.3 | 21.1% / 21.7% |
| | 1440p Ultra | 134.1 / 116.5 | 111.4 / 94.8 | 20.4% / 22.9% |
| | 4k Ultra | 84.3 / 72.7 | 73.6 / 63.6 | 14.5% / 14.3% |
| Hitman 2 | 1080p 'Medium' | 162.1 / 101.2 | 144.3 / 90.4 | 12.3% / 11.9% |
| | 1080p 'Max' | 121.6 / 68.9 | 115.5 / 65.2 | 5.3% / 5.7% |
| | 1440p 'Max' | 85.3 / 50.9 | 79.3 / 49.5 | 7.6% / 2.8% |
| | 4k 'Max' | 44.9 / 28.3 | 41.2 / 25.6 | 9% / 10.5% |
| Metro Exodus | 1080p Normal | 114.1 / 56.8 | 98.8 / 50.7 | 15.5% / 12% |
| | 1080p High | 81.8 / 43.0 | 74.5 / 40.1 | 9.8% / 7.2% |
| | 1440p High | 63.6 / 36.8 | 56.2 / 34.0 | 13.2% / 8.2% |
| | 4k High | 39.4 / 25.0 | 34.7 / 22.4 | 13.5% / 11.6% |
| The Outer Worlds | 1080p Medium | 155.8 / 93.6 | 166.3 / 102.1 | -6.3% / -8.3% |
| | 1080p Ultra | 106.4 / 71.4 | 112.1 / 79.4 | -5.1% / -10.1% |
| | 1440p Ultra | 71.0 / 50.5 | 76.0 / 58.0 | -6.6% / -12.9% |
| | 4k Ultra | 37.0 / 28.2 | 39.9 / 32.5 | -7.3% / -13.2% |
| Red Dead Redemption 2 | 1080p Low | 159.8 / 117.9 | 145.2 / 107.6 | 10.1% / 9.6% |
| | 1080p High | 106.2 / 80.0 | 96.8 / 73.4 | 9.7% / 9% |
| | 1440p High | 80.5 / 60.4 | 72.3 / 55.1 | 11.3% / 9.6% |
| | 4k High | 47.9 / 37.3 | 41.1 / 29.8 | 16.5% / 25.2% |
| Shadow of the Tomb Raider | 1080p Medium | 153.1 / 120.3 | 134.7 / 101.6 | 13.7% / 18.4% |
| | 1080p Highest | 125.9 / 97.0 | 117.7 / 89.6 | 7% / 8.3% |
| | 1440p Highest | 82.5 / 66.8 | 78.9 / 63.2 | 4.6% / 5.7% |
| | 4k Highest | 42.1 / 34.1 | 41.5 / 35.1 | 1.4% / -2.8% |
| Strange Brigade | 1080p Medium | 222.9 / 189.6 | 216.2 / 190.0 | 3.1% / -0.2% |
| | 1080p Ultra | 162.1 / 119.7 | 142.7 / 110.0 | 13.6% / 8.8% |
| | 1440p Ultra | 116.5 / 90.7 | 104.9 / 85.4 | 11.1% / 6.2% |
| | 4k Ultra | 69.4 / 59.7 | 60.4 / 52.5 | 14.9% / 13.7% |
| Total War: Warhammer 2 | 1080p Medium | 171.3 / 127.7 | 174.8 / 126.2 | -2% / 1.2% |
| | 1080p Ultra | 94.5 / 63.8 | 98.3 / 67.5 | -3.9% / -5.5% |
| | 1440p Ultra | 73.3 / 53.5 | 77.7 / 58.6 | -5.7% / -8.7% |
| | 4k Ultra | 41.1 / 30.1 | 44.9 / 34.8 | -8.5% / -13.5% |

AMD's RX 5700 XT just about sweeps the results, coming out 7-10% faster overall at the tested resolutions. The only games that favored the Nvidia RTX 2060 Super are The Outer Worlds and Total War: Warhammer 2, both of which are, oddly enough, AMD promotional titles. Both games also support the DirectX 11 API (we tested DX12 in Warhammer 2 on AMD GPUs, DX11 on Nvidia), whereas eight of the ten remaining games were tested with either the DX12 or Vulkan API. We also didn't test with ray tracing enabled in the two games that support it, since AMD has so far elected not to add driver support for DXR.
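If you want to check or extend the comparison column yourself, the sketch below shows the arithmetic, using the 12-game average FPS figures from the table. The function name is ours. Results computed from the rounded FPS values can differ by a few tenths of a percent from the published column, presumably because that column was calculated from unrounded data (an assumption on our part).

```python
def pct_faster(amd_fps: float, nvidia_fps: float) -> float:
    """Percent by which the AMD result exceeds the Nvidia result.

    A negative value means the Nvidia card was faster.
    """
    return (amd_fps / nvidia_fps - 1) * 100


# 12-game average FPS (RX 5700 XT, RTX 2060 Super) from the results table.
twelve_game_average = {
    "1080p Medium": (161.1, 147.7),
    "1080p Ultra": (111.1, 102.1),
    "1440p Ultra": (80.6, 74.5),
    "4k Ultra": (45.4, 42.3),
}

for setting, (amd, nvidia) in twelve_game_average.items():
    print(f"{setting:>12}: RX 5700 XT leads by {pct_faster(amd, nvidia):.1f}%")

# The Outer Worlds at 1080p medium is one of the cases that favors Nvidia.
outer_worlds_gap = pct_faster(155.8, 166.3)
print(f"The Outer Worlds 1080p Medium: {outer_worlds_gap:.1f}%")
```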

This isn't an insurmountable lead, and factory overclocked cards could potentially narrow the gap, but both AMD and Nvidia partner cards are typically overclocked to similar levels so that shouldn't shake up the standings much. The good news is that either GPU should easily handle 1080p ultra gaming at 60 fps, and 60 fps is even doable at 1440p ultra on many of the games—Assassin's Creed Odyssey, Borderlands 3 and Metro Exodus being three where one or both cards come up short.

Winner: AMD

4. Power Consumption and Heat Output

These are high-end GPUs and depending on the specific model, PCI Express Graphics (PEG) power connector requirements will vary. Most cards will require both 8-pin and 6-pin PEG connectors. Some factory overclocked models might include dual 8-pin PEG connectors, though almost certainly not because it's strictly necessary. Only the RTX 2060 Super Founders Edition uses a single 8-pin PEG connector.

AMD's RX 5700 XT has an official Typical Board Power (TBP) rating of 225W, while Nvidia's RTX 2060 Super is rated at 175W. Those are both for reference designs, however, and factory overclocked partner cards are very likely to be a bit higher. AMD recommends a minimum 600W power supply, and Nvidia recommends at least a 550W PSU.

In actual use, power requirements with a Core i9-9900K test system are far lower than those PSU recommendations. We measured around 325W at the outlet with the RTX 2060 Super, and 370W with the RX 5700 XT. We'll be refining our power testing with specialized hardware in the near future, because software power readings depend on the drivers, and AMD and Nvidia don't report the same metrics: AMD gives GPU-only power use, while Nvidia gives total board power use. GPU-Z, as an example, showed average power draws of 173.2W for the 2060 Super vs. 179.0W for the 5700 XT, even though outlet power use was 48W higher on the AMD card.

For most PCs, the 30-50W difference in power use isn't going to matter a lot—if your PSU can handle the 2060 Super, it should be able to handle the 5700 XT as well. However, higher power use leads directly to more heat output, which potentially means more fan noise. It's possible for custom cards to use larger coolers and better fans to get around that, but in general the Nvidia RTX 2060 Super will use less power, generate less heat and keep noise levels down.
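As a rough way to relate performance to power, the sketch below divides average frame rate by whole-system outlet power. This is an illustration only: it assumes the approximate outlet readings above (325W and 370W) are representative of the load during the 1440p ultra runs, which the testing does not confirm, and it measures the entire PC rather than the graphics card alone.

```python
def fps_per_watt(avg_fps: float, outlet_watts: float) -> float:
    """Whole-system efficiency: average frames per second per watt at the wall."""
    return avg_fps / outlet_watts


# 12-game 1440p ultra averages paired with the approximate outlet readings.
# Pairing these two measurements is an assumption made for illustration.
rx_5700_xt_efficiency = fps_per_watt(80.6, 370)
rtx_2060_super_efficiency = fps_per_watt(74.5, 325)

nvidia_efficiency_lead_pct = (
    rtx_2060_super_efficiency / rx_5700_xt_efficiency - 1
) * 100

print(f"RX 5700 XT:     {rx_5700_xt_efficiency:.3f} fps/W")
print(f"RTX 2060 Super: {rtx_2060_super_efficiency:.3f} fps/W")
print(f"Nvidia system-level efficiency lead: {nvidia_efficiency_lead_pct:.1f}%")
```

Because the rest of the system draws power too, the gap between the GPUs themselves would be larger than this whole-system figure suggests.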

The fact that Nvidia offers similar (though slightly lower) performance while using the older 12nm manufacturing node is impressive, and we can only look forward to seeing what Nvidia's 7nm Ampere GPUs do later this year.

Winner: Nvidia

5. Value Proposition

Determining which card is the better value is tricky, since all of the above elements are factors. Both cards have a recommended price of $400. Right now, the least expensive RTX 2060 Super cards cost $400, though there's an EVGA RTX 2060 Super SC Ultra Gaming at Newegg that drops to $380 after rebates if you're willing to deal with those. There are rebates on AMD's RX 5700 XT as well, but the least expensive model is the ASRock RX 5700 XT Challenger D 8G OC, which is currently on sale for $360 without any mail-in rebate hoops to jump through.

Individual GPU sales may come and go, but for the past several months at least, the RX 5700 XT has been selling for less than the RTX 2060 Super. AMD also sweetens the deal by offering some free games with RX 5700 XT cards (you'll want to verify the deal is available from the reseller before buying, however). AMD's current Raise the Game bundle will get you Monster Hunter World: Iceborne, Resident Evil 3 and three months of Xbox Game Pass for PC with the RX 5700 XT. How much those games are worth is debatable, but it's obviously a much better deal than getting nothing extra.
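One simple way to fold price and performance together is cost per frame. The sketch below uses the street prices quoted above and the 12-game 1440p ultra averages from our results table. The choice of 1440p ultra as the reference setting and the function name are our own, and the calculation ignores bundled games and rebate hassle.

```python
def dollars_per_fps(price_usd: float, avg_fps: float) -> float:
    """Purchase price divided by average frame rate (lower is better)."""
    return price_usd / avg_fps


# Prices quoted in the article; FPS values are the 1440p ultra averages.
offers = {
    "RX 5700 XT (ASRock Challenger, $360)": dollars_per_fps(360, 80.6),
    "RTX 2060 Super (cheapest, $400)": dollars_per_fps(400, 74.5),
    "RTX 2060 Super (EVGA after rebate, $380)": dollars_per_fps(380, 74.5),
}

# Print the offers from best to worst value.
for name, cost in sorted(offers.items(), key=lambda item: item[1]):
    print(f"{name}: ${cost:.2f} per fps")
```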

Winner: AMD

| Round | Nvidia GeForce RTX 2060 Super | AMD Radeon RX 5700 XT |
|---|---|---|
| Featured Technology | ✓ | |
| Drivers and Software | | ✓ |
| Gaming Performance | | ✓ |
| Power Consumption | ✓ | |
| Value Proposition | | ✓ |
| Total | 2 | 3 |

Bottom Line

Lower prices go a long way toward overcoming any potential feature and power advantages Nvidia might have. If ray tracing in games truly catches on—we want more games that look like Control rather than Battlefield V or Shadow of the Tomb Raider, for example—the RTX 2060 Super could be a better long-term purchase. Will it still be fast enough to handle all the extra effects, or will you need to upgrade to a 3060 or some other future card? We can't say for certain. Regardless, we've been hearing about all the 'amazing' ray tracing enabled games coming soon for more than a year now, and most have failed to live up to the hype.

Our advice is to get a card that runs current games better, and save ray tracing for another day. AMD's RX 5700 XT costs less than the RTX 2060 Super, comes with a gaming bundle as an added bonus, has good driver and software support, and outperforms the competition in ten of the 12 games we tested. If you want the best GPU right now for $400 or less, AMD's RX 5700 XT, Navi 10 and the RDNA architecture are the winner.

If you're willing to spend more money, AMD falls behind the RTX 2070 Super and beyond. Alternatively, you can still find a few RTX 2070 cards at clearance pricing, like the Gigabyte RTX 2070 Windforce 8G (also available at B&H and Newegg for the same $400 asking price). The RTX 2070 ends up being 5% faster than the RTX 2060 Super, making performance mostly a tie with the RX 5700 XT. It still costs more than AMD's card, but ray tracing use in games should pick up with both the PlayStation 5 and Xbox Series X coming later this year and supporting the feature.

Pricing can also change, and Nvidia dropped the RTX 2060 starting price to $300 when AMD launched the RX 5600 XT in January 2020. The RTX 2060 Super prices didn't drop, but if they do, Nvidia could reclaim the $350 crown. What’s more likely is that Nvidia's next-gen Ampere GPUs will shake things up in the graphics card market when they launch later this year.

Overall Winner: AMD

Jarred Walton is a senior editor at Tom's Hardware focusing on everything GPU. He has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.

Darkbreeze:

> has good driver and software support

Really? And how exactly do you figure that to be accurate when AMD themselves admit they are still trying to iron out problems on Navi that have existed since day 1, 7 months later?

https://www.techspot.com/news/84005-gamers-ditching-radeon-graphics-cards-over-driver-issues.html

Makaveli:

> Darkbreeze said: Really? And how exactly do you figure that to be accurate when AMD themselves admit they are still trying to iron out problems on Navi that have existed since day 1, 7 months later?
>
> https://www.techspot.com/news/84005-gamers-ditching-radeon-graphics-cards-over-driver-issues.html

Not everyone is having issues.

Even in that link Techspot themselves say they have rarely ran into any issues with any of the Navi radeon's they have.

Not saying it doesn't happen just not everyone is affected.

travsb1984: Both these cards should have come out at $250 max and been down to $200 by now. The fact that they did not is why many will probably be migrating to the next gen console when it comes out. Whether AMD and Nvidia would keep prices sky high after the mining craze has been answered loud and clear. They could have gotten a couple hundred bucks out of me every year and a half or so. Now it looks like they'll get 150 of my bucks through Microsoft and that'll have to last them around 5 or 6 years... I think in the long run their greed will bite them in the butt.

Darkbreeze: It doesn't matter that not everyone is having issues. If SOME are having issues, it makes "good driver and software support" a lot less, believable. And you apparently didn't read the article well because they also clearly said that this is not limited to Navi, neither the problems, nor the poll, nor the driver issues were limited to Navi, but primarily many of the core issues, especially the black screens etc., are. Seriously, we just had one member who updated his drivers and ALL FOUR of his working AMD cards instantly began having problems. FOUR. Just from a driver update. Two days ago.

Last year we noticed that batches of RX 580 cards were dying off right after some driver updates. So yeah, the fact that not everybody is affected does not forgive the fact that there is a problem.

AMD themselves SAY there IS a problem, and that they are working on it. So how do you refute what they've said themselves? Not everybody had coil whine or was bothered by the GTX 970 3.5GB memory issues either, but they are/were STILL problems, regardless. Nobody would have said "The GTX 970 has terrific memory support" in light of the issues surrounding the architecture despite the fact that for most people it was a non-issue.

mrface:

> travsb1984 said: ...is why many will probably be migrating to the next gen console when it comes out....

This dead horse is touted out after each architecture release and propped up with a price point that never comes to fruition. While I agree that greed is the reason (xCOIN mining market is long been reduced to niche enthusiasts and most people who still worry about crypto rely on trading coins rather than mining coins) they havent dropped the price and is ridiculous, however, people will not be moving in droves away from PC gaming when the next gens come out. Its just as preposterous as someone saying "PS5 will be $500+, PS gamers are going to be flocking to other consoles or PC." Neither is a good argument as humans are innately tribal when it comes to their wants and cost is rarely a factor if someone decides to make a change, mostly due to brand loyalty and change is due to a major event.

mrface:

> Darkbreeze said: It doesn't matter that not everyone is having issues. If SOME are having issues, it makes "good driver and software support" a lot less, believable.

As mainly a ATi/Radeon/AMD plebe for years(since atleast the 7500 series, :X), I will say AMD is really lacking on the driver side of the house.

Makaveli: i've been using Radeon's since the Radeon 64 DDR

And the drivers are alot better compared to back in the day. And for me personally I've not had many issues in the 20+ years i've been using ATI gpus.

And as far as the article I read it before you posted it here as I'm a member on techspot.

Not had a single issue on my RX580 which spent many years in my old x58 i7-970 build and now transfered to my Ryzen 3800x build.

gallovfc: Why they test 1080p Medium, and 1080p, 1440p and 4K only at Ultra I cannot understand...

Why 1080p medium ?!? Why not 1440p and 4K medium???

1080p Ultra is easy for these cards, 1440p medium could allow players to get high fps,

But specially 4K medium (or high) would ALLOW these cards to RUN 4K, since they obviously CAN'T at 4K Ultra...

So here's my request: for 1440p capable cards, forget about 1080p medium, add 1440p and 4K medium.

Darkbreeze:

> Makaveli said: i've been using Radeon's since the Radeon 64 DDR
>
> And the drivers are alot better compared to back in the day. And for me personally I've not had many issues in the 20+ years i've been using ATI gpus.
>
> And as far as the article I read it before you posted it here as I'm a member on techspot.
>
> not had a single issue on my RX580.

Like I said, your sample of one is not indicative of any trends throughout the worldwide community, nor are even 75% of people not having problems an indication that no problems exist. If ten out of every 100 Navi owners have a problem, then it's a problem. Period.

Makaveli:

> Darkbreeze said: Like I said, your sample of one is not indicative of any trends throughout the worldwide community, nor are even 75% of people not having problems an indication that no problems exist. If ten out of every 100 Navi owners have a problem, then it's a problem. Period.

And if you read my original post I did say the problem was there just not effecting everyone. Very last line of my post.

no where did I say it was not a problem but you keep pushing that to further your point. Which was a point I never made.