HetCCL makes clustered Nvidia and AMD AI accelerators play nice with each other via RDMA — vendor-agnostic collective communications library removes an obstacle to heterogeneous AI data centers

New software library forms a red and green Voltron

In any datacenter, whether it's for AI or not, fast networked communication across nodes is just as important as the speed of the nodes themselves. When doing AI work, developers are steered to vendor-specific networking libraries like Nvidia's NCCL or AMD's RCCL. Now, in a new paper, a group of South Korean scientists has proposed a library called HetCCL, a vendor-agnostic approach that allows clusters composed of GPUs from both vendors to operate as one.

Although it can be used simply for communicating between multiple GPUs in one setup, a collective communications library (CCL) in a datacenter often ends up using good ol' Remote Direct Memory Access (RDMA) to let applications pass data to a GPU somewhere else in the network. Think of sending network packets directly into a device's memory (in this case GPU VRAM), rather than going through the driver, the TCP/IP stack, and the OS networking layer, burning a metric ton of CPU cycles in the process.
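For readers who haven't met a collective operation before, here is a minimal sketch of one that NCCL and RCCL both provide: a sum all-reduce, where every GPU ends up holding the element-wise sum of everyone's buffer. The sketch simulates the textbook ring algorithm in plain Python, with lists standing in for GPU buffers and list copies standing in for network transfers. It is not taken from the HetCCL paper, and the function name `ring_all_reduce` is made up for illustration.

```python
from typing import List


def ring_all_reduce(buffers: List[List[float]]) -> List[List[float]]:
    """Simulate a sum all-reduce across len(buffers) ranks arranged in a ring.

    Illustrative only: each inner list plays the role of one GPU's buffer.
    """
    n = len(buffers)
    if n == 0:
        return []
    length = len(buffers[0])
    if any(len(b) != length for b in buffers):
        raise ValueError("all ranks must hold buffers of equal length")
    if length % n != 0:
        raise ValueError("this sketch needs a length divisible by the rank count")

    chunk = length // n
    data = [list(b) for b in buffers]  # work on copies, leave the inputs alone

    def span(c: int) -> range:
        """Element indices covered by chunk c."""
        return range(c * chunk, (c + 1) * chunk)

    # Phase 1, reduce-scatter: at step s, rank r sends chunk (r - s) % n to
    # its right-hand neighbour, which adds it into its own copy.
    for s in range(n - 1):
        # Snapshot outgoing payloads first so all sends in a step are simultaneous.
        sends = [((r - s) % n, [data[r][i] for i in span((r - s) % n)])
                 for r in range(n)]
        for r, (c, payload) in enumerate(sends):
            dst = (r + 1) % n
            for i, value in zip(span(c), payload):
                data[dst][i] += value

    # Rank r now owns the fully reduced chunk (r + 1) % n.
    # Phase 2, all-gather: pass the finished chunks around the ring.
    for s in range(n - 1):
        sends = [((r + 1 - s) % n, [data[r][i] for i in span((r + 1 - s) % n)])
                 for r in range(n)]
        for r, (c, payload) in enumerate(sends):
            dst = (r + 1) % n
            for i, value in zip(span(c), payload):
                data[dst][i] = value

    return data


if __name__ == "__main__":
    # Two ranks, four elements each; both end up with the element-wise sum.
    print(ring_all_reduce([[1, 2, 3, 4], [10, 20, 30, 40]]))
    # prints [[11, 22, 33, 44], [11, 22, 33, 44]]
```

In a real cluster each of those neighbour-to-neighbour sends is a network transfer, which is where RDMA earns its keep.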

The paper's authors claim that HetCCL is a world-first drop-in replacement for the vendor-specific CCLs, accomplishing multiple feats at once by enabling cross-platform communication and load balancing. First and foremost, HetCCL can make multi-vendor deployments viable, letting developers use the aggregate compute capacity of Nvidia and AMD server racks for a given task.

Second, HetCCL purports to be a direct library replacement, apparently requiring only that developers link their application to the HetCCL code rather than their vendor's CCL. The best analogy here is changing a DLL in a game to inject fancy post-processing filters. This way, there should be no source code changes necessary anywhere, from the application all the way to drivers, a fact the HetCCL team proudly calls out.

Third, it implicitly adds support for any future GPU vendors: once linked to HetCCL, application code doesn't have to concern itself with whether its data transfer calls to, say, NCCL, will actually end up at Nvidia GPUs. And last but definitely not least, HetCCL accomplishes all of this with minimal overhead, sometimes even outperforming the original CCL thanks to better default tuning parameters.
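The drop-in idea can be sketched in a few lines of Python. Everything here is hypothetical: the function names, the string vendor labels, and the modeling assumption that a single-vendor backend rejects ranks from another vendor are all invented for illustration, and none of it reflects HetCCL's actual interface. The point is only that `training_step`, standing in for application code, never changes; what changes is which backend gets bound to it from the outside.

```python
from typing import Callable, List

Vector = List[int]
AllReduce = Callable[[List[Vector]], List[Vector]]


def _sum_all_reduce(per_rank: List[Vector]) -> List[Vector]:
    """Give every rank the element-wise sum of all ranks' vectors."""
    total = [sum(column) for column in zip(*per_rank)]
    return [list(total) for _ in per_rank]


def make_single_vendor_backend(vendor: str, rank_vendors: List[str]) -> AllReduce:
    """Hypothetical stand-in for a vendor CCL.

    Assumption for this sketch: it refuses to set up communication
    if any rank belongs to a different vendor.
    """
    foreign = sorted(set(rank_vendors) - {vendor})
    if foreign:
        raise RuntimeError(f"{vendor}-only backend cannot reach ranks from: {foreign}")
    return _sum_all_reduce


def make_vendor_agnostic_backend(rank_vendors: List[str]) -> AllReduce:
    """Hypothetical stand-in for a vendor-agnostic CCL: any mix of vendors is fine."""
    return _sum_all_reduce


def training_step(all_reduce: AllReduce, gradients: List[Vector]) -> List[Vector]:
    """Application code. Identical no matter which backend was bound."""
    return all_reduce(gradients)


if __name__ == "__main__":
    vendors = ["nvidia", "amd"]          # a mixed two-rank cluster
    gradients = [[1, 2], [10, 20]]

    try:
        make_single_vendor_backend("nvidia", vendors)
    except RuntimeError as err:
        print("single-vendor backend:", err)

    backend = make_vendor_agnostic_backend(vendors)
    print(training_step(backend, gradients))  # prints [[11, 22], [11, 22]]
```

In the real thing, the article's description suggests the swap happens at link time rather than through a function argument, but the division of labor is the same.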

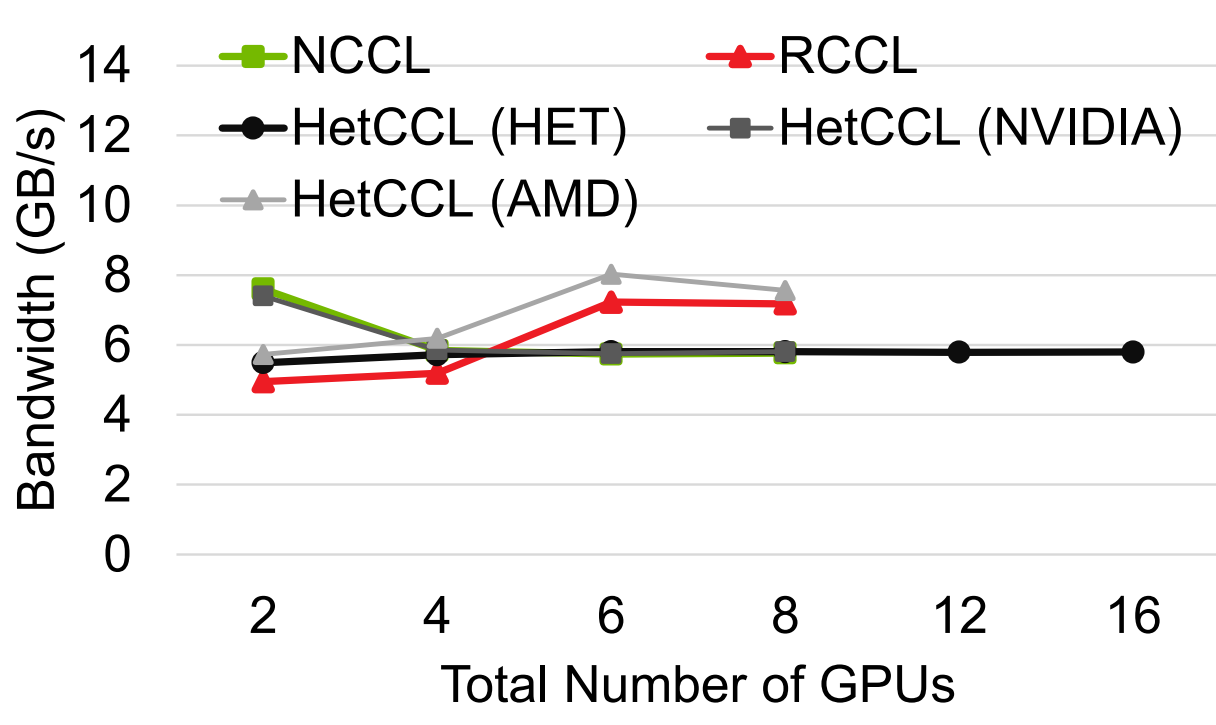

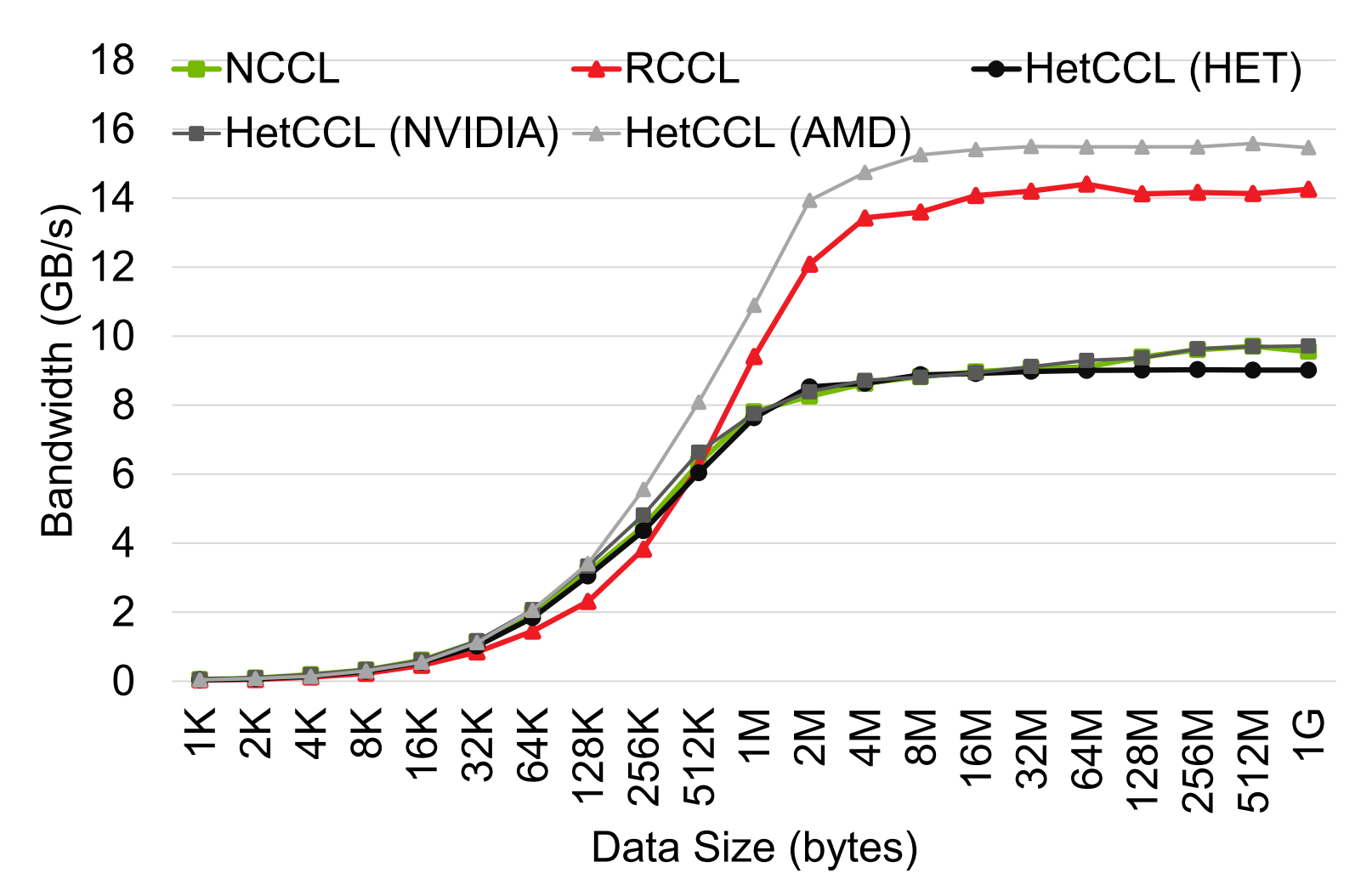

To illustrate this, the scientists ran tests on a four-node cluster: two nodes with four Nvidia GPUs each, and two nodes with four AMD GPUs each. Do note that the results are not meant to be cross-vendor benchmarks, but rather an illustration of HetCCL's potential with meager test resources. After all, the Nvidia systems had PCIe 3.0 GPUs while the AMD systems had PCIe 4.0 units; all of it old hardware by now.

In many cases, the results reach the theoretical maximum you would get by blindly adding up Nvidia and AMD computing power, an impressive achievement, though naturally this will vary greatly across setups and workloads. Under the right conditions, HetCCL could lead to lower costs for training models, as efficiently using both Nvidia and AMD GPUs simultaneously means that tasks no longer have to be split up between clusters and ultimately wait on each other. There could also be man-hour savings in managing said tasks.
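To make the "adding up" arithmetic concrete, here is a small sketch of one way work could be divided across unequal nodes so that nobody sits idle waiting on a slower peer: give each node a slice of the batch proportional to its throughput. This is a generic proportional split, not the load-balancing scheme from the paper, and the node names and throughput figures in the usage example are invented.

```python
from typing import Dict


def split_batch(global_batch: int, throughput: Dict[str, float]) -> Dict[str, int]:
    """Split global_batch across nodes in proportion to their throughput.

    Uses largest-remainder rounding so the shares always sum to global_batch.
    """
    if global_batch < 0:
        raise ValueError("global_batch must be non-negative")
    if any(t < 0 for t in throughput.values()):
        raise ValueError("throughput values must be non-negative")
    total = sum(throughput.values())
    if total <= 0:
        raise ValueError("at least one node needs positive throughput")

    # Ideal (fractional) share per node.
    exact = {name: global_batch * t / total for name, t in throughput.items()}
    # Round down first, then hand leftover samples to the largest remainders.
    shares = {name: int(x) for name, x in exact.items()}
    leftover = global_batch - sum(shares.values())
    by_remainder = sorted(exact, key=lambda name: exact[name] - shares[name],
                          reverse=True)
    for name in by_remainder[:leftover]:
        shares[name] += 1
    return shares


if __name__ == "__main__":
    # Made-up relative speeds: one node type is three times faster than the other.
    print(split_batch(1024, {"fast-node": 3.0, "slow-node": 1.0}))
    # prints {'fast-node': 768, 'slow-node': 256}
```

With a split like this, both node types finish their slice at roughly the same time, which is the condition under which combined throughput approaches the simple sum of the parts.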

The major caveat is that it's hard to imagine a cross-vendor AI datacenter deployment in the first place, given that picking a GPU vendor also implies choosing a software ecosystem, and for now Nvidia's offerings are the standard. Plus, sysadmins are conservative by nature, opting to stick to one vendor for ease of maintenance and support.

The other caveat is that abstracting the networking layer away is only one step. Model training, like most any AI-related task run at datacenter level, involves tons of GPU-specific code and setup optimizations. That limitation will still exist regardless of how neatly cross-platform the networking layer is.

Having said all that, HetCCL's entire point is to show that removing a major roadblock for the adoption of heterogeneous setups is possible, and others may yet follow in its footsteps.

Bruno Ferreira is a contributing writer for Tom's Hardware. He has decades of experience with PC hardware and assorted sundries, alongside a career as a developer. He's obsessed with detail and has a tendency to ramble on the topics he loves. When not doing that, he's usually playing games, or at live music shows and festivals.