Apple M1 Ultra's 64-Core GPU Fails to Dethrone the Mighty RTX 3090

Apple's GPU performance claims fall short, again.

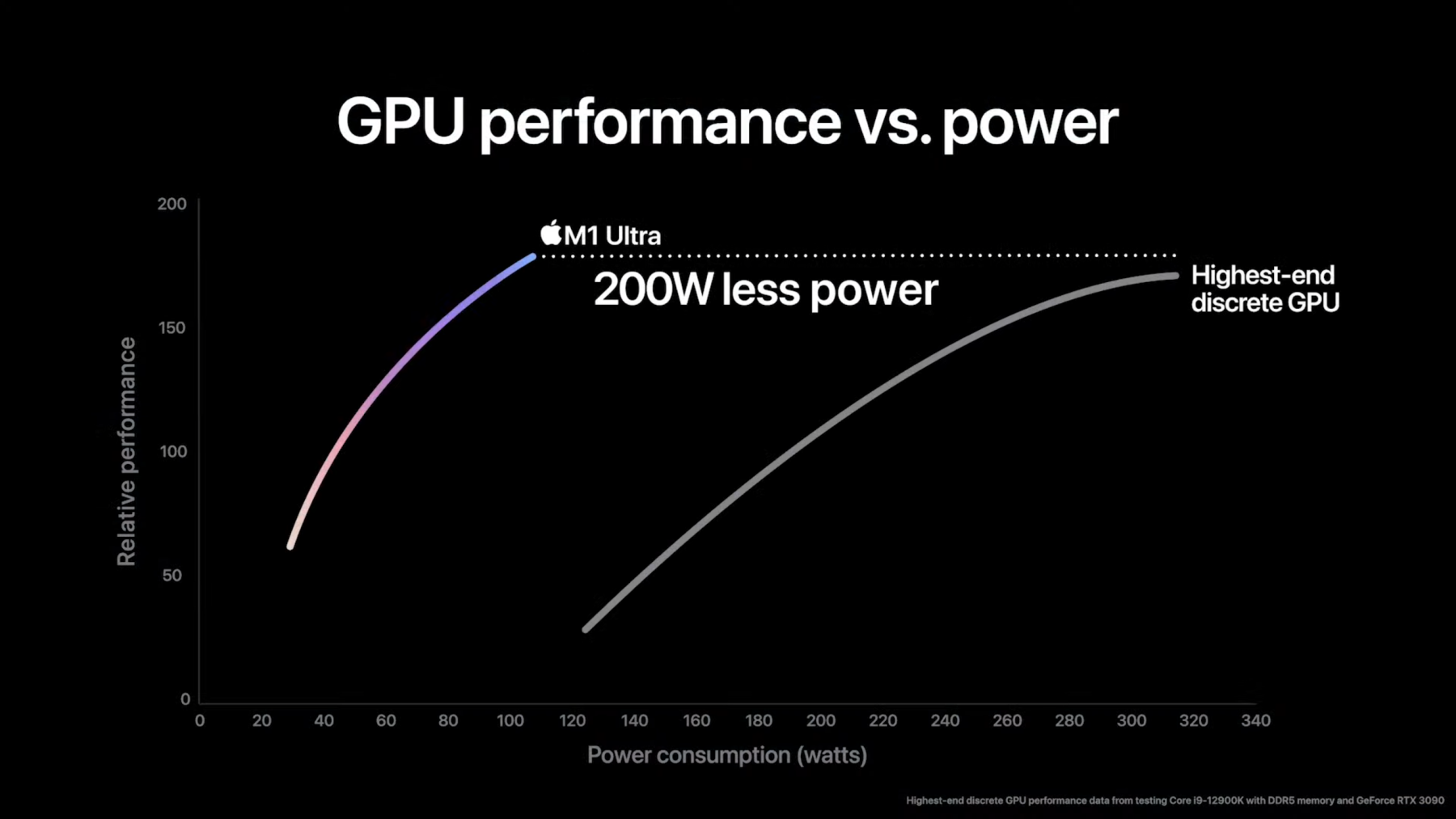

When Apple first announced its new M1 Ultra last week, the company was full of bluster, boasting about the chip's performance credentials. The company claimed that the M1 Ultra could offer greater performance than the Intel Core i9-12900K while consuming 100 fewer watts at load. But the most outrageous claim was likely the assertion that the M1 Ultra's 64-core GPU offered better performance than the flagship NVIDIA GeForce RTX 3090 while undercutting it by 200 watts in power consumption.

Those parts rank among the best CPUs and best graphics cards, and many people were rightfully skeptical about these claims, especially after Apple's GPU performance projections for the M1 Pro and M1 Max didn't quite mesh with real-world performance. To test Apple's claims, the folks at the Verge got their hands on a maxed-out Mac Studio configured with the most potent M1 Ultra SoC (20-core CPU, 64-core GPU) paired with 128GB of unified memory.

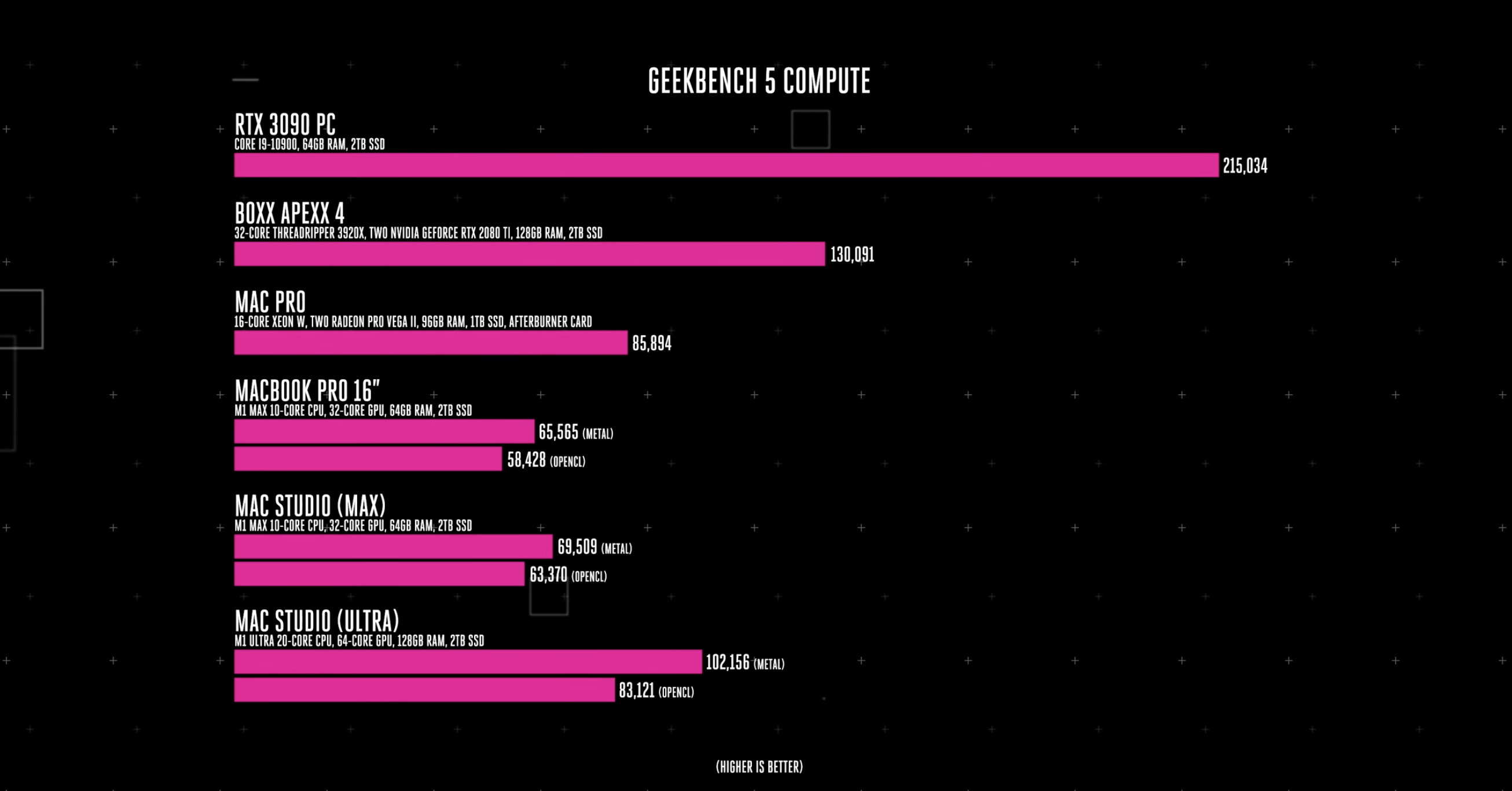

The most problematic aspects of Apple's benchmarks from last week were that it provided no frame of reference for its figures, didn't properly label them, and didn't even tell us which apps were being tested. This is typical Apple behavior, so it shouldn't be too surprising. However, the Verge's testing showed that its RTX 3090 test rig with a Core i9-10900 and 64GB of RAM put up a Geekbench 5 Compute score of 215,034 compared to 83,121 for the Mac Studio with M1 Ultra (102,156 when using Metal).

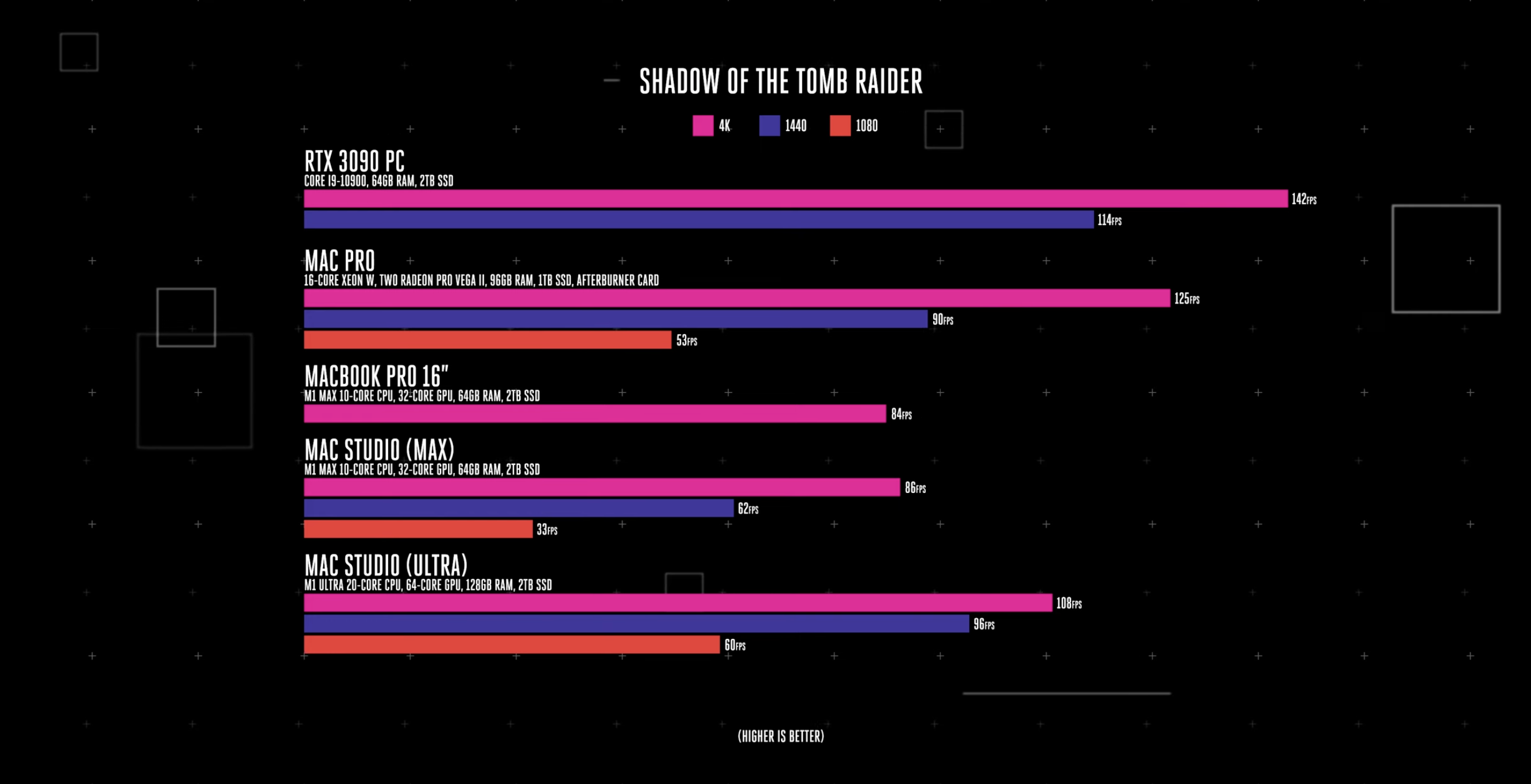

Not everyone puts a lot of stock in synthetic benchmarks like Geekbench, so the Verge also fired up Shadow of the Tomb Raider, and the RTX 3090 easily mopped the floor with the M1 Ultra, delivering 142 fps at 1440p and 114 fps at 4K. Unfortunately, the Mac Studio could only muster 108 fps and 96 fps, respectively. Those aren't horrible numbers, but we expected more from an alleged RTX 3090 killer.
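For a sense of scale, the gap can be worked out directly from the four frame rates quoted above. The snippet below is only a back-of-the-envelope sketch: the dictionary layout and the `lead_percent` helper are illustrative names, not anything from the Verge's test suite.

```python
# Shadow of the Tomb Raider frame rates (fps) as quoted in the article.
results = {
    "1440p": {"rtx_3090": 142, "m1_ultra": 108},
    "4K": {"rtx_3090": 114, "m1_ultra": 96},
}


def lead_percent(faster: float, slower: float) -> float:
    """Return how much faster `faster` is than `slower`, in percent."""
    return (faster / slower - 1.0) * 100.0


# Relative lead of the RTX 3090 over the M1 Ultra at each resolution.
leads = {
    res: lead_percent(r["rtx_3090"], r["m1_ultra"])
    for res, r in results.items()
}

for res, lead in leads.items():
    print(f"{res}: RTX 3090 leads by {lead:.1f}%")
```

By this math, the RTX 3090's advantage is roughly 31 percent at 1440p and just under 19 percent at 4K, so the gap narrows as the resolution climbs.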

Perhaps more important is that the publication's $6,199 Mac Studio provided nearly as much grunt as its previous-generation $14,000 Mac Pro test system in a much smaller package. Arguably, that's a more impressive feat for Apple than picking a fight with dedicated graphics cards like the RTX 3090.

Why does Apple even bother with these fanciful benchmark graphs to tout superiority over processors and GPUs from the PC sphere? Even with its 5nm process node, Apple can only push the 64-core M1 Ultra GPU so far in a 60-watt envelope. On the other hand, the RTX 3090 can take full advantage of its 350-watt TDP to deliver blazing fast and consistent performance in a wide variety of scenarios. Sure, there may be a few specific benchmarks where the M1 Ultra's GPU comes out ahead on the performance-per-watt scale, but if we're talking all-out performance, we have to side with the RTX 3090.
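The difference between performance-per-watt and all-out performance is easy to illustrate with the numbers already on the table. The sketch below pairs the 1440p frame rates from the Verge's test with the 60-watt and 350-watt figures mentioned above. That pairing is an assumption made purely for illustration: nobody measured actual GPU power draw during the game, so the resulting fps-per-watt values are rough at best. The `fps_per_watt` helper and the dictionary keys are hypothetical names.

```python
# Figures quoted in the article. The wattages are the power envelopes the
# article mentions, NOT measured draw during the game (an assumption).
gpus = {
    "M1 Ultra (64-core GPU)": {"fps_1440p": 108, "watts": 60},
    "RTX 3090": {"fps_1440p": 142, "watts": 350},
}


def fps_per_watt(fps: float, watts: float) -> float:
    """Frames per second delivered per watt of the stated power envelope."""
    return fps / watts


# Efficiency under the assumption that each GPU draws its full envelope.
efficiency = {
    name: fps_per_watt(g["fps_1440p"], g["watts"])
    for name, g in gpus.items()
}

# The two metrics crown different winners.
fastest = max(gpus, key=lambda name: gpus[name]["fps_1440p"])
most_efficient = max(efficiency, key=efficiency.get)

print(f"Fastest at 1440p: {fastest}")
print(f"Most efficient (assumed envelopes): {most_efficient}")
```

Under those assumptions the M1 Ultra comes out well ahead on efficiency while the RTX 3090 still posts the higher frame rate, which is exactly why the choice of metric matters so much in a chart like Apple's.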

Are the M1 Ultra's 20 CPU cores and 64 GPU cores impressive? Yes, most definitely. However, should Nvidia GPU and Intel CPU engineers be hanging their heads in shame over the mere arrival of the M1 Ultra? Absolutely not.


Brandon Hill is a senior editor at Tom's Hardware. He has written about PC and Mac tech since the late 1990s with bylines at AnandTech, DailyTech, and Hot Hardware. When he is not consuming copious amounts of tech news, he can be found enjoying the NC mountains or the beach with his wife and two sons.


JamesJones44

Not that I think an M1 Ultra is even comparable to a 3090, but this test is just as flawed as the synthetic benchmarks are. Shadow of the Tomb Raider runs under Rosetta 2 and is not a native binary. It's already been shown with several benchmarks that running games under Rosetta 2 has a 10 to 20% net reduction in FPS.

They should do a real test with a game/app that is optimized for both platforms if they want to know what the real world difference is.

Nolonar

the Verge also fired up Shadow of the Tomb Raider, and the RTX 3090 easily mopped the floor with the M1 Ultra, delivering 142 fps at 4K and 114 fps at 1440p.

I'm surprised nobody thought it was weird that the results for 4K were better than 1440p, and much better than 1080p.

So I went over to The Verge and realized that they must've fixed their chart by now:

https://www.theverge.com/22981815/apple-mac-studio-m1-ultra-max-review

You might want to update your article.

Nuwan Fernando

Nolonar said: I'm surprised nobody thought it was weird that the results for 4K were better than 1440p, and much better than 1080p.

So I went over to The Verge and realized that they must've fixed their chart by now:

https://www.theverge.com/22981815/apple-mac-studio-m1-ultra-max-review

You might want to update your article.

Even I was wondering for a moment, how can it gain more performance going from 1080p to 4K.

peachpuff

Nolonar said: I'm surprised nobody thought it was weird that the results for 4K were better than 1440p, and much better than 1080p.

This is the verge we're talking about, they barely know how to build a pc.

https://youtu.be/M-2Scfj4FZk

salgado18

We definitely need professional reviews for this, but the propaganda has already worked. Most people don't look up reviews to buy products, they just look at base specs and price, and maybe product pages. Also, Apple got the attention they need, now the M1 Ultra is in more news than it would if these numbers were more realistic. I hope Tom's can do an in-depth review of the machine, with some good analysis especially of the power and heat (it's hard to believe the chip can do so much while using so little power).

peachpuff said: This is the verge we're talking about, they barely know how to build a pc.

But what does it say about Tom's when they simply copy-paste a chart from there without even second-guessing or questioning the results, when they obviously can't be true? Does that make them worse than The Verge, as they can't even interpret some FPS results?

It's one thing if you make a chart and label it wrong by accident before uploading it. It's another thing altogether if you find that chart online, look at it, and even write about the results without ever wondering whether or not these results even make sense in the first place.

larkspur

JamesJones44 said: They should do a real test with a game/app that is optimized for both platforms if they want to know what the real world difference is.

Shadow of the Tomb Raider is a real world app. What you suggest would be cherry-picking - which is exactly what Apple would like you to do.

Conrad Orc

It would only be cherry-picking if SoTR was also natively coded for the Apple ecosystem. What James is suggesting is getting a fuller sample of applications, some which aren't just for gaming, some which are. If you want facts, you need good science data.

JamesJones44

larkspur said: Shadow of the Tomb Raider is a real world app. What you suggest would be cherry-picking - which is exactly what Apple would like you to do.

It has nothing to do with Apple and "cherry picking". Pick any App or game that isn't running under emulation. Flip the script and run Shadow of the Tomb Raider in Wine under WSL, at least then both are in emulation. I'm saying either run both emulated or run both native, there are plenty of Apps and Games that have native binaries for both.

sundragon

Are we gonna talk about power consumption? LMAO I suspect the Ryzen is a bit more hungry and this is an apples to oranges comparison... Wow, Tom's I've been a member since the dark ages, what is going on with these crappy reviews?