Deep Render Says Its AI Video Compression Tech Will 'Save the Internet'

Firm asserts that its video compression is already delivering 5x smaller file sizes.

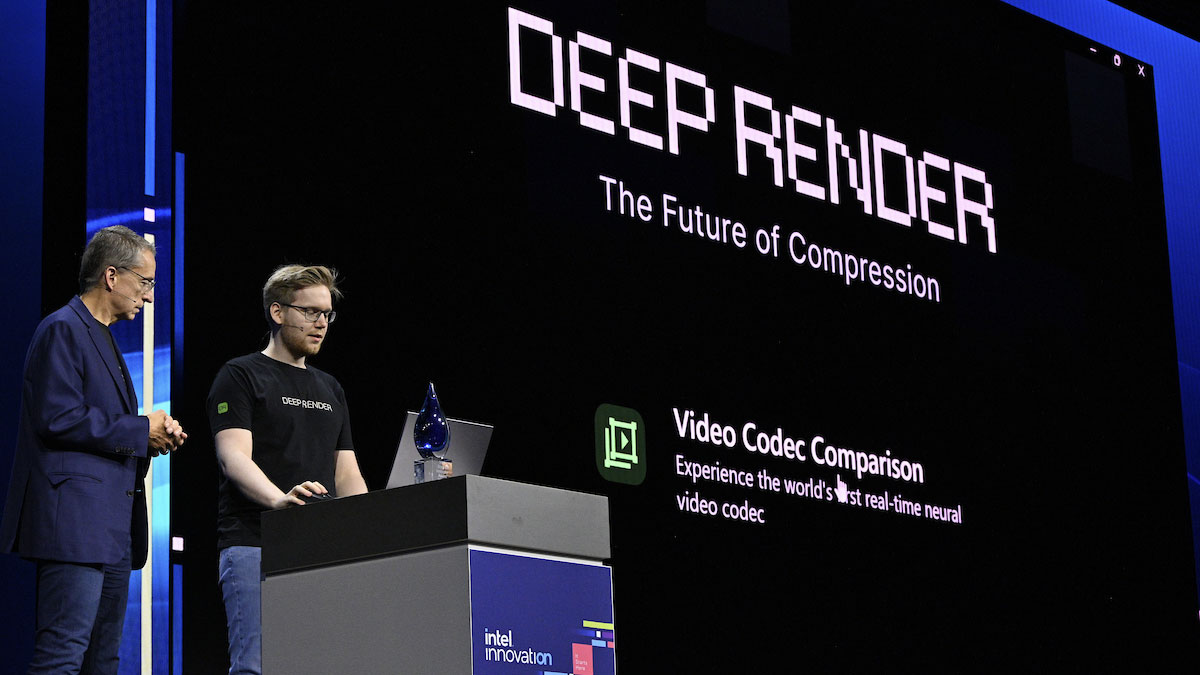

Deep Render is a startup aiming AI smarts at the decades-old computing problem of compression. It has developed AI-only video compression technology that it claims already delivers 5x smaller video files, with sights set on improvements of up to 50x. The firm was recently accepted into the Intel Ignite startup acceleration program, which prompted the video presentation below.

As an older computer enthusiast, I have experience with file and disk compression dating back to the 8- and 16-bit eras. Nowadays, the most useful compression algorithms make video files smaller while maintaining high-quality imagery and audio. As the Intel Ignite participant clip above notes, transferring video data is a huge problem for the internet's infrastructure. Moreover, data-hungry, high-quality, high-frame-rate, high-resolution videos and streams are increasingly popular.

Chri Besenbruch and Arsalan Zafar co-founded Deep Render while they were computer science students at London's Imperial College. The pair asserts that today's advanced video requirements push up data volumes while traditional compression techniques and the internet buckle under the pressure. Thus, people expecting great online video experiences often have to cope with lower frame rates, dropped frames, stuttering, artifacts, and other undesirable side effects of bandwidth constraints.

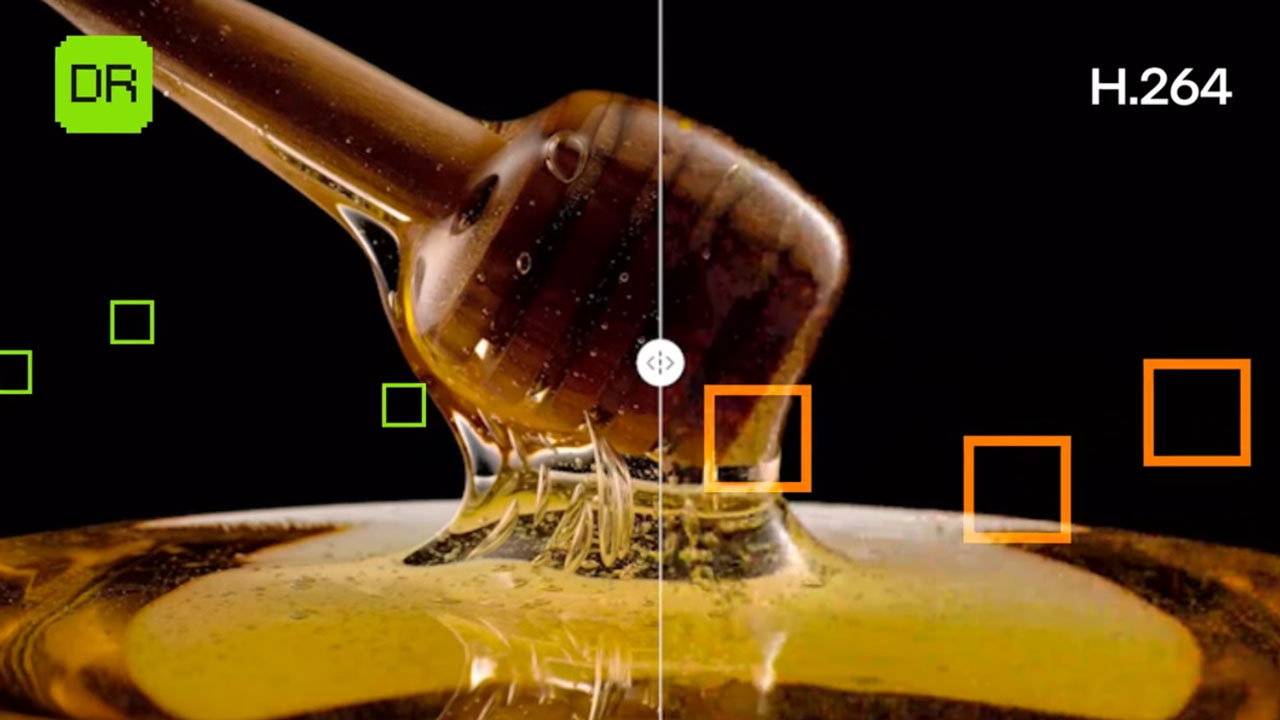

The Deep Render duo confidently reckons they "can fix this problem." Their technology is said to eschew the old guard entirely, running source video through a purely AI-driven compression pipeline. Zafar explains that "compression is all about exploiting redundancies" in the data and asserts that Deep Render's AI compression "exploits redundancies in a far more fine-grained manner, tracing every single pixel, its movements, and destination in the frame sequence." The company boasts that it is "at 5x smaller file sizes now," and estimates that its AI compression tech could eventually deliver files 50x smaller than those produced by technologies like H.264.
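To make Zafar's point about redundancy concrete, here is a minimal sketch of the classical version of the idea: motion compensation, where the encoder works out how content moved between frames and sends only what that motion doesn't explain. This is plain shift matching of the kind conventional block-based codecs such as H.264 build on, not Deep Render's pipeline, which the company has not published; the frame data and helper names (`shift_right`, `best_shift`, and so on) are hypothetical.

```python
# Toy illustration of temporal redundancy via classical motion compensation.
# Assumption: simple horizontal shift matching, NOT Deep Render's neural method.
from typing import List

Frame = List[List[int]]


def shift_right(frame: Frame, dx: int) -> Frame:
    """Shift every row right by dx pixels, repeating the leftmost pixel at the edge."""
    return [[row[max(x - dx, 0)] for x in range(len(row))] for row in frame]


def residual(actual: Frame, predicted: Frame) -> Frame:
    """Signed per-pixel difference the encoder would still have to send."""
    return [[a - p for a, p in zip(ra, rp)] for ra, rp in zip(actual, predicted)]


def nonzero(frame: Frame) -> int:
    """Count pixels that carry information (nonzero residual values)."""
    return sum(1 for row in frame for v in row if v != 0)


def best_shift(prev: Frame, cur: Frame, max_dx: int = 3) -> int:
    """Try small horizontal motions; keep the one leaving the fewest nonzero residuals."""
    return min(
        range(max_dx + 1),
        key=lambda dx: nonzero(residual(cur, shift_right(prev, dx))),
    )


if __name__ == "__main__":
    # Hypothetical 4x8 frame: a bright bar that moves 2 pixels to the right.
    prev = [[0, 0, 200, 200, 0, 0, 0, 0] for _ in range(4)]
    cur = shift_right(prev, 2)

    naive = residual(cur, prev)  # plain frame difference, no motion model
    dx = best_shift(prev, cur)
    compensated = residual(cur, shift_right(prev, dx))

    print("estimated motion:", dx)
    print("nonzero residuals without motion model:", nonzero(naive))
    print("nonzero residuals with motion compensation:", nonzero(compensated))
```

Without a motion model, the moving bar leaves 16 nonzero residual values; once the two-pixel shift is found, the residual is empty. Deep Render's pitch is that a neural network can trace such movement pixel by pixel rather than through a small, fixed search like this one.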

You can read more about the company on its website. Sadly, a technology demo linked on the home page is restricted to commercial partners and investors. On the topic of investors, Deep Render raised $9 million in a Series A funding round back in March. That funding valued the startup at $30 million. Deep Render also netted a $2.7 million grant from the European Innovation Council.

Big Green Competitor

Other tech companies interested in applying AI to video compression include some big hitters like Alphabet's DeepMind, Disney, and Nvidia.

In February, Tom's Hardware GPU editor Jarred Walton pondered Nvidia's newly launched Video Super Resolution (VSR). Naturally, this video technology, which can upscale lower-res videos to 4K, leans on technology similar to the firm's better-known DLSS.


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


bigdragon

This is great! One of the top 2 things I hate about streaming media is the compression. When you've got a big screen, 4K projector, good refresh rate, and serious sound system, compression becomes more apparent. This is why I prefer to buy physical media despite how annoying 4K Blu-rays are to play.

My hope is that this new AI solution improves the quality of media. I worry that a new compression method will be used to further shrink files instead of improving the fidelity of streamed content at the same file size as older compression methods.

salgado18

bigdragon said: This is great! One of the top 2 things I hate about streaming media is the compression. …

I play 1080p content at standard refresh rates, and the limited colors already bug me. Just look at a dark scene with fog, and you can see the few shades of gray in the video. The screen can handle a lot more colors than that, but the compression reduces the number of color values. I think that a higher compression rate will lead to better quality, since today's streaming speed is good: more colors and fewer artifacts at the same (or better) speeds.

This is one use of AI that I think is awesome, and it may indeed lead to a big step forward in tech.
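The banding salgado18 describes comes from quantization: an encoder short on bits rounds pixel values to a coarser set of levels, so a smooth dark gradient collapses into a few visible steps. The sketch below assumes a single uniform quantizer applied directly to 8-bit luma values; real codecs quantize transform coefficients rather than raw pixels, so treat it as a simplified model. The gradient and step sizes are hypothetical.

```python
# Simplified model of banding: uniform quantization of raw 8-bit luma values.
# Assumption: real codecs quantize transform coefficients, not pixels directly.
from typing import Iterable


def quantize(value: int, step: int) -> int:
    """Round a non-negative value to the nearest multiple of step (ties round up)."""
    return (value + step // 2) // step * step


def distinct_shades(values: Iterable[int], step: int) -> int:
    """Count how many different levels survive quantization."""
    return len({quantize(v, step) for v in values})


if __name__ == "__main__":
    # Hypothetical dark, foggy gradient: luma rising from 16 to 47 across 32 pixels.
    gradient = list(range(16, 48))
    for step in (1, 4, 16):  # larger step = coarser levels = fewer bits needed
        print(f"step {step:2d}: {distinct_shades(gradient, step)} distinct shades")
```

With a step of 1, all 32 shades survive; a step of 4 leaves 9, and a step of 16 leaves just 3, which is the handful of gray bands that shows up in a foggy scene.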

Kamen Rider Blade

I wonder how many patents this company will trample to claim their "innovation".

vehekos

New video format standards should include a neural network specification to upscale, interpolate, and guess frames, so that only the parts not hallucinated by the neural network need to be transmitted.

usertests

Make it open source or I don't care. Also, try comparing it to H.265, H.266, AV1, and AV2 by the time it launches.

BinToss

H.264/AVC is how old now? Its Wikipedia article states its specifications were published 19 years ago. Just because something is commonly used does not mean it is, or should be, the de facto standard of the industry. Its successor, H.265/HEVC, has been around for 10 years now, and its royalty-free competition surpassed it ages ago. Directly competing with H.264/AVC were VP7 (2005) and VP8 (2008). In 2013 came VP9 and H.265/HEVC. VP9 is already on its way out as support for its successor, AV1, becomes more prevalent. And, as stated by @usertests, the successors to AV1 and H.265/HEVC have been in development for some time now.

Comparing a next-gen video codec to a last-gen codec is like comparing a brand-new NVMe SSD to any ol' SATA HDD. Though it feels more like comparing an NVMe SSD to an IDE HDD.

bigdragon said: This is great! One of the top 2 things I hate about streaming media is the compression. …

You say that as if Blu-ray can only store lossless multimedia. You don't mean it that way, right? It's just an example of (re-encoded) streamed multimedia's quality being compromised for data savings, as opposed to stored multimedia, which is typically encoded more slowly for better quality and greater storage efficiency, right?

BinToss

vehekos said: New video format standards should include a neural network specification to upscale, interpolate, and guess frames, so that only the parts not hallucinated by the neural network need to be transmitted.

So you're saying these features should be built into the decoder rather than the encoder and its video data output?

That would restrict the decoder to hardware supporting the necessary instructions. That's partly why H.264 is still so prevalent despite being 20 years old: compatibility.

vehekos

BinToss said: So you're saying these features should be built into the decoder rather than the encoder and its video data output?

Both encoder and decoder need the NN.

The encoder uses the NN to get the predicted frame and then encodes only the signed error, ActualFrame - PredictedFrame.

The decoder uses the same NN to predict the frame and then applies the encoded error correction.

Of course, it would need to be suboptimal so it runs on a wide range of hardware, including phones.

Also, all hardware should be designed to run NNs efficiently, which it will need to do anyway, because AI is getting everywhere.

Any hardware, including phones, already has graphics hardware capable of accelerating NNs.

YouTube already encodes video in different formats for different platforms anyway.
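vehekos's predict-and-correct scheme is essentially predictive coding with a predictor that both ends share. Here is a minimal sketch, assuming a hypothetical `predict_next()` that stands in for the neural network (here just linear extrapolation from the two previous frames, not any real codec's or Deep Render's model), with frames reduced to a single row of luma values for brevity. The residual is kept signed: an absolute value would discard whether a pixel got brighter or darker, and the decoder could not rebuild the frame.

```python
# Predictive coding with a predictor shared by encoder and decoder.
# Assumption: predict_next() is a hypothetical stand-in for a neural network.
from typing import List

Frame = List[int]  # one row of luma values keeps the example short


def predict_next(prev2: Frame, prev1: Frame) -> Frame:
    """Hypothetical predictor: assume each pixel keeps changing at the same rate."""
    return [max(0, min(255, 2 * b - a)) for a, b in zip(prev2, prev1)]


def encode(frames: List[Frame]) -> List[Frame]:
    """Send the first two frames as-is, then only signed residuals."""
    out = [frames[0], frames[1]]
    for i in range(2, len(frames)):
        pred = predict_next(frames[i - 2], frames[i - 1])
        out.append([a - p for a, p in zip(frames[i], pred)])
    return out


def decode(stream: List[Frame]) -> List[Frame]:
    """Rebuild frames by running the same predictor and adding each residual."""
    frames = [stream[0], stream[1]]
    for res in stream[2:]:
        pred = predict_next(frames[-2], frames[-1])
        frames.append([p + r for p, r in zip(pred, res)])
    return frames


if __name__ == "__main__":
    # Hypothetical clip: brightness ramps up steadily, then one pixel jumps unexpectedly.
    clip = [
        [10, 20, 30, 40],
        [12, 22, 32, 42],
        [14, 24, 34, 44],
        [16, 26, 90, 46],
    ]
    stream = encode(clip)
    print("residuals:", stream[2:])
    assert decode(stream) == clip  # signed residuals make the round trip exact
```

The steady ramp is predicted perfectly, so its residual is all zeros; only the unexpected jump at the third pixel costs anything to send. Because these residuals are lossless, the decoder's reconstructed frames match the encoder's inputs exactly, which keeps both copies of the predictor in sync; a lossy codec would instead have to run its predictor on reconstructed frames.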