AMD and Arm Form the Backbone of EU's 400 Petaflops "EuroExa" Supercomputer

Exascale with AMD Epyc, Arm Cortex, and Xilinx's FPGAs for 2022/2023 deployment

The European Union, via a €20 million grant through its "EU Horizon 2020" initiative, is looking to deploy a 400 petaflops supercomputer on European soil between 2022 and 2023. The supercomputer is being developed under the EuroExa project umbrella, which aims to rebalance the world's scales when it comes to the availability of supercomputing performance.

The supercomputer is expected to scale up to a maximum theoretical throughput of 400 petaflops while consuming up to 30MW of power, deploying technology from AMD, Arm, and a number of other providers in what amounts to a heterogeneous supercomputer. It's projected that the supercomputer will occupy an area equivalent to 30 shipping containers, about 40 meters from one edge of the system to the other.
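For a sense of scale, those two headline figures imply an energy efficiency target. The quick calculation below is only a back-of-the-envelope sketch: it assumes the 400 petaflops peak and the 30MW ceiling are reached at the same time, which the project has not stated.

```python
# Back-of-the-envelope efficiency from the article's headline figures.
# Assumption: peak throughput and maximum power draw coincide.
PEAK_FLOPS = 400e15      # 400 petaflops
MAX_POWER_WATTS = 30e6   # 30 MW

gflops_per_watt = PEAK_FLOPS / MAX_POWER_WATTS / 1e9
print(f"{gflops_per_watt:.1f} gigaflops per watt")
```

Under that assumption the target works out to roughly 13 gigaflops per watt.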

The EU is in a relatively uncompetitive state when it comes to both silicon manufacturing and investment in high performance computing systems. As of June 2021 and according to the TOP 500 organization, the entire EU counted only 92 total operating supercomputers — the EU lost 11 of those, down from 103, when Brexit occurred. That's compared to 122 supercomputers deployed by the United States, which also has five of the systems in the top 10 supercomputers in the world, and 188 supercomputers for China (two of those in the Top 10).

Japan claimed the supercomputing crown on its own with the Fugaku supercomputer, an Arm-powered machine that is the world's most powerful system currently in operation. The race matters because supercomputers act as a kind of currency of the future: they set a ceiling on how much research can be carried out, and on the quality of that research. For example, supercomputers were essential in the development of vaccines for the still ongoing COVID-19 pandemic.

That is the backdrop for the EU's decision to (re)invest in the supercomputing space, a road on which the upcoming EuroExa will be one of the most important steps. As it currently stands, EuroExa's projected 400 petaflops would slot it right below Fugaku (500 petaflops) and give it enough computational power to top Summit, currently the most powerful supercomputer in the US, by delivering double its 200 petaflops performance.

Part of the €20 million grant goes to basic hardware costs, and the EU aims to deliver a true heterogeneous supercomputer. AMD will be providing its Epyc CPUs, which will form the backbone of calculations proper. Arm cores will be used as data compression elements, allowing for more data to flow through the system in the same available bandwidth, while Maxeler will be providing the FPGA (Field-Programmable Gate Array) IP required for data flow control systems. That IP is essentially a hardware-accelerated way of ensuring that data goes to whatever part of the supercomputer it has to, as fast and efficiently as possible.
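The compression argument comes down to simple arithmetic: if data shrinks before it crosses a link, the link carries more useful data per second. The sketch below illustrates that idea only. The function name and the figures are hypothetical, not EuroExa specifications, and the model ignores the time spent compressing and decompressing.

```python
def effective_bandwidth_gbps(link_gbps: float, compression_ratio: float) -> float:
    """Uncompressed data moved per second over a link carrying compressed data.

    compression_ratio is original size divided by compressed size,
    so 2.0 means the data shrinks to half its size.
    """
    if link_gbps < 0 or compression_ratio <= 0:
        raise ValueError("bandwidth must be non-negative and ratio positive")
    return link_gbps * compression_ratio


# Hypothetical figures for illustration only.
print(effective_bandwidth_gbps(100.0, 2.0))
```

With these made-up numbers, a 100 Gbps link behaves like a 200 Gbps link for the data it carries.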

The actual FPGAs to be employed are Xilinx-made parts, and Maxeler is a close Xilinx collaborator: Maxeler Technologies is an alliance member in the latter's partner program. You may remember that AMD is in the process of acquiring Xilinx for $35 billion, and progress appears to be smooth, with multiple regulatory agencies having already given the green light for the deal. It seems AMD's acquisition of Xilinx is already bearing fruit even before the merger has completed, so it's likely not a coincidence that AMD and Xilinx are both providing hardware to the EuroExa project.

It can sound like it's relatively easy to scale a supercomputer: You just need more hardware that processes data, the energy to power it, the cooling system that keeps it from melting itself, and the space to actually build it. Those things are complex enough themselves, but there are many moving parts in each of the decisions involved, which take into account the availability of natural resources, the quality and capacity of local power delivery (and backup power systems), and so on.

Part of the grant will be used in the development of new immersion cooling systems, for instance, in place of the air cooling commonly used in most personal computers and even supercomputers. The supercomputer hardware will be immersed in a non-conductive liquid (even your regular old kitchen oil can do this, after a fashion) that takes up the heat the hardware outputs and is then moved through a cooling system that removes the excess heat.

The grant not only helps with these hardware deployment costs, but also provides for research and development. That includes the development of new hardware and software solutions, such as UNIMEM, a global shared-memory architecture. One increasingly important obstacle toward scaling supercomputers is the most basic one: data. Supercomputers achieve their immense computing capabilities by having millions of hardware pieces (such as CPUs, GPUs, and FPGAs) all working in parallel, dividing a workload into millions of tiny pieces that can then be separately processed. However, the more moving parts you add, the more important it becomes to be able to get the data through different processing stages, and from hardware piece to hardware piece. That's where bottlenecks can appear.

A supercomputer may be able to make quadrillions of calculations per second, but if data stops flowing, you'll have parts of the computer sitting idle until they receive their next workload, dragging real-world performance below the theoretical maximum. Part of the grant will also be devoted to the improvement and development of an interconnect capable of moving the sheer amount of data through the system. This is where the use of FPGAs with IP from Maxeler comes into play. These are essentially flexible chips whose internal logic can be reprogrammed, even on the fly, to suit the data that needs processing at the time.
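The cost of idle hardware can be put into numbers with a very simple model. The sketch below assumes each processing step computes for a while and then waits for data, with no overlap between the two. That is a deliberate simplification (real systems overlap transfers with computation), and the function name and timings are hypothetical.

```python
def sustained_petaflops(peak_petaflops: float, compute_s: float, wait_s: float) -> float:
    """Sustained throughput when each step computes, then waits for data.

    Assumes computation and data transfer never overlap.
    """
    if compute_s <= 0 or wait_s < 0:
        raise ValueError("compute time must be positive and wait time non-negative")
    utilization = compute_s / (compute_s + wait_s)
    return peak_petaflops * utilization


# Hypothetical timings: 3 seconds of computing for every 1 second of waiting.
print(sustained_petaflops(400.0, compute_s=3.0, wait_s=1.0))
```

In this toy case a 400 petaflops machine that waits on data a quarter of the time sustains only 300 petaflops, which is why the interconnect gets its own share of the grant.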

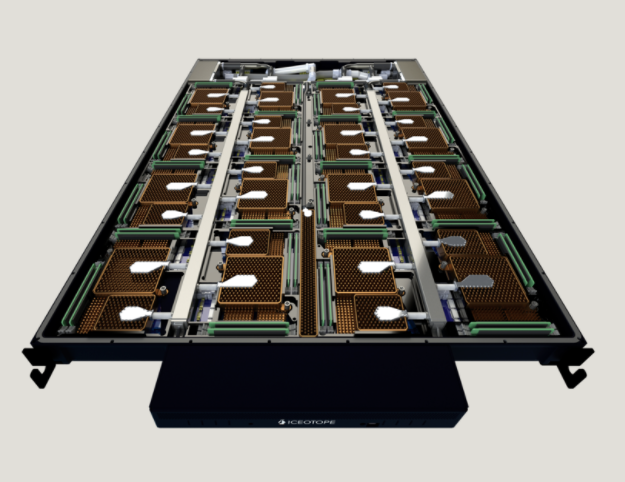

To facilitate the integration of all of these hardware pieces working in concert, the grant has also been used in the development of a Co-design Recommended Daughter Board (CRDB). The CRDB will include all the hardware parts (from AMD, Arm, and Maxeler), and will enable a direct connection to a smart IO controller, unifying storage and communication services with the application host. These CRDB units will then be scaled, with 16 of these CRDB modules deployed across four multi-carriers to create a single Open Compute Unit blade (1OU).
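The blade layout described above can be sketched as a small data structure. The 16 modules and four multi-carriers come from the project description. The even split of four modules per carrier, and every name in the code, are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List

CRDBS_PER_BLADE = 16     # from the project description
CARRIERS_PER_BLADE = 4   # from the project description


@dataclass
class MultiCarrier:
    """Hypothetical container for the CRDB modules on one carrier."""
    crdb_ids: List[int] = field(default_factory=list)


def build_blade() -> List[MultiCarrier]:
    """Spread CRDB modules evenly across carriers (the even split is an assumption)."""
    per_carrier = CRDBS_PER_BLADE // CARRIERS_PER_BLADE
    return [
        MultiCarrier(list(range(c * per_carrier, (c + 1) * per_carrier)))
        for c in range(CARRIERS_PER_BLADE)
    ]


blade = build_blade()
print([len(carrier.crdb_ids) for carrier in blade])
```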

We will see how well the EuroExa project does in the coming year. The intention to develop and deploy an EU-bound supercomputer that can aid in research and development for the EU is a worthy goal. At the same time, it will also contribute to the rest of the world, even if only (and at worst) by proxy. And that sounds pretty good to us.

Francisco Pires is a freelance news writer for Tom's Hardware with a soft side for quantum computing.

-

Nikolaus_E Thank you for the extremely interesting article! If you take a deeper look at the EuroExa site, you will find that the Arm CPUs are in fact Xilinx SoCs with FPGA parts to be programmed by Maxeler, which is BTW a close alliance partner of Xilinx. This could mean that we see here one of the first huge deployments benefiting from the anticipated merger of AMD and Xilinx!

In the documentation this information is somewhat hidden in the background while the role of Arm and Maxeler is highlighted. The reason seems to be that this is a European project and the participants want to point out the importance of European companies, with both Arm and Maxeler being located in England.

-

Soaptrail Did anyone else read this and first think they were talking about RGB and then upon rereading it thought they were talking about CBD?

-

Nikolaus_E The info is in the latest YouTube video in the media section, pretty much at the end around 1h:33m, where AMD Epyc and Xilinx are mentioned, but it's hard to understand how exactly Xilinx is involved …