Intel's Celeron G6900 Alder Lake CPU Struggles In AAA Games

Golden Cove cores aren't magic, and 2C/2T just doesn't cut it in modern PC gaming.

Earlier in the week, we reported how the new Celeron G6900 from Intel's 12th Generation Alder Lake family matched the Core i9-10900K (Comet Lake) in single-threaded performance. In addition, the Geekbench benchmark results highlighted the punch of the new Golden Cove cores. However, it is much better to see and test processor performance in the real world, and Steve at Random Gaming in HD (RGIHD) has obliged with a nine-game test suite.

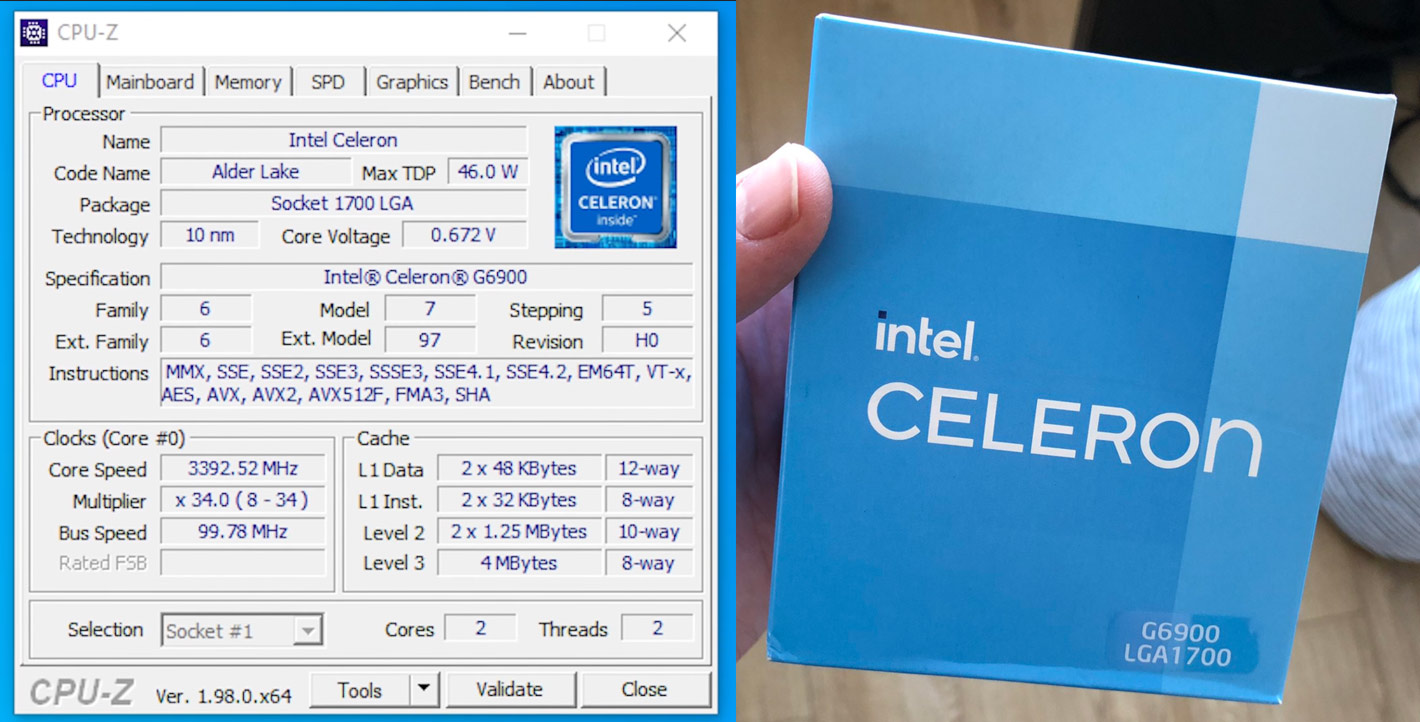

To recap the Intel Celeron G6900's specifications, it has two Golden Cove cores and no Hyper-Threading. It runs at 3.4 GHz, with 4 MB of Smart Cache and 2.5 MB of L2 cache. The processor supports up to DDR4-3200 or DDR5-4800. Intel's UHD Graphics 710 iGPU has up to a 1.3 GHz boost and 16 EUs. This Celeron has a 46 W TDP.

As he was testing the base model in the Alder Lake lineup, which cost £55 (MSRP $42 in the US), Steve saw fit to match it with the cheapest Intel LGA1700 motherboard he could find, the Gigabyte H610M S2H DDR4, for which he paid £80 (about $110). The RAM quantity wasn’t skimped upon for a budget build, with 16 GB (2 x 8 GB DIMMs) of DDR4-3000.

He didn’t say anything about the storage, but the GPU in the system for game testing was the Nvidia T1000, a creator-focused card with very similar specifications to the GTX 1650. In a previous video, RGIHD said he picked up this T1000 as it had better availability/pricing than the gamer/consumer-focused GTX 1650. So it might be the same for you, depending on your region.

The system, as mentioned earlier, was tasked with a decent range of popular games, new and old, using sensible quality settings.

The results showed The Witcher 3 at 1080p high with an average of 43 FPS, marred by lengthy intermittent freezes. These pauses were far too long to qualify as stutters (1% low 0 FPS).

In GTA San Andreas at 1080p high, the system achieved an average of 58 FPS, and the glitches and slowdowns were much more bearable (1% low 15 FPS).


For Cyberpunk 2077 playing at 1080p low in the Badlands, we saw an average of 47 FPS (1% low of 11 FPS). However, this game has a bigger problem, as it will not load save games when this lowly 2C/2T processor is your CPU. It is hard to complain about this “bug” as the Celeron is two cores short of the minimum specced CPU.

The YouTuber ran CS:GO at 1080p low settings on the Dust II map. The average in-game performance was 120 FPS (1% low 42 FPS), so performance was much more respectable.

Steve went on to test Fortnite, Forza Horizon 5 (hangs at loading screen), GTA 5, Far Cry 6 (so slow it is unplayable), and Red Dead Redemption 2, which ran at an average of 34 FPS using 1080p “console settings.”
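To make the "1% low" figures above more concrete, here is a minimal Python sketch of one common way such a number can be derived from per-frame render times. The video does not say which capture tool or exact formula was used, so the function name `fps_summary`, the "average the slowest 1% of frames" definition, and the sample frame times are all assumptions for illustration only.

```python
def fps_summary(frame_times_ms):
    """Return (average FPS, 1% low FPS) for a list of frame times in ms.

    Assumed definition: the 1% low is the frame rate sustained across
    the slowest 1% of frames. Other tools define it differently.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    # Average FPS: frames rendered divided by total elapsed seconds.
    total_ms = sum(frame_times_ms)
    avg_fps = 1000.0 * len(frame_times_ms) / total_ms

    # Slowest 1% of frames (at least one), longest frame times first.
    worst_first = sorted(frame_times_ms, reverse=True)
    n_worst = max(1, len(worst_first) // 100)
    slowest = worst_first[:n_worst]
    low_fps = 1000.0 * n_worst / sum(slowest)

    return avg_fps, low_fps


# Hypothetical capture, not data from the video:
# 198 smooth 20 ms frames plus two 3-second freezes.
frames = [20.0] * 198 + [3000.0] * 2
avg, low = fps_summary(frames)
print(f"average: {avg:.1f} FPS, 1% low: {low:.2f} FPS")
```

Under this assumed definition, a couple of multi-second freezes are enough to drag the 1% low below 1 FPS, which rounds to the 0 FPS reported for The Witcher 3.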

A common observation throughout the testing was that the Celeron held this system back in gaming. If you flick through the video, you will often see CPU utilization at or near 100% while the GPU isn't really under duress (unless it is in Cyberpunk 2077). The system is not balanced for gaming.

To conclude his exciting insight into the G6900, Steve indicated that there wasn't much point in going for this latest-gen Celeron over any previous-gen entry-level Intel processor that is still available due to the higher motherboard costs at this time. However, on an optimistic note, RGIHD intends to look at the new Pentium Gold G7400, which Steve will be testing with the iGPU as well as an appropriate discrete GPU. The Pentium has several upgrades over the Celeron, including its 2C/4T configuration, a faster clock speed of 3.7 GHz, and double the Smart Cache.

Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech; from business and semiconductor design to products approaching the edge of reason.


Neilbob 2C/2T just doesn't cut it. No need to add more than that.

This is one Intel Segmentation that really should go away now.

Alvar "Miles" Udell I blame Microsoft. They allowed dual cores to meet the minimum spec for Windows 11, so this product can exist.

Co BIY The use of a T1000 GPU is interesting - Sounds like something worth reviewing especially if availability and pricing on them are better in some areas.

InvalidError

Neilbob said: 2C/2T just doesn't cut it. No need to add more than that.

4MB of cache doesn't help either. If you take an i9-10900k and disable four cores, the i9 often performs 15-20% better than its i5 counterpart with all clocks locked to the same frequency simply due to the i9 having 20MB of L3 vs 12MB for the i5. There is a similar pattern with Alder Lake too, which the i5-12xxx could really use yet another extra 6MB more L3$.

InvalidError

DavidMV said: It has its place. The Celeron and Pentium are plenty good for several use cases including HTPC and basic office PCs. Why spend $120 when $50 will do just fine for those tasks.

Wouldn't be so sure about that. My mother doesn't do anything fancy on her Ryzen 2500U laptop and still complains it is slow. I tossed an SSD and fresh Windows install to clear out all remaining OEM bloat a few weeks ago. She says it is better but still not great.

That level of super-basic processing power is either for people desperate to throw something together on the smallest budget possible performance be damned or people who want to build a PC for a specific purpose (ex.: kiosks, ATMs, PoS terminals, etc.) and make it miserable for anything else on purpose so people will quit trying to use them for anything else.

jpe1701

InvalidError said: Wouldn't be so sure about that. My mother doesn't do anything fancy on her Ryzen 2500U laptop and still complains it is slow. I tossed an SSD and fresh Windows install to clear out all remaining OEM bloat a few weeks ago. She says it is better but still not great.

That level of super-basic processing power is either for people desperate to throw something together on the smallest budget possible performance be damned or people who want to build a PC for a specific purpose (ex.: kiosks, ATMs, PoS terminals, etc.) and make it miserable for anything else on purpose so people will quit trying to use them for anything else.

Stop being cheap and buy your Mom a better laptop. lol. just kidding, but there are many people that use PCs with celeron and pentium processors and think nothing of the time things take. Things that would drive me mad waiting for, they do everyday and don't even realize it's that slow. They just see the cheap price tag and go with it. Besides they're not bad if all you are doing is web browsing, email, or office work as long as you don't do it all at once. I have a little chuwi laptop with a celeron n4120 quad core without hyperthreading that actually works incredibly well for what I use it for, and totally surprised me on how it performed even with emmc storage.

jeremyj_83

DavidMV said: It has its place. The Celeron and Pentium are plenty good for several use cases including HTPC and basic office PCs. Why spend $120 when $50 will do just fine for those tasks.

It doesn't take much to bog down a 2c/2t CPU anymore even if all you are doing is basic office and browsing. My i5-6400 at work regularly gets bogged down. On average I have Outlook, Slack, OpenVPN, and 8 Firefox tabs open. CPU is usually in the 25-50% range and often goes higher than that.

Neilbob

jpe1701 said: ... there are many people that use PCs with celeron and pentium processors and think nothing of the time things take. Things that would drive me mad waiting for, they do everyday and don't even realize it's that slow. They just see the cheap price tag and go with it. Besides they're not bad if all you are doing is web browsing, email, or office work as long as you don't do it all at once ...

The problem here is that the bulk of systems that end up with these Celerons will be the lowest of low end Dell and HP landfillers, for example, that not only have to run a web browser, email or office application, but also all the other bloated nonsense that these system integrators insist on adding in as a 'bonus'. Not to mention all the various things Windows itself performs in the background at the same time. A simple task like checking an email becomes, at that point, somewhere adrift of efficient.

The users of these systems may not realise things are going slow, but only because they don't know any better. I sometimes have to wonder if many people end up overpaying for a Mac further down the line because they have now formed the belief that Windows PCs are always that slow, regardless.

jpe1701

Neilbob said: The problem here is that the bulk of systems that end up with these Celerons will be the lowest of low end Dell and HP landfillers, for example, that not only have to run a web browser, email or office application, but also all the other bloated nonsense that these system integrators insist on adding in as a 'bonus'. Not to mention all the various things Windows itself performs in the background at the same time. A simple task like checking an email becomes, at that point, somewhere adrift of efficient.

The users of these systems may not realise things are going slow, but only because they don't know any better. I sometimes have to wonder if many people end up overpaying for a Mac further down the line because they have now formed the belief that Windows PCs are always that slow, regardless.

I agree, and they buy things that are already way out of date not knowing any better too. I get by with my little cheap laptop because I am obsessed with keeping track of everything using memory and budget it accordingly. On a personal note about this subject my sister in-law who insisted that she didn't need help picking a laptop just picked up a shiny new laptop from Walmart. haha. It has an Intel dual core celeron n3350. Dual core with no hyperthreading. No windows 11 support. Which comedian was it that used to say "Here's your sign..." ?

watzupken When you consider the amount of background activities running in Windows, I am not surprised at all. In the past, I could still tolerate running Windows 7 on a mechanical hard drive. As we move on to Win 10, I was surprised at how unreasonably slow the same mechanical drive became. To the point where I thought the computer was broken. So with more background activities that are keeping the CPU busy, it will have a negative impact on gaming performance. And this doesn't even have HT, so making it more inefficient.