Intel Habana Gaudi2 Purportedly Outperforms Nvidia's A100

Gaudi2 versus Nvidia's two-year-old compute GPU.

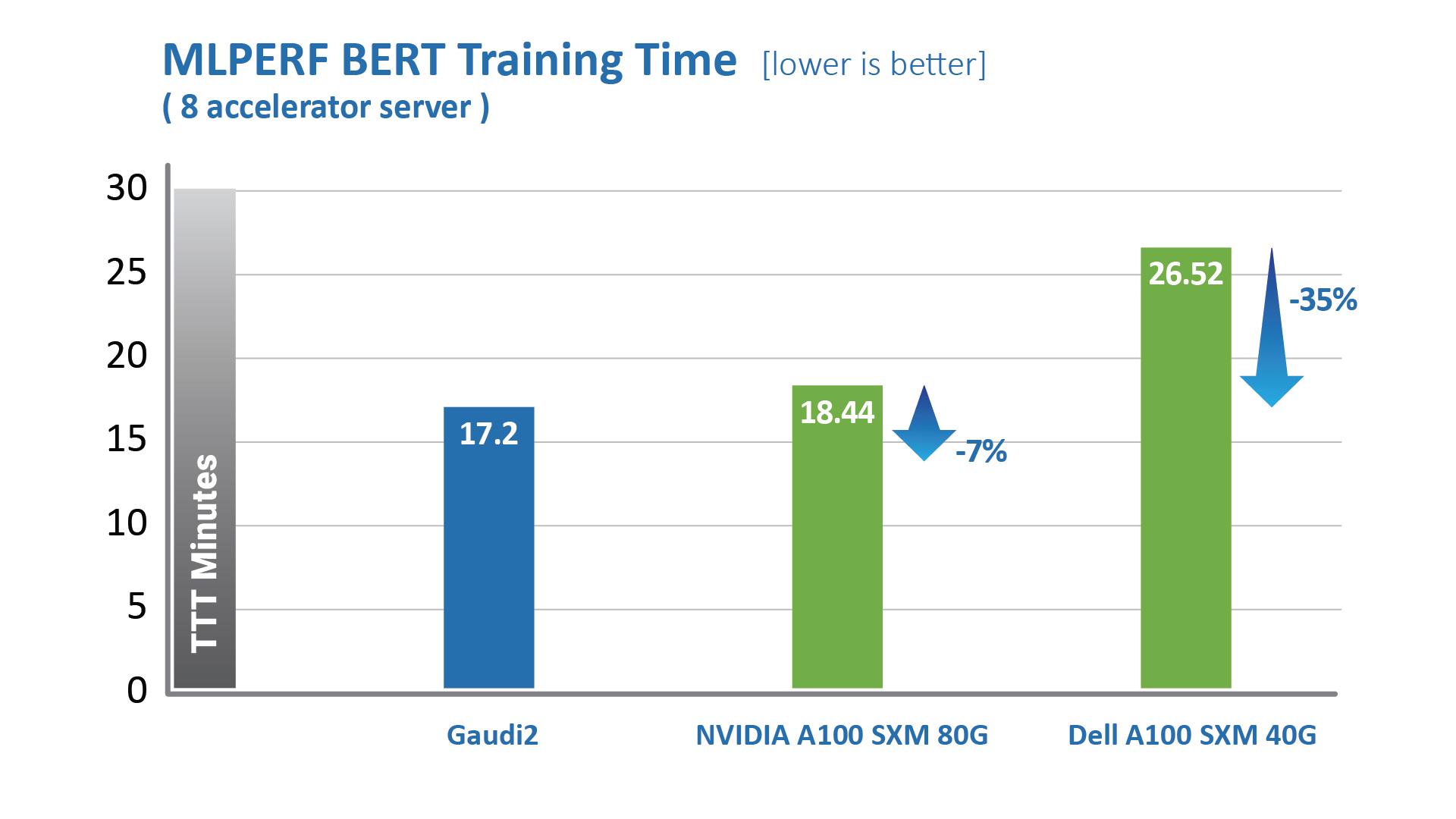

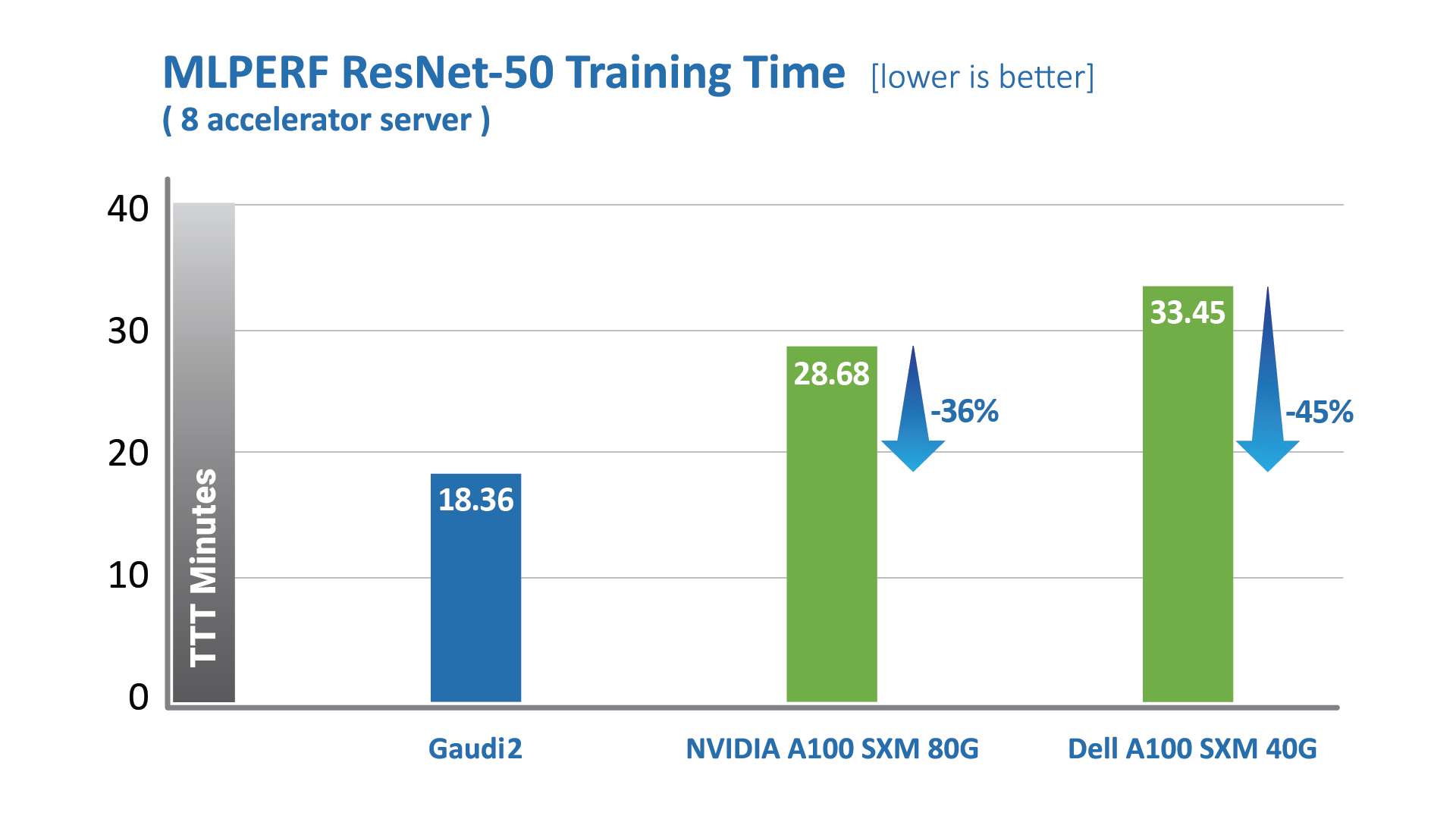

Intel on Wednesday published performance results of its Habana Labs Gaudi2 deep learning processor in MLPerf, a leading DL benchmark. The 2nd Generation Gaudi processor outperforms its main currently available competitor (Nvidia's A100 compute GPU with 80GB of HBM2E memory) by up to 3 times in terms of time-to-train metrics. While Intel's publication does not show how the Gaudi2 performs against Nvidia's H100 GPU, it describes some of Intel's own performance targets for the next generation of chips.

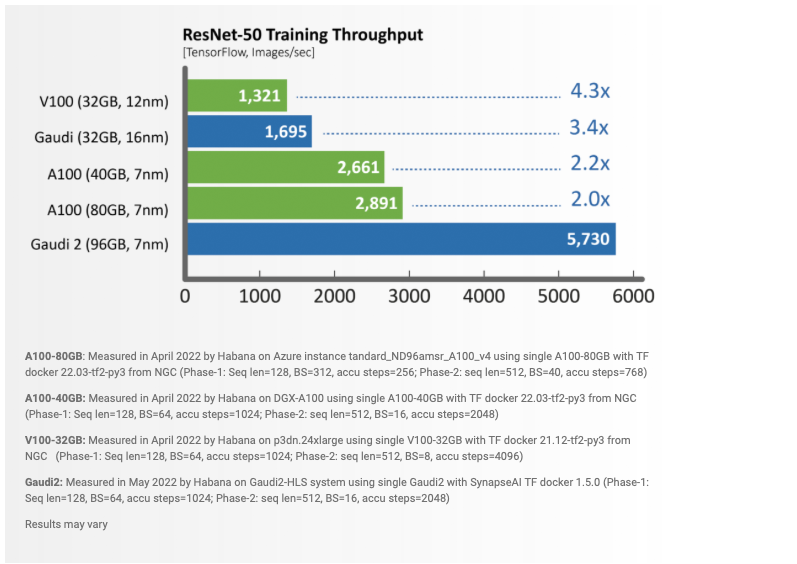

"For ResNet-50 Gaudi 2 shows a dramatic reduction in time-to-train of 36% vs. Nvidia’s submission for A100-80GB and 45% reduction compared to Dell’s submission cited for an A100-40GB 8-accelerator server that was submitted for both ResNet-50 and BERT results," an Intel statement reads.
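A percentage reduction in time-to-train is not the same thing as an "X times faster" figure, so it helps to convert one into the other before comparing the numbers quoted across this article. The sketch below is illustrative arithmetic only: the helper name `speedup_from_reduction` is our own, and the only inputs are the 36% and 45% reductions from Intel's statement.

```python
def speedup_from_reduction(reduction: float) -> float:
    """Convert a fractional time-to-train reduction into a speedup factor.

    If the new time is (1 - reduction) of the old time, the speedup is
    old_time / new_time = 1 / (1 - reduction).
    """
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in the range [0, 1)")
    return 1.0 / (1.0 - reduction)


# Reductions quoted in Intel's statement for ResNet-50.
quoted = [
    ("vs. Nvidia's A100-80GB submission", 0.36),
    ("vs. Dell's A100-40GB submission", 0.45),
]

for label, reduction in quoted:
    factor = speedup_from_reduction(reduction)
    print(f"{label}: {reduction:.0%} less time is about {factor:.2f}x faster")
```

By this arithmetic, a 36% reduction works out to roughly 1.56x and a 45% reduction to roughly 1.82x, which is why the MLPerf percentages look more modest than the 2x and 3x headline figures.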

3X Performance Uplift vs Gaudi

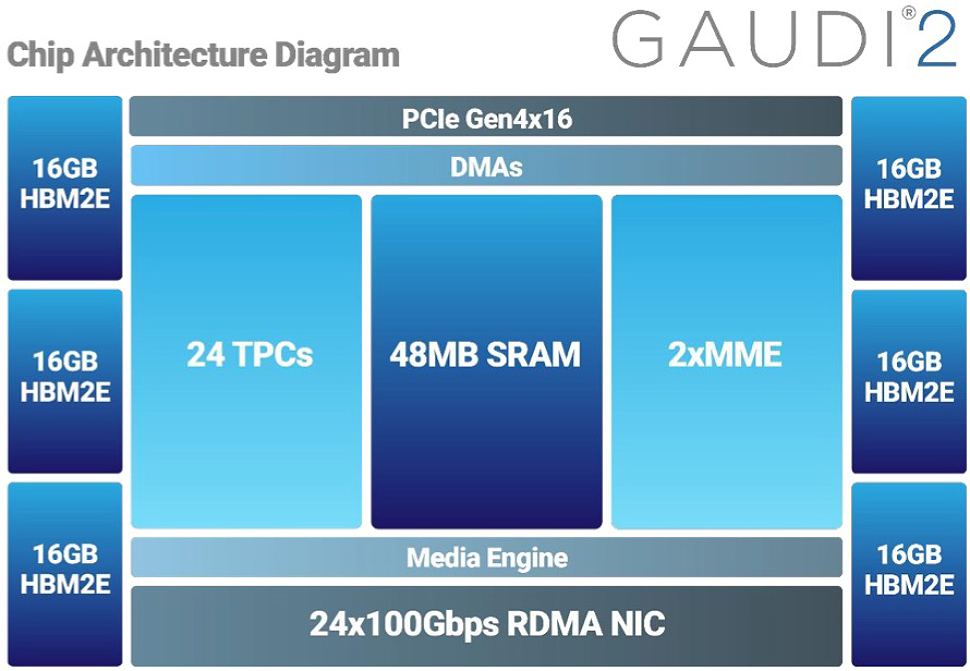

Before jumping right to the performance results of Intel's Habana Gaudi2, let us quickly recap what Gaudi actually is. The Gaudi processor is a heterogeneous system-on-chip that packs a Matrix Multiplication Engine (MME) and a cluster of programmable Tensor Processor Cores (TPCs; each core is essentially a 256-bit VLIW SIMD general-purpose processor) capable of processing data in FP32, TF32, BF16, FP16, and FP8 formats (FP8 is only supported on Gaudi2). In addition, Gaudi has its own media engines to process both video and audio data, something that is crucially important for vision processing.

While the original Habana Gaudi was made using one of TSMC's N16 fabrication processes, the new Gaudi2 is produced on an N7 node, which allowed Intel to boost the number of TPCs from 8 to 24 as well as add support for the FP8 data format. Increasing the number of execution units and memory performance can triple performance compared to that of the original Gaudi, but there may be other sources for the horsepower increase. On the other hand, there may be other limitations (e.g., the thread dispatcher for VLIW cores, memory subsystem bandwidth, software scalability, etc.).

Compute cores of the Gaudi2 processor are equipped with 48MB of SRAM in total, and the memory subsystem features 96GB of HBM2E memory offering a peak bandwidth of 2.45 TB/s (one of the few numbers we can compare against Nvidia's upcoming H100, which offers a memory bandwidth of around 3 TB/s in its SXM form at 700W). To make the chip even more versatile, it has 24 100GbE RDMA over Converged Ethernet (RoCE v2) ports.
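To put the I/O and memory figures above in perspective, here is a small back-of-the-envelope sketch. It uses only the numbers quoted in this article, treats the Ethernet figure as raw line rate per direction (ignoring protocol overhead, which is our simplifying assumption), and takes the H100 figure as the approximate 3 TB/s mentioned above. The constant and function names are our own.

```python
# Figures quoted in the article.
ROCE_PORTS = 24               # 100GbE RoCE ports per Gaudi2
PORT_GBIT_PER_S = 100         # raw line rate per port, per direction
GAUDI2_HBM_TB_PER_S = 2.45    # peak HBM2E bandwidth
H100_SXM_HBM_TB_PER_S = 3.0   # "around 3 TB/s", approximate


def aggregate_ethernet_gbit(ports: int, gbit_per_port: int) -> int:
    """Raw aggregate Ethernet bandwidth in Gbit/s (no protocol overhead)."""
    return ports * gbit_per_port


def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert Gbit/s to GB/s (8 bits per byte)."""
    return gbit_per_s / 8


total_gbit = aggregate_ethernet_gbit(ROCE_PORTS, PORT_GBIT_PER_S)
total_gbyte = gbit_to_gbyte(total_gbit)
hbm_ratio = GAUDI2_HBM_TB_PER_S / H100_SXM_HBM_TB_PER_S

print(f"Aggregate Ethernet: {total_gbit} Gbit/s, about {total_gbyte:.0f} GB/s per direction")
print(f"Gaudi2 HBM bandwidth relative to H100 SXM: about {hbm_ratio:.0%}")
```

Under these assumptions, the 24 ports add up to 2.4 Tbit/s (about 300 GB/s) of raw network bandwidth per chip, and Gaudi2's memory bandwidth comes to a little over 80% of the approximate H100 figure.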

The only things missing from the specifications are FLOPS and power (since these are OAM mezzanine cards, we assume it's 560W tops).

2X Performance Uplift vs A100

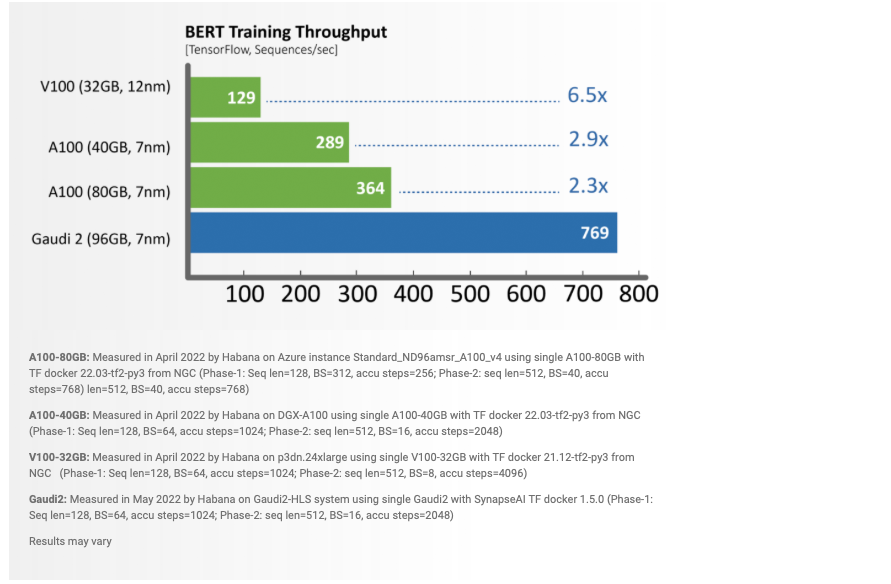

To benchmark its new Gaudi2 deep learning processor, Intel used computer vision (ResNet-50) and natural language processing (BERT) models from the MLPerf benchmark. The company compared Habana's machine with two Xeon Scalable 8380 CPUs and eight Gaudi2 processors (as mezzanine cards) against commercially available servers powered by the 1st Gen Gaudi as well as Nvidia's A100 80GB/40GB-powered servers (with eight GPUs) from Dell and Nvidia. The results are currently featured in MLCommons' database (details, code).

Intel stresses that performance results of Nvidia A100-powered systems were obtained via out-of-box machines and that the performance of Gaudi-powered servers was achieved "without special software manipulations" that differ "from the out-of-the-box commercial software stack available to Habana customers."

"Training throughput was derived with TensorFlow dockers from NGC and from Habana public repositories, employing best parameters for performance as recommended by the vendors (mixed precision used in both)," the description reads. "The training time throughput is a key factor affecting the resulting training time convergence."

While we should keep in mind that we are dealing with performance numbers obtained by Intel's Habana Labs (which should always be taken with a grain of salt), we should also appreciate that Intel published actual (i.e., eventually verifiable) performance numbers for its own Habana Gaudi2 deep learning processor and its competitors.

Indeed, when it comes to the computer vision (ResNet-50) model, Intel's Gaudi2 outperforms an Nvidia A100 system by two times in terms of time-to-train metrics. There are of course somewhat different software settings (which is natural given different architectures), but since we are dealing with one model, Intel claims that this is a fair comparison.

As far as natural language processing is concerned, we are talking about 1.8x to 3.0x performance improvements over A100 machines. A part of this advantage can be attributed to Intel's industry-leading media processing engines incorporated into Gaudi2. But it looks like the internal bandwidth and compute capabilities that come with Gaudi2, along with SynapseAI software advantages (keep in mind the improvements that Intel has made to its PyTorch and TensorFlow support in recent quarters), do a significant part of the job here.

Scaling Out

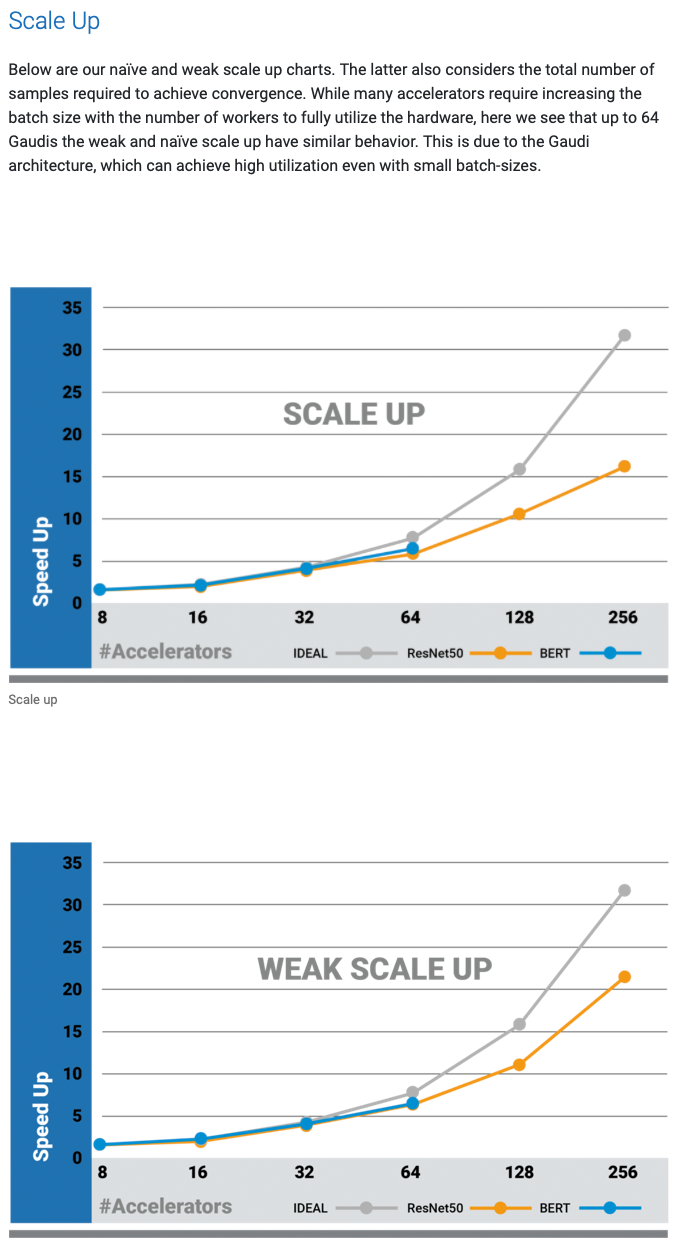

Among the things that Intel submitted to MLCommons' database (which have not been published yet) were performance results of 128- and 256-accelerator configurations demonstrating the parallel scale-out capability of the Gaudi2 platform with the commercial software stack available to Habana customers (bear in mind, this chip has 24 100GbE RDMA ports and it may scale in different ways).

Amdahl's law says that the speedup gained by adding execution units is limited by the portion of a workload that cannot be parallelized; in practice, scaling also depends on many other factors, such as latency as well as software and interconnection speeds. GPU developers have long had to contend with this law. When it comes to scale-out capabilities, Intel positions Gaudi2 ahead of existing AI accelerators given its vast I/O. Meanwhile, Intel does not disclose how AMD- and Nvidia-based solutions perform in the same cases (we should presume that they scale better with tensor ops, shouldn't we?).
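For readers who want to see why scaling to 128 or 256 accelerators is hard, here is a minimal sketch of Amdahl's law in its textbook form: with a parallelizable fraction p of the work and n processing units, the speedup is 1 / ((1 - p) + p / n). The parallel fractions used below are hypothetical values chosen for illustration, not measurements of Gaudi2 or any other product.

```python
def amdahl_speedup(parallel_fraction: float, units: int) -> float:
    """Textbook Amdahl's law: speedup with `units` workers when only
    `parallel_fraction` of the work can be spread across them."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be in [0, 1]")
    if units < 1:
        raise ValueError("units must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / units)


# Hypothetical parallel fractions, for illustration only.
for p in (0.95, 0.99, 0.999):
    row = ", ".join(
        f"{n} units: {amdahl_speedup(p, n):.1f}x" for n in (8, 128, 256)
    )
    print(f"p = {p}: {row}")
```

Even with 95% of the work parallelizable, the speedup can never exceed 20x no matter how many accelerators are added, which is why communication overhead and interconnect bandwidth matter so much for large clusters.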

"Gaudi2 delivers clear leadership training performance as proven by our latest MLPerf results," said Eitan Medina, chief operating officer at Habana Labs. "And we continue to innovate on our deep-learning training architecture and software to deliver the most cost-competitive AI training solutions."

Some Thoughts

Without any doubt, the performance results of Intel's Habana 8-way Gaudi2 96GB-based deep learning machine are nothing short of impressive when compared to Nvidia's 8-way A100 DL system. Beating a competitor by two times on the same process node is spectacular to say the least. But this competitor is two years old.

Yet this is without consideration of power consumption, which we do not know. We can only assume that Intel's Gaudi2 OAM cards are rated at 560W max (as specified) per board. But this is barely a metric for those deploying things like Gaudi2.

Intel's Gaudi2 system partners currently include DDN and Supermicro. Given the nature of DDN, we are talking about an AI-enabled storage solution here (bear in mind, this is an Intel PDF). Supermicro is only mentioned.

Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and latest fab tools to high-tech industry trends.

JayNor: It will be interesting to see a future roadmap for Gaudi. Intel's recent silicon photonics advancements promise multi-Tbps communication from in-package tiles, for example, so you have to wonder if these Gaudi2 boards can be reduced to a package.