Intel Lands US Energy Department Funding for 2kW Cooling Tech

Cooling tech blends immersion, coral structures, and vapor chambers.

Intel today announced that its innovative immersion cooling technology has gained its first big customer: the U.S. Department of Energy (DOE). Intel's cooling tech will be developed and implemented with the backing of $1.71 million in funding over three years. The technology, which is expected to be deployed in the DOE's data centers, was outlined back in April and includes novel techniques capable of cooling processors of up to 2,000 W. Intel is one of 15 organizations that the energy department has entrusted with creating cooling solutions for upcoming data centers.

The hefty funding comes via the COOLERCHIPS program, short for Cooling Operations Optimized for Leaps in Energy, Reliability, and Carbon Hyperefficiency for Information Processing Systems. This program is supported by the DOE's Advanced Research Projects Agency-Energy (ARPA-E). Apparently, the goal is to "enable the continuation of Moore’s Law" by allowing Intel to include more processing cores in its highest-performance processors, with the reassurance that there will be coolers capable of handling 2,000 W chips. For context, today's most powerful data center processors are fast approaching 1,000 W.

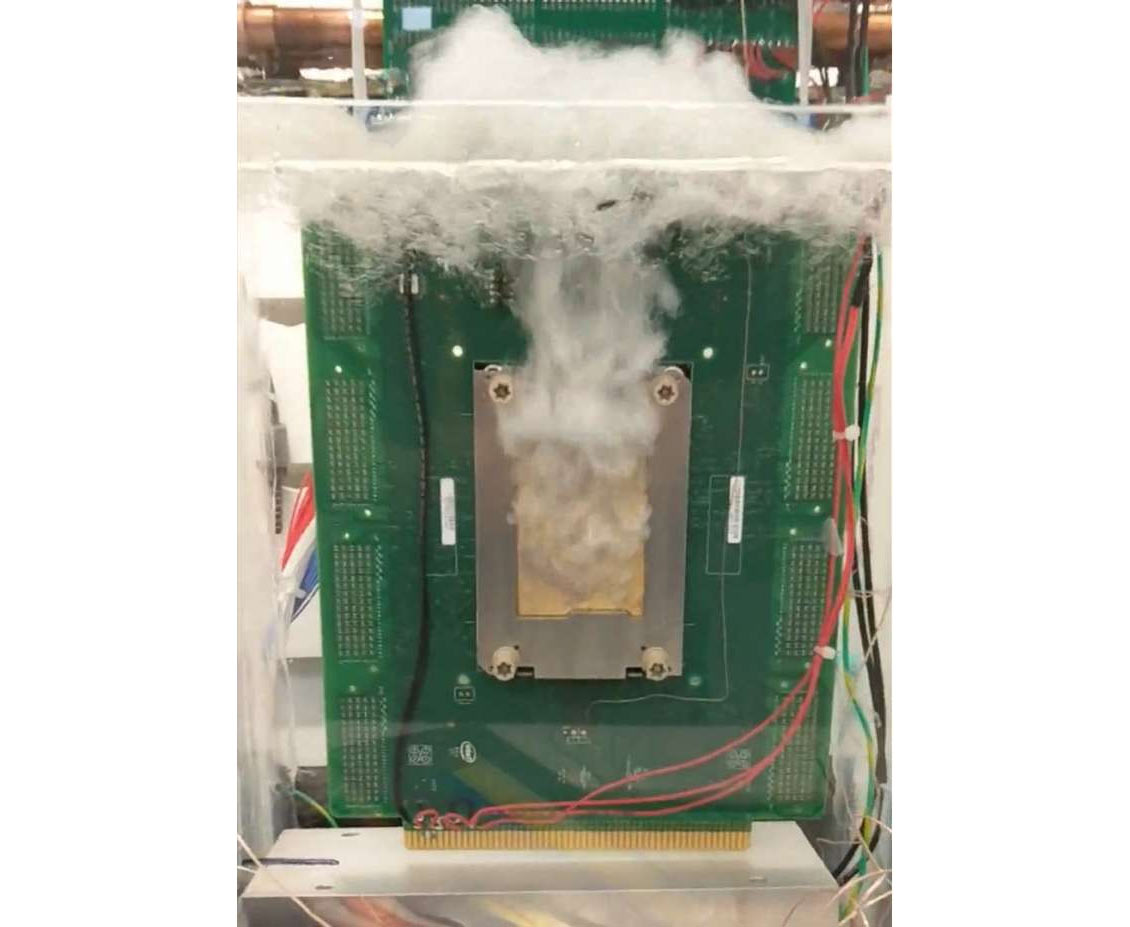

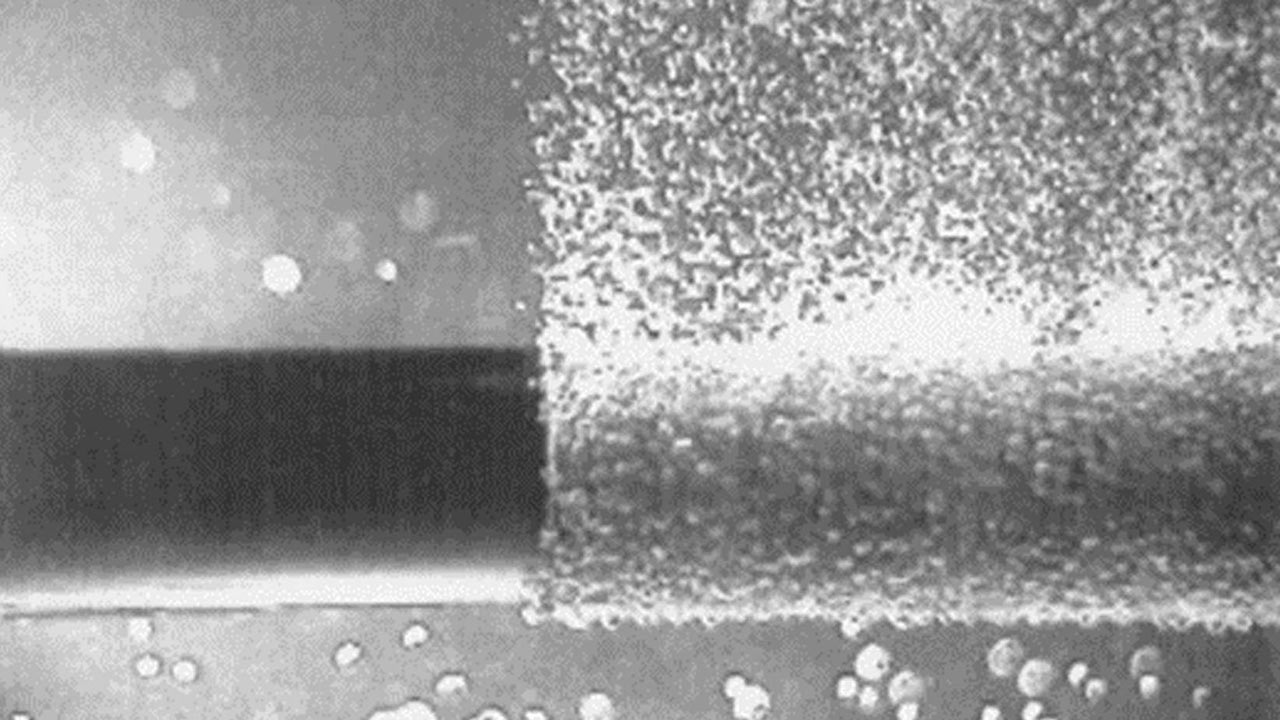

Intel is working with a mix of academic and industry researchers to create its two-phase immersion cooling solution. Two-phase means that, in addition to the electronics being cooled by immersion in liquid, the cooling action is enhanced by the coolant going through a phase change. From Intel's description, we understand that its immersion system is combined with vapor chambers (where the phase change takes place). Moreover, heatsinks within the phase change chambers use a kind of coral-like design for efficient fluid flow, helped by innovative boiling enhancement coatings. The way that the coral-like heatsink design will be generatively created and 3D printed reminds us of cooling technology solutions touted by Diabatix and Amnovis, but Intel's press release doesn't specifically name any industry partners. As for the chamber coatings, they might use nanocoatings, possibly graphene, for the touted "boiling enhancement" properties.

As well as enabling chips approaching or exceeding 2,000 W TDPs, the new immersion cooling tech from Intel is claimed to be more efficient than any existing cooling technologies. This is important, as Intel claims that cooling currently accounts for up to 40% of total data center energy use. Intel says the teams assisting in this project are aiming to improve the two-phase system that is in development. Its ambitious goal is to improve the 0.025 degrees C/watt capability of the existing system by 2.5X or more.
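As a rough illustration of what those numbers imply, the sketch below assumes the °C/watt figure is a thermal resistance, meaning the steady-state temperature rise of the chip above its reference (coolant) temperature per watt of heat dissipated. That reading, and the function and constant names, are our assumptions rather than anything spelled out by Intel.

```python
# Minimal sketch: temperature rise implied by a thermal resistance figure.
# Assumption: the quoted °C/watt values are thermal resistances, so the
# steady-state rise above the reference temperature is dT = R_th * P.

def temperature_rise(thermal_resistance_c_per_w: float, power_w: float) -> float:
    """Return the temperature rise in °C for a given heat load in watts."""
    return thermal_resistance_c_per_w * power_w

CURRENT_R = 0.025            # °C/W, figure quoted for the existing system
TARGET_R = CURRENT_R / 2.5   # about 0.01 °C/W, the stated 2.5X goal

for power in (1000, 2000):
    now = temperature_rise(CURRENT_R, power)
    goal = temperature_rise(TARGET_R, power)
    print(f"{power} W: {now:.0f} °C rise today, {goal:.0f} °C at the target")
```

Under that assumption, a 2,000 W chip would sit about 50 °C above the reference temperature with the existing system and about 20 °C above it at the target, which is why a lower figure counts as the improvement.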


Mark Tyson is a news editor at Tom's Hardware. He enjoys covering the full breadth of PC tech, from business and semiconductor design to products approaching the edge of reason.

-

Integr8d

I’m sure that “Cooling Operations Optimized for Leaps in Energy, Reliability, and Carbon Hyperefficiency for Information Processing Systems” will totally offset the DOUBLING of power draw by these future chips... Total clowns.

-

TerryLaze

Integr8d said: I’m sure that “Cooling Operations Optimized for Leaps in Energy, Reliability, and Carbon Hyperefficiency for Information Processing Systems” will totally offset the DOUBLING of power draw by these future chips... Total clowns.

Either one server will double in power or they will use two servers for the same total power but the number of CPUs/servers will increase either way.

Nobody wants things to be slower, everybody wants everything to be faster and that means more processors.

This tech is supposed to reduce total power usage by decreasing the amount of power needed for the cooling.

“This is important, as Intel claims that cooling currently accounts for up to 40% of total data center energy use. Intel says the teams assisting in this project are aiming to improve the two-phase system that is in development. Its ambitious goal is to improve the 0.025 degrees C/watt capability of the existing system by 2.5X or more.”

-

Integr8d

TerryLaze said: Either one server will double in power or they will use two servers for the same total power but the number of CPUs/servers will increase either way. Nobody wants things to be slower, everybody wants everything to be faster and that means more processors. This tech is supposed to reduce total power usage by decreasing the amount of power needed for the cooling.

Servers aren’t going to double in power. They’re going to double in power draw.

“Nobody wants things to be slower, everybody wants everything to be faster and that means more processors.”

I don’t have a response for that that won’t get me banned. But in my more patient years, I’d have argued for the typical increases in IPC, memory access, smarter caches, etc.

-

TerryLaze

Integr8d said: But in my more patient years, I’d have argued for the typical increases in IPC, memory access, smarter caches, etc.

You think that those don't increase power draw?!

Also they wouldn't be enough on their own, the industry will always need more actual cores alongside whatever else.

-

InvalidError

TerryLaze said: Also they wouldn't be enough on their own, the industry will always need more actual cores alongside whatever else.

Almost everything eventually reaches a "good enough" or "practical limit" point. We are seeing that in the consumer space with the on-going slowdown in PC sales and the start of decline in mobile device sales. Datacenter will likely follow a decade or two behind.

-

TerryLaze

InvalidError said: Datacenter will likely follow a decade or two behind.

Because there will be fewer people every year and they will be wanting their videos (whatever) provided much slower and in always lower resolutions?

If we ever reach a point where the population will stay the same and every single person will already have a device and no newer higher resolution comes out and and and...

I don't see them slowing down for a long while unless they hit some physical limit.

-

InvalidError

TerryLaze said: If we ever reach a point where the population will stay the same and every single person will already have a device and no newer higher resolution comes out and and and... I don't see them slowing down for a long while unless they hit some physical limit.

What higher resolutions? From a comfortable sitting distance away from the screen, there isn't that much of a quality increase in motion video going from 1080p to 4k. Only a tiny fraction of the population will ever bother with more than UHD, the practical limit of human vision has been passed already.

-

TerryLaze

InvalidError said: What higher resolutions? From a comfortable sitting distance away from the screen, there isn't that much of a quality increase in motion video going form 1080p to 4k. Only a tiny fraction of the population will ever bother with more than UHD, the practical limit of human vision has been passed already.

Just wait until we get the total recall my whole wall is a TV screen future...

8k is just the beginning.

This will be in our living rooms in the future, we might not live that long but then again prices drop really fast all the time.

https://hollywoodnorthnews.net/2022/11/24/volume-led-stage-comes-to-regina/

-

InvalidError

TerryLaze said: Just wait until we get the total recall my whole wall is a TV screen future... 8k is just the beginning.

If you sit far enough to comfortably be able to see the whole image, 4k is about as good as the human eye can resolve no matter how large the screen is since you end up having to stand/sit increasingly further away from the screen linearly with screen size, especially once you throw motion at it where you cannot fixate minute pixel details before they get replaced by something else.

-

prestonf

Integr8d said: I’m sure that “Cooling Operations Optimized for Leaps in Energy, Reliability, and Carbon Hyperefficiency for Information Processing Systems” will totally offset the DOUBLING of power draw by these future chips... Total clowns.

The press release is ridiculous, it's not about total power at all, it's about power density. And this won't do anything to reduce the cooling costs of a datacenter, which would be mostly the air conditioning for the building.

Also this makes no sense:

from 0.025 °C/watt to less than 0.01 °C/watt, or 2.5 times (or more) improvement in efficiency.

Does that mean that the efficiency right now costs 1000 watts to get a 25 °C delta, and they will "improve" it by using 1000 watts to get a 10 °C delta?