Intel Details Inner Workings of XeSS

DLSS Puts on a Blue Shirt

Intel released an explainer video for its upcoming XeSS AI upscaling technology and showcased how the tech works on its Arc Alchemist GPUs, which are nearly ready for public release. It used the fastest model, the Arc A770, for the demonstrations, though it's difficult to say how the performance will stack up against the best graphics cards based on the limited performance details shown.

If you're at all familiar with Nvidia's DLSS, which has been around for four years now in various incarnations, the video should spark a keen sense of déjà vu. Tom Petersen, who formerly worked for Nvidia and gave some of the old DLSS presentations, walks through the XeSS fundamentals. Long story short, XeSS sounds very much like a mirrored version of Nvidia's DLSS, except it's designed to work with Intel's deep learning XMX cores rather than Nvidia's tensor cores. The tech can also work with other GPUs, however, using DP4a mode, which might make it an interesting alternative to AMD's FSR 2.0 upscaler.

In the demos shown by Intel, XeSS looked to be working well. Of course, it's difficult to say for sure when the source video is a 1080p compressed version of the actual content, but we'll save detailed image quality comparisons for another time. Performance gains look to be similar to what we've seen with DLSS, with over a 100% frame rate boost in some situations when using XeSS Performance mode.

How It Works

If you already know how DLSS works, Intel's solution is largely the same, but with some minor tweaks. XeSS is an AI accelerated resolution upscaling algorithm, designed to increase frame rates in video games.

It starts with training, the first step in most deep learning algorithms. The AI network takes lower resolution sample frames from a game and processes them, generating what should be upscaled output images. Then the network compares the results against the desired target image and backpropagates weight adjustments to try to correct any "errors." At first, the resulting images won't look very good, but the AI algorithm slowly learns from its mistakes. After thousands (or more) of training images, the network eventually converges toward ideal weights that will "magically" generate the desired results.
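To make that training loop concrete, here is a deliberately tiny sketch. It is not Intel's network: the "upscaler" is a hypothetical two-weight model that predicts one missing sample between two low-resolution neighbors, and the function name, learning rate, and step count are all assumptions chosen for illustration. The loop has the same shape as the one described above: predict, compare against the target, and nudge the weights against the error.

```python
import random

def train_toy_upscaler(steps=5000, lr=0.1, seed=0):
    """Learn weights that predict the sample between two low-res neighbors."""
    rng = random.Random(seed)
    weights = [0.0, 0.0]  # start with an untrained "network"
    for _ in range(steps):
        # Low-resolution input: two neighboring samples.
        a, b = rng.random(), rng.random()
        # High-resolution target: the true value between them (toy ground truth).
        target = 0.5 * (a + b)
        # Forward pass: the model's current guess.
        prediction = weights[0] * a + weights[1] * b
        # Compare against the target image.
        error = prediction - target
        # Backward pass: gradient of 0.5 * error**2 with respect to each weight.
        weights[0] -= lr * error * a
        weights[1] -= lr * error * b
    return weights

if __name__ == "__main__":
    w0, w1 = train_toy_upscaler()
    print(f"learned weights: {w0:.4f}, {w1:.4f}")
```

In this toy case the weights settle near 0.5 each, which is plain linear interpolation. A real upscaling network has vastly more weights and learns far richer behavior, but the predict, compare, adjust cycle is the same idea.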

Once the algorithm has been fully trained, using samples from lots of different games, it can in theory take any image input from any video game and upscale it almost perfectly. As with DLSS (and FSR 2.0), the XeSS algorithm also takes on the role of anti-aliasing and replaces classical solutions like temporal AA.

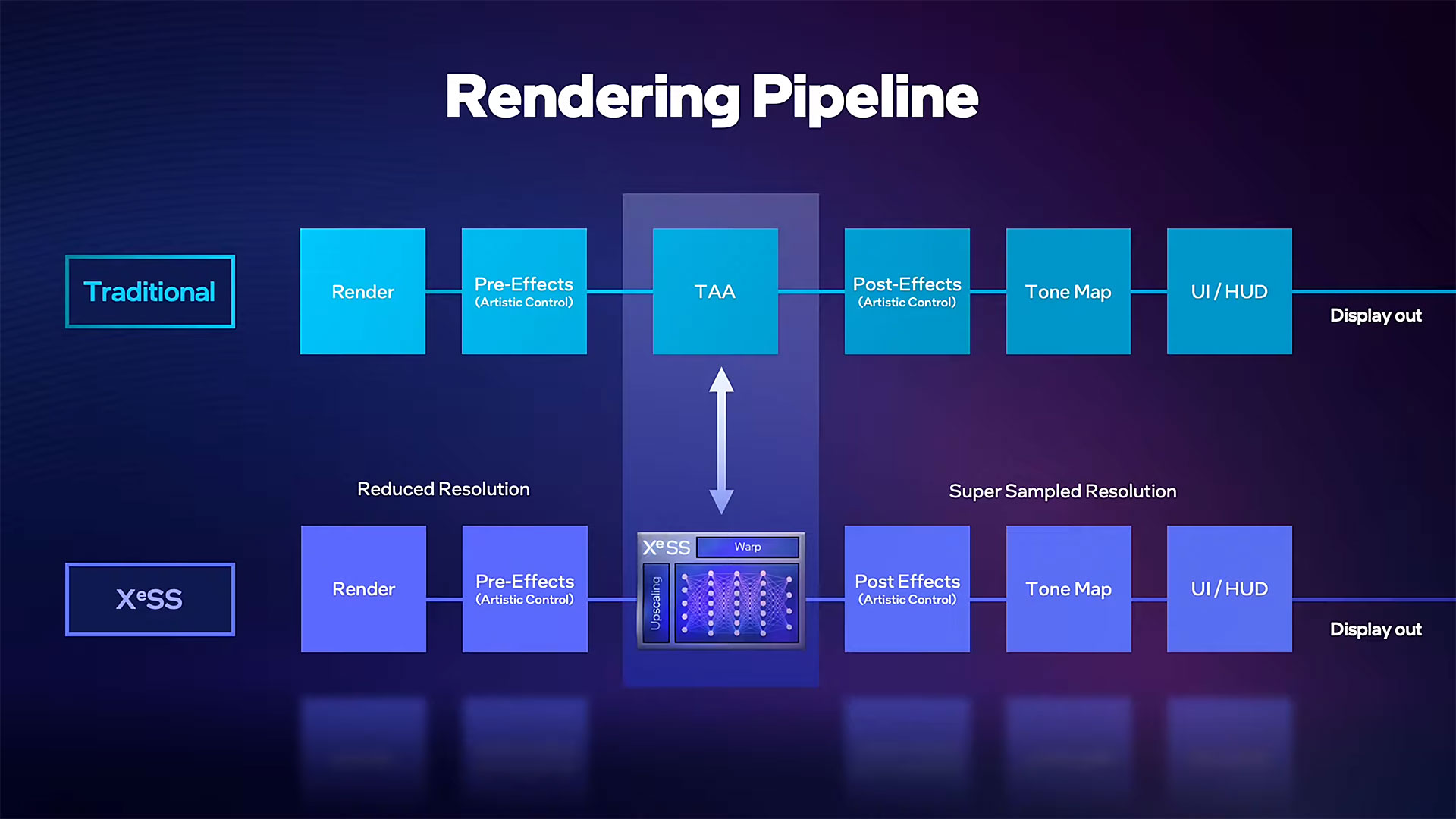

Again, nothing so far is particularly noteworthy. DLSS and FSR 2.0 and even standard temporal AA algorithms have a lot of the same core functionality — minus the AI stuff for FSR and TAA. Games will integrate XeSS into their rendering pipeline, typically after the main render and initial effects are done but before post processing effects and GUI/HUD elements are drawn. That way the UI stays sharp while the difficult task of 3D rendering gets to run at a lower resolution.
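The ordering described above can be sketched as a simple list of stages and the resolution each one runs at. The stage names and the 2x per-axis scale factor in the usage example are hypothetical, picked only to illustrate the idea; they are not taken from Intel's SDK.

```python
def frame_pipeline(output_res, scale):
    """Return (stage, resolution) pairs in the order they run.

    output_res: (width, height) of the final image.
    scale: per-axis upscaling factor (an assumed value, not an XeSS preset).
    """
    out_w, out_h = output_res
    render_res = (round(out_w / scale), round(out_h / scale))
    return [
        ("render_scene", render_res),        # expensive 3D work at low resolution
        ("initial_effects", render_res),     # effects applied before upscaling
        ("upscale_and_antialias", output_res),  # where the upscaler slots in
        ("post_processing", output_res),     # runs on the upscaled image
        ("draw_ui", output_res),             # HUD/GUI drawn last, at full resolution
    ]

if __name__ == "__main__":
    for stage, res in frame_pipeline((2560, 1440), scale=2.0):
        print(f"{stage:24s} {res[0]}x{res[1]}")
```

Because the UI is drawn after the upscale step, text and HUD elements never pass through the upscaler at all.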

XeSS operates on Intel's Arc XMX cores, but it can also run on other GPUs in a slightly different mode. DP4a instructions are basically four INT8 (8-bit integer) calculations done using a single 32-bit register, what you'd typically have access to via a GPU shader core. XMX cores meanwhile natively support INT8 and can operate on 128 values at once.
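As a rough illustration of what a DP4a-style instruction computes, the sketch below packs four signed 8-bit values into one 32-bit word and then performs a four-element dot product with accumulation. This is a software emulation for explanation only; the helper names are made up, and it does not model the 32-bit wrap-around of a real hardware accumulator.

```python
import struct

def pack_int8x4(values):
    """Pack four signed 8-bit integers into a single 32-bit word."""
    return struct.unpack("<I", struct.pack("<4b", *values))[0]

def unpack_int8x4(word):
    """Split a 32-bit word back into four signed 8-bit integers."""
    return struct.unpack("<4b", struct.pack("<I", word))

def dp4a(a_word, b_word, accumulator):
    """Dot product of two packed INT8x4 words, added to an accumulator."""
    a = unpack_int8x4(a_word)
    b = unpack_int8x4(b_word)
    return accumulator + sum(x * y for x, y in zip(a, b))

if __name__ == "__main__":
    a = pack_int8x4([1, -2, 3, -4])
    b = pack_int8x4([5, 6, -7, 8])
    # 1*5 + (-2)*6 + 3*(-7) + (-4)*8 = -60, plus the accumulator of 10
    print(dp4a(a, b, 10))  # -50
```

The point is that one register-sized operand carries four INT8 multiplies at once, which is why ordinary shader cores can run a quantized network at a useful rate.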

That might seem very lopsided, but as an example an Arc A380 has 1024 shader cores that could each do four INT8 operations at the same time. Alternatively, the A380 has 128 XMX units that can each do 128 INT8 operations. That makes the XMX throughput four times higher than the DP4a throughput, but apparently DP4a mode should still be sufficient for some level of XeSS goodness.
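The arithmetic behind that 4x figure is easy to check. The numbers below are the ones quoted in the article for the Arc A380; the comparison is per clock and ignores clock speeds, memory bandwidth, and scheduling, so treat it as a back-of-the-envelope estimate rather than a performance prediction.

```python
# Figures as quoted in the article for the Arc A380.
SHADER_CORES = 1024
INT8_OPS_PER_SHADER_CORE = 4   # one DP4a instruction handles four INT8 values
XMX_UNITS = 128
INT8_OPS_PER_XMX_UNIT = 128

dp4a_ops_per_clock = SHADER_CORES * INT8_OPS_PER_SHADER_CORE
xmx_ops_per_clock = XMX_UNITS * INT8_OPS_PER_XMX_UNIT
ratio = xmx_ops_per_clock / dp4a_ops_per_clock

print(dp4a_ops_per_clock, xmx_ops_per_clock, ratio)  # 4096 16384 4.0
```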

Note that DP4a appears to use a different trained network, one that's perhaps less computationally intensive. How that will translate into real-world performance and image quality remains to be seen, and it sounds like game developers will need to explicitly include support for both XMX and DP4a modes if they want to support non-Arc GPUs.

Intel XeSS Performance Expectations

Intel showed off a couple of gaming tests running XeSS, including a development build of Shadow of the Tomb Raider and a new 3DMark benchmark specifically made for XeSS. It also showed brief clips of Arcadegeddon, Redout II, Ghostwire Tokyo, The DioField Chronicle, Chivalry II, Naraka Bladepoint, and Super People running with and without XeSS at the end of the video. Note that Intel has never shown XeSS running in DP4a mode, which is something we still want to see.

In Shadow of the Tomb Raider, running on an Arc A770 graphics card at 2560x1440 with nearly maxed out settings, including ray traced shadows, XeSS provided anywhere from about a 25% performance boost on the Ultra Quality setting up to a boost of more than 100% using the Performance setting. The Quality and Balanced settings go for a middle ground, and improved performance by around 50% and 75%, respectively.

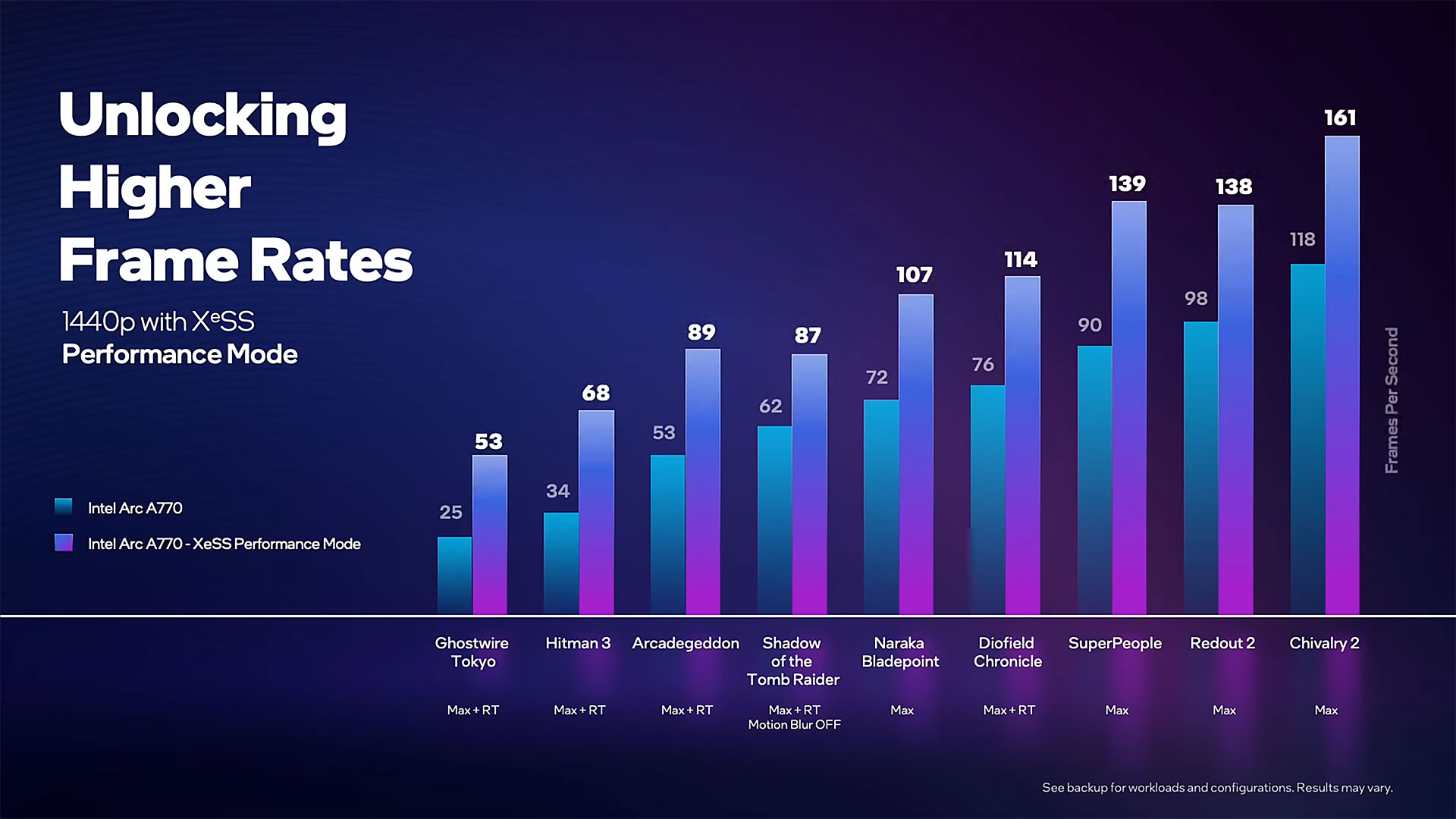

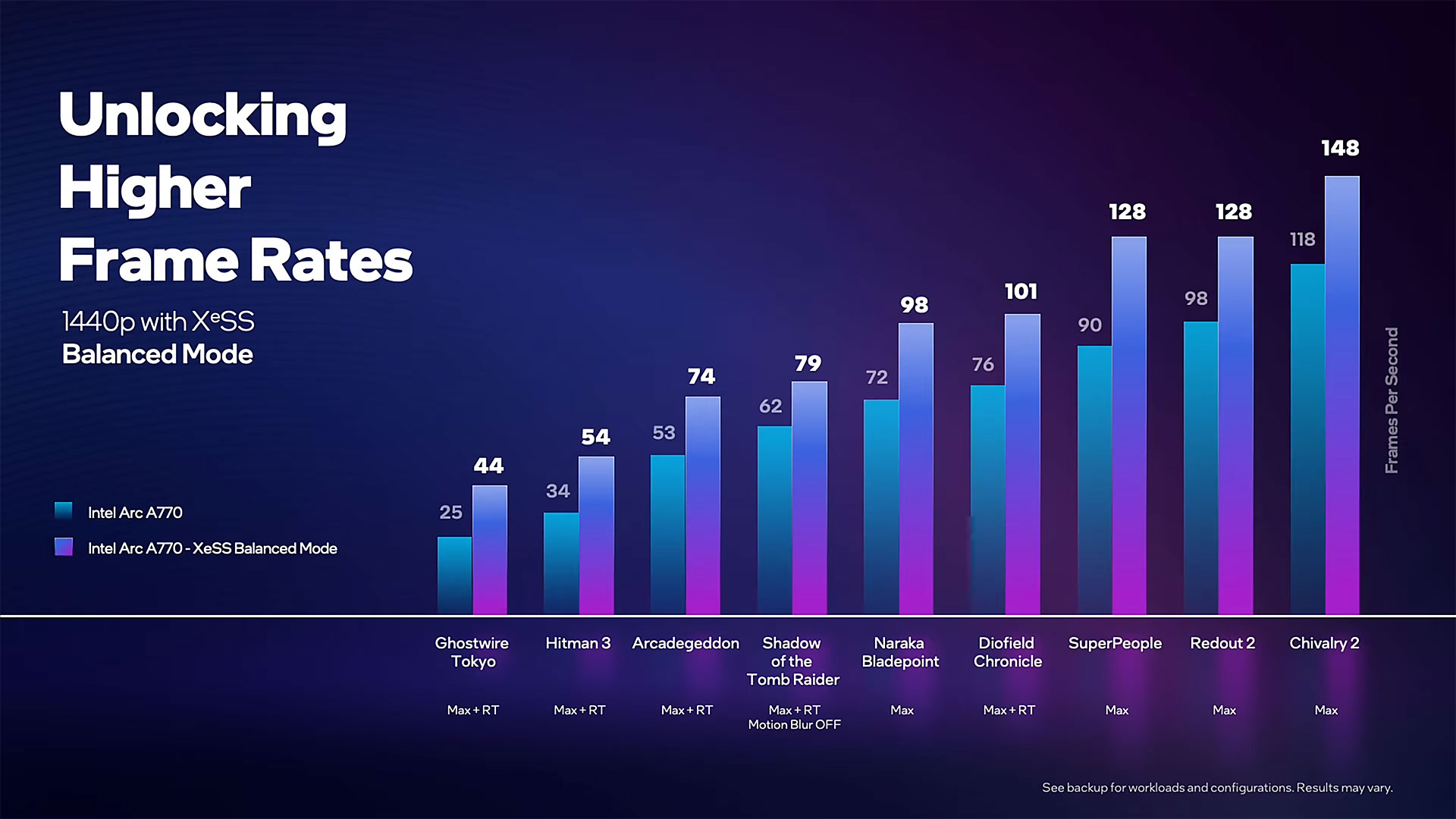

Those gains will naturally vary by the game engine, settings, and base performance. The more demanding the game and the lower the framerate, the more beneficial XeSS will likely be. Using the Performance mode, Intel showed typical gains of anywhere from 40% to 110% at 1440p, while the Balanced mode delivered improvements ranging from about 25% to as much as 75%.

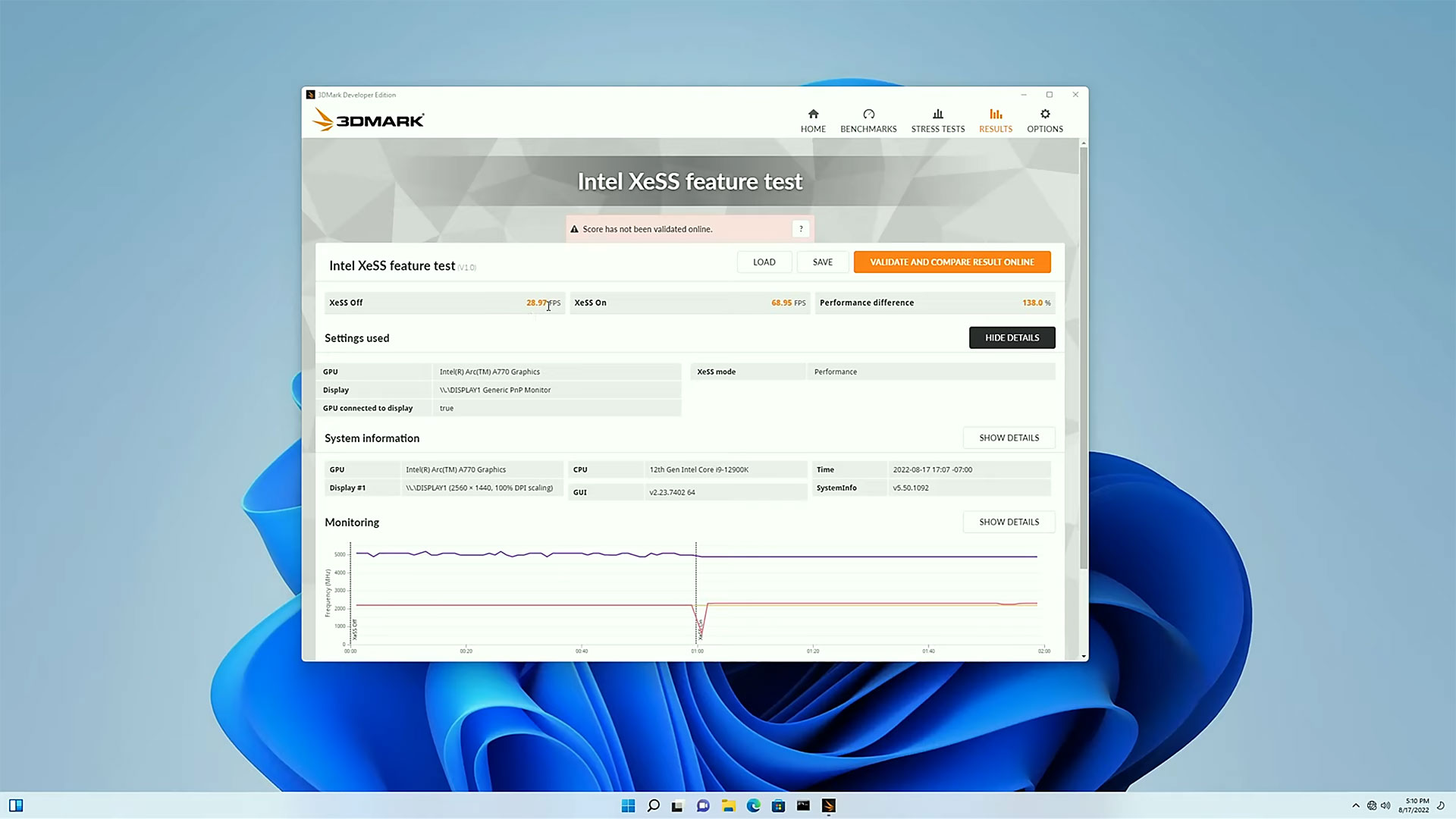

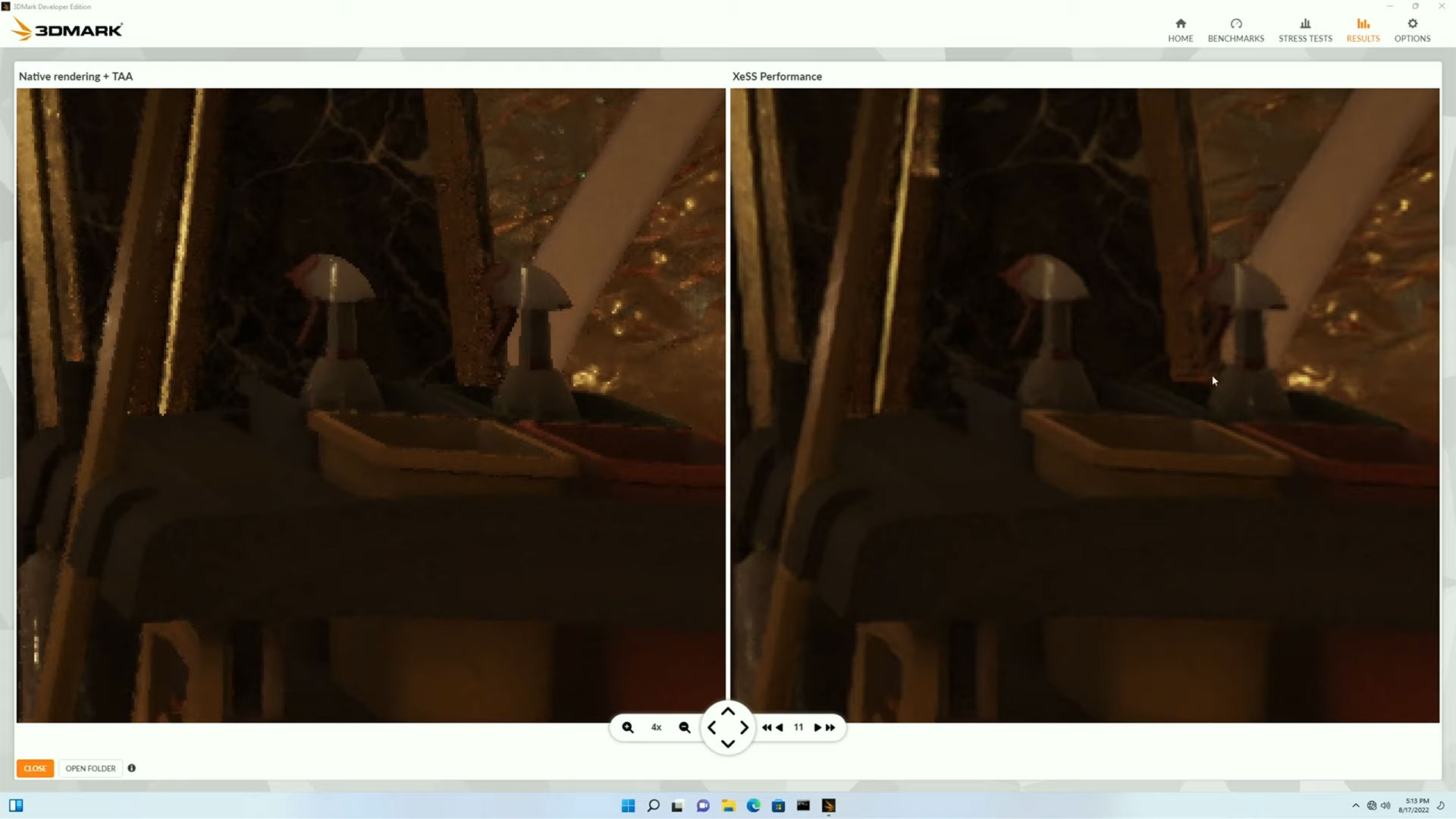

3DMark will also be adding an Intel XeSS Feature Test for its Advanced edition, which includes a benchmark mode as well as a Frame Inspector that allows users to look at images of the benchmark, zooming in to check the visual quality differences. It looks a lot easier to use than Nvidia's ICAT utility, though of course it's also limited to providing frames from a single synthetic benchmark.

Because 3DMark uses its demanding Port Royal ray tracing scene for the XeSS Feature Test, performance gains can be particularly impressive. At 1440p with XeSS in performance mode, the benchmark saw a 145% improvement in FPS, 109% boost with Balanced mode, 81% using Quality mode, and 49% with Ultra Quality mode.
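Percentage gains can be easier to reason about as frame rate multipliers and frame time savings. The sketch below converts the 3DMark figures quoted above; the 40 fps baseline is a made-up number used only to show the conversion, not a result from Intel's video.

```python
# FPS gains (in percent) quoted for the 3DMark XeSS Feature Test at 1440p.
GAINS_PERCENT = {
    "Ultra Quality": 49,
    "Quality": 81,
    "Balanced": 109,
    "Performance": 145,
}

def fps_multiplier(gain_percent):
    """A +145% gain means 2.45x the original frame rate."""
    return 1 + gain_percent / 100

def frame_time_reduction_percent(gain_percent):
    """How much shorter each frame takes to render, in percent."""
    return (1 - 1 / fps_multiplier(gain_percent)) * 100

if __name__ == "__main__":
    baseline_fps = 40.0  # hypothetical native frame rate, for illustration only
    for mode, gain in GAINS_PERCENT.items():
        fps = baseline_fps * fps_multiplier(gain)
        saved = frame_time_reduction_percent(gain)
        print(f"{mode:13s} {fps:6.1f} fps, frame time down {saved:.1f}%")
```

Note that a 100% gain in frame rate corresponds to a 50% cut in frame time, so the jump from Balanced to Performance saves less time per frame than the raw percentages might suggest.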

The Frame Inspector also showed some good results, with XeSS reconstructing the image very well, to the point where Intel's Tom Petersen argues the XeSS image actually looks better than native with TAA. Of course, you need to take that with a grain of salt, and images from a single canned sequence likely won't fully represent real-world gaming experiences.

XeSS SDK and More Than 20 Games in the Works

Intel will be providing an SDK for implementing XeSS in a game engine. The interface and requirements will be very similar to TAA implementations, as well as DLSS and FSR 2.0, so it should be a relatively easy addition for any modern graphics engine.

Like TAA, FSR 2.0, and DLSS, XeSS needs motion vectors along with the current frame, and it keeps its own collection of previous frames. These are all fed into the AI network to ultimately generate a good result. XeSS also uses camera jitter to help eliminate aliasing in the scene. (The jitter is on the sub-pixel level, so it won't be directly visible to the end user.)
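For a sense of what sub-pixel camera jitter looks like in practice, the sketch below generates per-frame offsets from a Halton sequence, a low-discrepancy pattern commonly used in TAA-style renderers. Whether a given XeSS integration uses exactly this pattern is an assumption here; the function names and the choice of bases 2 and 3 are illustrative.

```python
def halton(index, base):
    """Return element `index` (1-based) of the Halton sequence in [0, 1)."""
    result = 0.0
    fraction = 1.0
    i = index
    while i > 0:
        fraction /= base
        result += fraction * (i % base)
        i //= base
    return result

def jitter_offsets(count):
    """Sub-pixel (x, y) offsets in [-0.5, 0.5), one per frame."""
    return [
        (halton(i, 2) - 0.5, halton(i, 3) - 0.5)
        for i in range(1, count + 1)
    ]

if __name__ == "__main__":
    for frame, (dx, dy) in enumerate(jitter_offsets(8)):
        print(f"frame {frame}: dx={dx:+.3f} dy={dy:+.3f}")
```

Each frame samples the scene from a slightly different position within the pixel, so over several frames the accumulated history covers detail that a single low-resolution frame would miss.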

At present, Intel has more than 20 games with XeSS planned for release in the coming months. Some of those may fall through the cracks or get delayed, but it's at least a decent start for the newcomer. At the same time, AMD just announced another eight games that have recently added or will soon be adding FSR 2.0, and Nvidia has well over 100 games shipping with DLSS 2.0 or later. How many game developers will be willing to add all three alternatives, which would provide gamers with the choice of the best algorithm? We suspect a lot of games will only support one or two of the possible upscaling options.

XeSS will officially launch when Intel releases its Arc Alchemist GPUs worldwide at some point in the presumably near future. The Arc A380 has effectively launched at this point, and Intel has now teased the A750 and A770. Hopefully, we'll get to experience XeSS, in both XMX and DP4a modes, in the not too distant future. At present, uptake remains very far behind the AMD and Nvidia competition.


Aaron Klotz is a contributing writer for Tom’s Hardware, covering news related to computer hardware such as CPUs and graphics cards.

peterf28: I don't like where this is going. It introduces artifacts and blurriness. I want raw pixel texel performance.

InvalidError:

peterf28 said: I don't like where this is going. It introduces artifacts and blurriness. I want raw pixel texel performance.

While more raw pixel-pushing power may be nice, there is a point where scaling output quality any further using brute force approaches become prohibitively expensive. AI-upscaling is a somewhat of work-around for RT's computational cost: cheaper to develop and implement better upscaling than put in enough raw RT power to render in an all-RT pipeline at full native and de-aliased resolution.

Fanboy2354: how come everybody is still hollerin' bout the need for Intel to ditch its ARC graphics after losing 3.5 billion on it already?

peterf28:

InvalidError said: While more raw pixel-pushing power may be nice, there is a point where scaling output quality any further using brute force approaches become prohibitively expensive. AI-upscaling is a somewhat of work-around for RT's computational cost: cheaper to develop and implement better upscaling than put in enough raw RT power to render in an all-RT pipeline at full native and de-aliased resolution.

My eyes want raw pixels, pure polygons, no filters or after effects. My eyes get seriously sore when the pixels, graphics, textures, etc are not CLEAR.