AMD Data Center and AI Technology Premiere Live Blog: Instinct MI300, 128-Core EPYC Bergamo

Breaking out the AI silicon.

The event has concluded, and you can see our overview with the live blog below. However, here are links to our deeper coverage of each topic:

AMD Expands MI300 With GPU-Only Model, Eight-GPU Platform with 1.5TB of HBM3

AMD EPYC Genoa-X Wields 1.3 GB of L3 Cache, 96 Cores

AMD Details EPYC Bergamo CPUs With 128 Zen 4C Cores, Available Now

AMD Intros Ryzen 7000 Pro Mobile and Desktop Chips, AI Comes to Pro Series

AMD is holding its Data Center and AI Technology Premiere today, June 13, 2023, at 10 am PT here in San Francisco -- which is now. We're here to cover the event live and bring you the news as it happens as AMD CEO Lisa Su takes to the stage to reveal AMD's new AI-focused silicon.

AMD has already said that it will reveal its EPYC Bergamo chips at the event. These chips come with up to 128 cores, an innovation that's enabled by the company's new 'Zen 4c' efficiency cores. These new cores are optimized for density through several techniques, yet unlike Intel's competing efficiency cores, retain support for the chips' full feature set.

AMD is also expected to announce its Instinct MI300 accelerators. This data center APU blends a total of 13 chiplets, many of them 3D-stacked, to create a chip with twenty-four Zen 4 CPU cores fused with a CDNA 3 graphics engine and 8 stacks of HBM3. Overall the chip weighs in with 146 billion transistors, making it the largest chip AMD has pressed into production. This chip is designed to compete with Nvidia's Grace Hopper.

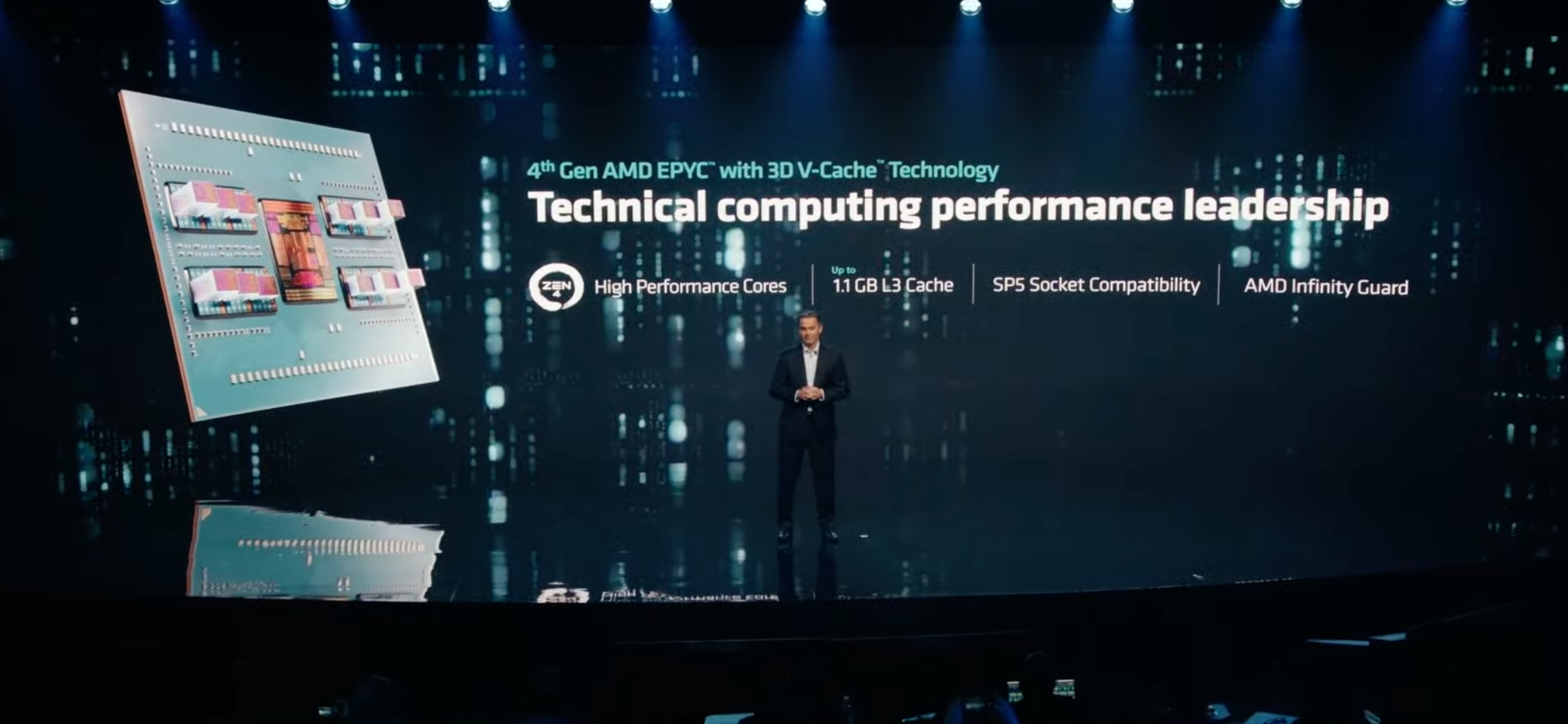

Other expected announcements include the debut of the company's Genoa-X processors, which use 3D-stacked L3 cache to boost performance in technical workloads, much like the existing Milan-X processors. We also expect news about the company's first telco-optimized chips, Sienna, and perhaps an update on the company's next-gen Zen 5 'Turin' data center chips.

We're now seated and ready for the show to begin in less than ten minutes.

AMD CEO Lisa Su has come on stage, noting that she will introduce a range of new products, including CPUs and GPUs.

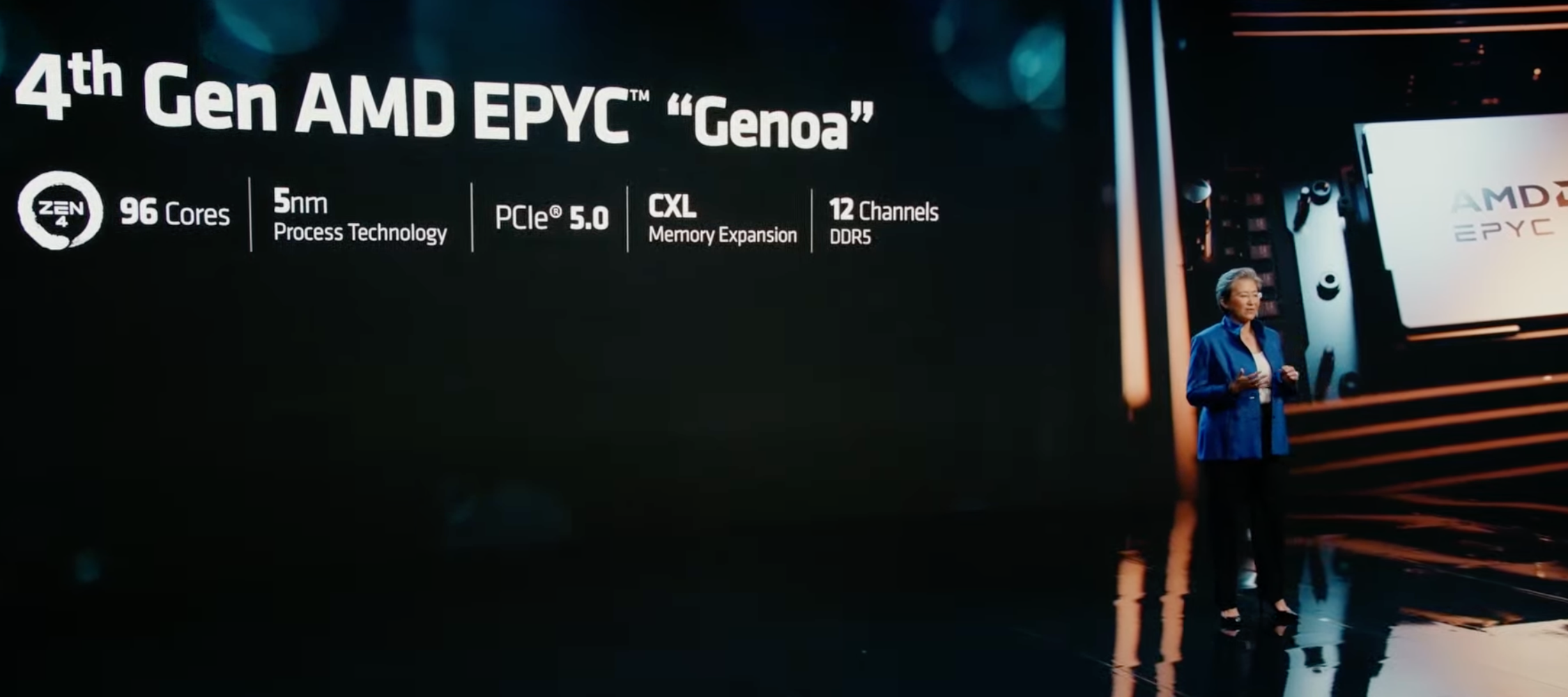

Lisa Su is outlining AMD's progress with its EPYC processors, particularly in the cloud with instances available worldwide.

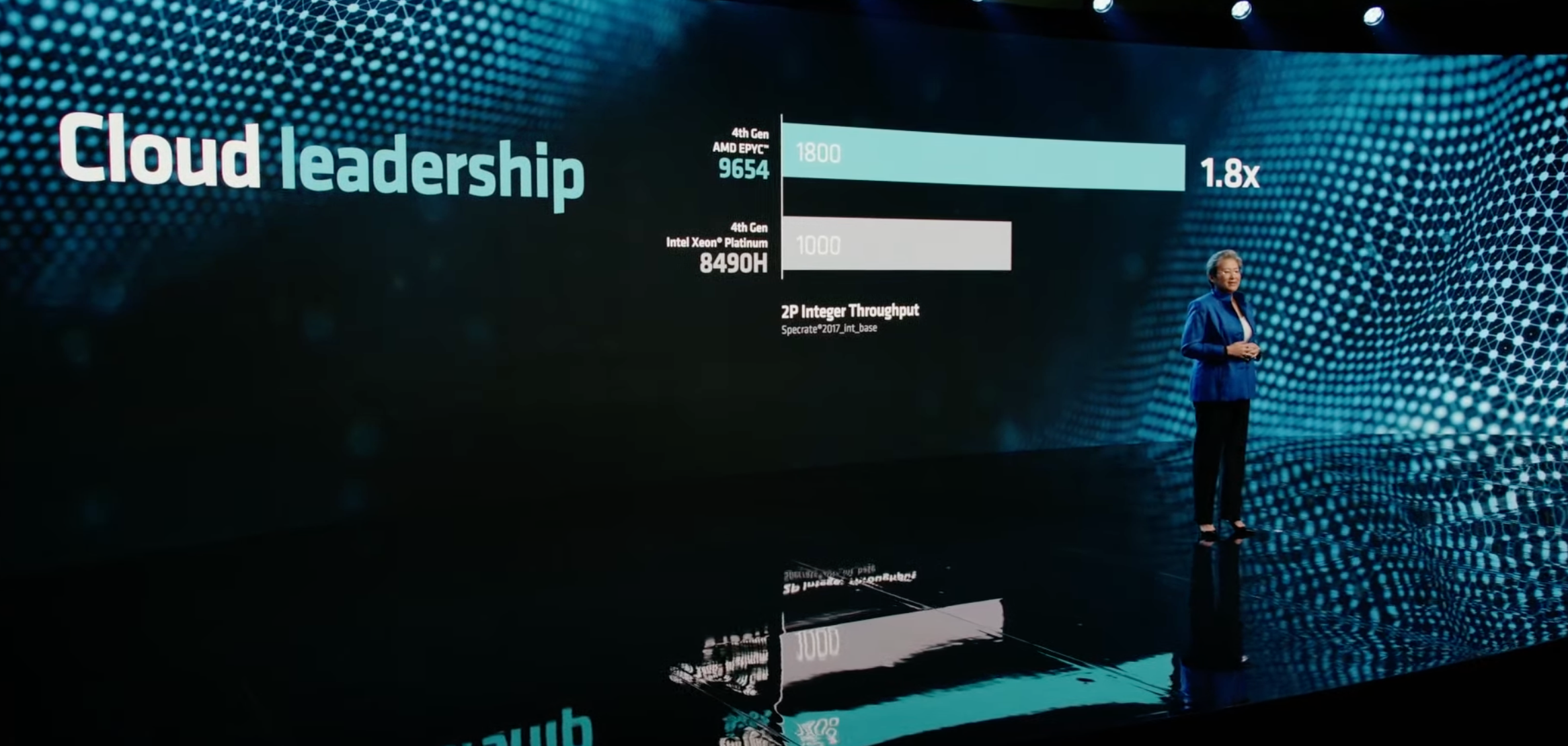

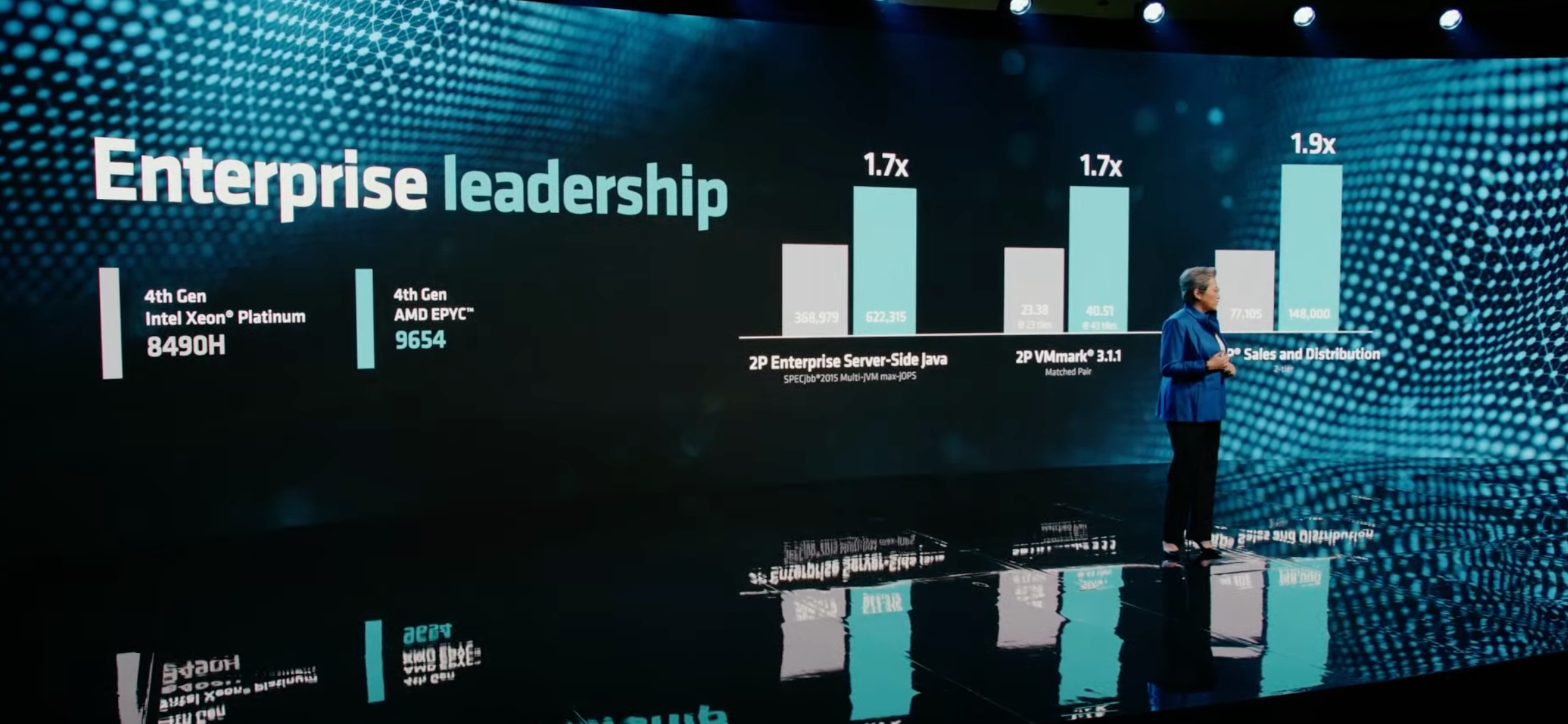

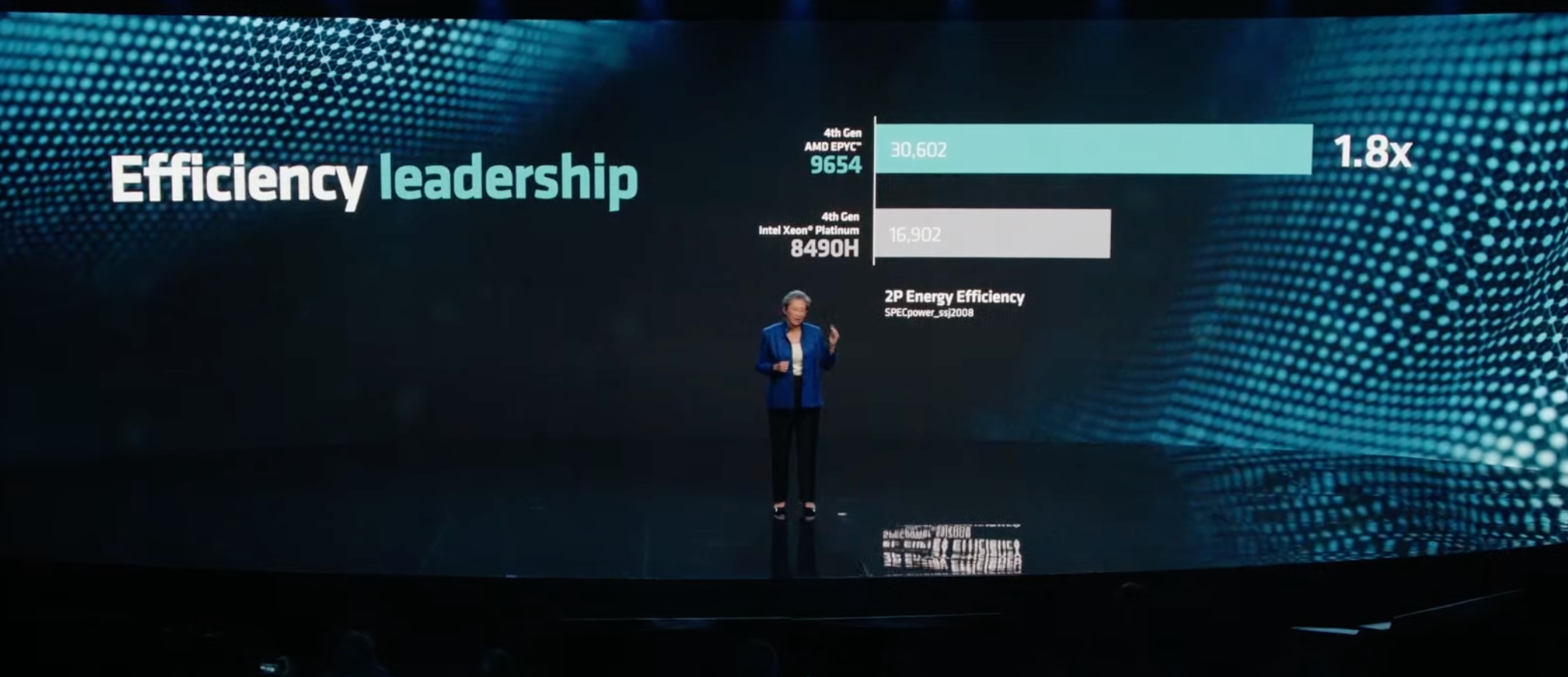

Lisa Su touts that AMD EPYC Genoa offers 1.8X the performance of Intel's competing processors in cloud workloads, and 1.9X the performance in enterprise workloads.

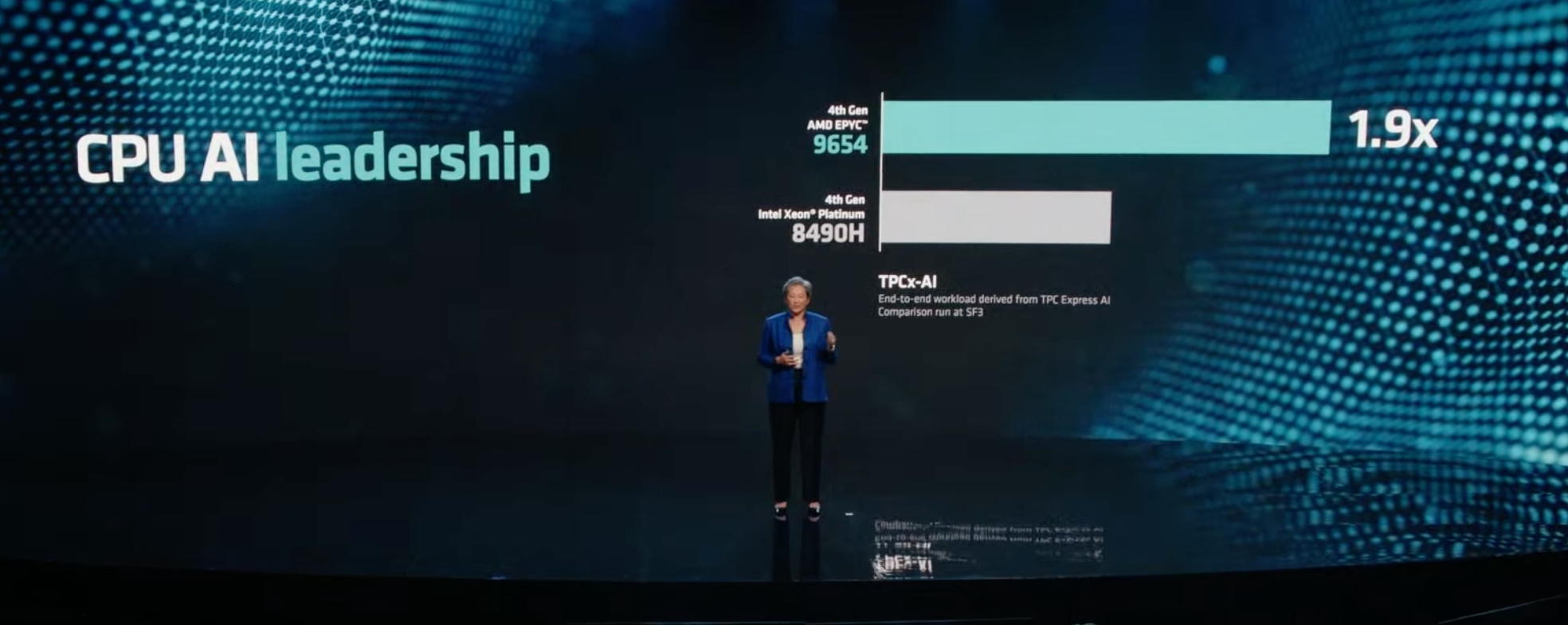

The vast majority of AI runs on CPUs, and AMD says it has a commanding lead in performance over the competing Xeon 8490H, offering 1.9X more performance. Su also touted a 1.9X efficiency advantage.

Here we can see AMD's AI benchmarks relative to Intel's Sapphire Rapids Xeon.

Dave Brown, the VP of AWS's EC2, came on stage to talk about the cost savings and performance advantages of using AMD's instances in its cloud. He provided several examples of customers that benefited from the AMD instances, with workloads spanning from HPC to standard general-purpose workloads.

Amazon announced that it is building new instances with AWS Nitro and the fourth-generation EPYC Genoa processors. The EC2 M7a instances are available in preview today, offering 50% more performance than M6a instances. AWS says they offer the highest performance of the AWS x86 offerings.

AMD will also use the EC2 M7a instances for its own internal workloads, including chip-design EDA software.

AMD also announced that Oracle will have Genoa E5 instances available in July.

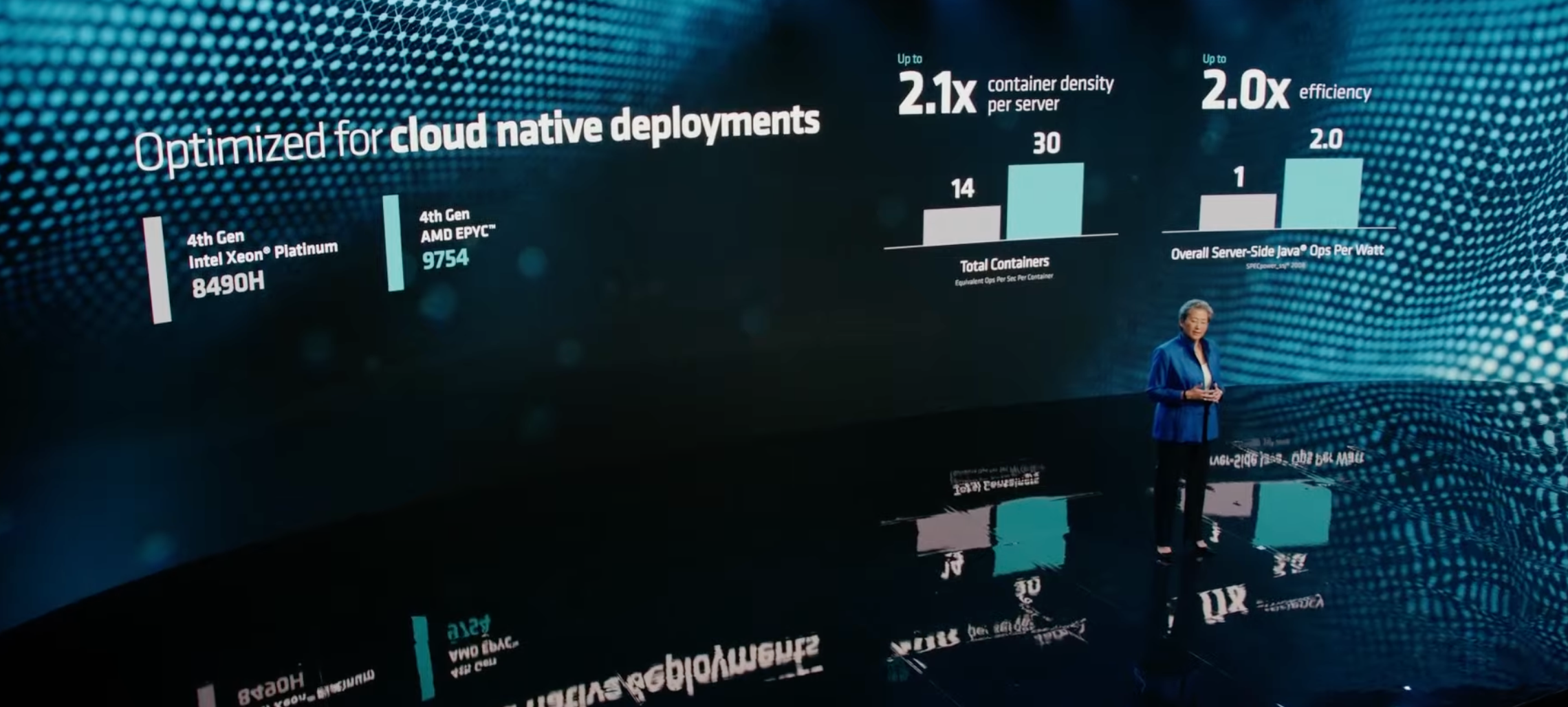

Lisa Su has now transitioned to talking about cloud-native processors, explaining that they are throughput-oriented and require the highest density and efficiency. Bergamo is the entry for this market, and uses up to 128 cores per socket with consistent x86 ISA support. The chip has 83 billion transistors and offers the highest vCPU density available.

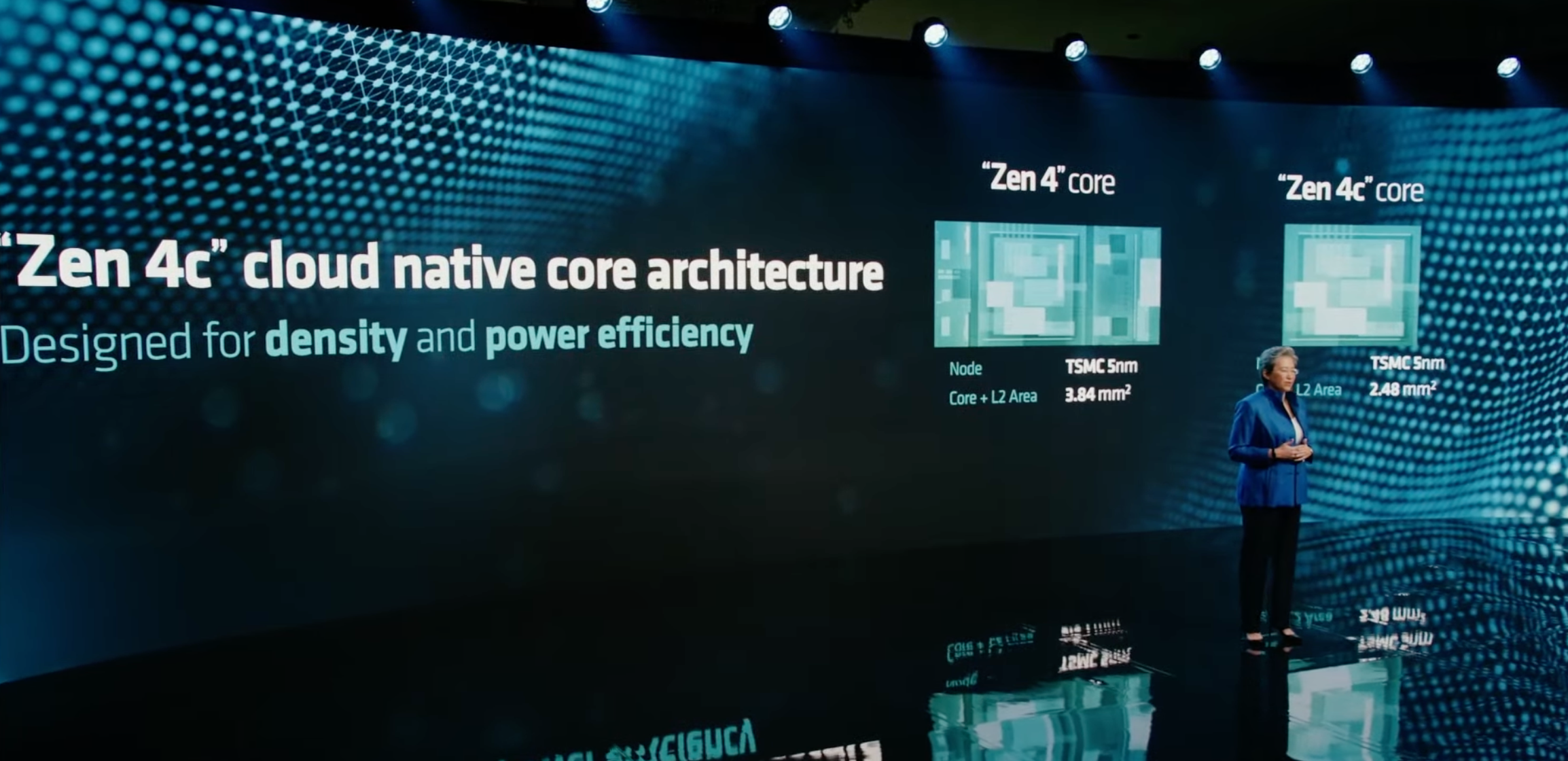

The Zen 4c core offers higher density than standard Zen 4 cores, yet maintains 100% software compatibility. AMD optimized the cache hierarchy, among other trimmings, for a savings of 35% on the die area. The CCD core chiplet is the only change.

Here is the die breakdown.

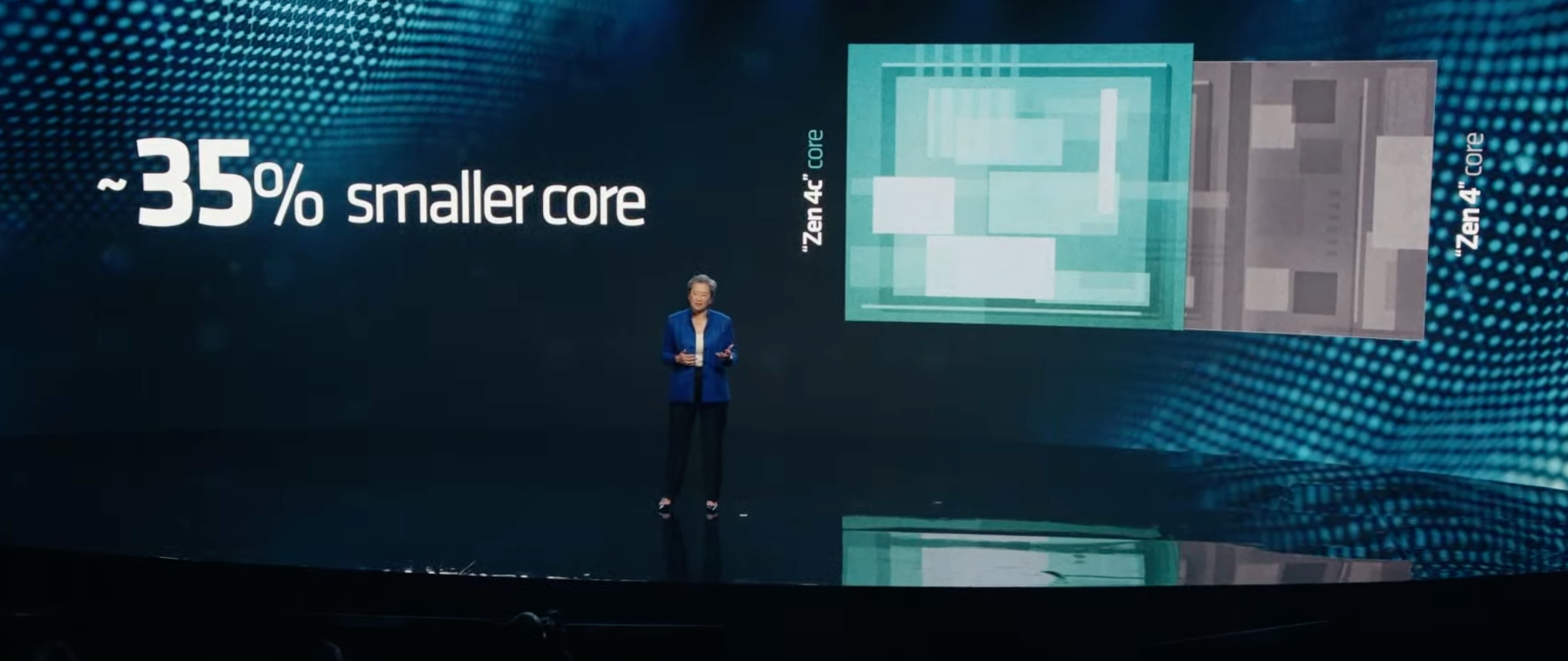

The core is 35% smaller than standard Zen 4 cores.
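To put the 35% figure in context, here is a rough back-of-the-envelope sketch of what a smaller core means for density. It assumes, purely for illustration, that the area saved goes entirely toward fitting more cores, which ignores I/O, fabric, and packaging limits. The 96-core figure is the top core count quoted for Genoa-X later in the event; the function name is our own.

```python
# Rough density math for a core that is 35% smaller than standard Zen 4.
# Assumption: all saved area is spent on additional cores (ignores I/O,
# interconnect, and package constraints).

AREA_SAVINGS = 0.35      # core area reduction quoted by AMD
BERGAMO_CORES = 128      # top Bergamo core count quoted at the event
STANDARD_CORES = 96      # top standard-core count quoted for Genoa-X


def cores_per_same_area(savings: float) -> float:
    """Return how many smaller cores fit in the area of one original core."""
    return 1.0 / (1.0 - savings)


ideal_density_gain = cores_per_same_area(AREA_SAVINGS)
actual_core_gain = BERGAMO_CORES / STANDARD_CORES

print(f"Ideal cores per unit area: {ideal_density_gain:.2f}x")
print(f"Actual core count increase: {actual_core_gain:.2f}x")
```

Under these assumptions the ideal gain (about 1.54x) is higher than the actual jump from 96 to 128 cores (about 1.33x), which is plausible given that not everything on the package shrinks along with the cores.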

Here is a diagram of the chip package.

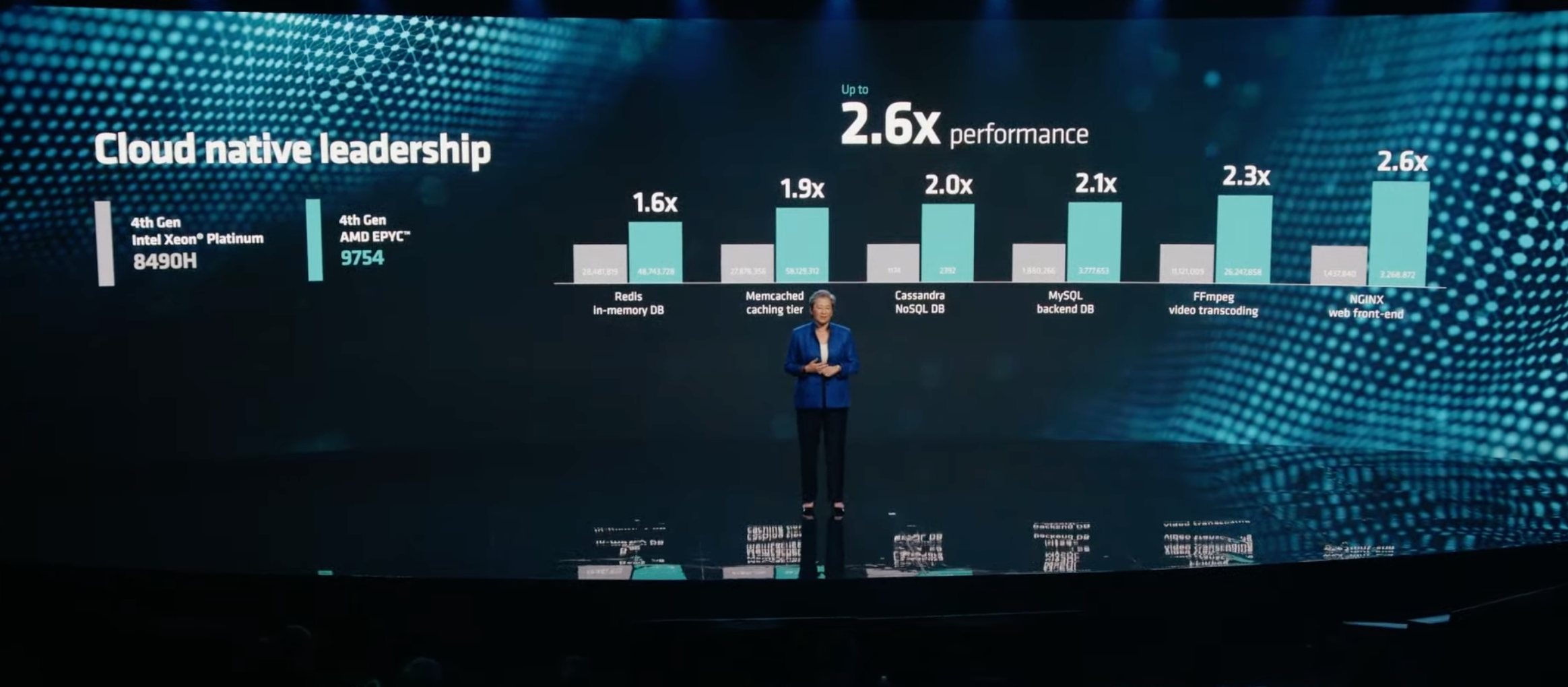

Bergamo is shipping now to AMD's cloud customers. AMD also shared the following performance benchmarks.

A Meta representative joined Lisa Su on the stage to talk about the company's use of AMD's EPYC processors for its infrastructure. Meta is also open-sourcing its AMD-powered server designs.

Meta says that it has learned that it can rely upon AMD for both chip supply and a strong roadmap that it delivers on schedule. Meta plans to use Bergamo, which offers 2.5X more performance than the previous-gen Milan chips, for its infrastructure. Meta will also use Bergamo for its storage platforms.

Dan McNamara, AMD's SVP and GM of the Server Business Unit, has come to the stage to introduce two new products. Genoa-X will add more than 1 GB of L3 cache with 96 cores.

Genoa-X is available now. Four SKUs, 16 to 96 cores. SP5 socket compatibility, so it will work with existing EPYC platforms.

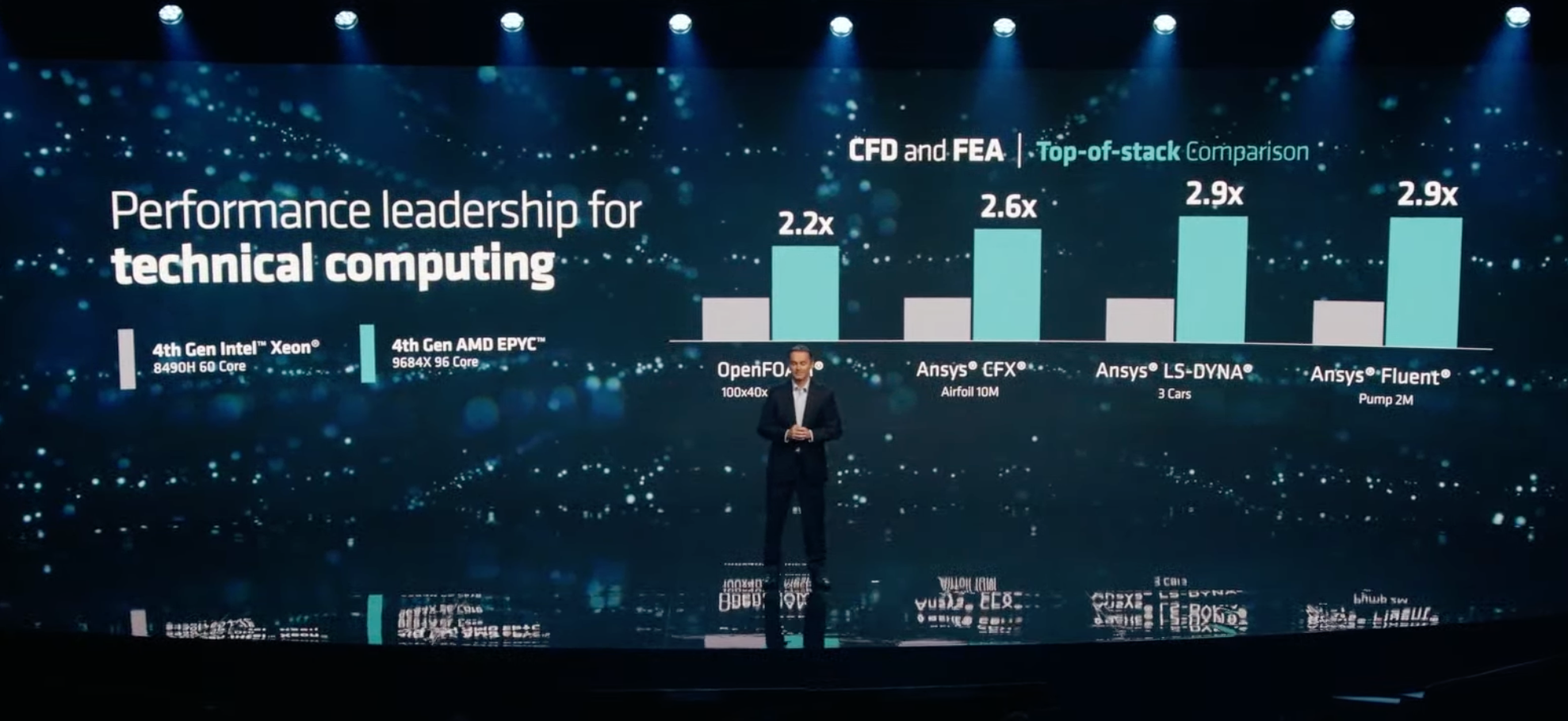

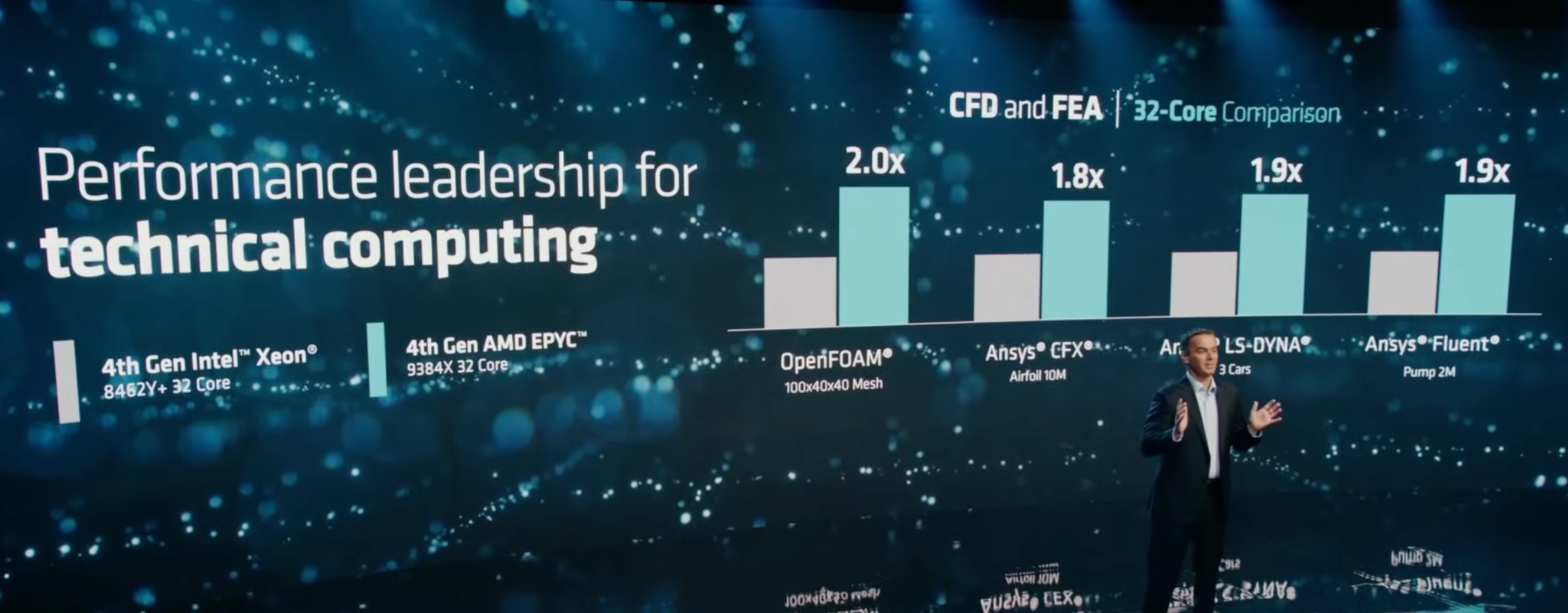

McNamara showed performance benchmarks of Genoa-X against Intel's 80-core Xeon.

Here we can see a comparison of Genoa-X against an Intel Xeon with the same number of cores.

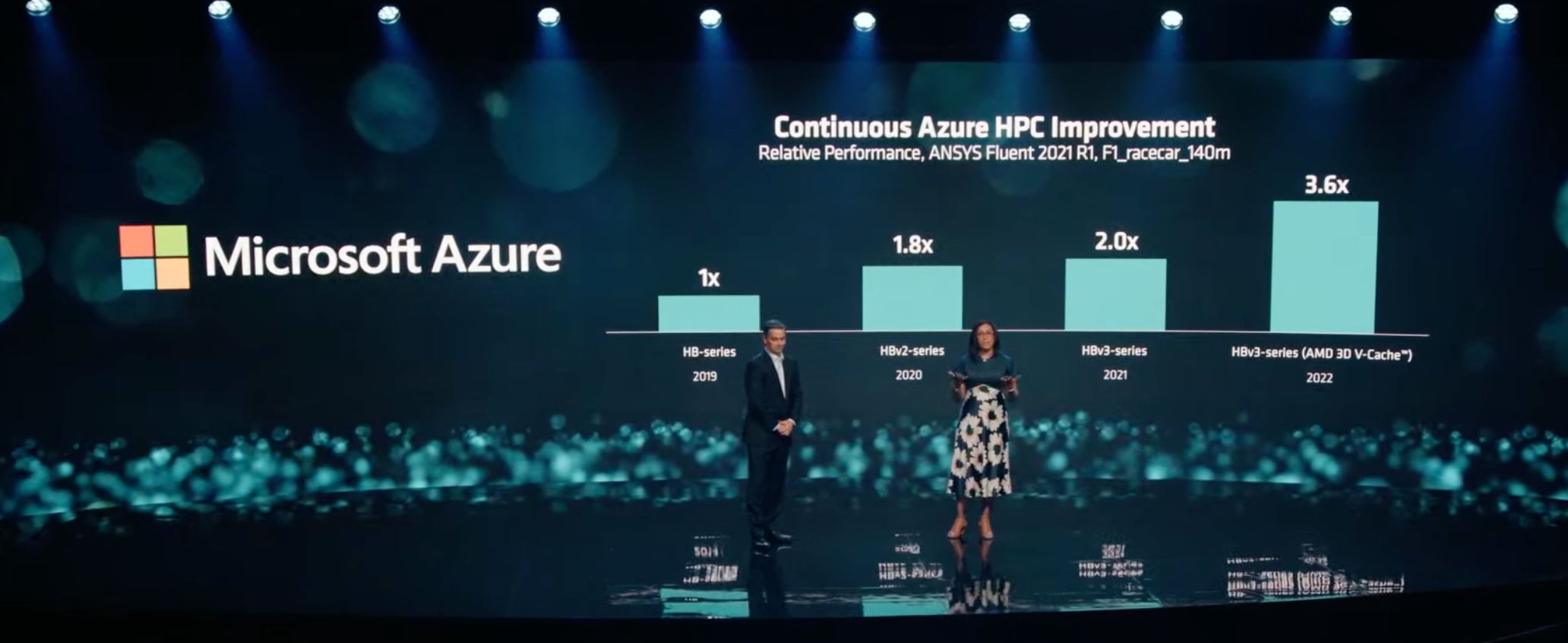

A Microsoft representative joined McNamara on the stage to show Azure HPC performance benchmarks. In just four years, Azure has seen a 4X improvement in performance with the EPYC processors.

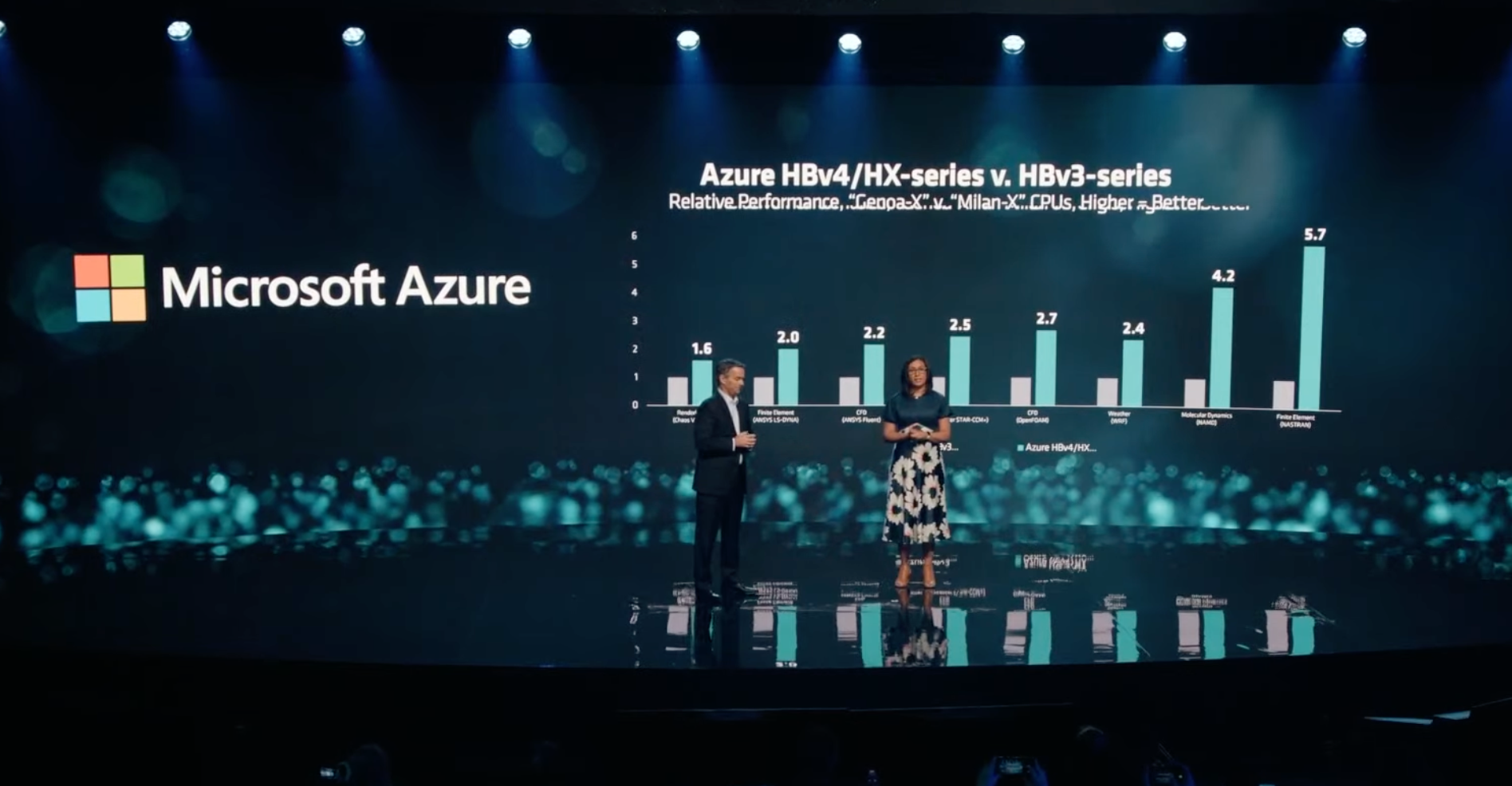

Azure announced the general availability of its new HBv4 and HX-series instances with Genoa-X, and new HBv3 instances. Azure also provided benchmarks to show the performance gains, which top out at 5.7X.

AMD's Sienna is optimized for telco and edge workloads and comes to market in the second half of the year.

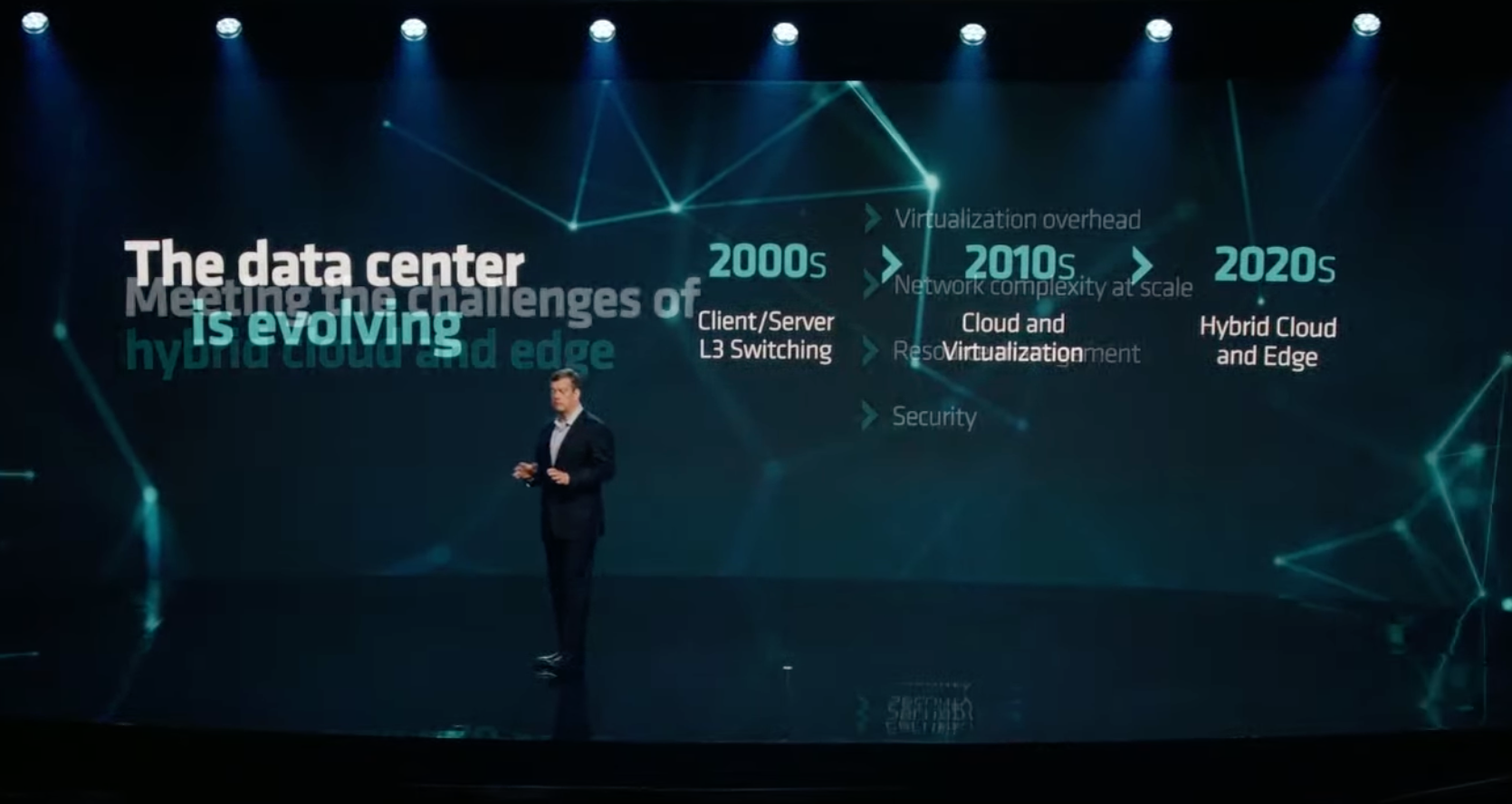

Forrest Norrod, AMD's executive vice president and general manager of the Data Center Solutions Business Group, has come to the stage to share information about how the data center is evolving.

Citadel Securities has joined Norrod on the stage to talk about their shift in workloads to AMD's processors, powering a 35% increase in performance. They use over a million concurrent AMD cores.

Citadel also uses AMD's Xilinx FPGAs for its work in financial markets with its high-frequency trading platform. It also uses AMD's low-latency Solarflare networking.

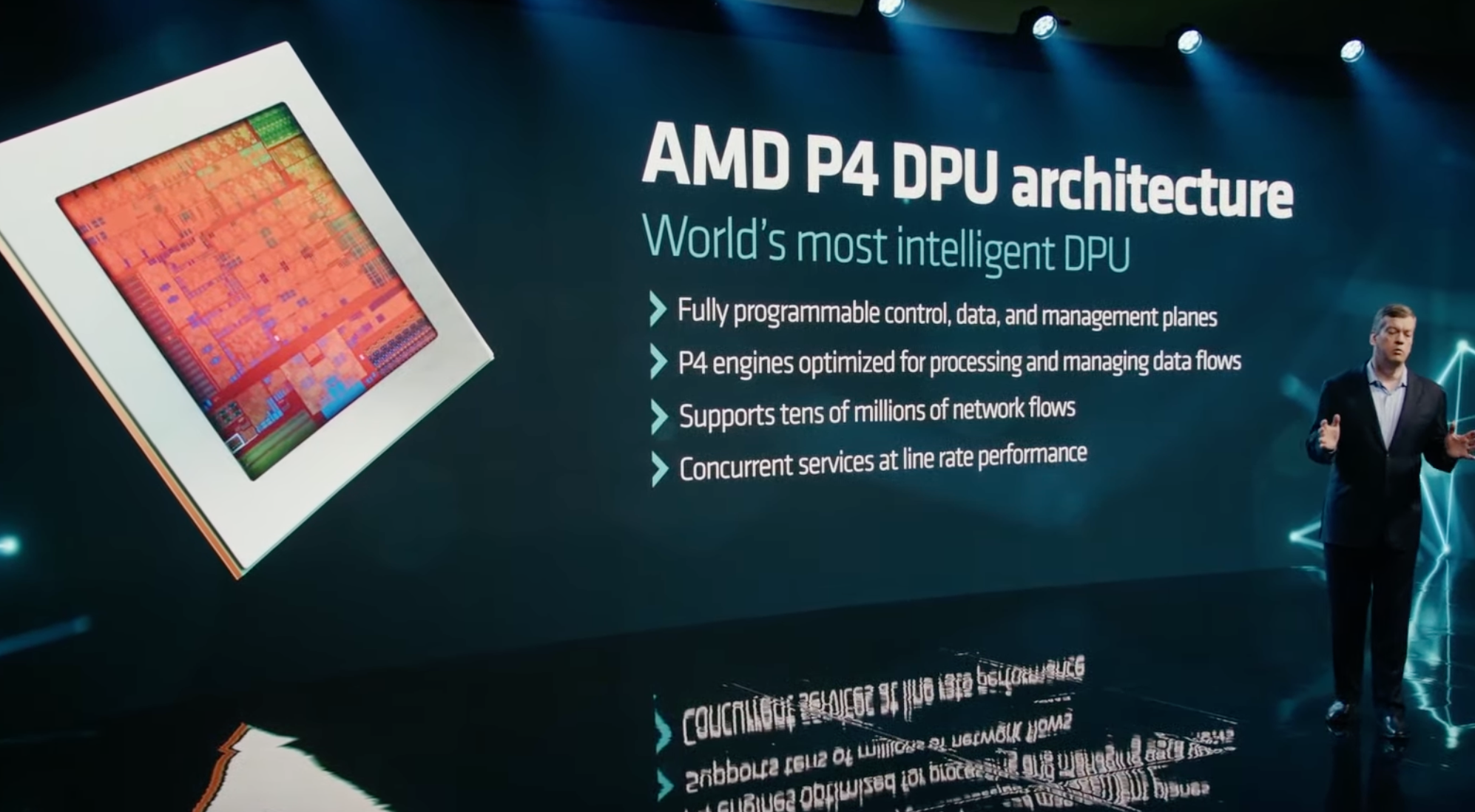

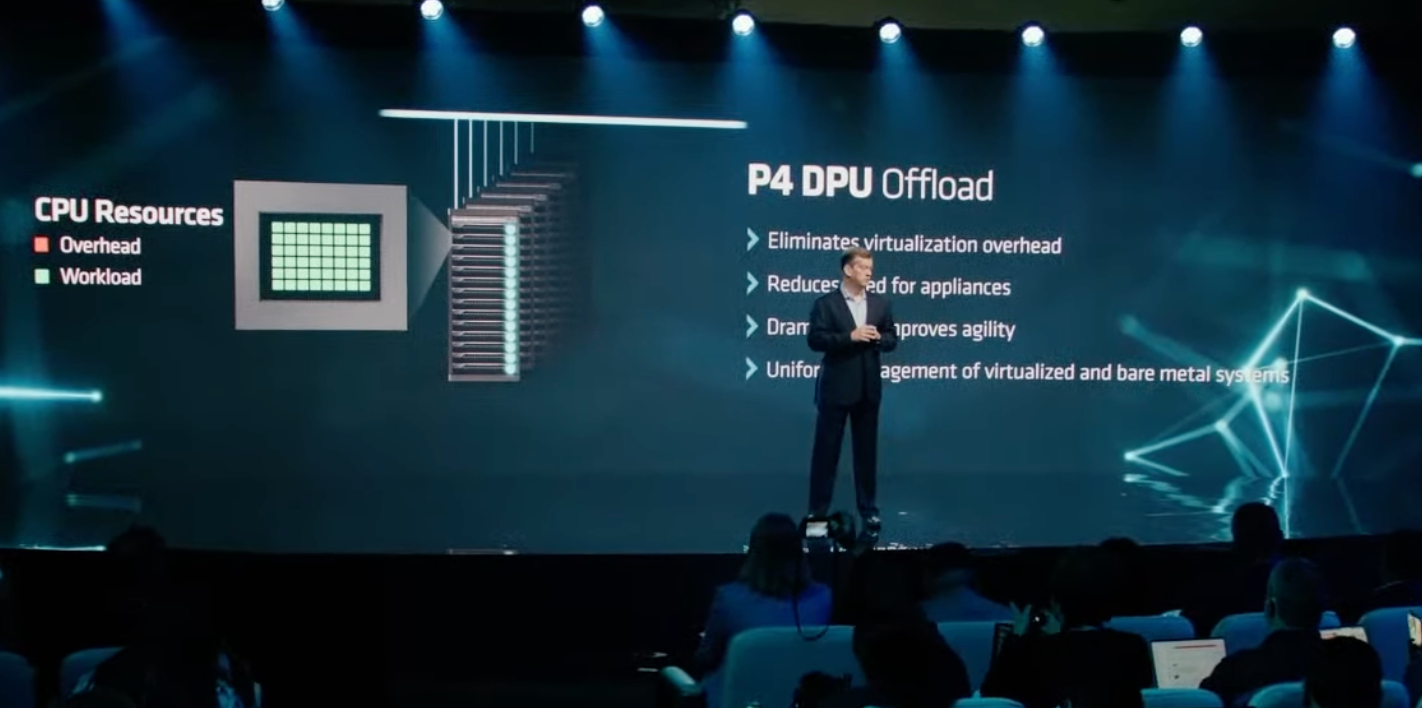

AMD purchased Pensando to acquire DPU technology. Norrod explained how AMD is using these devices to reduce networking overhead in the data center.

AMD's P4 DPU offloads networking overhead and improves server manageability.

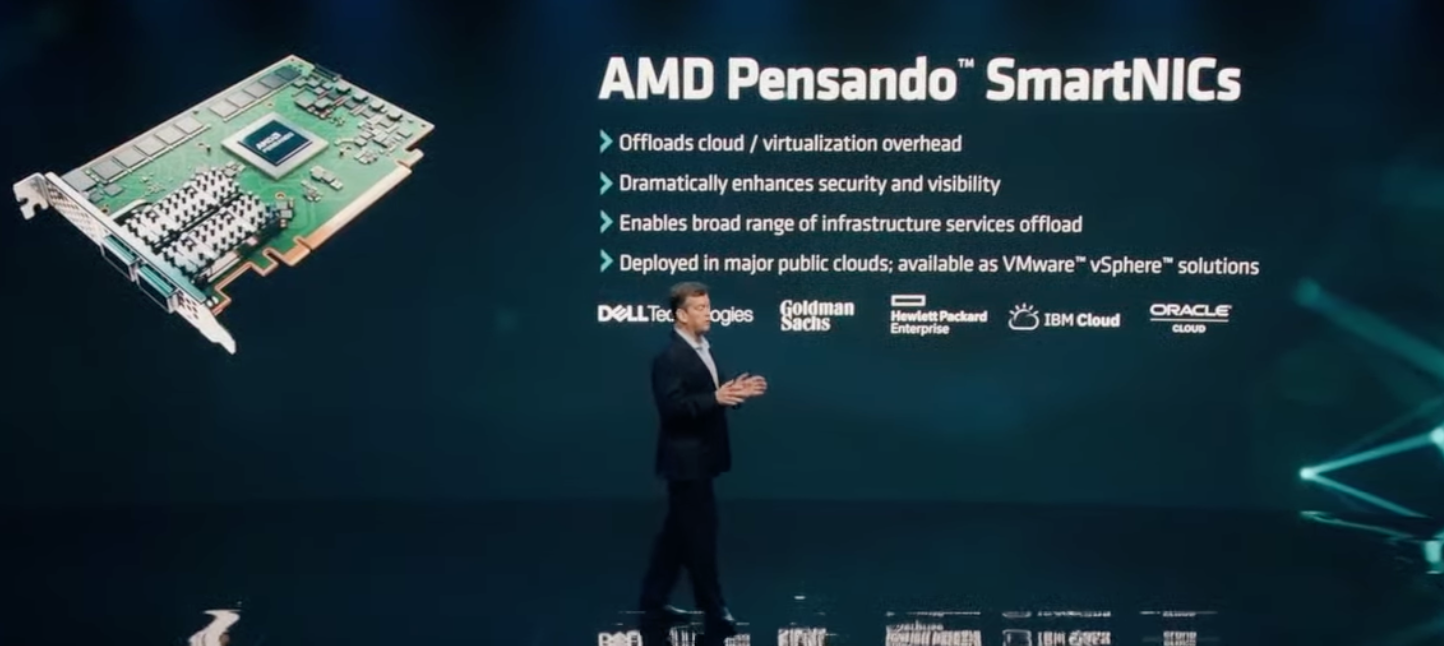

AMD's Pensando SmartNICs are an integral part of the new data center architectures.

The next step? Integrating P4 DPU offload into the network switch itself, thus providing services at the rack level. This comes in the form of the Smart Switch AMD developed with Aruba Networks.

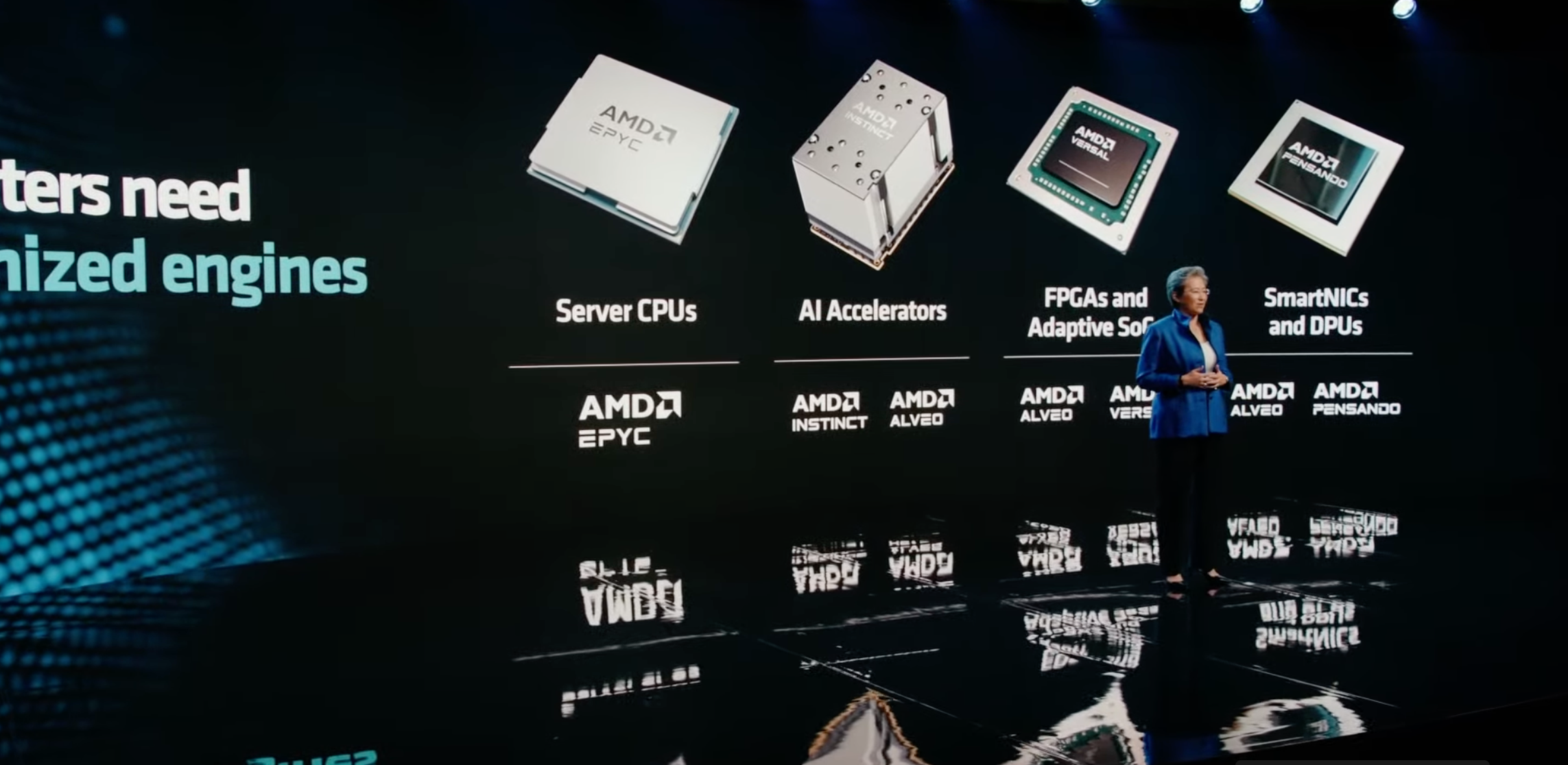

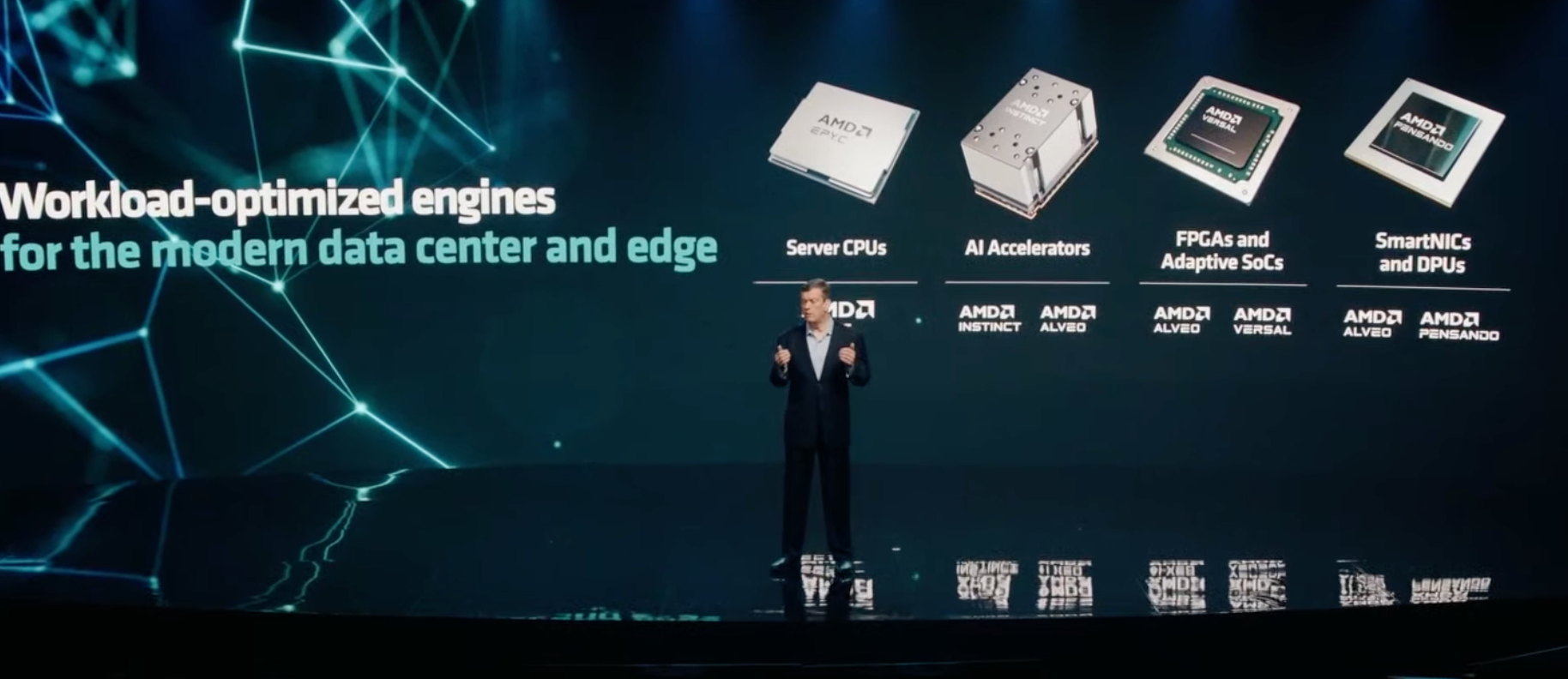

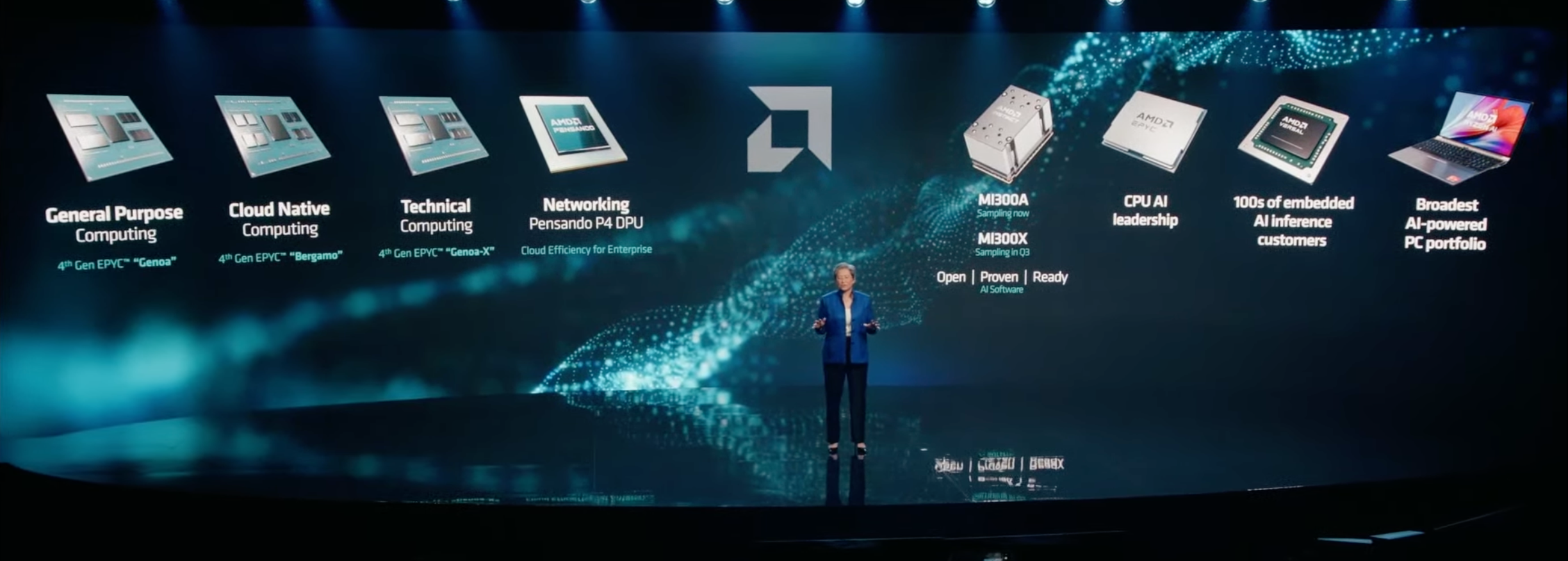

Lisa Su has come back to the stage to talk about AMD's broad AI silicon portfolio, including the Instinct MI300.

Lisa Su outlined the massive opportunity in the AI market, driven by large language models (LLMs), with the TAM growing to around $150 billion.

AMD Instinct GPUs are already powering many of the world's fastest supercomputers.

AMD President Victor Peng came to the stage to talk about the company's efforts around developing the software ecosystem. That's an important facet, as Nvidia's CUDA software has proven to be a moat. AMD plans to use an 'Open, Proven, and Ready' philosophy for its AI software ecosystem development, which Peng is in charge of.

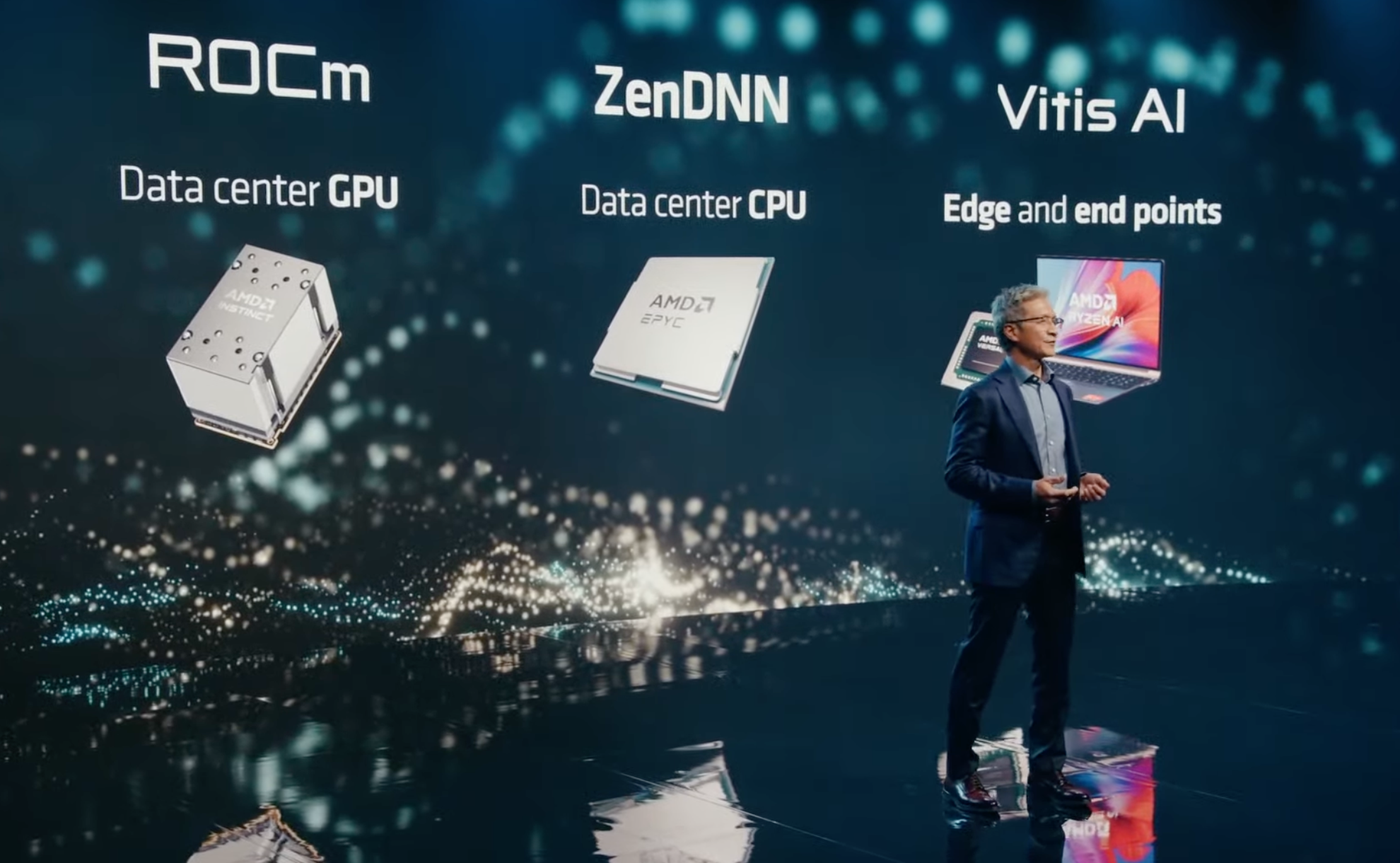

Peng showed some of AMD's latest hardware efforts.

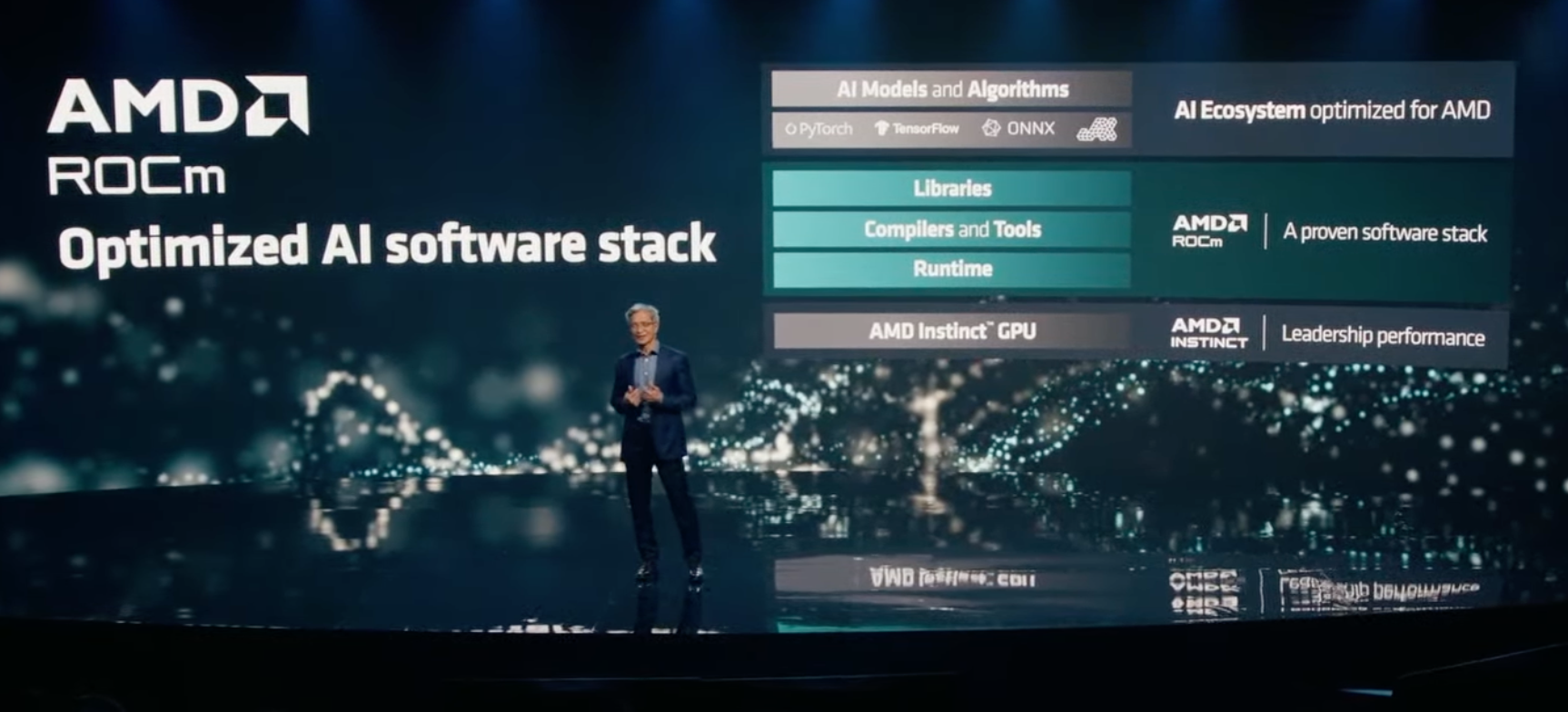

AMD's ROCm is a complete set of libraries and tools for its optimized AI software stack. Unlike the proprietary CUDA, this is an open platform.

AMD is continually optimizing the ROCm suite.

PyTorch is one of the most popular AI frameworks in the industry, and they've joined Peng on the stage to talk about their collaboration with ROCm. The new PyTorch 2.0 is nearly twice as fast as the previous version. AMD is one of the founding members of the PyTorch Foundation.

Here are details of PyTorch 2.0.

AMD is shifting to talking about AI models, with Hugging Face joining Peng on the stage. AMD and Hugging Face announced a new partnership, optimizing their models for AMD CPUs, GPUs, and other AI hardware.

Lisa Su has returned to the stage, and now we expect to learn about the biggest announcement of the show: the Instinct MI300. This is for training larger models, like the LLMs behind the current AI revolution.

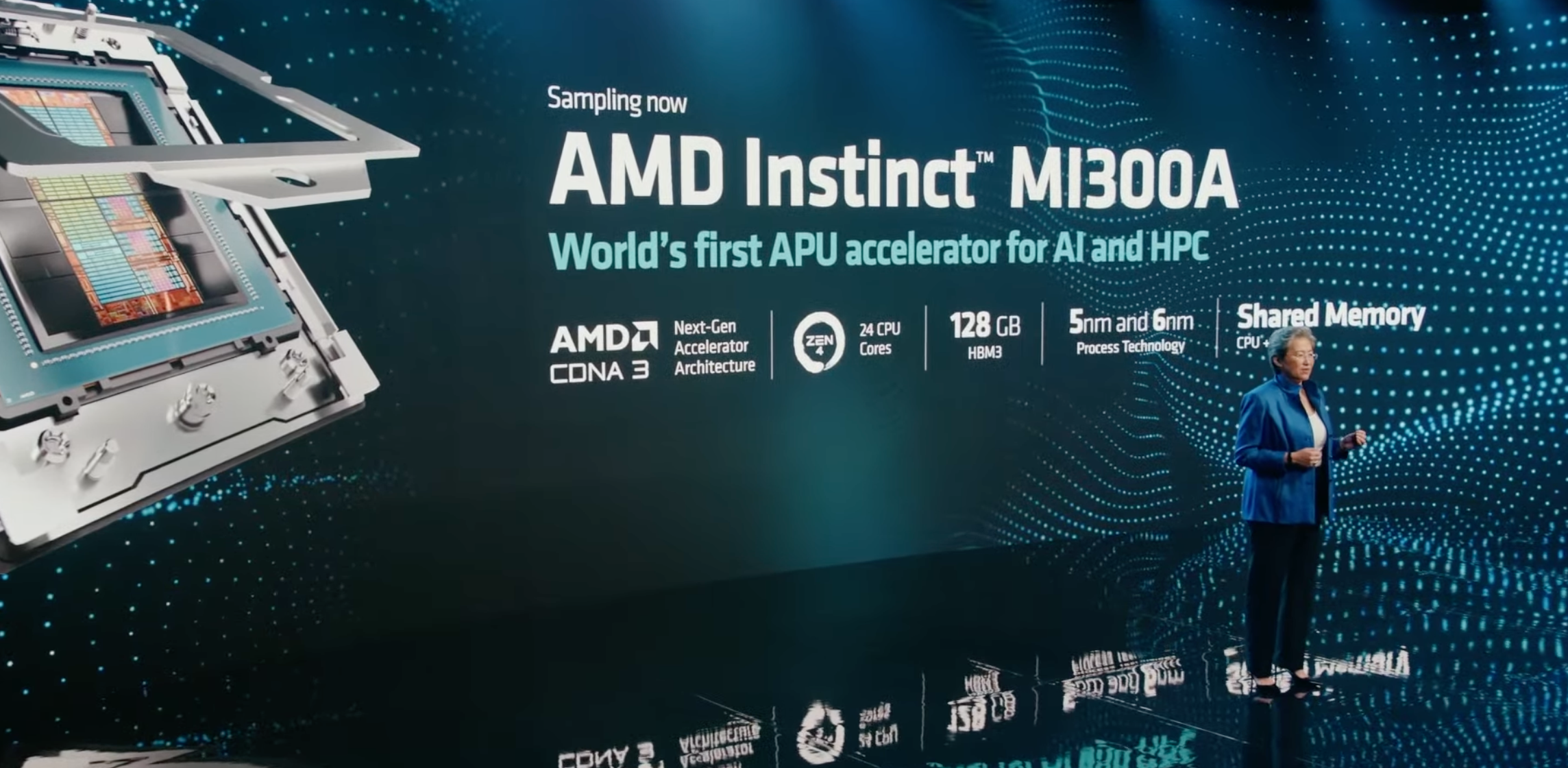

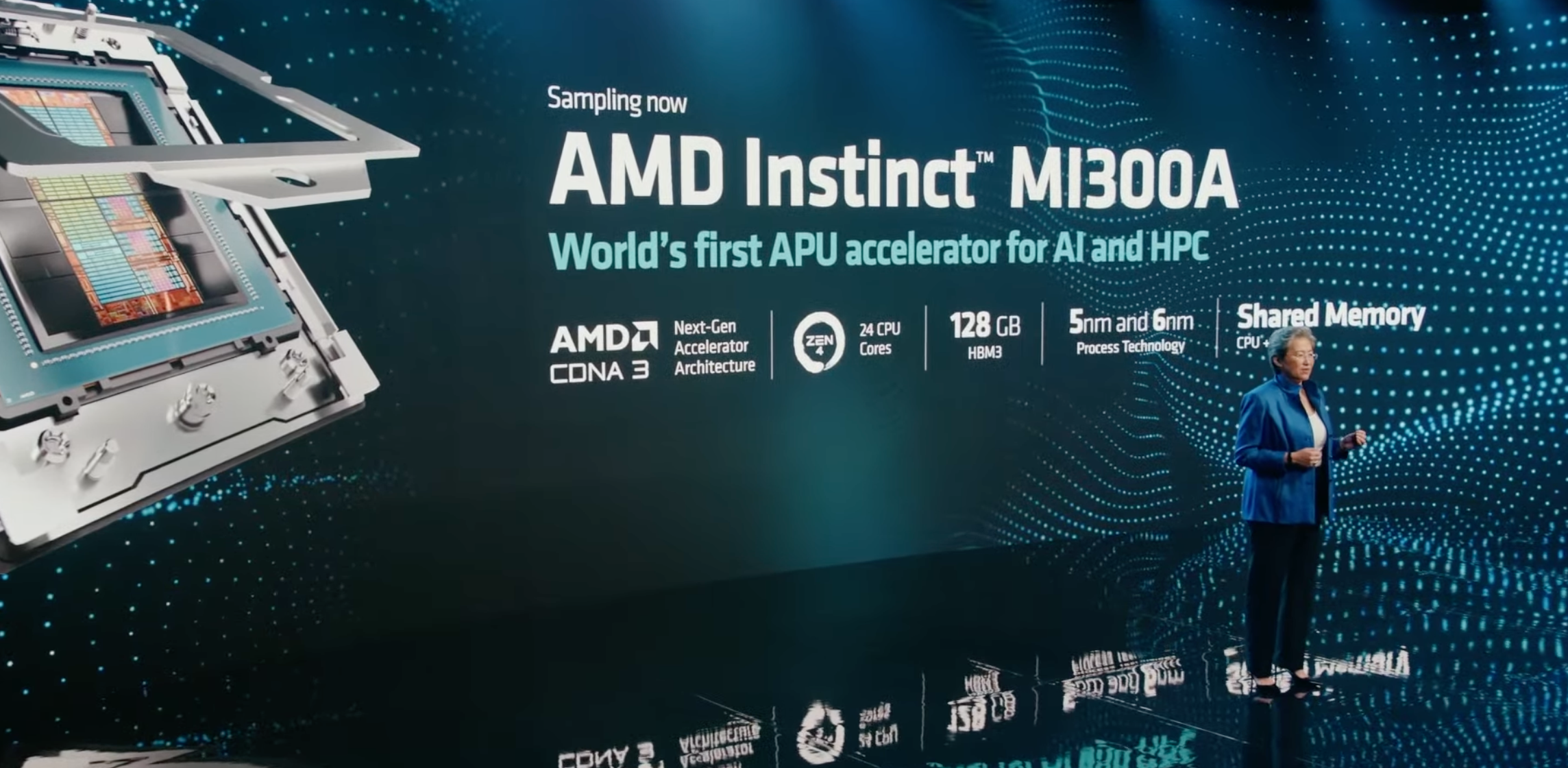

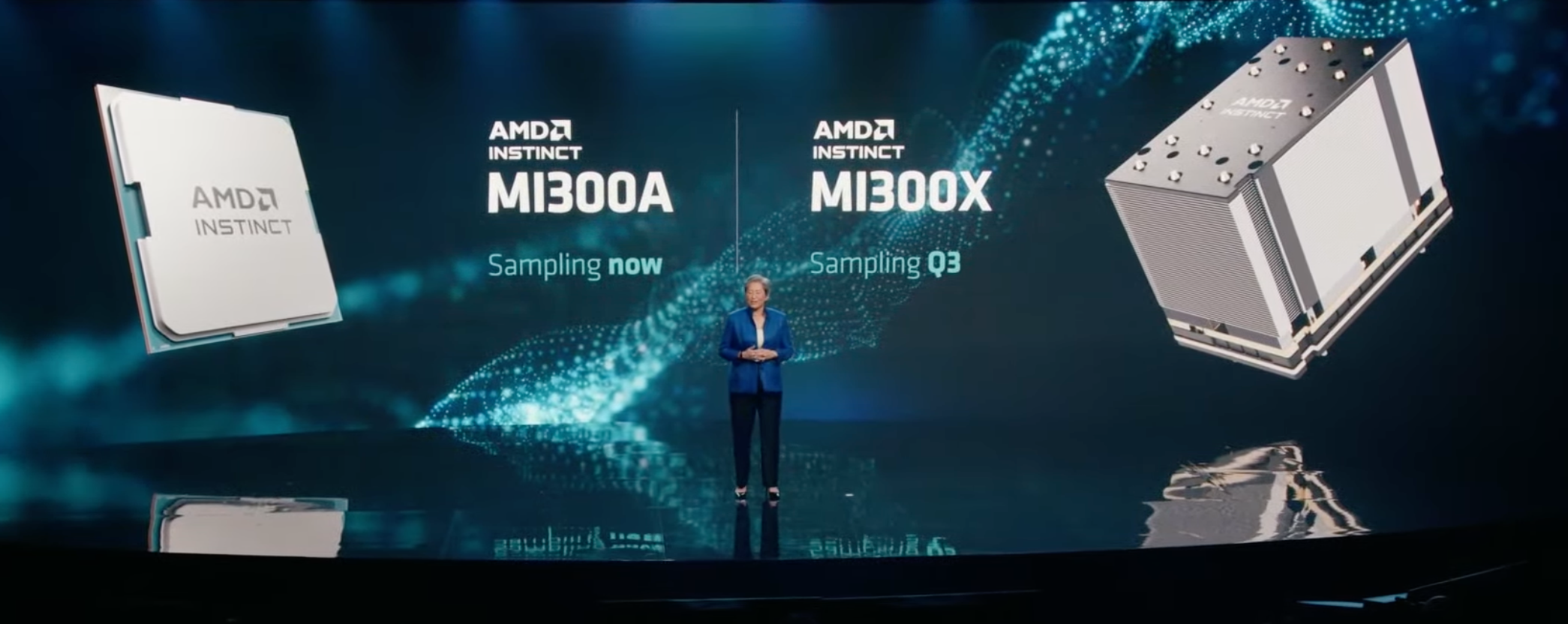

Su is talking about the Instinct roadmap, and how the company previewed the MI300 with the CDNA 3 GPU architecture paired with 24 Zen 4 CPU cores, tied to 128GB of HBM3. This gives 8X more performance and 5X higher efficiency than the MI250.

146 billion transistors across 13 chiplets.

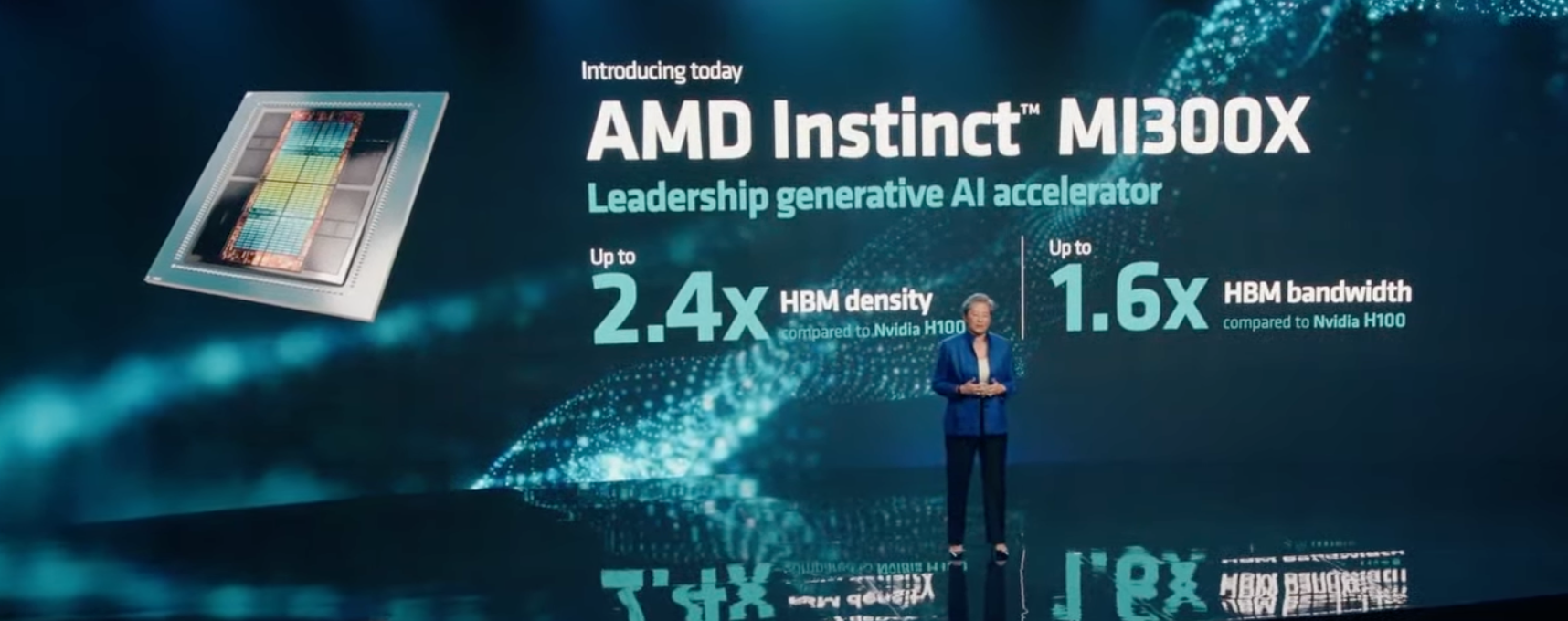

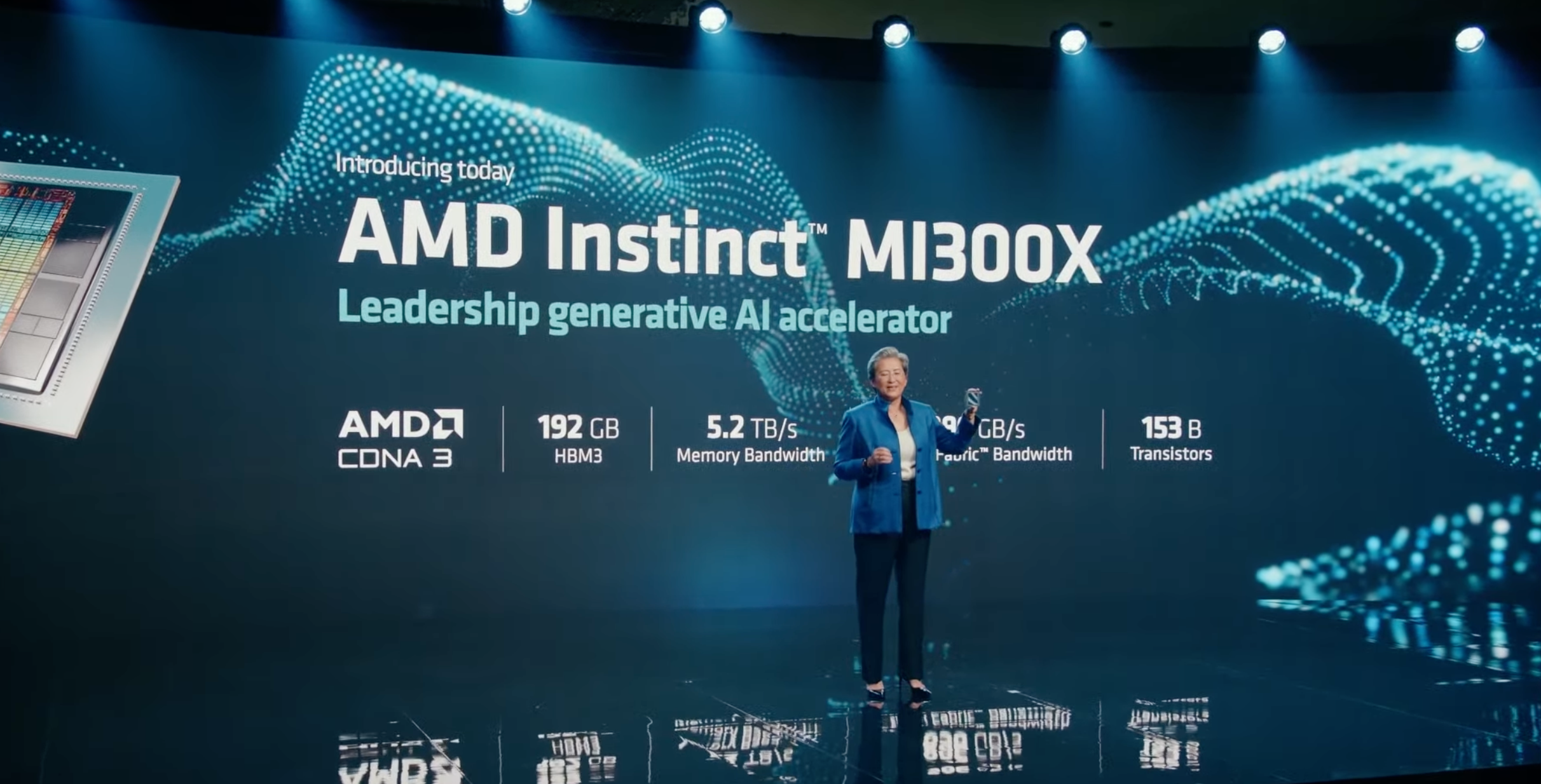

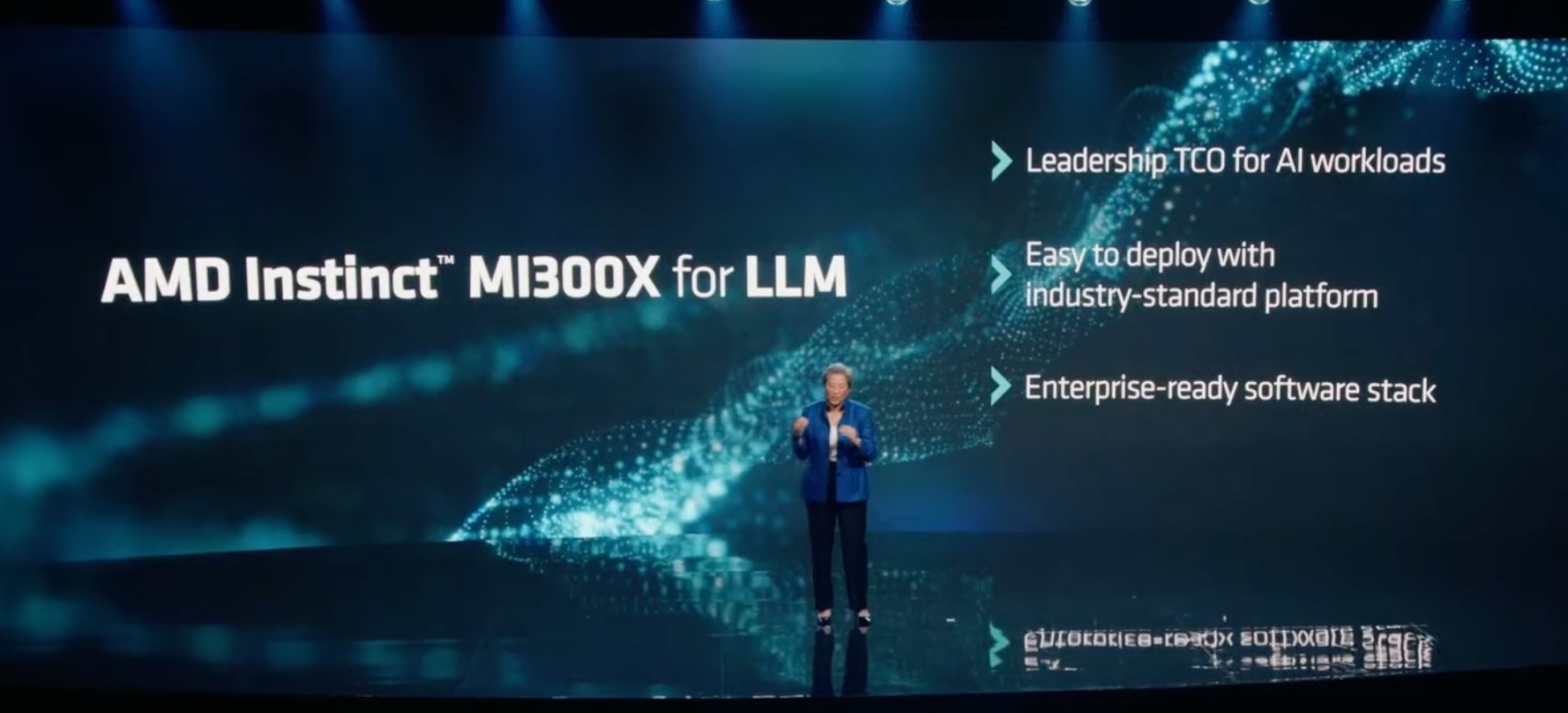

There will be a GPU-only MI300, the MI300X. This chip is optimized for LLMs. It delivers 192GB of HBM3, 5.2 TB/s of bandwidth, and 896 GB/s of Infinity Fabric bandwidth.

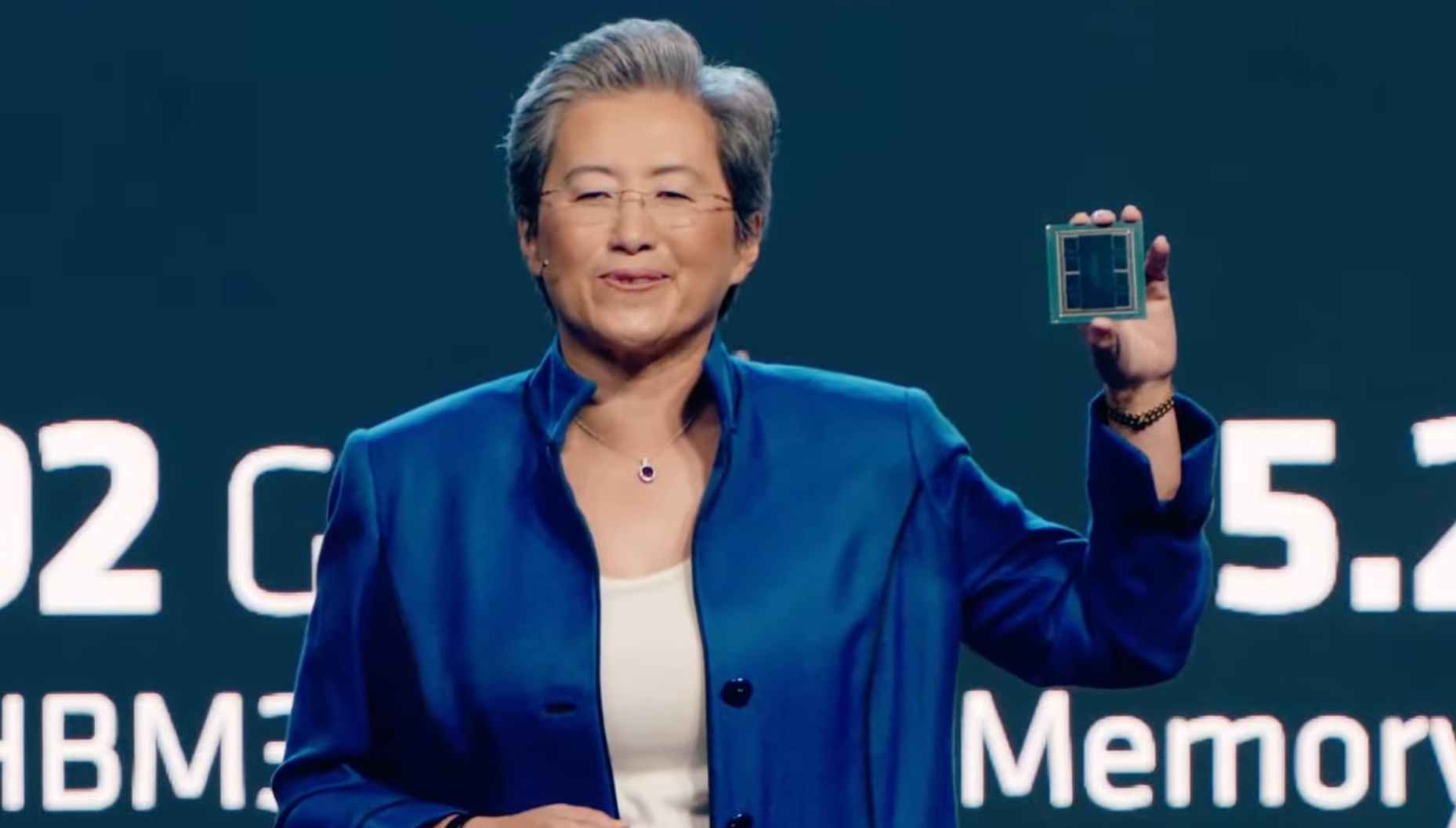

And here's the new chip. 153 billion transistors all in one package with 12 5nm chiplets.

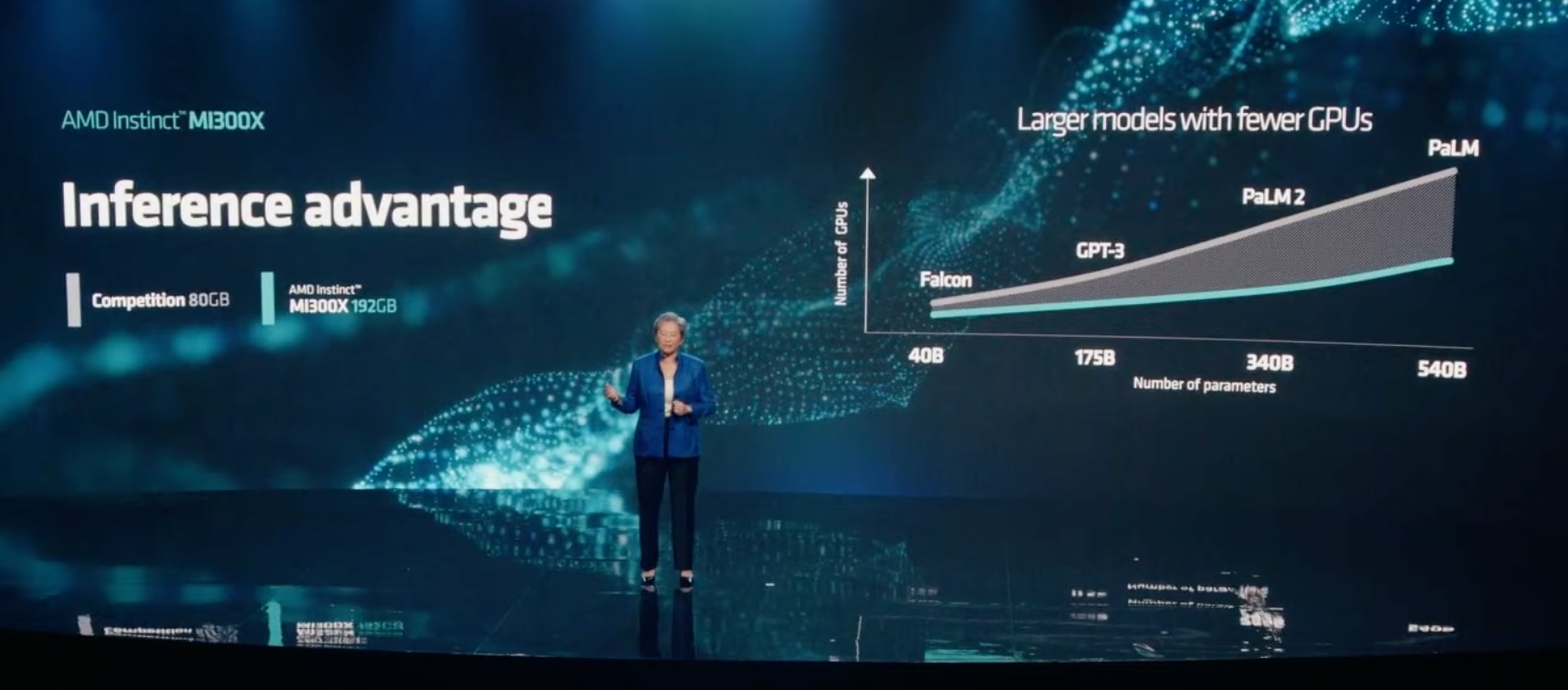

MI300X offers 2.4X the HBM density and 1.6X the HBM bandwidth of the Nvidia H100, meaning that AMD can run larger models than Nvidia's chips.
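As a quick sanity check on those ratios, the sketch below compares the MI300X figures AMD quoted with H100 figures that are our assumption, not something shown on stage: 80GB of HBM3 and 3.35 TB/s for the SXM version of the H100.

```python
# Sanity check of the MI300X vs. H100 memory claims.
# MI300X numbers are the ones AMD quoted at the event.
MI300X_HBM_GB = 192
MI300X_BANDWIDTH_TBS = 5.2

# Assumption: H100 SXM with 80GB of HBM3 at 3.35 TB/s (not from AMD's slides).
H100_HBM_GB = 80
H100_BANDWIDTH_TBS = 3.35

capacity_ratio = MI300X_HBM_GB / H100_HBM_GB
bandwidth_ratio = MI300X_BANDWIDTH_TBS / H100_BANDWIDTH_TBS

print(f"HBM capacity:  {capacity_ratio:.1f}X")
print(f"HBM bandwidth: {bandwidth_ratio:.1f}X")
```

With those assumed H100 numbers, the capacity ratio lands on 2.4X and the bandwidth ratio rounds to 1.6X, matching AMD's claims.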

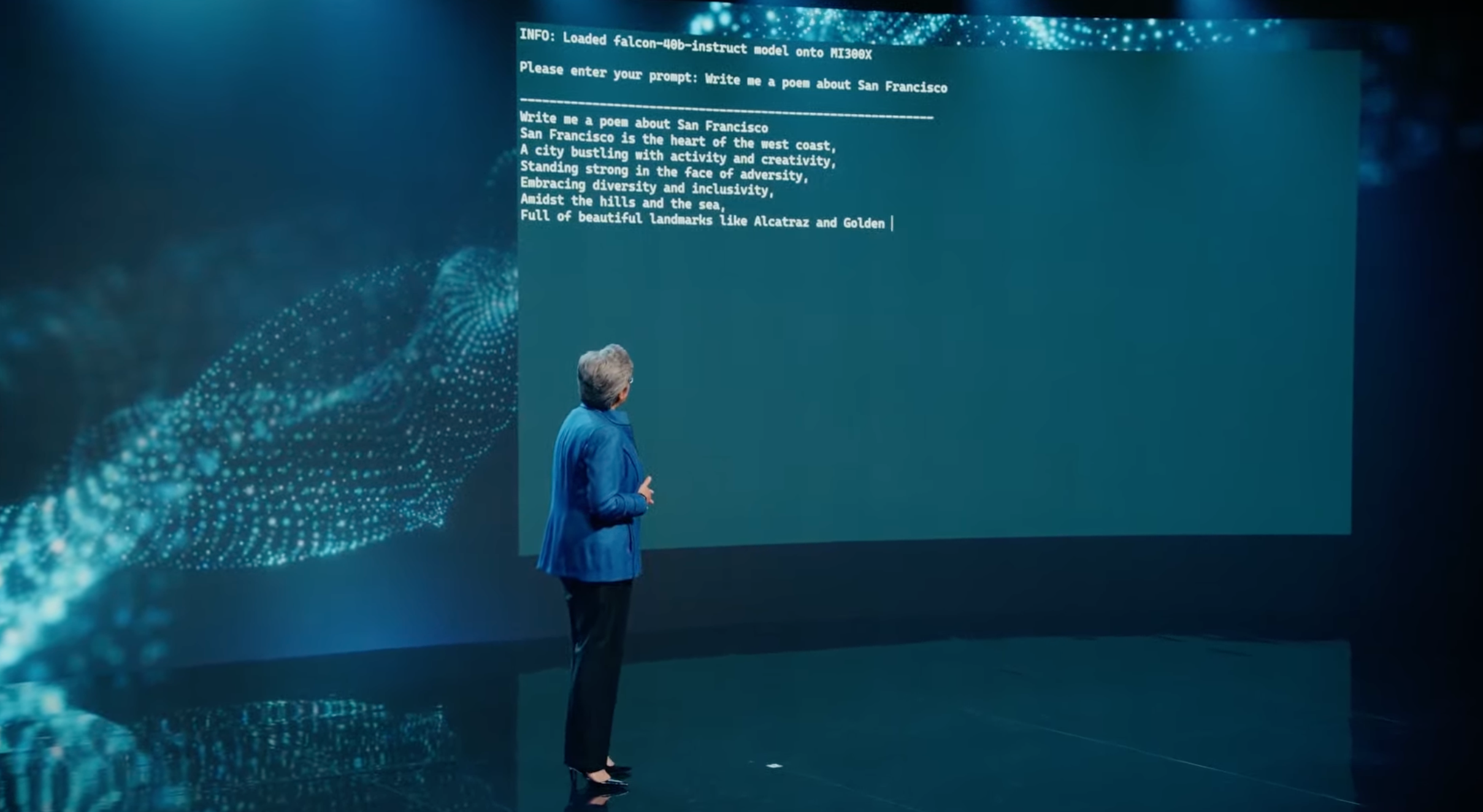

Lisa Su conducted a demo of the MI300X running a Hugging Face AI model. The LLM wrote a poem about San Francisco, where the event is taking place. This is the first time a model this large has been run on a single GPU. A single MI300X can run a model up to 80 billion parameters.

This allows fewer GPUs for large language models, thus delivering cost savings.
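The math behind that claim can be sketched roughly as follows. This is a simplified estimate that counts only the model weights at 16-bit precision (2 bytes per parameter, our assumption) and ignores activations, KV cache, and framework overhead, so real deployments need more headroom. The 80GB comparison point is an assumed capacity for a competing GPU, and the function names are our own.

```python
import math


def weights_gb(params_billions: float, bytes_per_param: int = 2) -> float:
    """Approximate weight footprint in GB (1 billion params * N bytes = N GB)."""
    return params_billions * bytes_per_param


def gpus_needed(params_billions: float, hbm_gb: float,
                bytes_per_param: int = 2) -> int:
    """Minimum number of GPUs needed to hold the weights alone."""
    return math.ceil(weights_gb(params_billions, bytes_per_param) / hbm_gb)


MODEL_PARAMS_B = 80      # largest single-GPU model size AMD quoted
MI300X_HBM_GB = 192      # MI300X capacity quoted at the event
SMALLER_GPU_HBM_GB = 80  # assumption: an 80GB GPU for comparison

footprint = weights_gb(MODEL_PARAMS_B)
print(f"Weights: {footprint:.0f} GB")
print(f"192GB GPUs needed: {gpus_needed(MODEL_PARAMS_B, MI300X_HBM_GB)}")
print(f"80GB GPUs needed:  {gpus_needed(MODEL_PARAMS_B, SMALLER_GPU_HBM_GB)}")
```

Under these assumptions, an 80-billion-parameter model needs about 160GB for its weights, which fits on one 192GB GPU but would have to be split across at least two 80GB GPUs.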

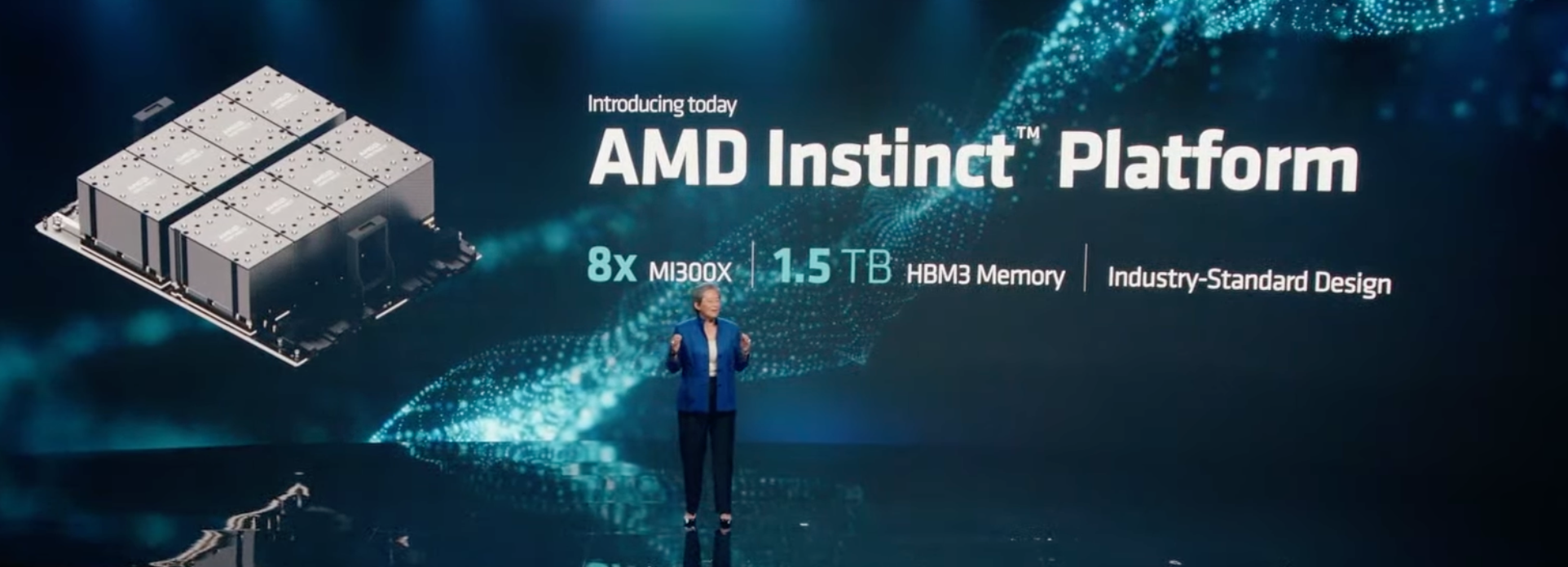

Su also announced the AMD Instinct Platform, which has eight MI300X GPUs in an industry-standard OCP design, offering a total of 1.5TB of HBM3 memory.
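The platform total follows directly from the per-GPU capacity, as this short sketch shows. The only assumption is the conversion of 1,024GB per TB, which is what makes the total come out to an even 1.5TB.

```python
# Total HBM3 on the eight-GPU Instinct Platform.
GPUS_PER_PLATFORM = 8
HBM_PER_GPU_GB = 192
GB_PER_TB = 1024  # assumption: binary conversion

total_gb = GPUS_PER_PLATFORM * HBM_PER_GPU_GB
total_tb = total_gb / GB_PER_TB

print(f"{total_gb} GB = {total_tb} TB of HBM3")
```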

MI300A, the CPU+GPU model, is sampling now. The MI300X and 8-GPU Instinct Platform will sample in the third quarter, and launch in the fourth quarter.

Lisa Su wrapped up the presentation. Here are a few more wrap-up slides. Stay tuned for our ongoing coverage over the coming hours.
