
Comparison Products

Normally, we would throw up a bunch of products to compete against the RocketAIC 7608AW, but things have changed this time around. The only storage we have that could compare is the HighPoint Rocket 1608A from last year, which uses the same RAID controller but Samsung 990 Pro drives instead of 9100 Pros. This isn’t a fair comparison because we’re using the 8TB 9100 Pros on the 7608AW, and it would have been more appropriate to have the 1608A with the 4TB 990 Pros, if anything. Still, you get to see how these brand-new high-end 8TB PCIe 5.0 drives perform in a large array. We have to admit that it is pretty badass.

One alternative would be to use 8TB SanDisk WD Black SN8100s, which should be available later this year. 8TB performance compared to 2TB would have to be extrapolated for now. As for last gen, we think the 8TB WD Black SN850X is the most realistic drive you’d get for an AIC like this. It’s again tough to see how this would play out with eight drives, but the biggest difference would be in large sequential performance. It’s more affordable than the first 8TB consumer SSDs that appeared on the market — the Sabrent Rocket Q and Rocket 4 Plus — so that is our Gen 4 recommendation if you plan to experiment at a lower cost.

However, we’re not comparing any of these today for one very important reason: the platform. While we did put the RocketAIC 7608AW through its paces on our normal Intel-based testing platform, we found some performance anomalies that failed to show its full potential. It’s no mystery that AMD and Intel platforms perform differently with storage, and that’s aside from other quirks. We actually tested on two different Intel platforms before settling on AMD as the most representative of what you’d get out of this hardware. We also had to adjust our test selection for similar reasons. As a result, we’re not making direct comparisons, but the information above is still relevant if you’re planning to invest in the RocketAIC 7608AW.

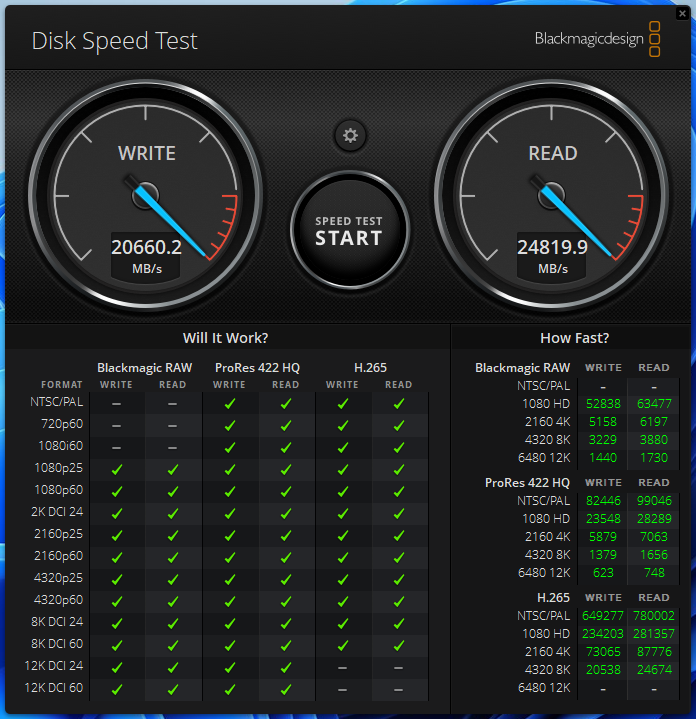

Media Testing — Blackmagic Disk Speed Test

Blackmagic is not a test we typically use, but it is often relied upon by content creators and can be useful within the context of media. High-end storage in particular is a necessity when dealing with large multimedia files and assets. This test evaluates a range of storage formats and measures read and write throughput.

Overall performance is pegged at 20.66 GB/s for writes and 24.82 GB/s for reads. Reads are “easier” to do than writes, so performance is generally higher there. These numbers exceed the capability of any single PCIe 5.0 drive but might seem underwhelming for an 8-drive array. In fact, this isn’t bad when you consider that normal file transfers occur at a queue depth of one. Higher queue depths allow for superior parallelization, already the backbone of solid state storage performance, and enable you to get the most out of a solution like this. 20-25 GB/s is nothing to sneeze at for media, though, keeping in mind that this AIC hosts 64TB of storage.

Normally, we would show 3DMark results in this section. As you can probably guess, you don’t see any gains with gaming by throwing more drives at it. Games depend on low-queue-depth reads, both sequential and random, with variable I/O sizes. You’re not really going to see any latency improvements here. The RocketAIC 7608AW is better applied to areas where you need lots of bandwidth, which includes multimedia. Larger block sizes and higher queue depths are even better. That’s closer to a DirectStorage workload, but we have yet to really see that in action in the real world. If you’re someone who deals with many large files on a daily basis, the RocketAIC 7608AW can make more sense, especially if you are working with multiple arrays on this single AIC.

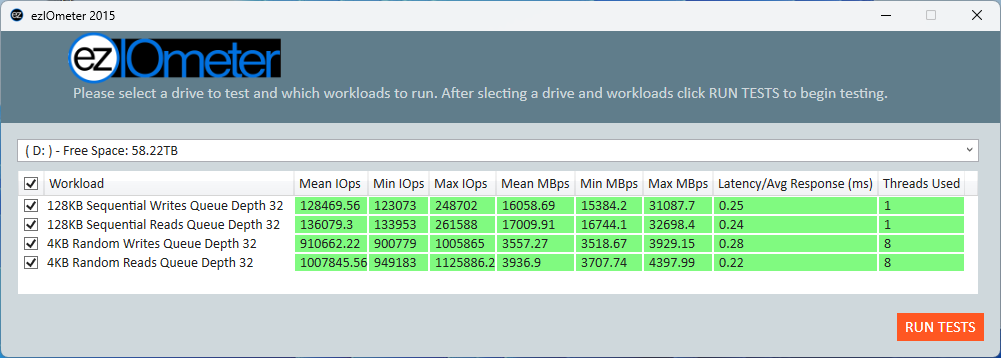

NVMe Testing — ezIOmeter

ezIOmeter is designed to make Iometer more accessible to general users with a less complicated UI. Iometer is old but robust and can handle more complex workloads that have become possible with the shift from SATA to NVMe (PCIe) SSDs. We use Iometer for our sustained write testing, but it can be useful in other areas as well. For an alternative under Linux, ezFIO is a good analogue. These tools can be useful for getting specific performance information from storage. In this case, we’re testing 4KB random and 128KB sequential I/O with a QD of 32 as a balance between consumer and enterprise workloads.


This test gives us a quick look at the RocketAIC 7608AW’s performance profile. You need at least a QD of 8 to really hit all the drives, and a QD of 32 gives each of the 8 drives four outstanding requests, or 16KB in flight with 4KB I/O. Sequential I/O is often single-threaded, while smaller, random I/O needs more threads for higher efficiency. With all that in mind, the 8-drive AIC hits around 32 GB/s for sequential and around 1 million IOPS for random 4K. It quickly becomes clear that 4KB performance needs some changes to improve throughput. This can come from higher queue depths and smaller stripe sizes. With mixed workloads in particular, this solution is overkill for most users, as you can’t reach the full potential.
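The queue-depth arithmetic here can be sketched in a few lines. This is only a back-of-the-envelope illustration that assumes outstanding requests spread evenly across the drives, which random I/O only approximates. The function name is ours and is not part of ezIOmeter or any other tool.

```python
def per_drive_load(queue_depth: int, drives: int, io_size_kb: int) -> tuple[float, float]:
    """Return (outstanding requests per drive, KB in flight per drive).

    Assumption: requests are spread evenly across all member drives,
    which is an idealization for random I/O on a striped array.
    """
    requests_per_drive = queue_depth / drives
    kb_in_flight = requests_per_drive * io_size_kb
    return requests_per_drive, kb_in_flight


if __name__ == "__main__":
    # 4KB random I/O on an 8-drive array at a few queue depths.
    for qd in (1, 8, 32):
        reqs, kb = per_drive_load(qd, drives=8, io_size_kb=4)
        print(f"QD{qd}: {reqs:g} requests per drive, {kb:g} KB in flight per drive")
```

Below a QD of 8, the idealized per-drive figure drops under one request, which is another way of saying that some drives sit idle.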

Sequential performance is more reasonable as 32 GB/s is already over twice what you can get from a single PCIe 5.0 drive, even under the very best circumstances. Larger I/O sizes and higher queue depths can help more, but 128KB remains an excellent compromise when testing. There’s a reason many RAID setups default to a 128KB stripe size, at least in software. Although this is an area where HDDs can actually make sense, especially if you need high capacity, SSDs still provide far better responsiveness and higher bandwidth.

Normally, we would cover PCMark 10 performance here, but software bottlenecks can keep you from reaping the benefits of many fast drives working together. The RocketAIC 7608AW’s latency isn’t bad, but you shouldn't be eyeing this solution if latency’s your priority. You should only invest in the RocketAIC for heavier tasks where you will be moving lots of data. For everyday productivity, it’s simply unnecessary.

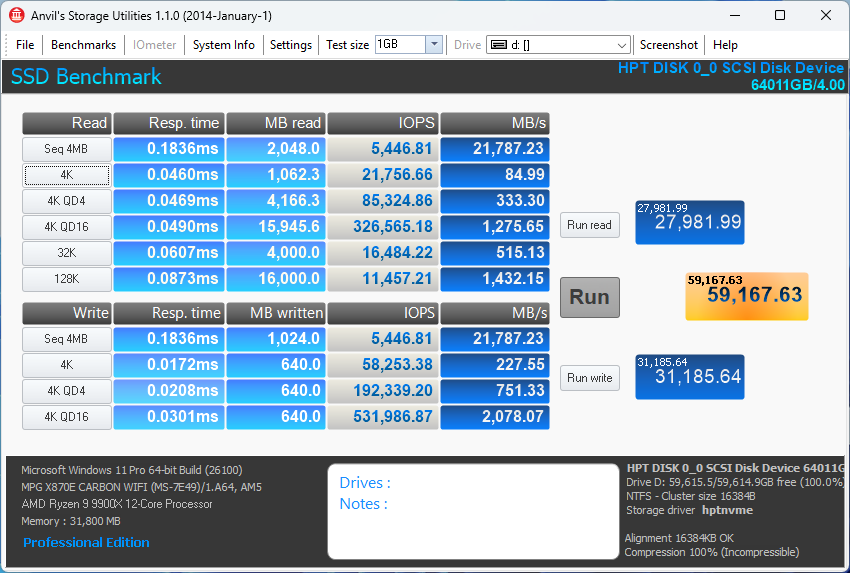

Transfer Rates — Anvil

Anvil’s Storage Utility is an older but still useful benchmark that gives quick results like CrystalDiskMark but with different testing parameters. It replaces DiskBench in this review, as it can provide an impression of read, write, and copy performance across multiple I/O sizes and relatively modest queue depths.

Anvil returns a score of almost 28K for reads and over 31K for writes for a combined total of 59.17K. Unsurprisingly, the RocketAIC 7608AW does quite well with the larger 4MB block size for sequential reads and writes, approaching 22 GB/s. The AIC is absolutely capable of hitting higher throughput, but this is dependent on a higher queue depth, and copying to the array itself also reduces peak performance. 4KB performance is unexceptional, and this is because such small I/O does not utilize the entire array until much higher queue depths.

This was reflected in our unofficial DiskBench testing, too, as we found that real-world benefit for everyday transfers just isn’t there. You need heavier workloads. This doesn’t completely rule out the RocketAIC 7608AW from lighter workloads, though. We happen to be using eight very fast drives in a striped array or RAID 0, but there is nothing preventing you from running multiple arrays where you have more flexibility for transfers. Productivity could be improved with such a configuration.

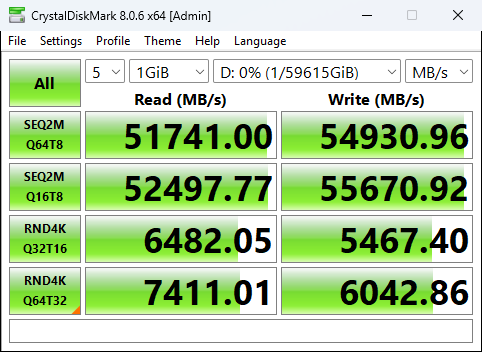

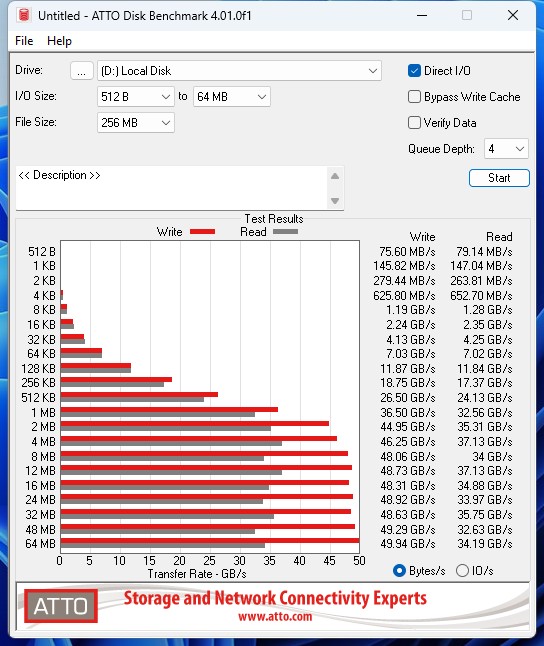

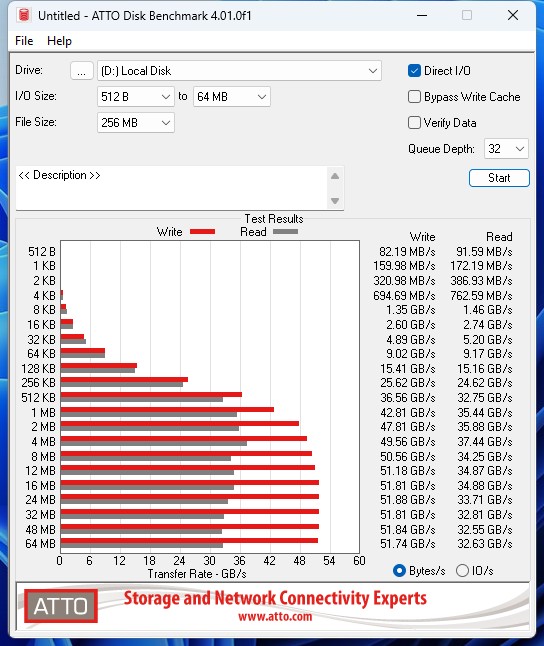

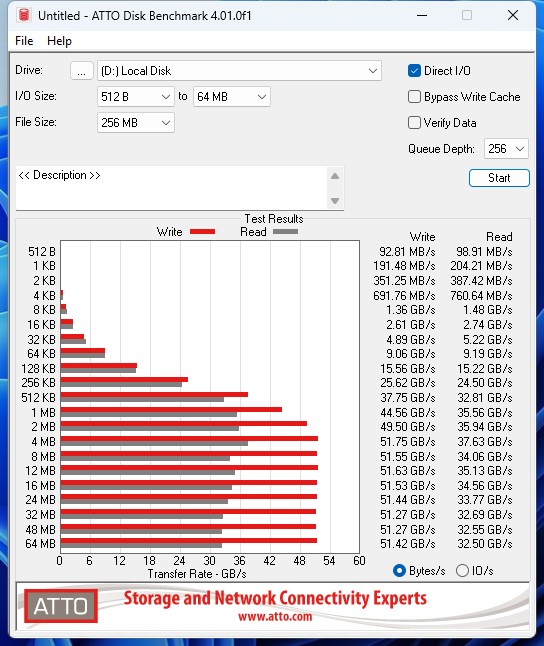

Synthetic Testing — ATTO / CrystalDiskMark

ATTO and CrystalDiskMark (CDM) are free and easy-to-use storage benchmarking tools that SSD vendors commonly use to assign performance specifications to their products. Both of these tools give us insight into how each device handles different file sizes and at different queue depths for both sequential and random workloads.

ATTO is a synthetic benchmark, and even at a queue depth of 4, it reveals the true power of the RocketAIC 7608AW. The RocketAIC pulls far away from any single drive once larger block sizes are used. Yes, this is an area where HDDs could make sense, although even then, higher queue depth is preferable. Lower block sizes don’t benefit from the RocketAIC’s extra throughput potential, which makes sense as stripe sizes are usually larger. With a typical stripe size of 64KiB or 128KiB, eight drives would begin powering through 512KiB and 1MiB blocks. The use of 8TB drives rather than 2TB can also affect results here, as the largest-capacity drives often perform a little worse than smaller ones due to I/O management and addressing overhead.
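To make the stripe arithmetic concrete, here is a minimal sketch of how many drives a single request touches in a RAID 0. It assumes a plain round-robin stripe layout with no parity, and the helper names are hypothetical rather than anything exposed by HighPoint's software.

```python
KIB = 1024


def full_stripe_bytes(stripe_size: int, drives: int) -> int:
    """Bytes needed to place exactly one chunk on every drive."""
    return stripe_size * drives


def drives_touched(io_size: int, stripe_size: int, drives: int, offset: int = 0) -> int:
    """Number of member drives a single request spans.

    Assumption: simple round-robin RAID 0, where chunk k lives on
    drive k % drives.
    """
    first_chunk = offset // stripe_size
    last_chunk = (offset + io_size - 1) // stripe_size
    return min(drives, last_chunk - first_chunk + 1)


if __name__ == "__main__":
    for stripe in (64 * KIB, 128 * KIB):
        width = full_stripe_bytes(stripe, drives=8)
        print(f"{stripe // KIB}KiB stripe: full stripe is {width // KIB}KiB")
    for io in (4 * KIB, 64 * KIB, 512 * KIB):
        n = drives_touched(io, stripe_size=64 * KIB, drives=8)
        print(f"{io // KIB}KiB request at offset 0 touches {n} drive(s)")
```

Under these assumptions, a 4KiB request never leaves a single drive, while a 512KiB request on a 64KiB stripe is the smallest one that keeps all eight drives busy at once.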

With a queue depth of 512 (Q32T16) or more, 4K random read performance is quite good in CDM. Going up to QD2048 (Q64T32) is faster yet, but there are clear diminishing returns. These numbers aren’t particularly impressive given that we’re using eight drives, but again, this is a limitation of small I/O and a single RAID 0. We recommend pairing the RocketAIC 7608AW with a fast system to get the most out of its smaller I/O. We tested QD1 latency on our Intel platforms and found it remains surprisingly good, too, but it’s only hitting one drive. Ideally, it would perform as well as a single 8TB 9100 Pro, and it more or less did for us.
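For readers unfamiliar with CDM's shorthand, the total queue depth we quote is simply queues multiplied by threads. A small sketch of that conversion follows. The parser is our own illustration and not part of CrystalDiskMark.

```python
import re


def total_queue_depth(profile: str) -> int:
    """Convert a CDM-style profile such as 'Q32T16' to total outstanding I/Os."""
    match = re.fullmatch(r"Q(\d+)T(\d+)", profile)
    if match is None:
        raise ValueError(f"unrecognized profile: {profile!r}")
    queues, threads = map(int, match.groups())
    return queues * threads


if __name__ == "__main__":
    for profile in ("Q32T16", "Q64T32", "Q16T8", "Q64T8"):
        print(f"{profile} -> QD{total_queue_depth(profile)}")
```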

There are considerations here — latency from the PCIe switch and other factors that might influence the results to some degree — but largely you’re not going to see any improvement with more drives. You will see more improvement with high queue depths, but this is counterproductive with such small I/O sizes unless the stripe size is reduced. Using a 4Kn rather than 512e format on the drives – only some consumer drives support this — could also help here. In real enterprise environments where capacity is king, the granularity is actually getting larger in many cases, so 16KiB for the stripe is probably a good compromise rather than going down to 4KiB.

Going back a bit, the sequential results in CDM, which use a more appropriate 2MiB I/O size, are far superior on the RocketAIC 7608AW compared to single drives or even a PCIe 4.0 drive team on the Rocket 1608A. We’ve found that this is true regardless of queue depth, but using a queue depth of 8 or higher allows the AIC to stretch its wings. We can see in ATTO that at QD32 we’re already hitting diminishing returns, too. There’s no real difference between QD128 (Q16T8) and QD512 (Q64T8) in our CDM testing, so hitting the proposed 56 GB/s limit isn’t too difficult. If you need this level of performance, the AIC is a more compact solution compared to throwing a ton of separate drives at the problem, at least.

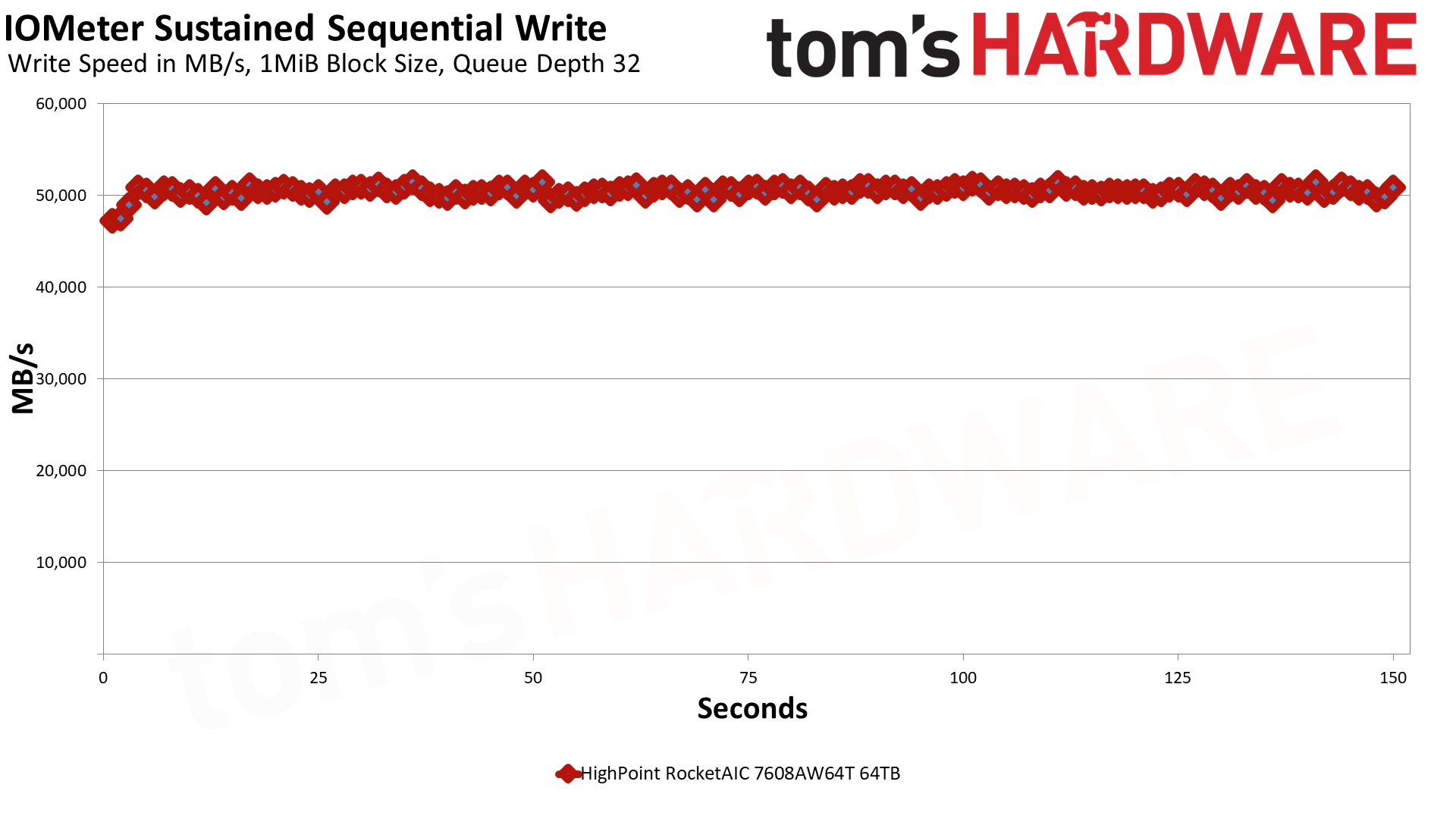

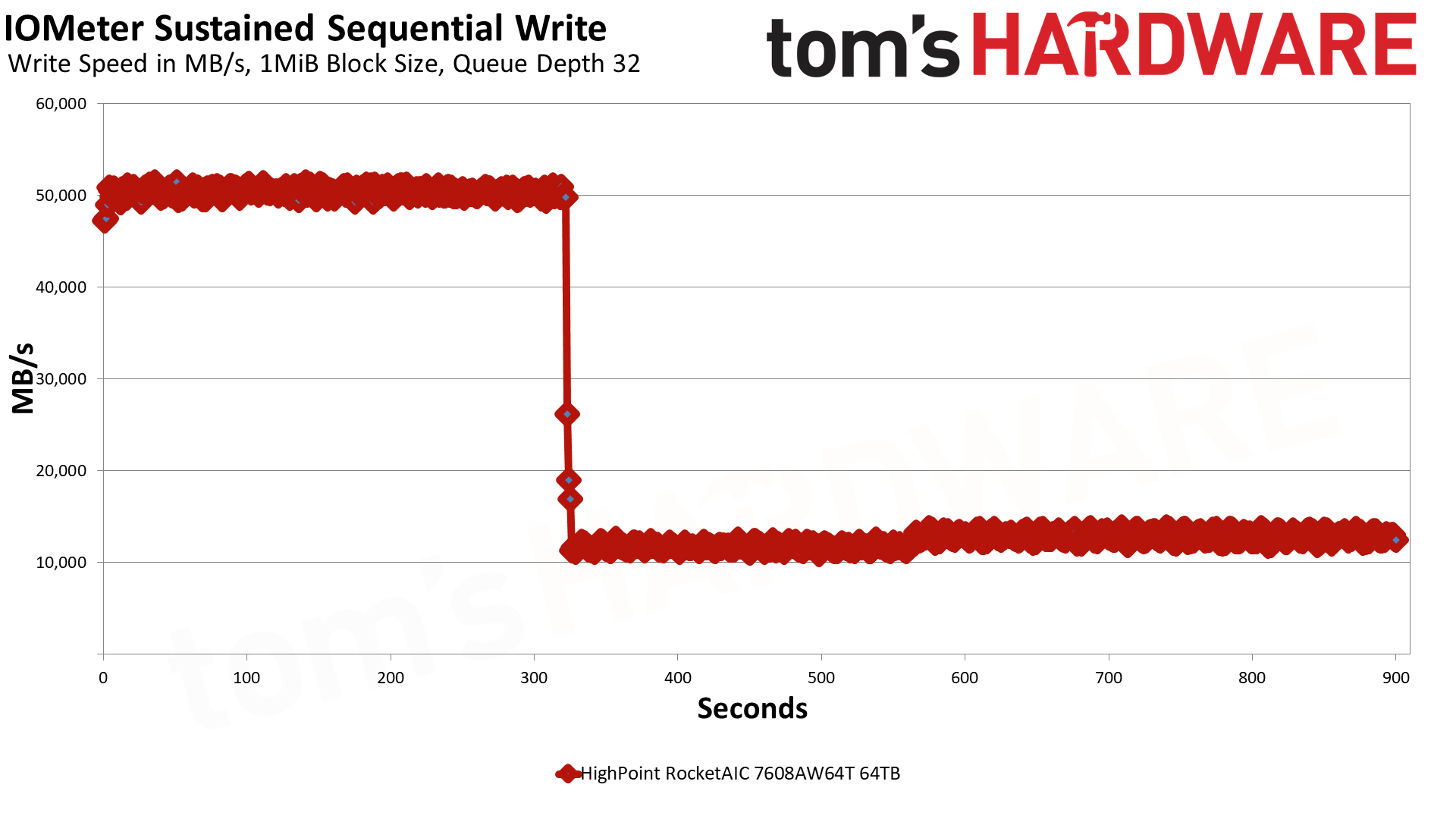

Sustained Write Performance and Cache Recovery

Official write specifications are only part of the performance picture. Most SSDs implement a write cache, which is a fast area of pseudo-SLC (single-bit) programmed flash that absorbs incoming data. Sustained write speeds can suffer tremendously once the workload spills outside of the cache and into the "native" TLC (three-bit) or QLC (four-bit) flash. Performance can suffer even more if the drive is forced to fold, which is the process of migrating data out of the cache in order to free up space for further incoming data.

We use Iometer to hammer the SSD with sequential writes for 15 minutes to measure both the size of the write cache and performance after the cache is saturated. We also monitor cache recovery via multiple idle rounds. This process shows the drive's performance in various states, as well as its steady-state write performance.

With eight drives in a RAID 0 and a queue depth of 32, we can reach about 50.4 GB/s sustained. This is in the fastest single-bit cache mode. The array can write at this speed for just over 322 seconds – that is, over 5 minutes – with a cache larger than 16TB. With the drives combined, the total cache should be eight times that of the single drive, so that would be expected from the ~2TB cache of a single 8TB 9100 Pro. More drives also mean a faster TLC or steady state result with the RocketAIC 7608AW, dipping first to 11.7 GB/s before maintaining up to 12.9 GB/s, compared to a single drive’s 1.7 GB/s. We also ran this test on our Intel platforms, with slightly higher steady-state results but reduced peak write performance. The cache is the same size regardless.
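The cache estimate follows directly from the write rate and the time spent at that rate. Here is a rough sketch of the arithmetic, assuming decimal units (1TB = 1,000GB) and a constant write speed for the whole cached period. The function names are ours.

```python
def cache_written_tb(write_gb_per_s: float, seconds: float) -> float:
    """Data absorbed at cache speed, in TB (decimal units assumed)."""
    return write_gb_per_s * seconds / 1000


def per_drive_tb(total_tb: float, drives: int) -> float:
    """Even split across the array, as expected for RAID 0."""
    return total_tb / drives


if __name__ == "__main__":
    total = cache_written_tb(50.4, 322)
    print(f"Array cache: about {total:.1f}TB")
    print(f"Per drive:   about {per_drive_tb(total, 8):.2f}TB")
    # Steady-state scaling against a single drive's 1.7 GB/s.
    print(f"Steady-state scaling: about {12.9 / 1.7:.1f}x")
```

The per-drive figure lands right around the ~2TB cache of a single 8TB 9100 Pro, and the steady-state result scales at a bit under the ideal 8x.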

This sustained write performance is, as expected, incredible, especially for sustained writes that would exceed the cache. It’s more common for enterprise drives to forgo a pSLC cache when long-tail or steady state performance is more important. Consistency is the name of the game, and Samsung’s TurboWrite — even with the relatively larger caches on the 8TB 9100 Pros as compared to the 2TB — is able to deliver relatively smooth TLC writes. Enterprise drives of this capacity could potentially do better, but if you’re building this off-the-shelf for a home lab or small business, it will get the job done. We can only recommend careful consumer drive selection, as many drives with large caches can have inconsistent steady state performance.

Power Consumption and Temperature

Our chart for power consumption is missing because our Quarch measurement system is not compatible with this card. We still recorded power consumption, though, with a peak below 72W and lesser workloads pulling just below 40W. This isn’t far off from our results with the Rocket 1608A and, given the controller’s rated power, is pretty reasonable. Our workload only pulls about half the maximum wattage of these drives, which makes sense given the relatively modest returns. If we ballparked power efficiency, it would probably be disappointing, but one would expect that from a powerhouse setup like this. We would point out that using less efficient PCIe 5.0 drives, such as those based on Phison’s E26 controller, would be inadvisable.

As far as temperatures go, we’re looking at three distinct metrics: the RAID controller, the hottest drives, and the average drive temperature. The controller reached a maximum between 72°C and 73°C, which is well within Arm's limits. The drive is rated for an ambient temperature range below this, but in terms of throttling, there is plenty of headroom. The hottest drives reached a temperature of about 66°C in all our testing, with a 60°C peak on our reduced AMD benchmarks, which is an excellent result.

Taking the coolest drives into consideration, which reached as low as 50-55°C with an overall average around 58°C, the mean drive temperature was quite good. We would expect this to be the case given the RocketAIC 7608AW’s significant heatsink and active cooling. These drives aren’t even close to throttling.

Active cooling can be noisy, so this might be an area where you would want to exert some direct control. However, this is workload-dependent, so adjust accordingly. You can find a good balance between temperature and noise level. It’s often the case that the thermal bottleneck is at the interface – thermal padding, in this case – and HighPoint went with dual layers for that reason. If you can reduce or even avoid active cooling with the drives being at 75°C or less peak, you’re probably in good shape.

Test Bench and Testing Notes

| Component | Details |
| --- | --- |
| CPU | |
| Motherboard | |
| Memory | |
| Graphics | AMD Radeon Graphics |
| Storage | |
| CPU Cooling | |
| Case | |
| Power Supply | |
| Operating System | Windows 11 Pro 24H2 |

We use a Zen 5 platform with most background applications, such as indexing, Windows updates, and anti-virus, disabled in the OS to reduce run-to-run variability. Each SSD is prefilled to 50% capacity and tested as a secondary device. Unless noted, we use active cooling for all SSDs.

HighPoint RocketAIC 7608AW Bottom Line

The HighPoint RocketAIC 7608AW is an excellent piece of hardware, and when paired with eight 8TB Samsung 9100 Pros, it is simply a sight to behold. 64TB of superfast storage in a single PCIe card is pretty neat. Expensive, but neat.

There are very real applications for this level of performance — the total price tag isn’t nearly as bad as it seems — but it’s probably out of reach for the average user. The AIC itself is expensive enough that you’d only want to use it with larger, faster drives, which would put it in the $4K to $6K range even with 4TB and 8TB Gen 4 WD Black SN850Xs. Heck, you could throw in 4TB WD Black SN7100s from SanDisk and get it under $4K, though you probably want SSDs with DRAM for this type of device.

On the other hand, you don’t need to go with more expensive PCIe 5.0 drives for the most part. The AIC has 32 lanes downstream for 16 lanes upstream, so if you have a full host of eight Gen 4 drives — or, actually, four PCIe 5.0 drives — you have enough throughput to make things work. Still, the 1608A was $500 less when we reviewed it last year with very similar hardware, so we think the price here is a bit high. SSDs also seem to be in an upward pricing trend, given the exponential growth in AI demand, so things will only get more expensive. This storage solution is simply not for the faint of heart and should only be considered by enthusiasts, business owners, or the wealthy and inquisitive. That said, now is a good time to invest, but until the 8TB Black SN8100 is out, your ceiling is limited to the 9100 Pro.
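The lane math behind that claim can be checked with theoretical link rates. The sketch below assumes a PCIe 5.0 x16 upstream link and x4 per drive, and it uses raw per-lane signaling rates with 128b/130b encoding, so it ignores protocol overhead and will overstate what you actually measure.

```python
# Raw signaling rate per lane in GT/s, from the PCIe specifications.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING = 128 / 130  # 128b/130b line encoding used by Gen 3 and later


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link in GB/s."""
    return GT_PER_S[gen] * ENCODING / 8 * lanes


if __name__ == "__main__":
    upstream = link_gb_per_s(5, 16)  # assumed Gen 5 x16 host link
    eight_gen4 = 8 * link_gb_per_s(4, 4)
    four_gen5 = 4 * link_gb_per_s(5, 4)
    eight_gen5 = 8 * link_gb_per_s(5, 4)
    print(f"Upstream x16 Gen 5:     {upstream:.1f} GB/s")
    print(f"Eight Gen 4 x4 drives:  {eight_gen4:.1f} GB/s")
    print(f"Four Gen 5 x4 drives:   {four_gen5:.1f} GB/s")
    print(f"Eight Gen 5 x4 drives:  {eight_gen5:.1f} GB/s (2:1 oversubscribed)")
```

On paper, eight Gen 4 drives or four Gen 5 drives match the upstream link exactly, while eight Gen 5 drives offer twice what the host slot can carry.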

The good news is that there are less expensive options for the storage-hungry. It’s very possible to get AICs with two or four SSD slots at a bargain price, although the cheapest ones require PCIe bifurcation on your motherboard. This usually limits you to adding just two drives on a single AIC. You can get 4-SSD cards with their own bifurcation, but these cost more and often have upstream bandwidth limitations. The alternative is to jump up to higher-end HighPoint solutions, which, frankly, is quite doable if you only need four PCIe 4.0 drives, for instance. You do need an x16 slot for maximum performance, which is essentially limited to HEDT or server boards, with consumer motherboards being x4 or x8 electrically and still requiring x8/x8 bifurcation. In other words, the total investment required to get the most out of an AIC like this is considerable, but compromises can be made if you’re mostly aiming for capacity.

We do think the RocketAIC 7608AW is an excellent solution for a very specific problem, and the 8TB 9100 Pros are the perfect match if you want truly incredible sustained write performance. The real competition here would be enterprise-grade SSDs, but they will have more cumbersome form factors and their own costs. An AIC can be a convenient solution, and consumer SSDs can work for certain use cases. We also have nothing bad to say about HighPoint’s software, although there are many other options available for someone with experience who is so inclined. That’s the easiest way to say that this AIC is flexible but not the best solution in all cases, given what’s available. It has enough features to keep it competitive, and it provides a relatively easy entry point from the perspective of management, which is enough to keep it attractive for anybody who was already seriously considering multiple 8TB 9100 Pros for their next project.


Shane Downing is a Freelance Reviewer for Tom’s Hardware US, covering consumer storage hardware.


thestryker

This is a really interesting device and I'd love to see a cheaper one, perhaps with a narrower interface and fewer slots, which could be used for home/small business type storage expansion.

Every time I see one of these it just makes me sad what happened due to the Broadcom acquisition of PLX. To my knowledge there are only two companies making PCIe switches above PCIe 3.0, Broadcom and Microchip, both of which charge very high premiums. This card would always be expensive due to the features, but in a rational world where there's actual competition it'd be closer to $1000 than $2000.

ASMedia is the only other company I can think of which ships volume PCIe switches but theirs cap out at PCIe 3.0. I used to think it was due to them just being a smaller player, but doing some parts research earlier this year led me to finding out they're an Asus company. While I don't expect them to be competing at the leading edge or enterprise it's surprising to me that they don't make PCIe 4.0 switches. I would think if nothing else this should make for a great competitive advantage for Asus. They also have advanced USB controllers so it's not like they don't have any engineering work with PCIe 4.0 going on.

Soul_keeper

I remember when their PATA cards would sell for something low like $100.

Pricing these days is insane. They used to target consumers, the boxes were on the shelves at the local pc stores. Now everyone just assumes we have big $ like the crypto/ai big guys. I'm running 3x nvme software raid in linux because of the price barrier for one of these cards.

With a fast cpu, software raid can match/beat these addon cards.

Cervisia

thestryker said: ASMedia … cap out at PCIe 3.0.

ASMedia makes the AM5 chipset ("Promontory 21"), which contains a PCIe switch with four PCIe 4.0 upstream lanes and twelve downstream lanes (eight PCIe 4.0, four PCIe 3.0).

In theory, it would be possible to use this chip as a plain PCIe switch.

palladin9479

Soul_keeper said: I remember when their PATA cards would sell for something low like $100. Pricing these days is insane. They used to target consumers, the boxes were on the shelves at the local pc stores. Now everyone just assumes we have big $ like the crypto/ai big guys. I'm running 3x nvme software raid in linux because of the price barrier for one of these cards. With a fast cpu, software raid can match/beat these addon cards.

This isn't for consumers, this is professional workstation or server stuff. Where I'm at we have a team that uses specialized data modeling software to analyze smart power meter utilization data from the entire USA and generate utilization models and pricing guidelines. They frequently consume many TB worth of data for each run, and those runs take hours to process largely due to disk IO contention. This was after we moved them from a NAS to local NVME on a specialized workstation, before then runs took 18~24 hours. We have discussed purchasing a solution like this for them as a way to get their runs under an hour as they really want to do more frequent modeling.