Intel Core i7-7820X Skylake-X Review


Workstation & HPC Performance

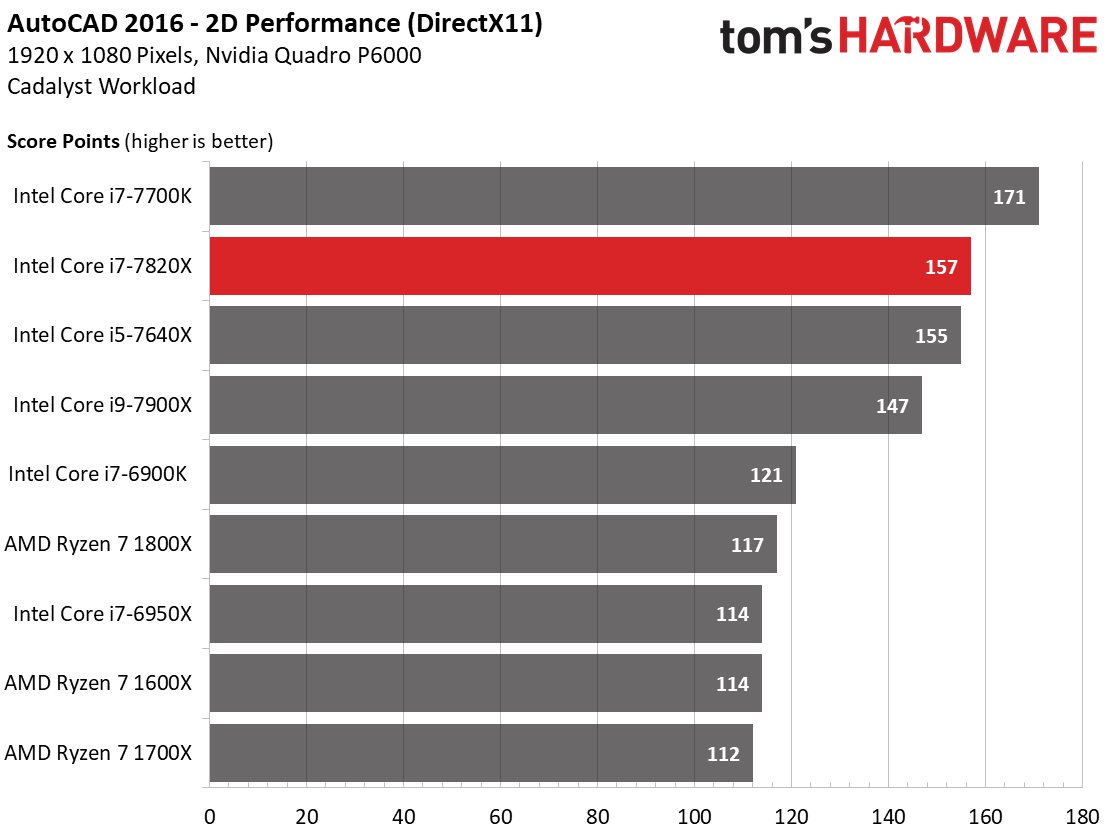

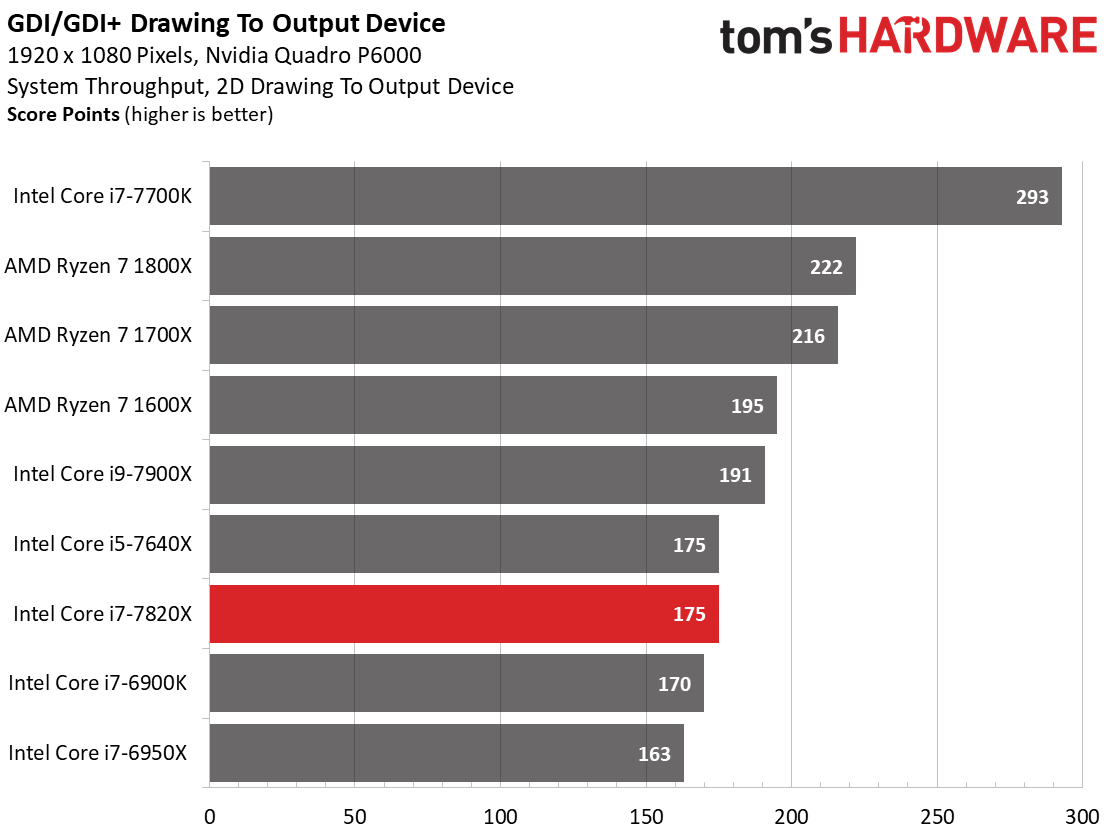

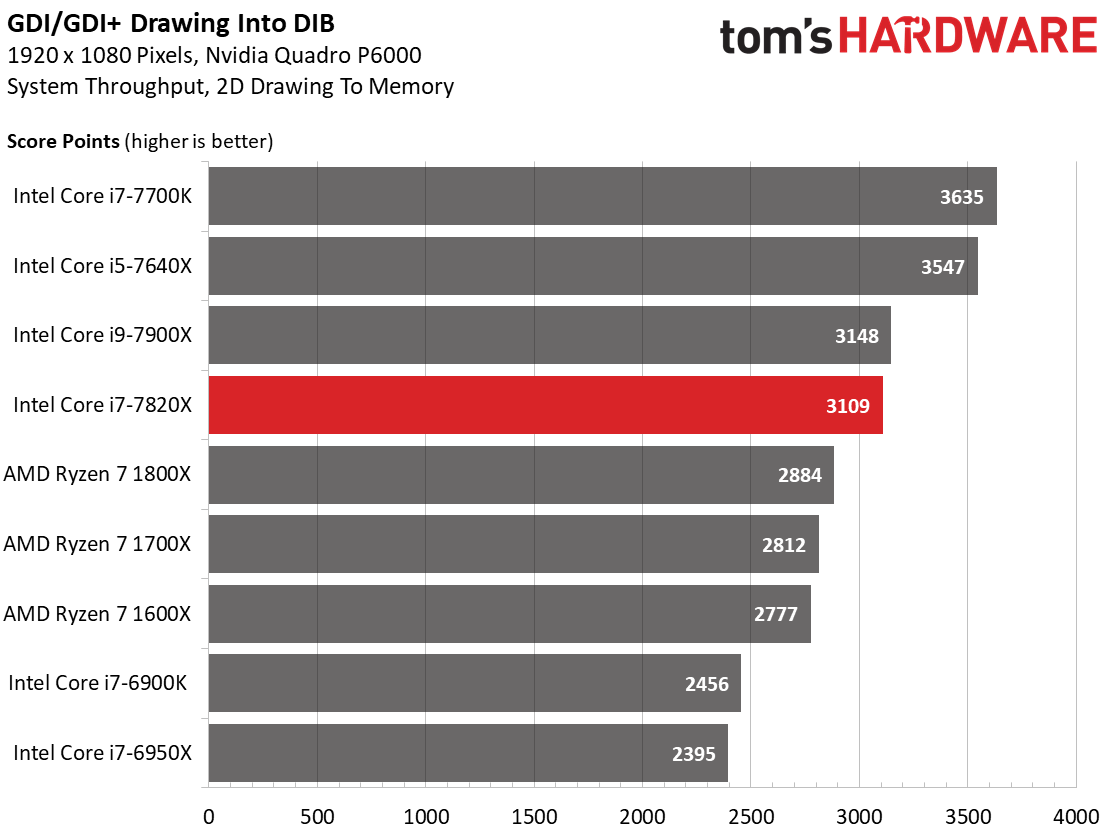

2D Benchmarks: DirectX and GDI/GDI+

If you want to know more about our HPC benchmarks, check out the AMD Ryzen 7 1800X CPU Review. We didn't just copy results from that story, though. Rather, after a number of BIOS updates and software configuration changes, we retested everything. This gives us a more up-to-date picture, reflecting improvements of up to 15% that AMD worked hard to enable.

Intel's Core i7-7820X outstrips the -7900X in our AutoCAD 2D workload due to its frequency and IPC throughput advantage. The Core i9-7900X wins in the GDI/GDI+ benchmarks, though. Both processors provide more performance than a Broadwell-E-based Core i7-6900K.

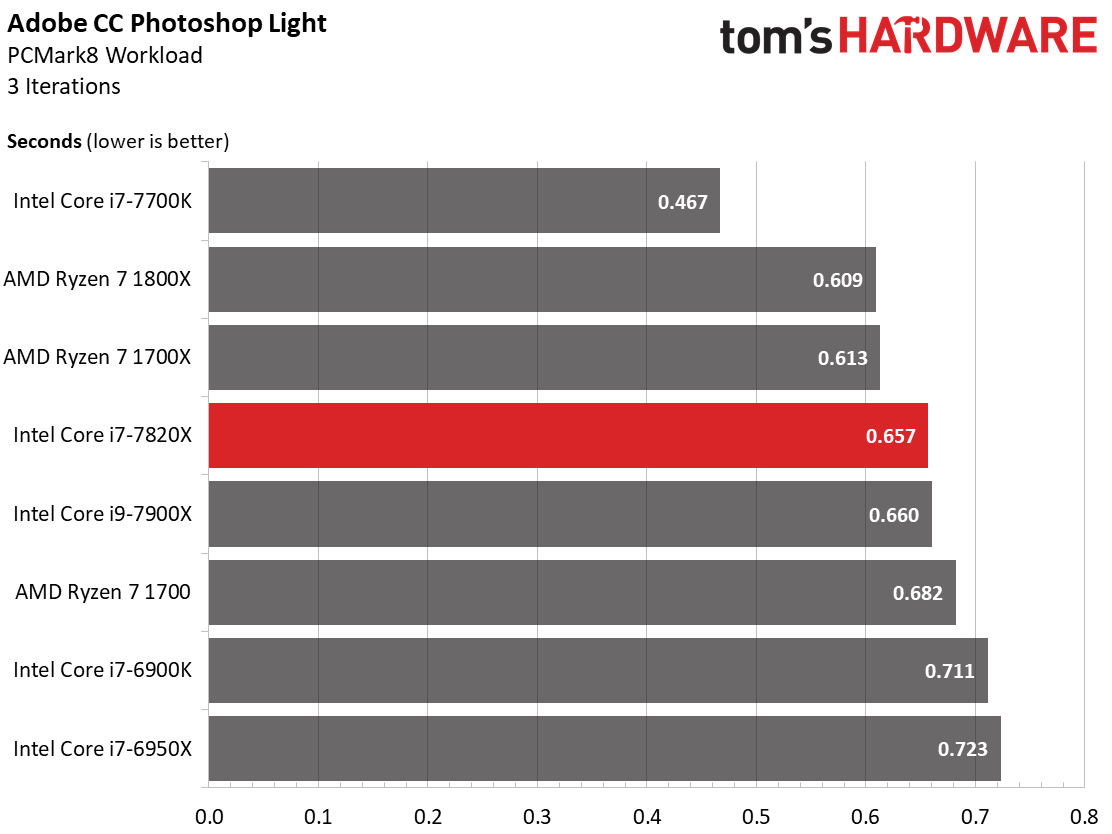

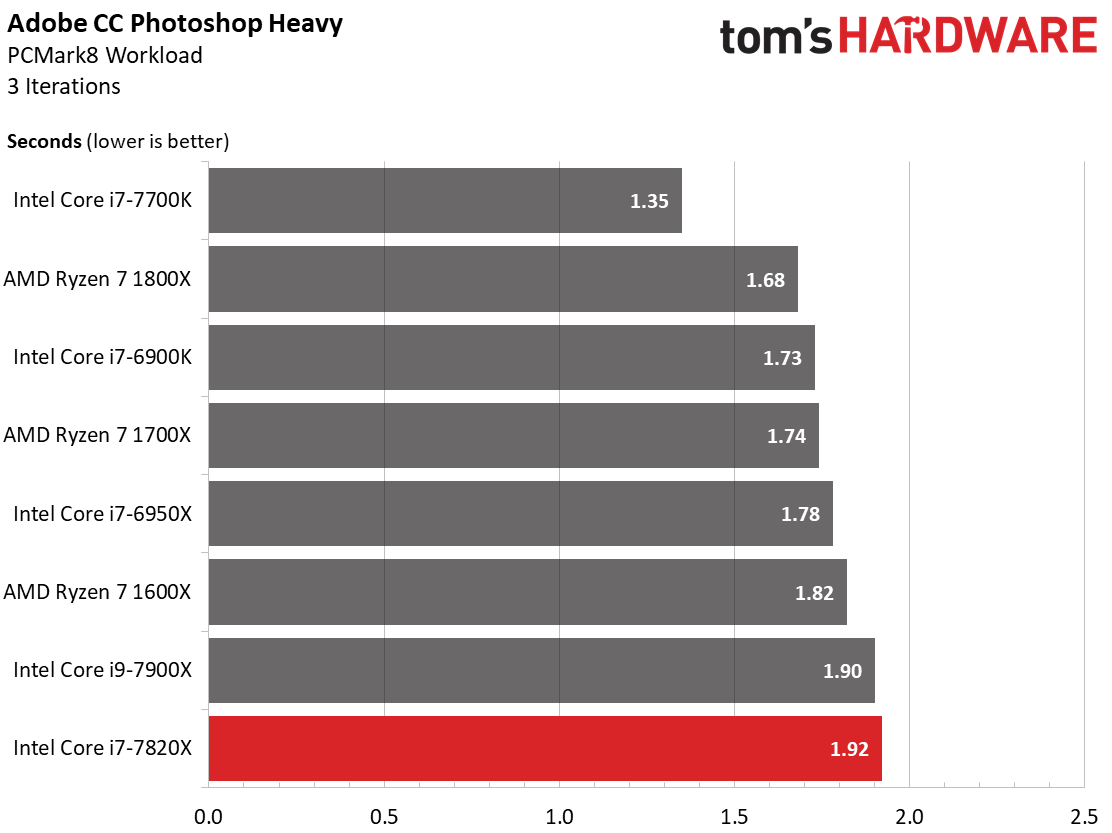

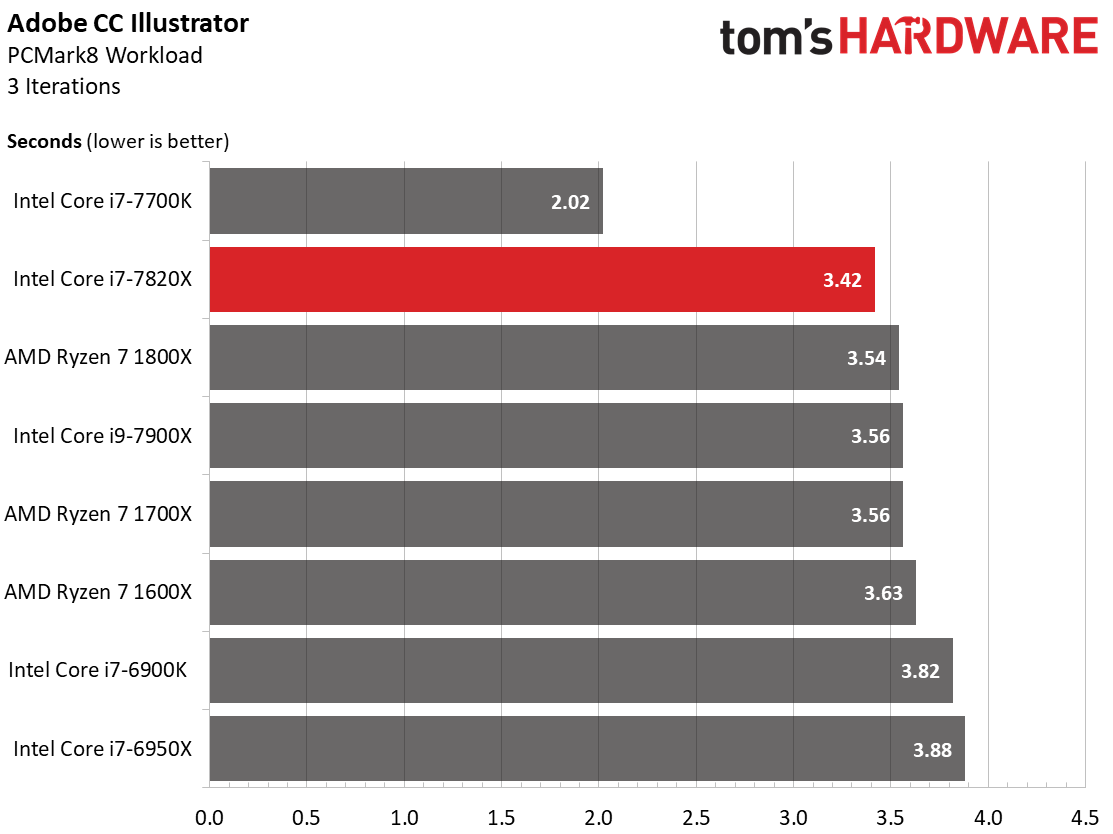

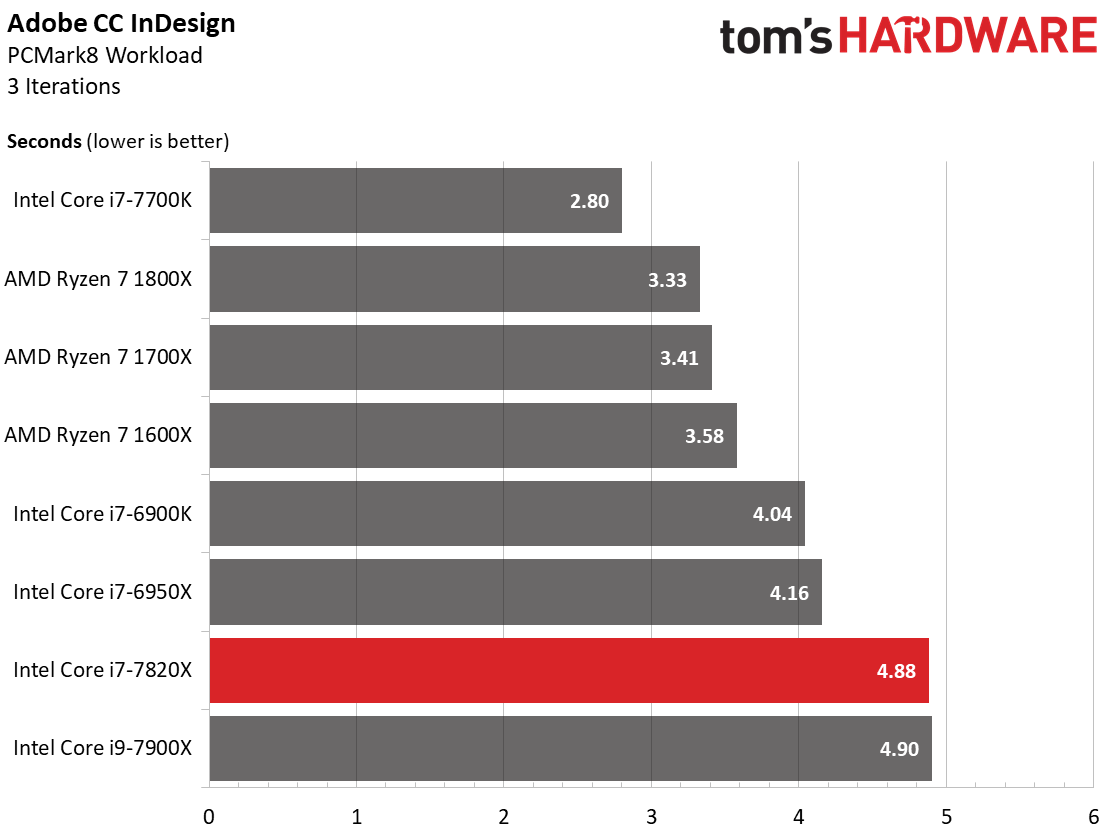

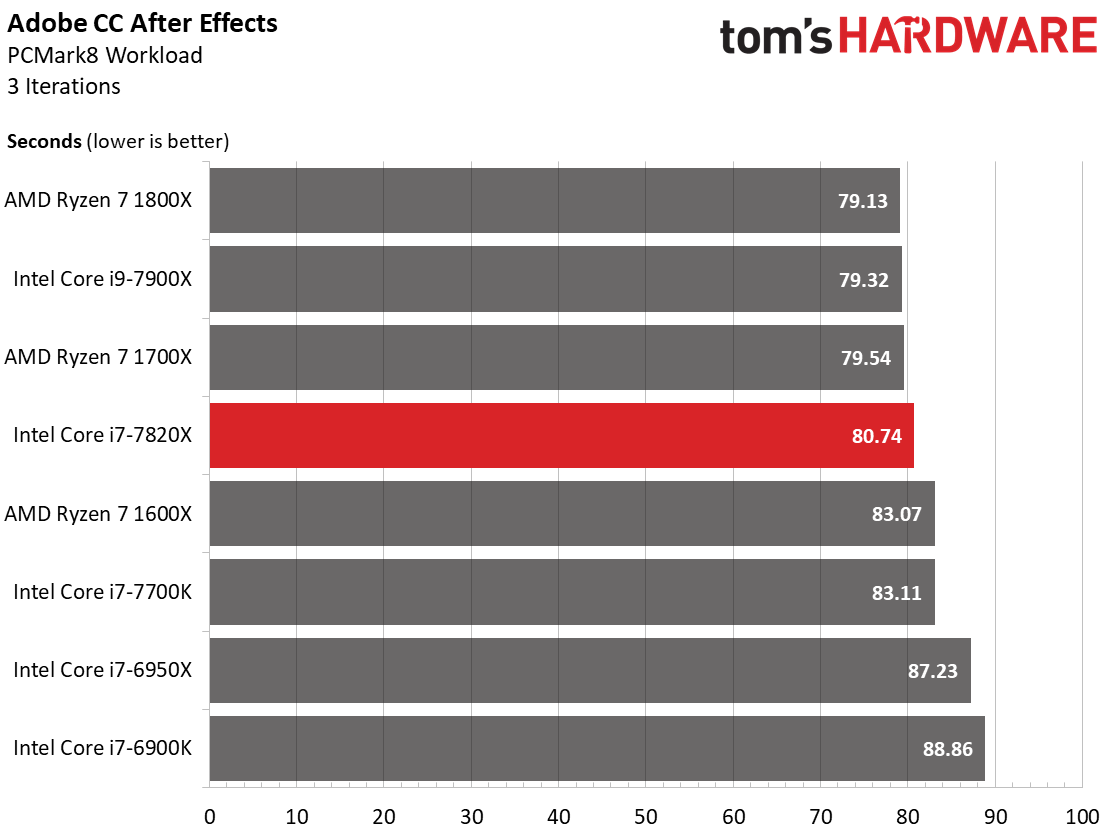

2D Benchmarks: Adobe Creative Cloud

Per-cycle performance plays a role in these lightly-threaded applications, giving the -7820X an advantage in several tests. Both Skylake-X models suffer lower performance than we'd expect in the Photoshop Heavy and InDesign workloads. Hopefully Adobe is planning an update that'll address this anomaly.

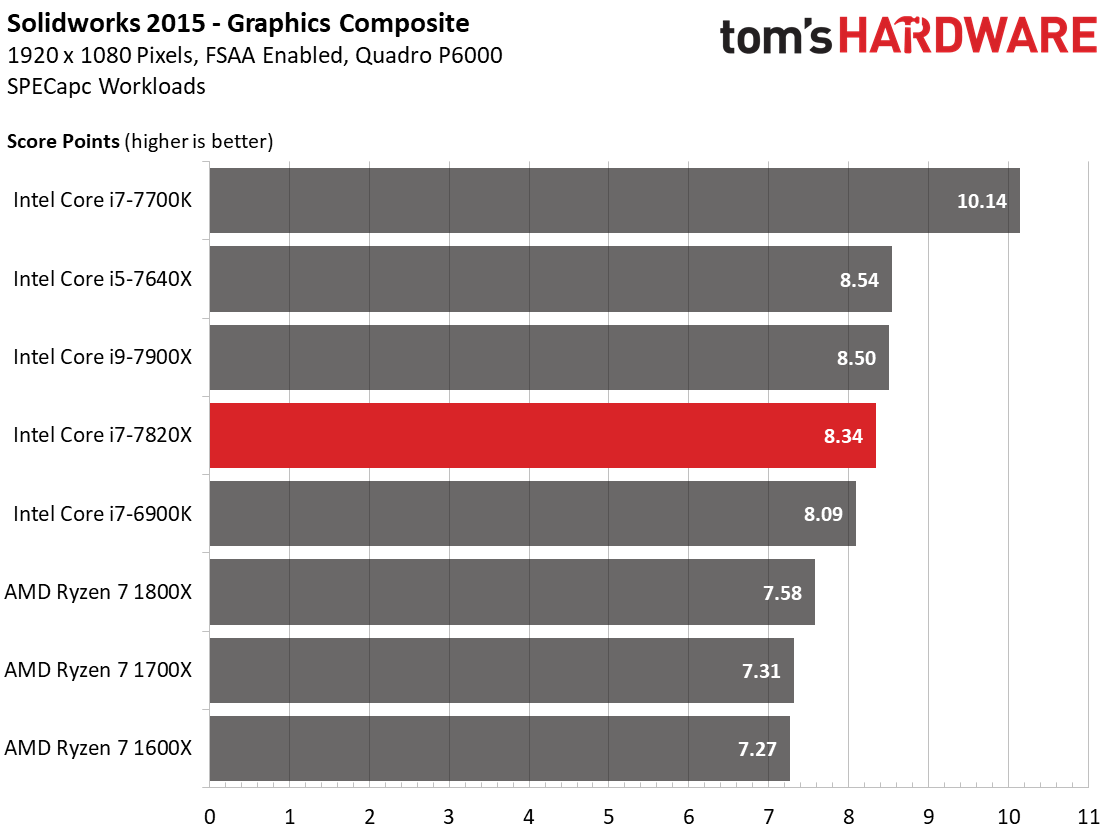

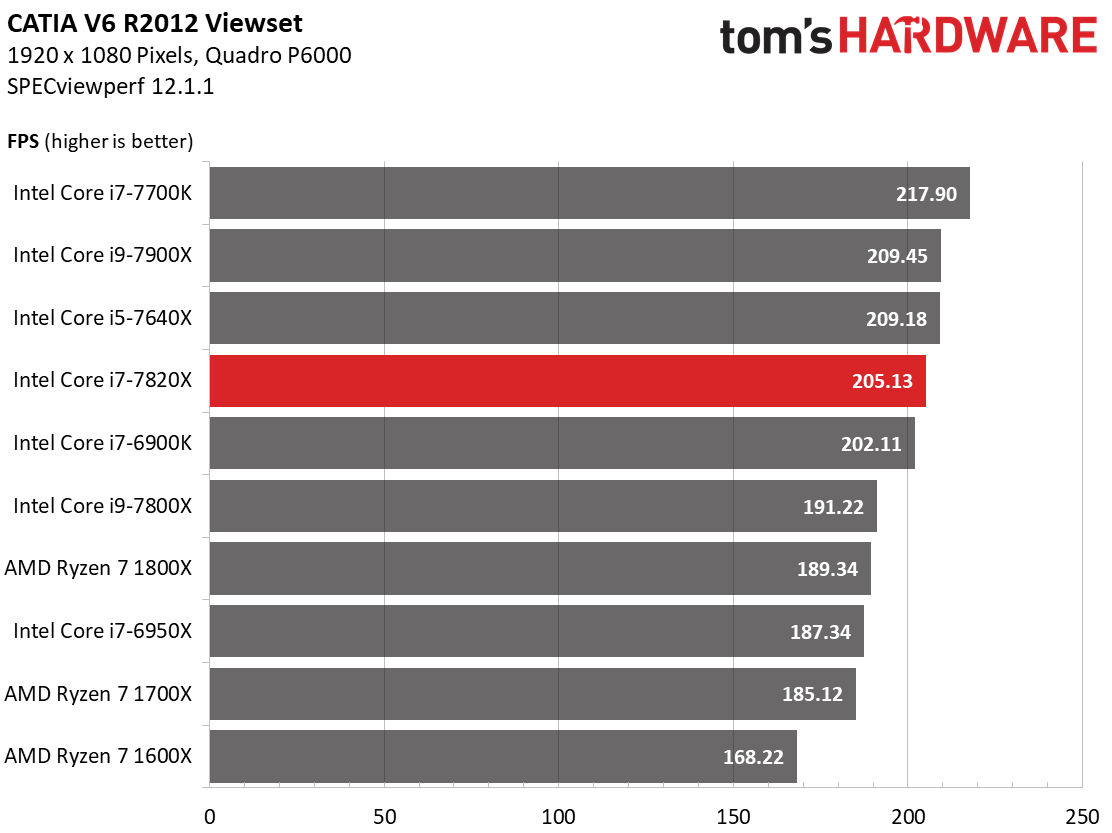

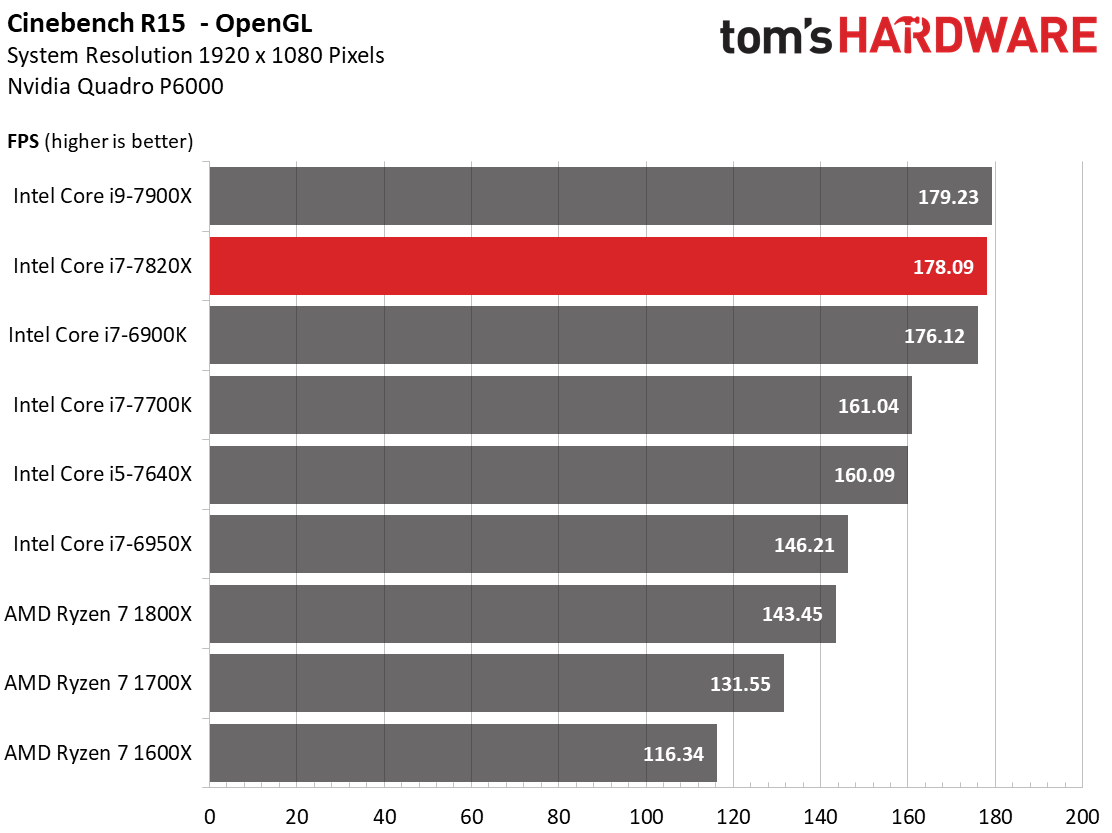

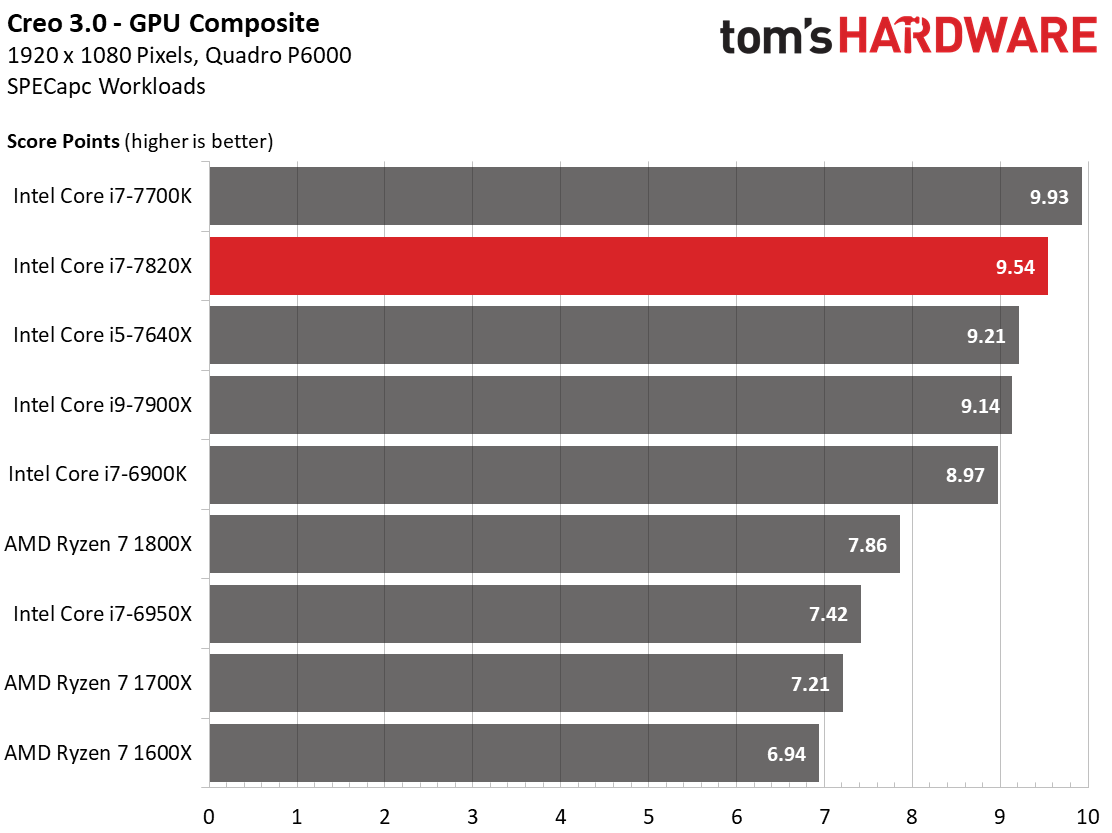

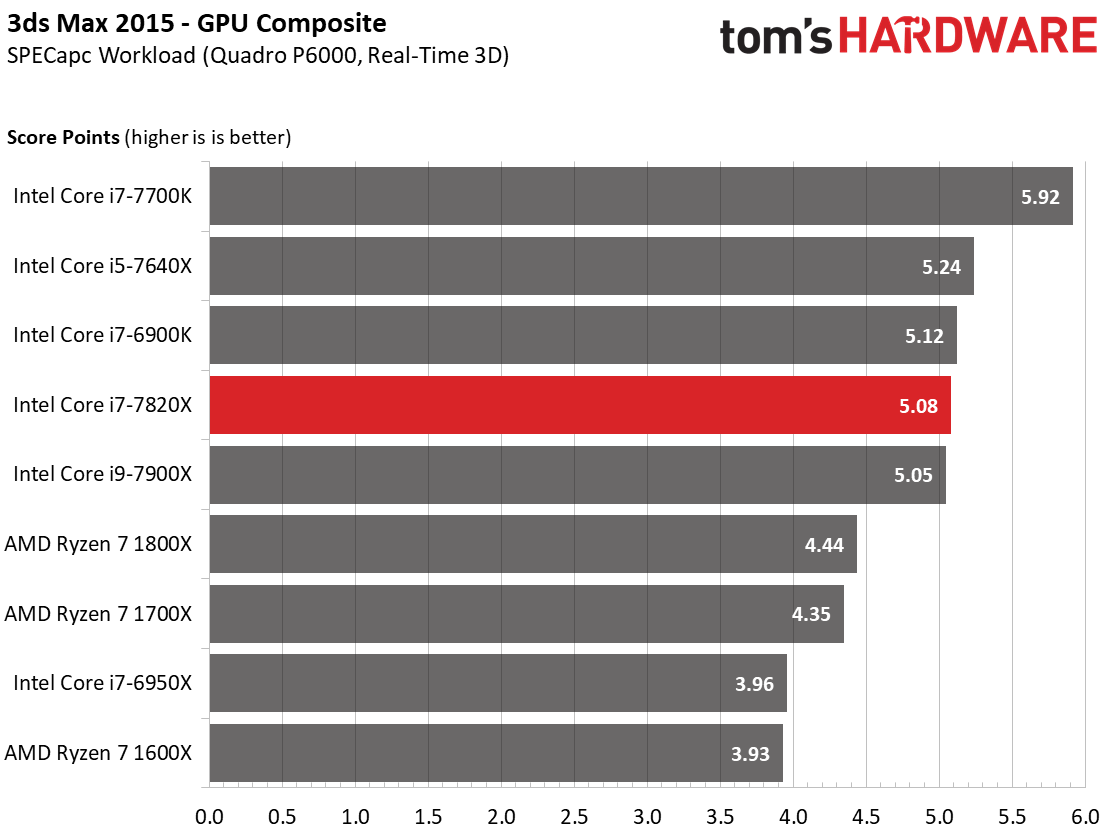

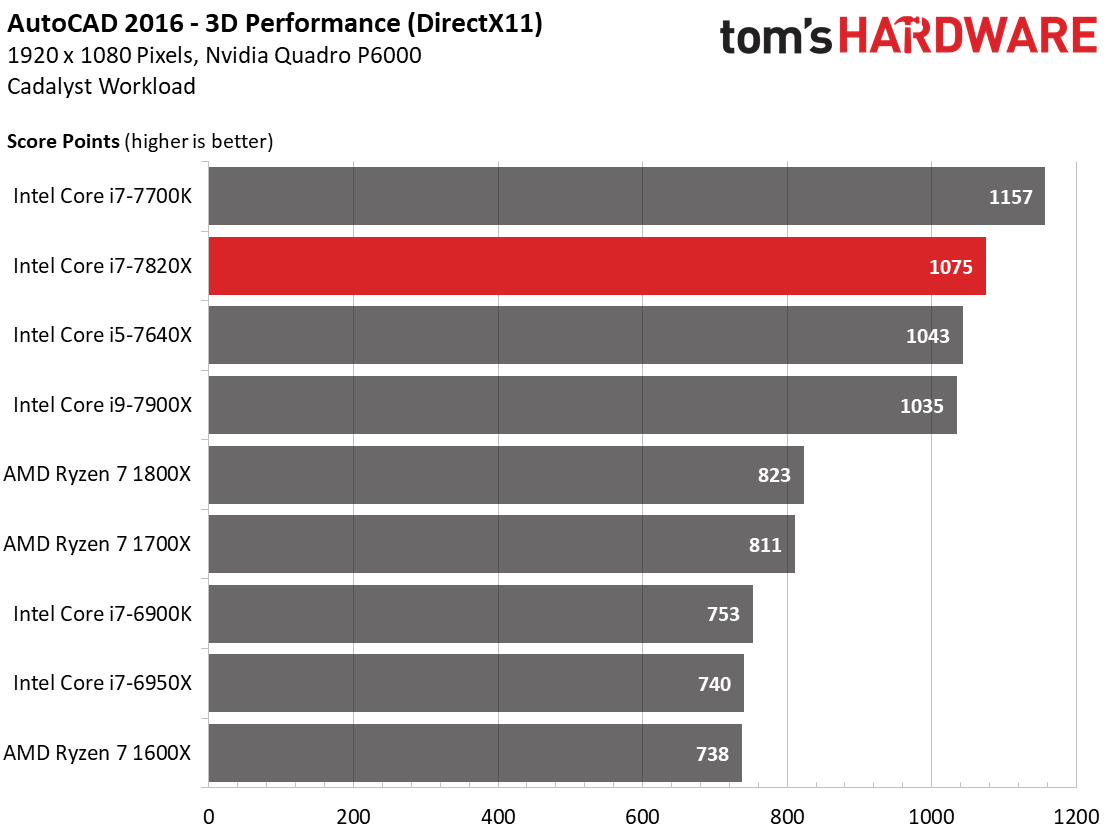

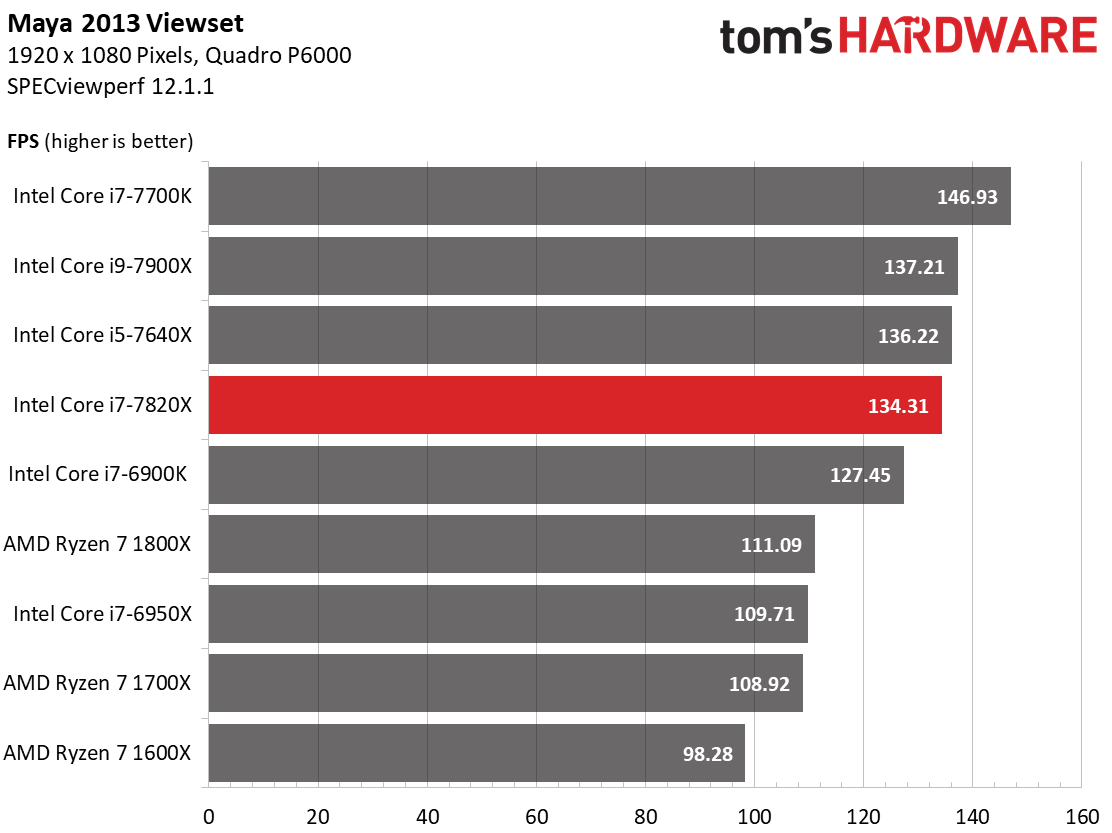

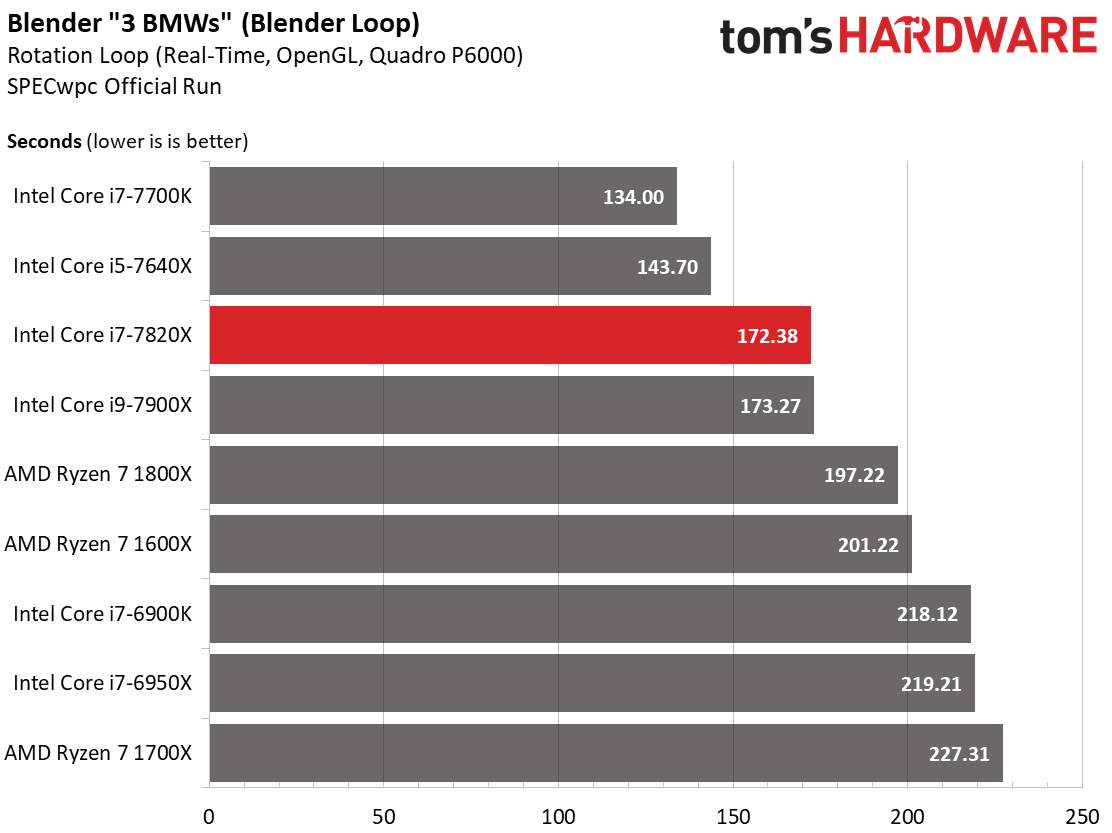

3D Benchmarks: DirectX and OpenGL

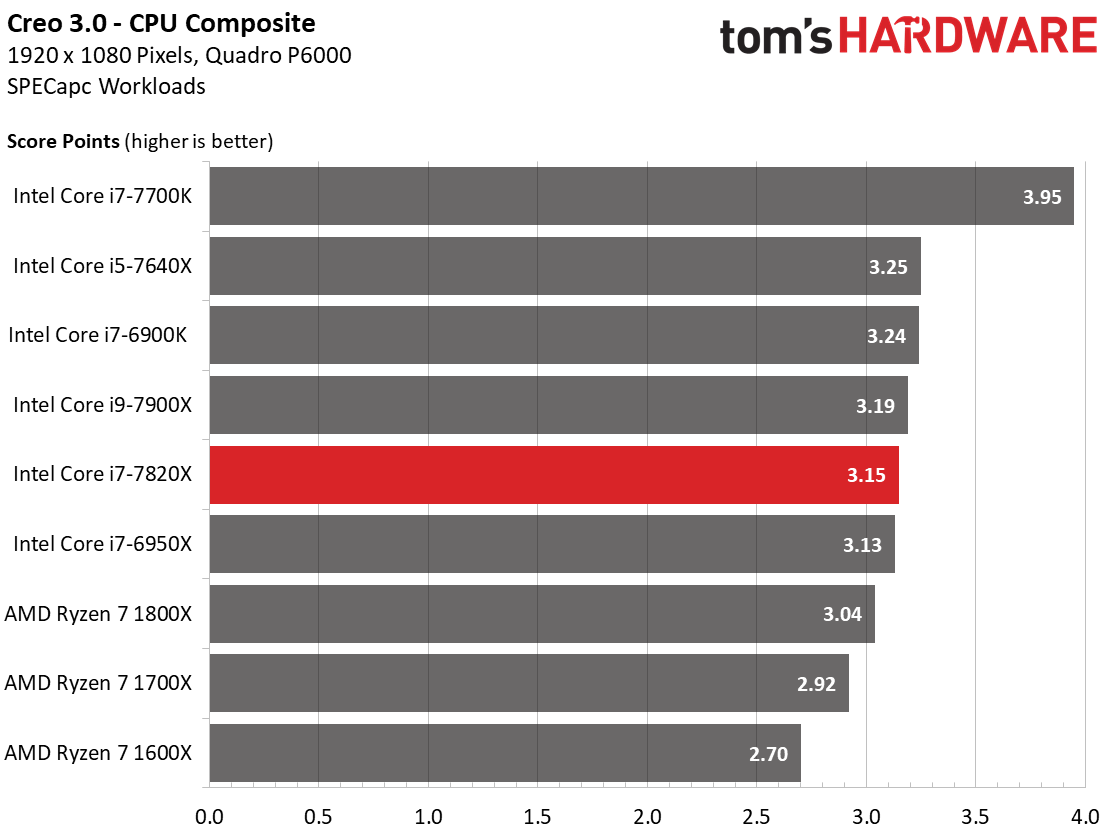

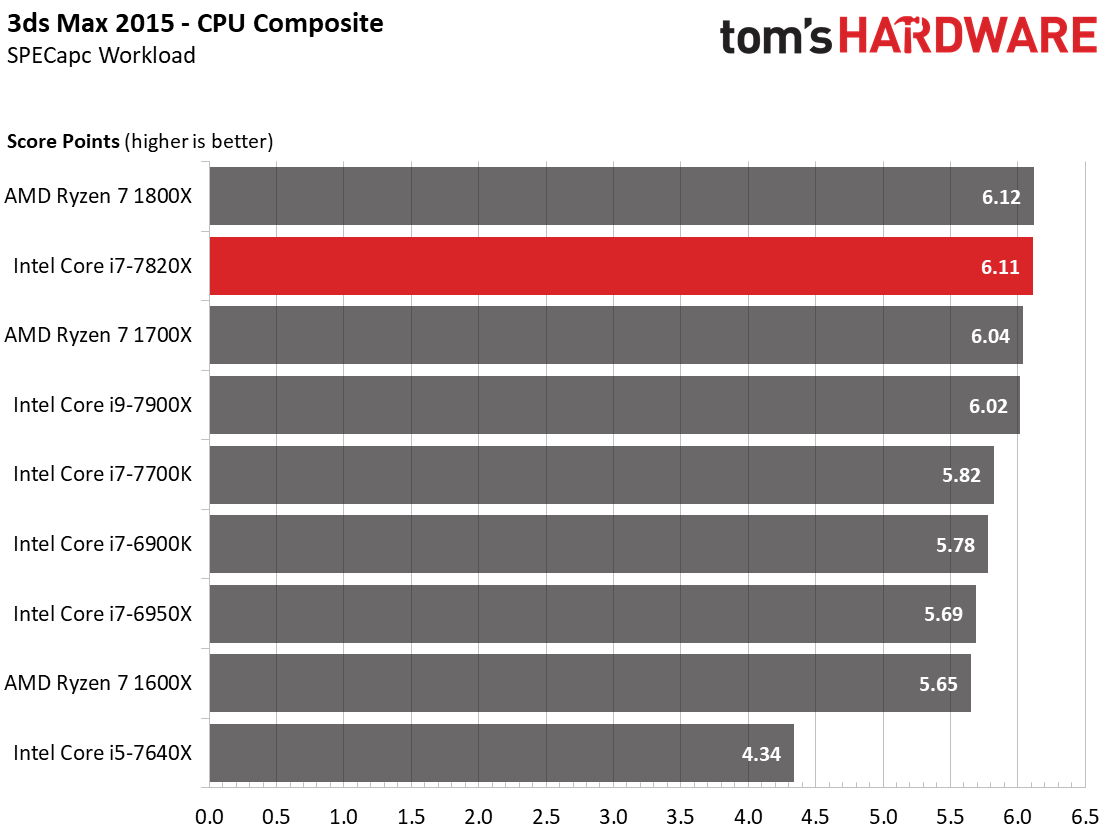

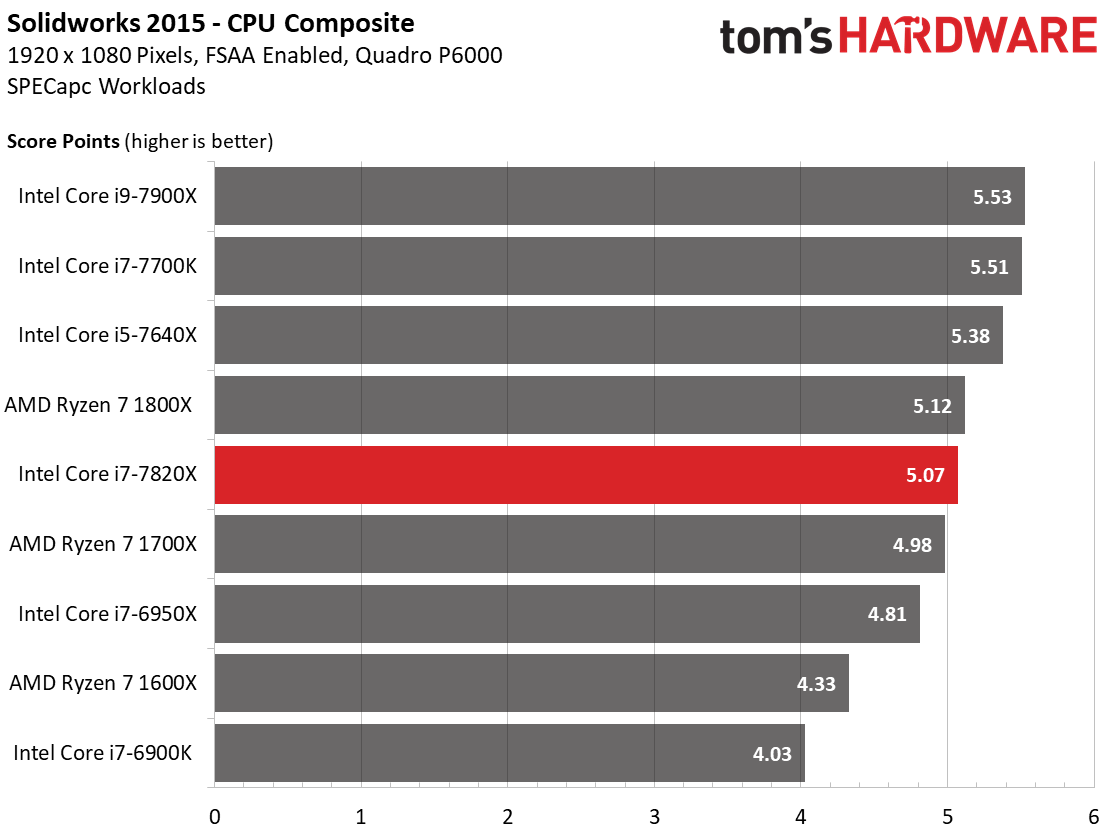

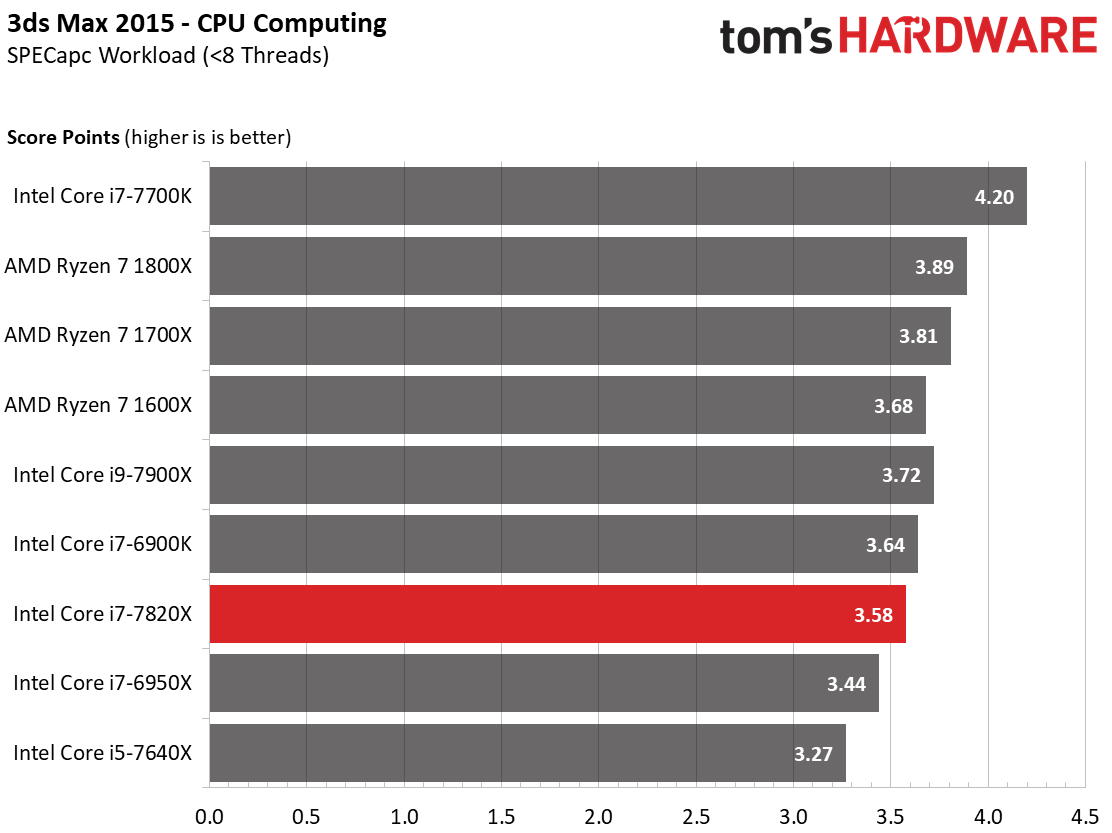

The Core i7-7700K vigorously cuts through most of these workloads, indicating that they prefer high clock rates, all else being equal.

Both Skylake-X-based chips trade places across several of the tests, though the gap between them remains small.

CPU Performance: Workstation

Broadwell-E leads the Skylake-X-based processors in a few of these workloads, reminding us that Intel's mesh topology may lead to performance regressions in some cases.

The Ryzen 7 1800X is incredibly competitive during this round of testing.


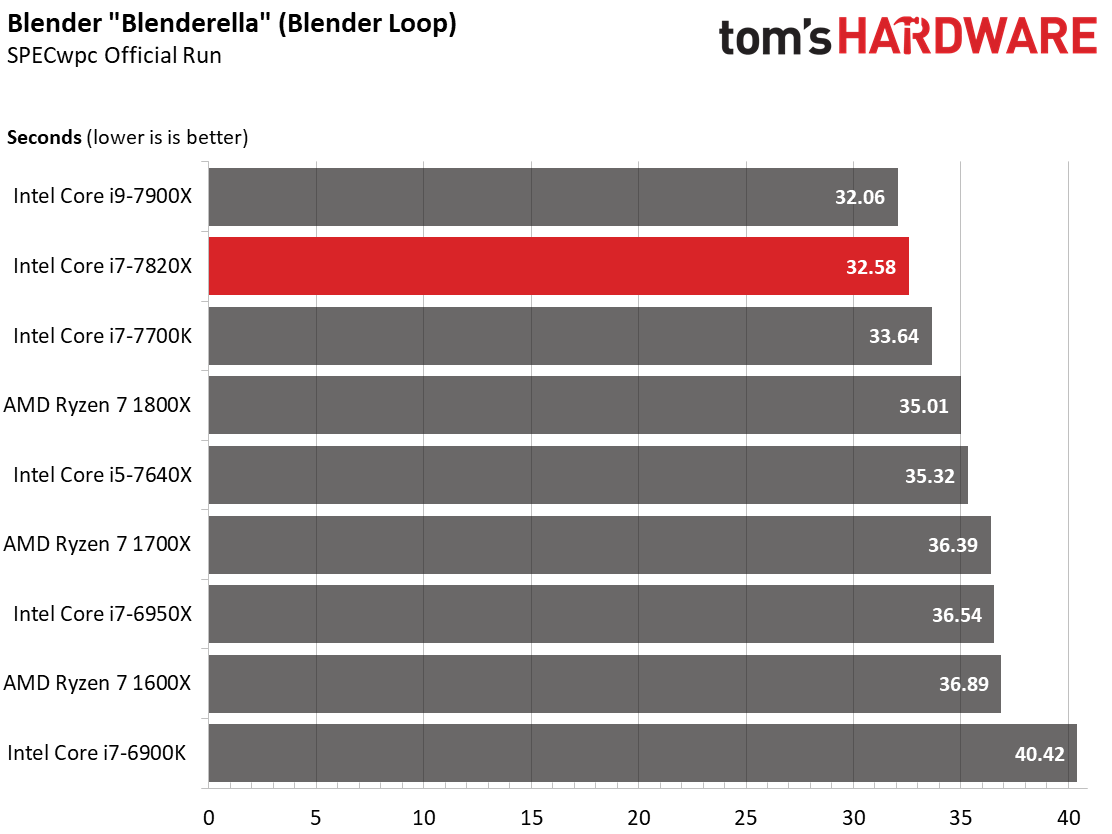

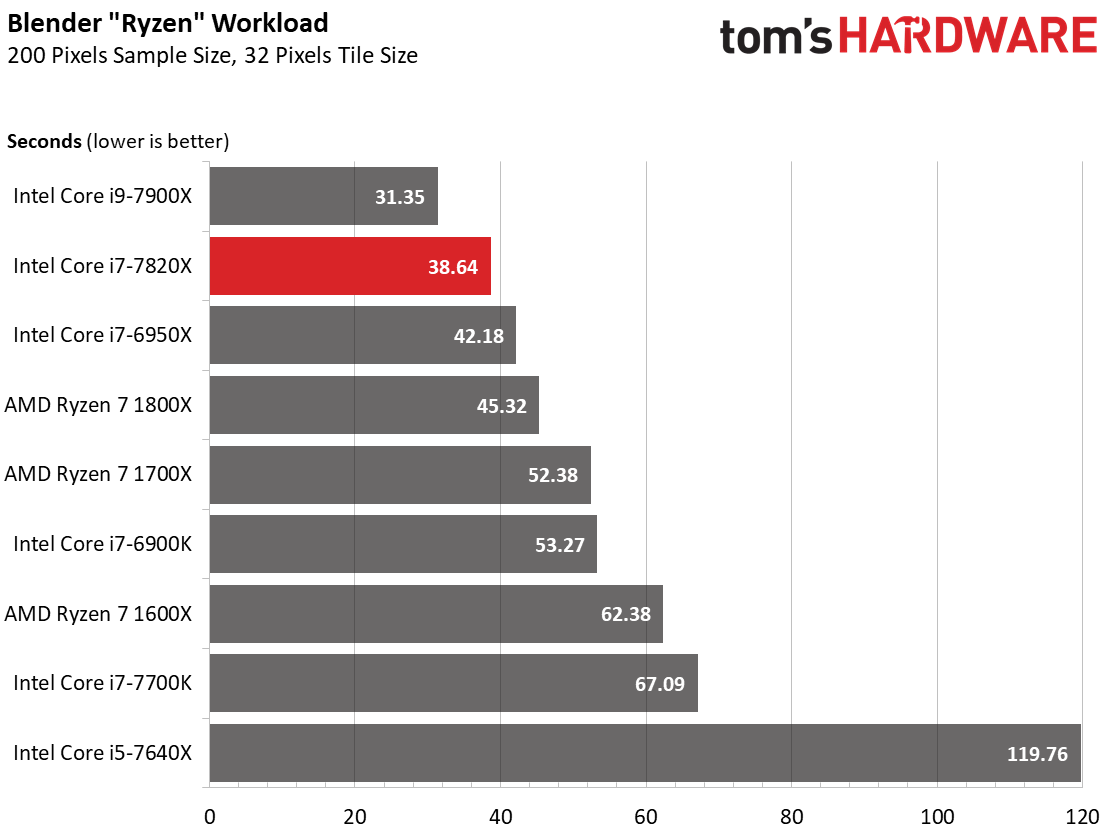

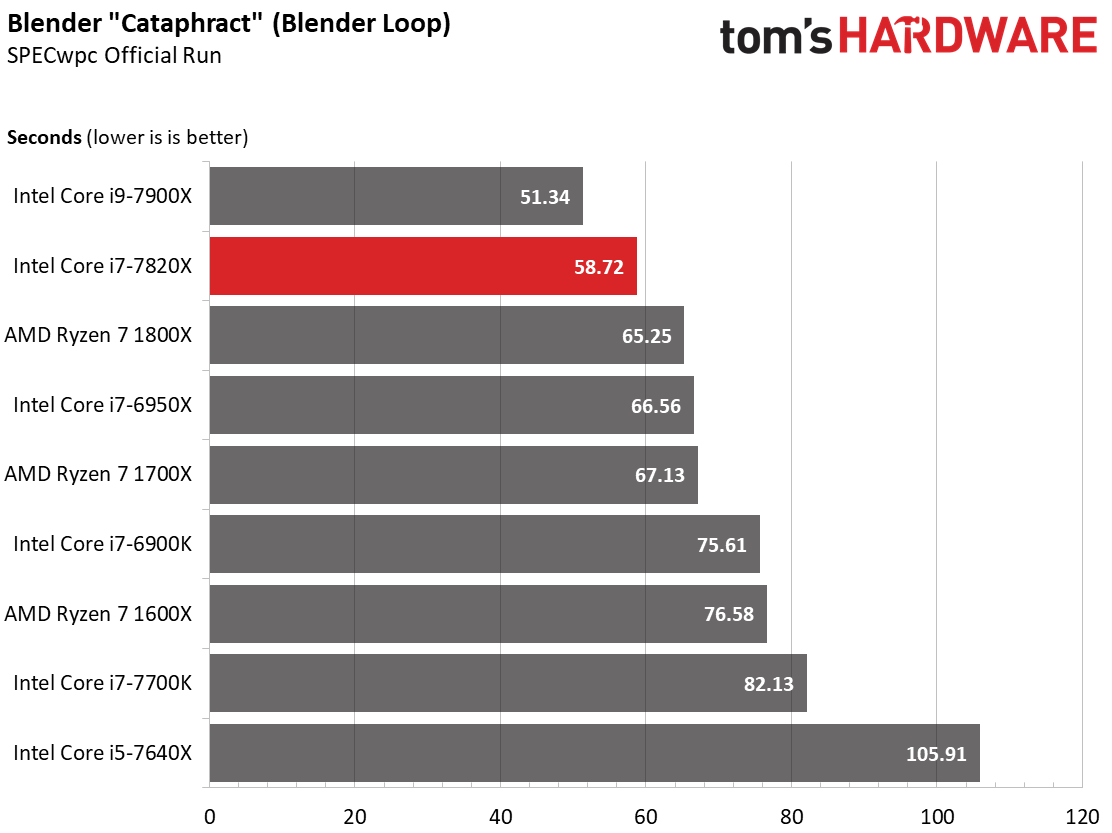

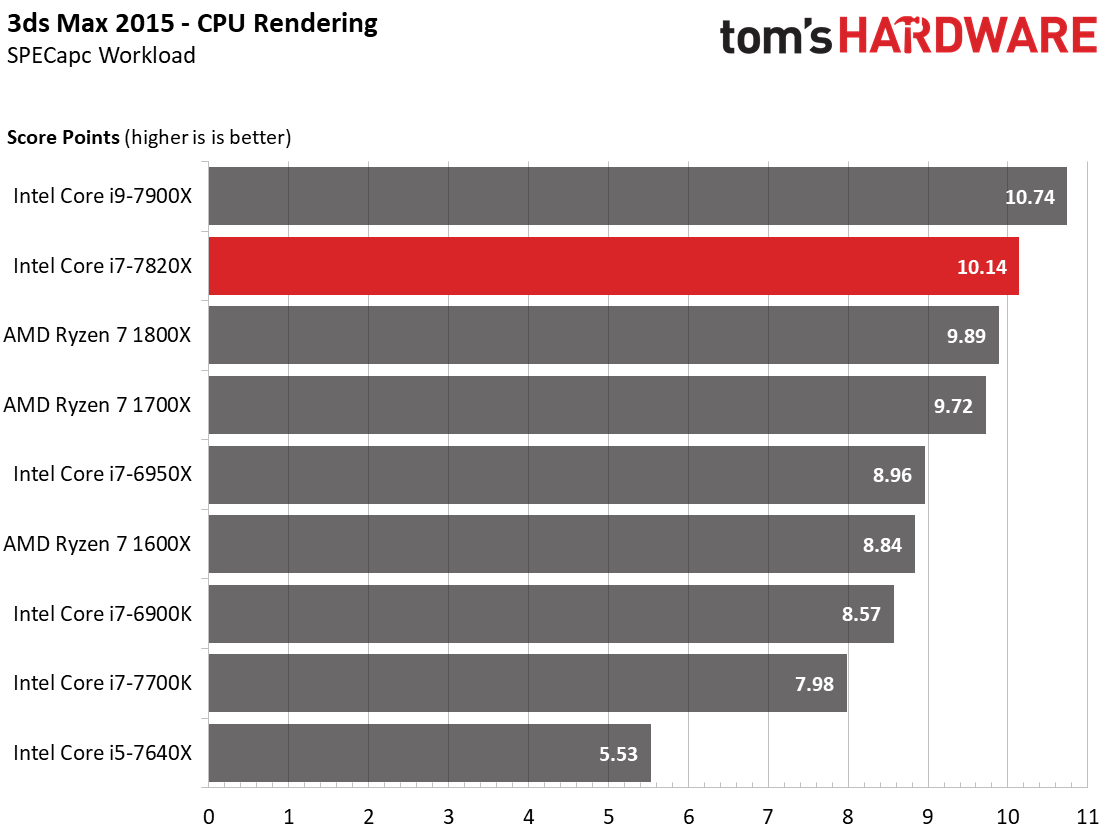

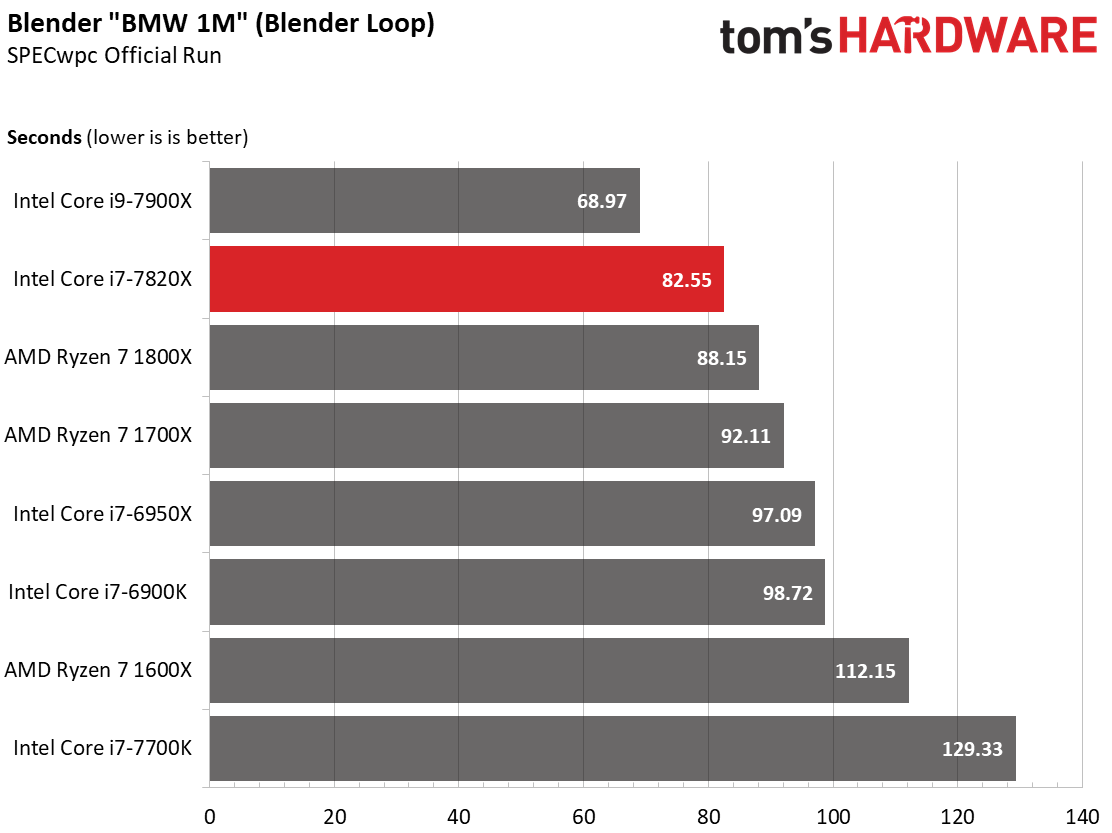

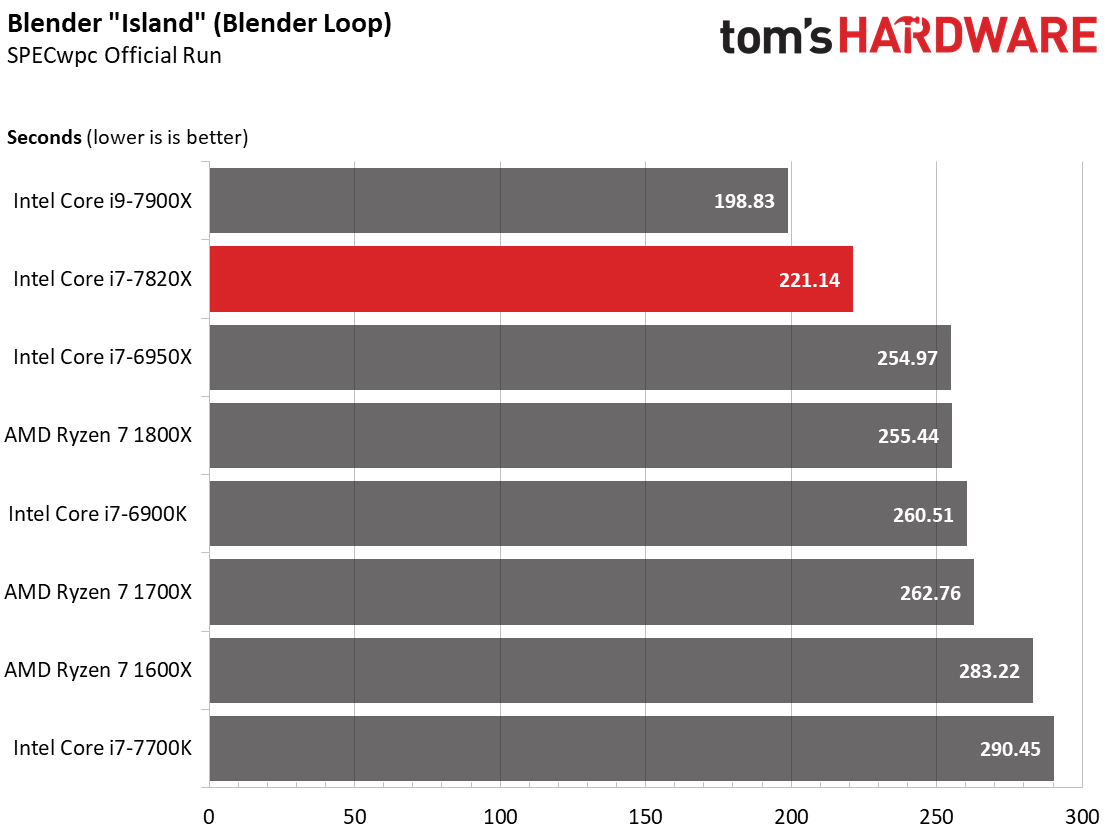

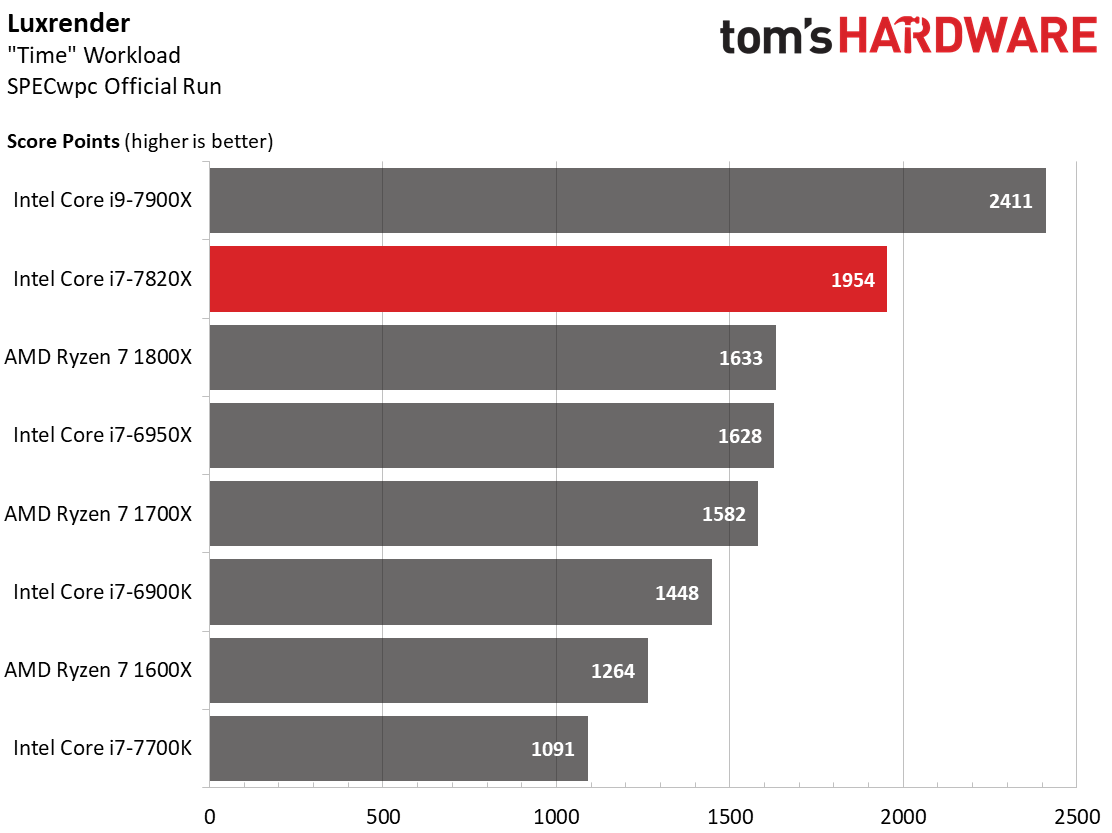

CPU Performance: Photorealistic Rendering

Rendering benefits from brute-force parallelism, so the 10-core Core i9-7900X naturally provides the best performance.

The workload utilizes all cores fully, so it also provides a good multi-threaded comparison between the eight-core -7820X and Ryzen 7 1800X. Intel's processor takes the lead due to its per-cycle performance advantage, but Ryzen is surprisingly competitive given its lower price and value-oriented platform. It also doesn't require a custom water-cooling loop to reach its potential, whereas Skylake-X does.

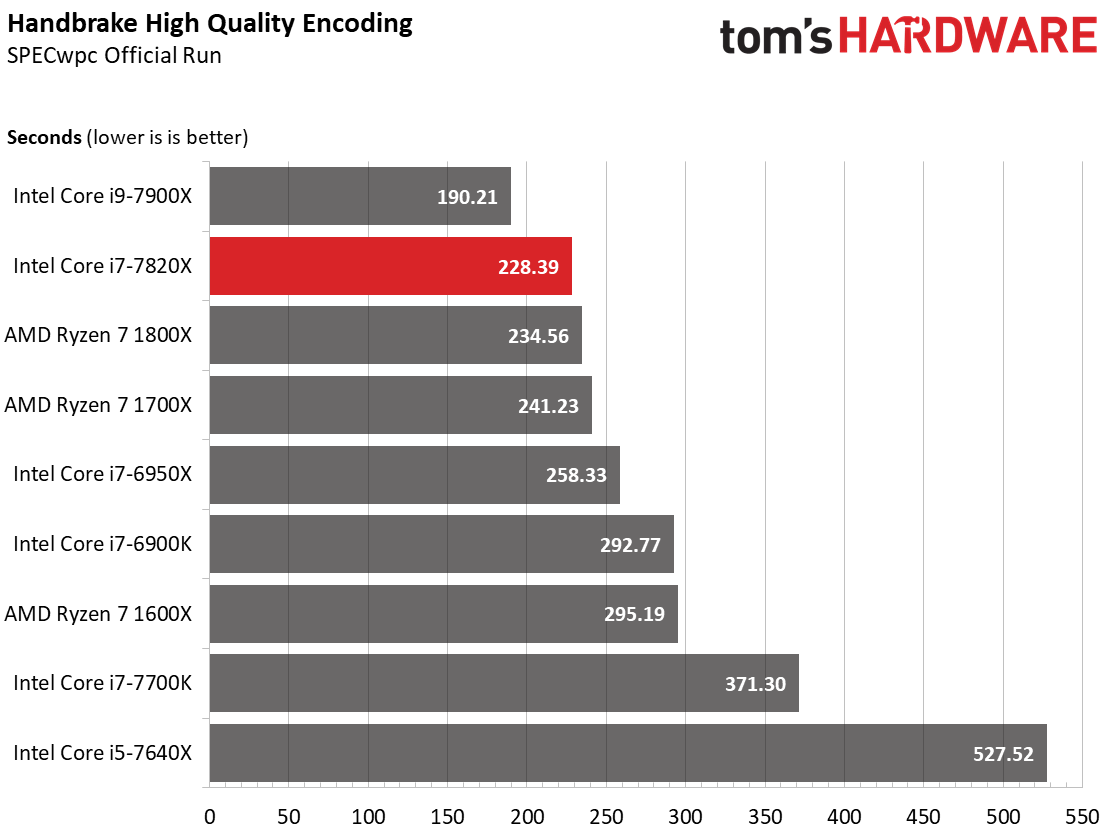

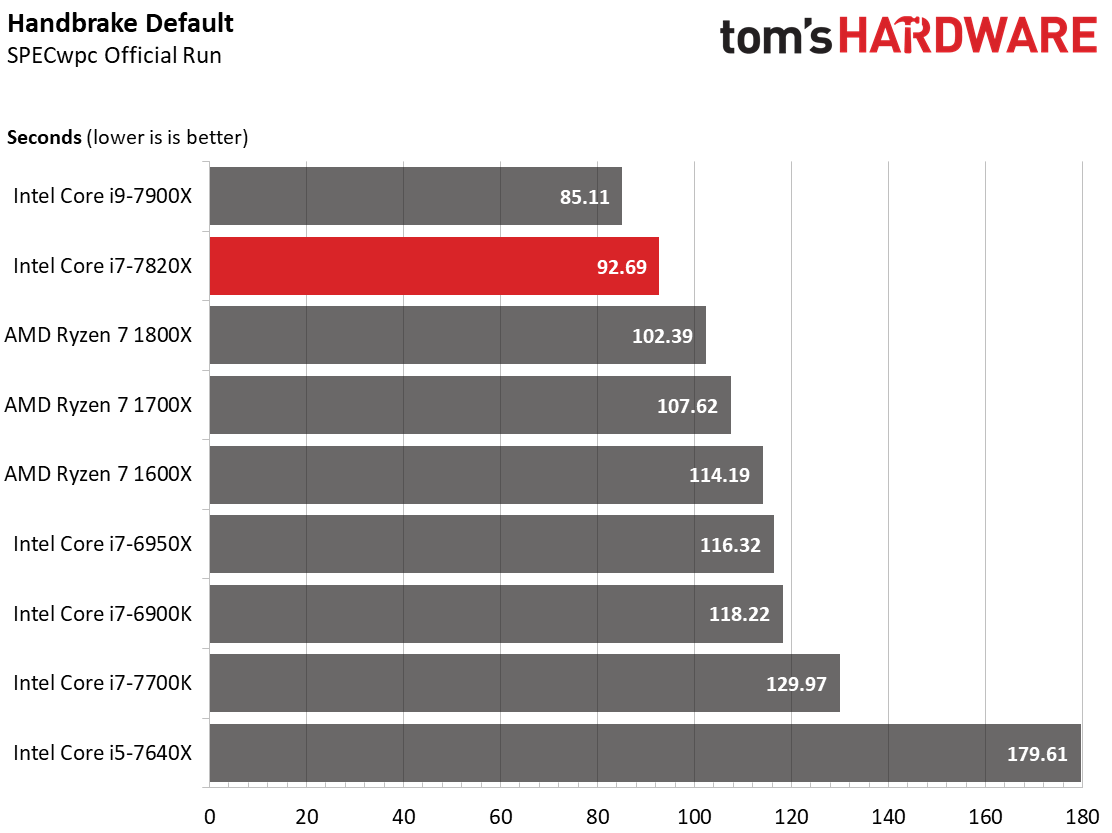

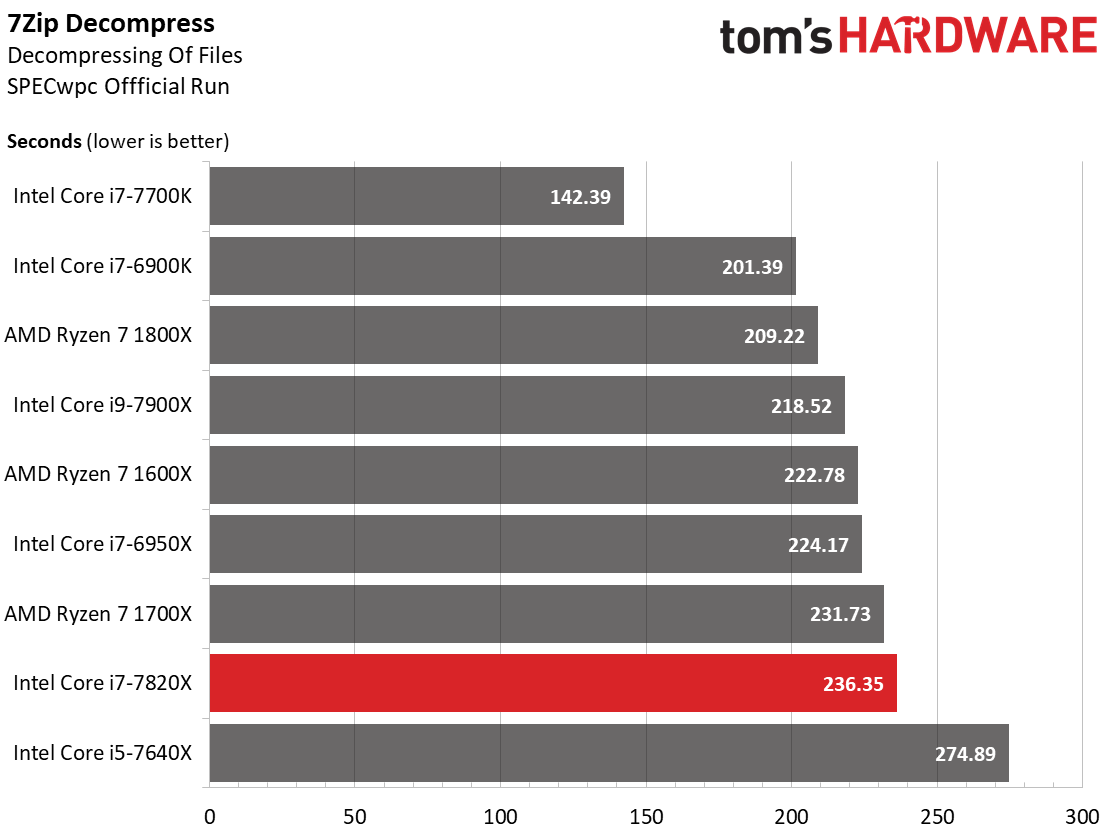

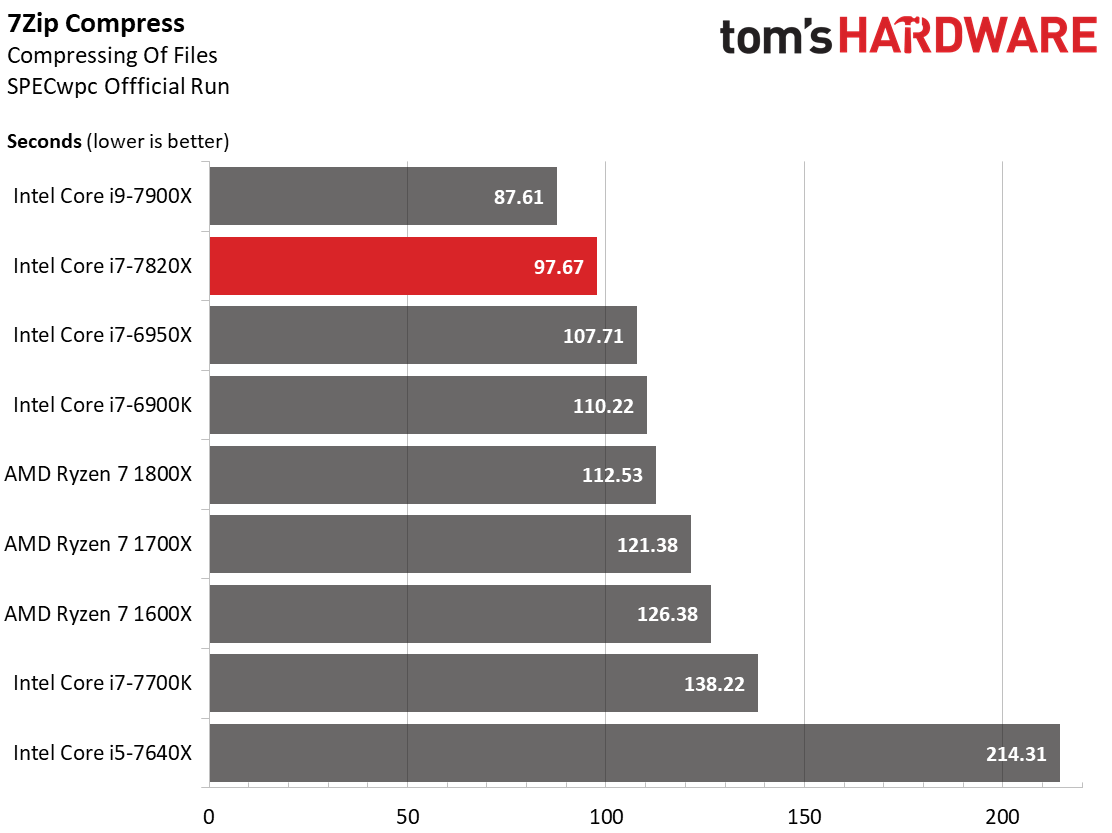

CPU Performance: Encoding & Compression/Decompression

The -7820X falls into a predictable place during our threaded encoding workload. Nipping at its heels is AMD's nagging (and much less expensive) Ryzen 7 1800X.

The Core i7-7820X struggles mightily with our lightly-threaded decompression workload. Its place in the chart is much lower than we'd expect, given the way Intel implements its Turbo Boost technology.

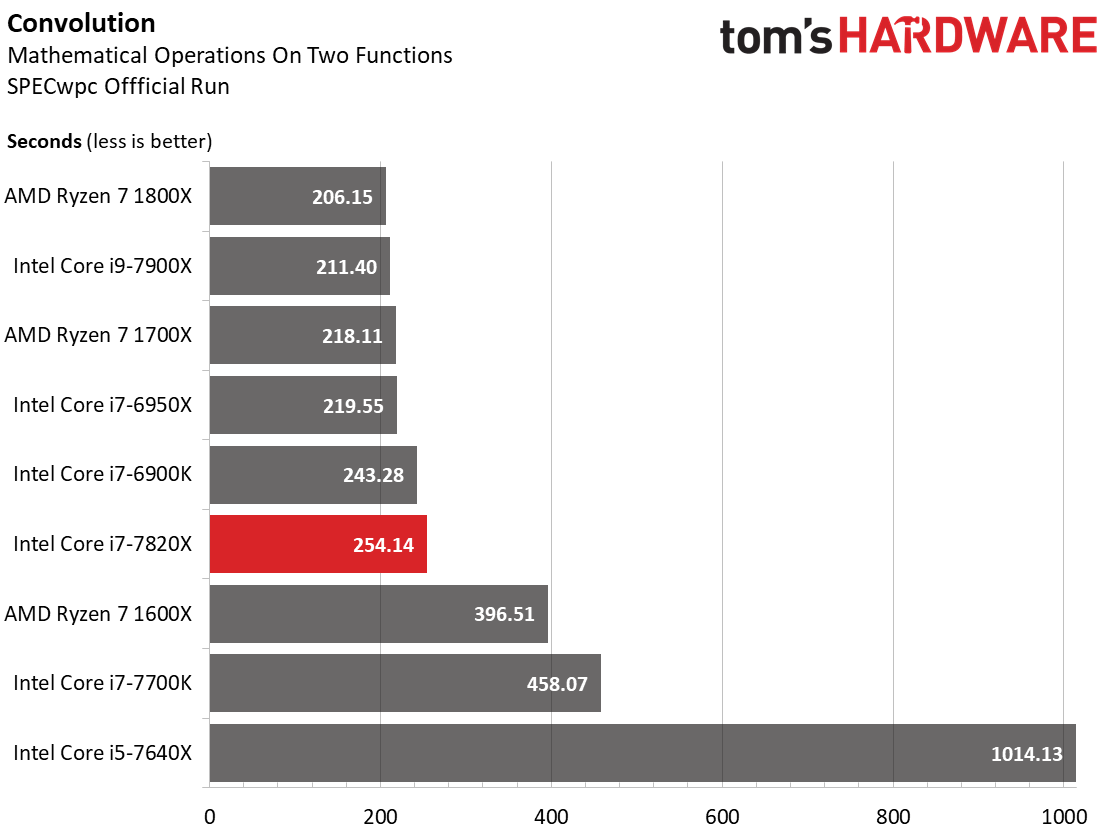

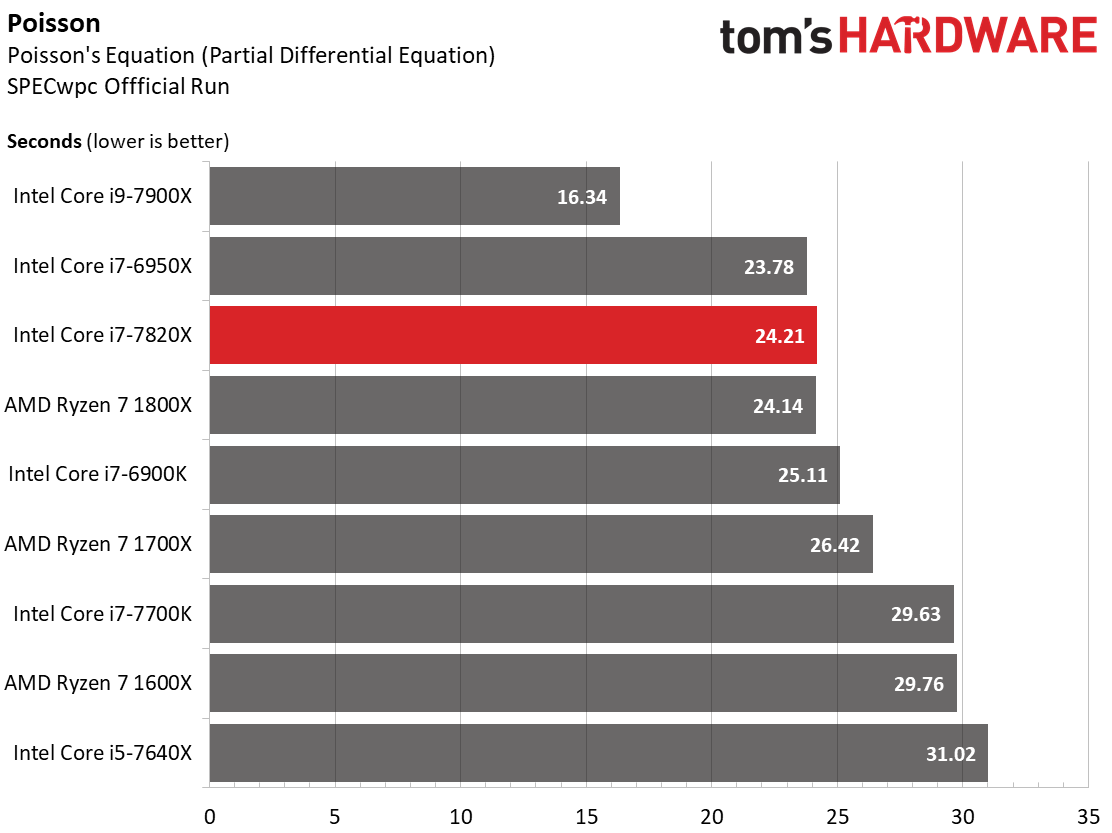

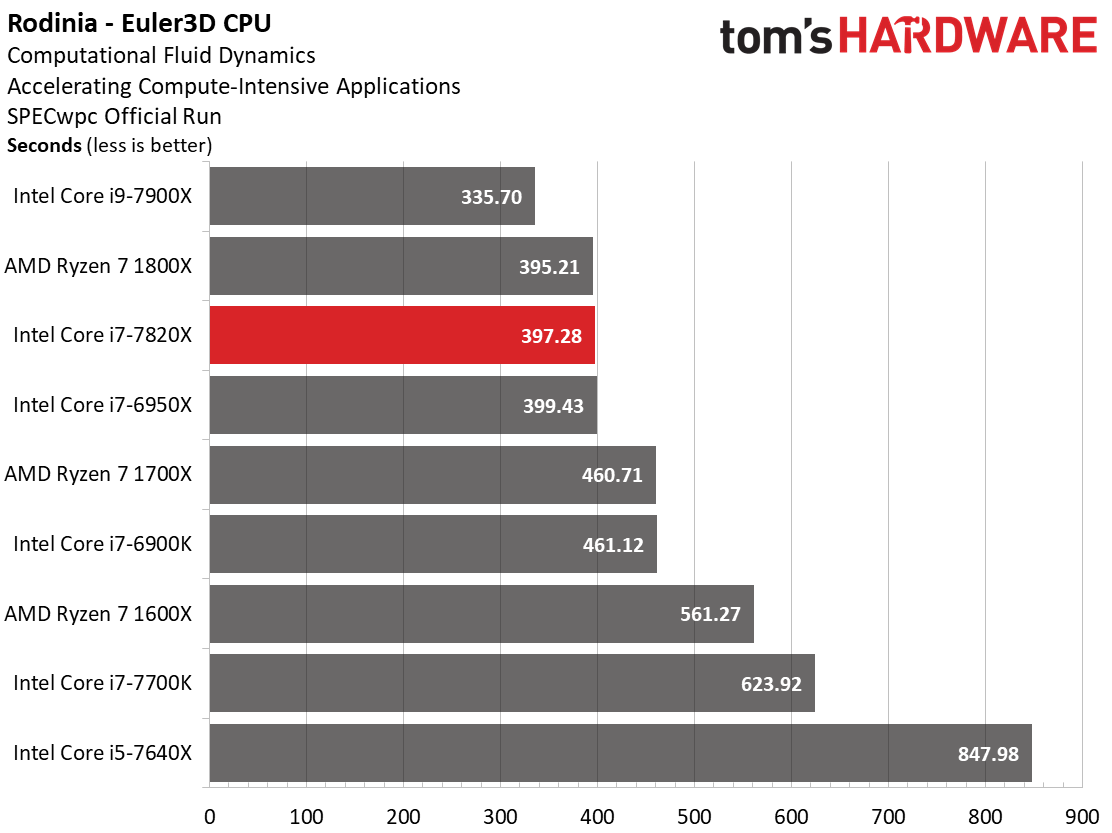

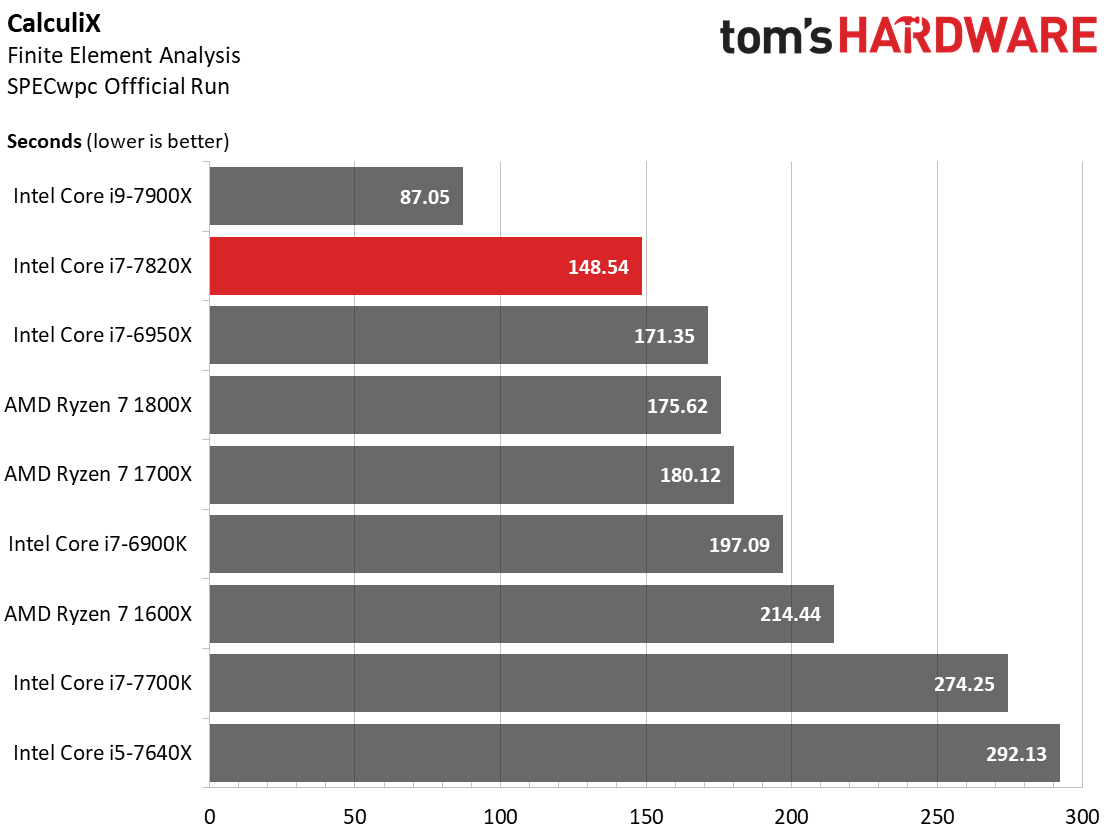

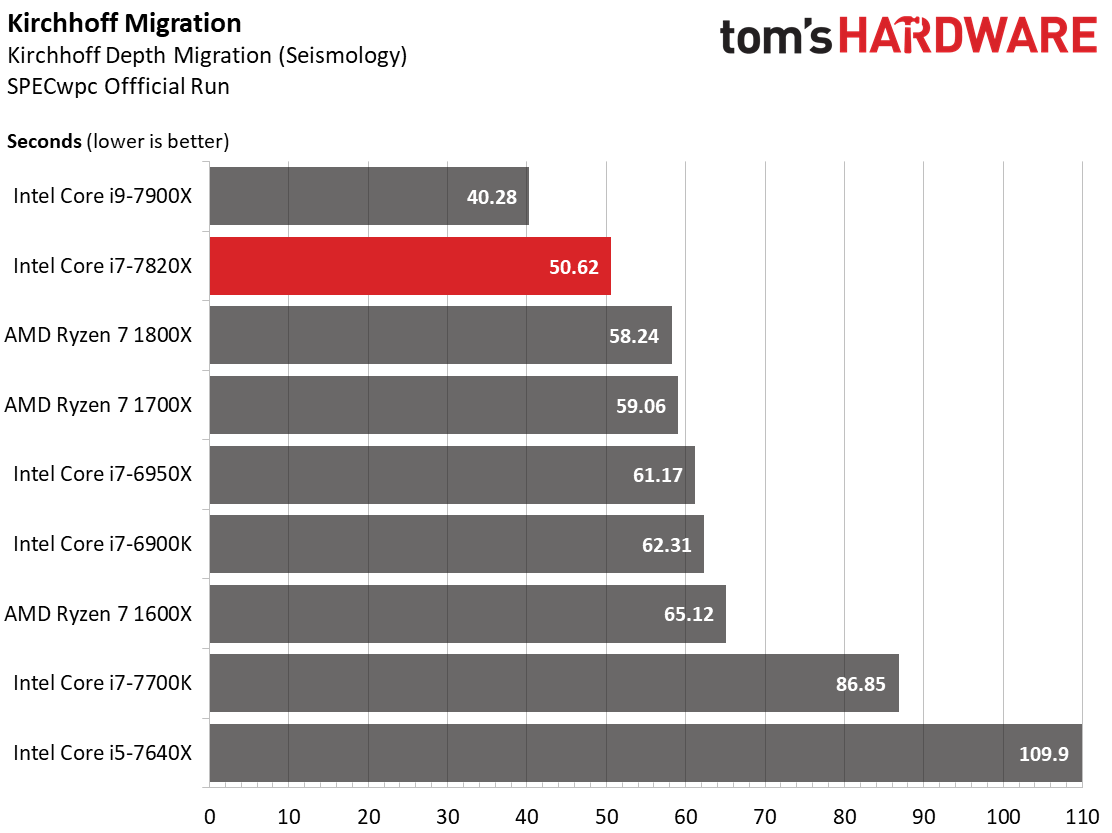

High Performance Computing (HPC)

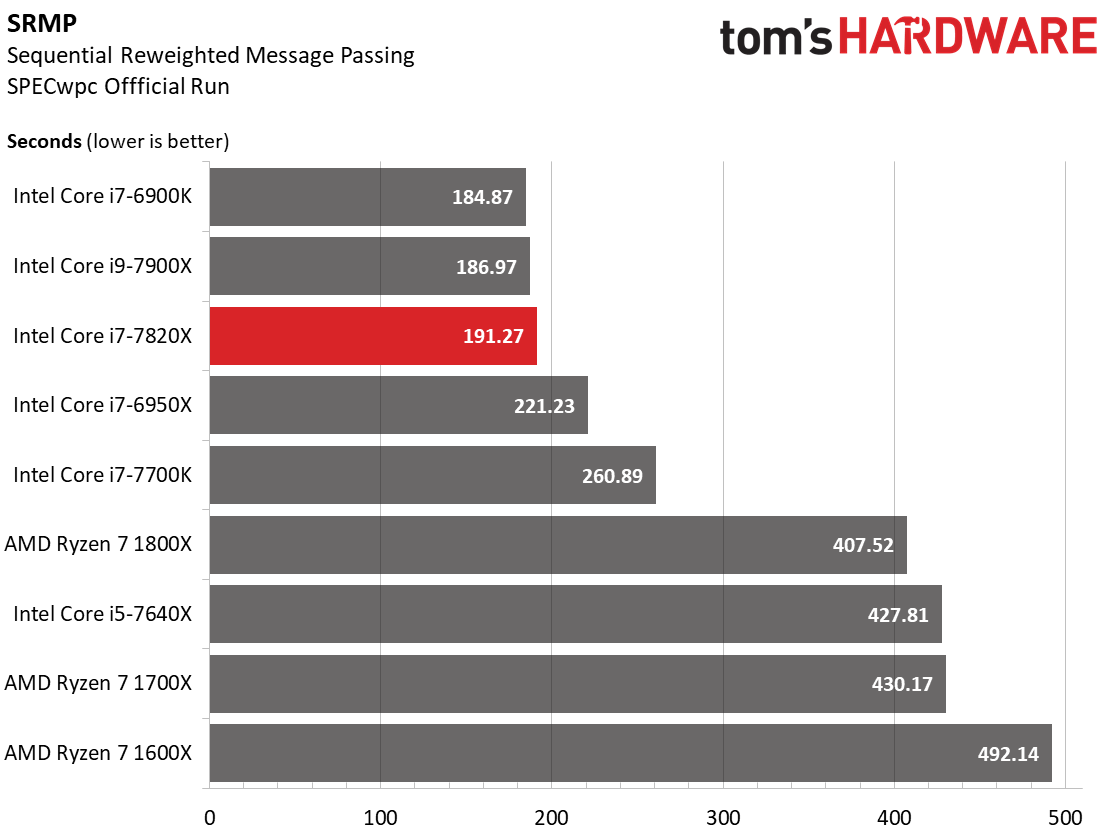

Complex HPC applications largely benefit from the -7820X's high clock rate and beefy core count. But aside from the SRMP workload, AMD's Ryzen 7 1800X again proves to be the fly in Intel's high-priced ointment.


Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


cknobman: Just want to say the Ryzen 7 1800X isn't $500 anymore and hasn't been for weeks now.

The processor is selling for $420 or less. Heck, I bought mine yesterday from Fry's for $393.

artk2219 (replying to cknobman):

Not to mention that you can find the 1700 for even less and, more than likely, bump the clocks to at least match the 1800X. Micro Center was selling them for $269.99 last week.

Ne0Wolf7: At least they've done something, but it's still too expensive to sway me.

Perhaps full-blown professionals who need something a bit better than what Ryzen has right now, and can go for an i9, would appreciate this, but even then he/she/it would probably wait to see what Threadripper has to offer.

Scorpionking20: So many years have passed, I can't wrap my head around this. Competition in the CPU space? WTH is this?

Houston_83: I think the article has some incorrect information on the first page.

"However, you do have to tolerate a "mere" 28 lanes of PCIe 3.0. Last generation, Core i7-6850K in roughly the same price range gave you 40 lanes, so we consider the drop to 28 a regression. Granted, AMD only exposes 16 lanes with Ryzen 7, so Intel does end the PCIe comparison ahead."

Doesn't Ryzen have 24 lanes? Still under Intel, but I'm pretty sure there are more than 16 lanes.

artk2219 (replying to Houston_83):

Ryzen does have 24 lanes, but only 16 are usable; the other 8 are dedicated to chipset and storage needs.

JimmiG (replying to artk2219 and Houston_83):

16 lanes are available for graphics, as 1x16 or 2x8.

4 lanes are dedicated to M.2.

4 lanes go to the chipset, which the X370 splits into 8 PCIe 2.0 lanes that are allocated dynamically, IIRC.

Zifm0nster: Would love to give this chip a spin... but availability has been zero, even a month after release.

I actually do have a work application that can take advantage of multiple cores.

Math Geek: Does look like Intel was caught off guard by AMD this time around.

It will take them a couple of quarters to figure out what to do, but I'm loving the price/performance AMD has brought to the table, and I know Intel will have no choice but to cut prices.

This is always good for buyers :D

Amdlova: Why do we have overclocked CPUs on the bench but no power consumption figures? Is this review biased toward Intel again? Is Tom's Hardware fake news?