U.S. investigates whether DeepSeek smuggled Nvidia AI GPUs via Singapore

Nvidia denies wrongdoing, but Singapore now accounts for 22% of its revenue.


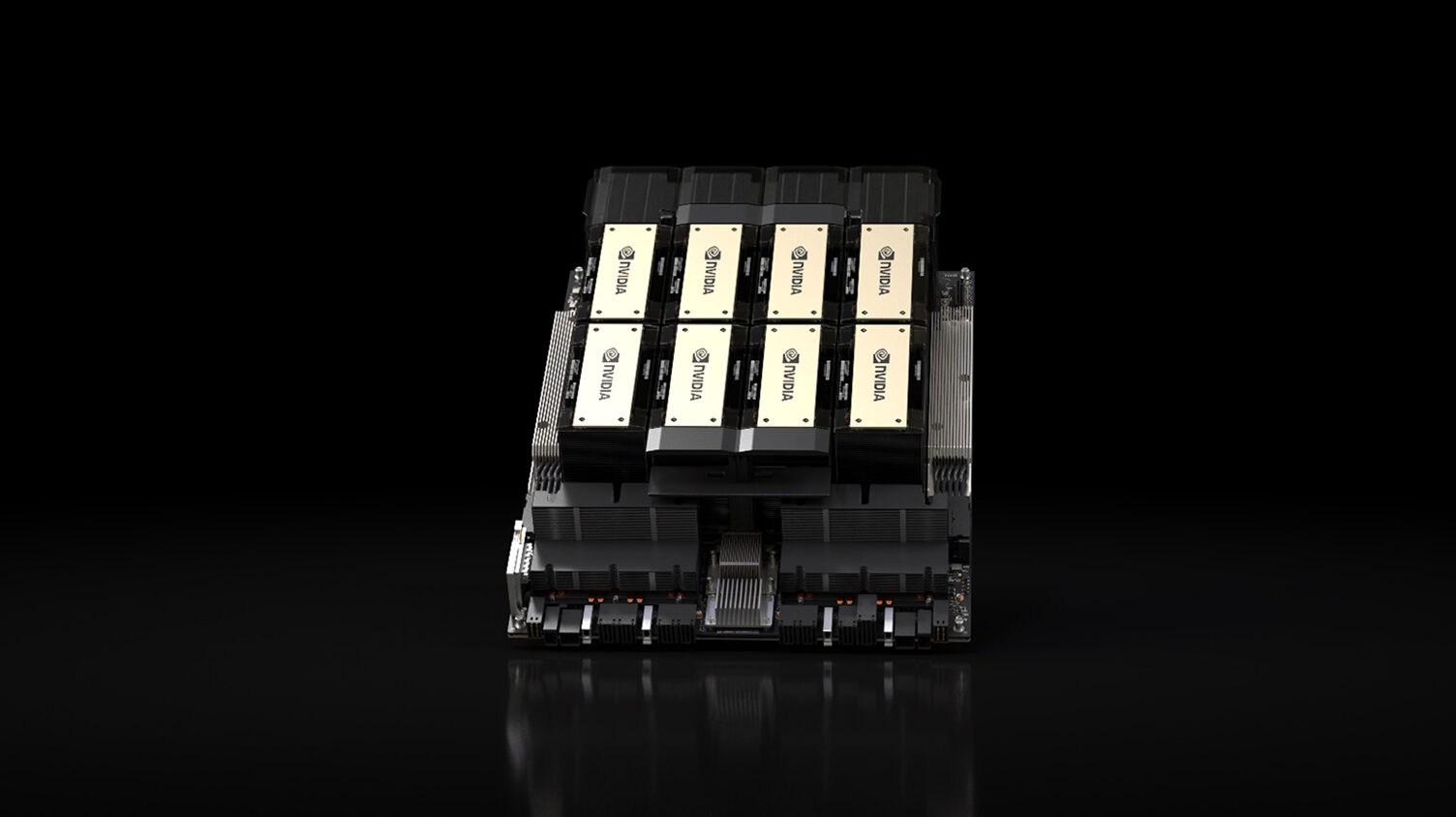

Bloomberg reports that the U.S. government is investigating whether DeepSeek acquired Nvidia's restricted GPUs for AI workloads through intermediaries in Singapore, bypassing U.S. export restrictions. Concerns have grown as DeepSeek's AI model R1 shows capabilities on par with leading OpenAI and Google models. Adding to the concerns, Singapore's share of Nvidia's revenue increased from 9% to 22% in two years.

DeepSeek has not disclosed the specific hardware used to train its R1 model. However, it previously indicated that it used a limited number of H800 GPUs (2,048) to train its 671-billion-parameter V3 model in just two months, a total of 2.8 million GPU hours. By contrast, Meta used 11 times more compute (30.8 million GPU hours) to train its 405-billion-parameter Llama 3 model on a supercomputer featuring 16,384 H100 GPUs over 54 days. Observers believe that R1 also consumes fewer resources than competing models. However, R1 was likely trained on a more powerful cluster than the one used for V3.
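A quick back-of-the-envelope check of the compute figures above, written as a minimal Python sketch. It uses only the numbers quoted in this article and assumes all 2,048 GPUs ran continuously for the entire job (real clusters never reach 100% utilization). The constant and function names are illustrative and do not come from DeepSeek or Meta.

```python
# Figures as reported in the article; not independently verified.
DEEPSEEK_V3_GPUS = 2_048
DEEPSEEK_V3_GPU_HOURS = 2.8e6
LLAMA_405B_GPU_HOURS = 30.8e6


def wall_clock_days(gpu_hours: float, gpus: int) -> float:
    """Training days implied if every GPU ran non-stop for the whole job (assumption)."""
    return gpu_hours / gpus / 24


def compute_ratio(a_gpu_hours: float, b_gpu_hours: float) -> float:
    """How many times more GPU hours job A consumed than job B."""
    return a_gpu_hours / b_gpu_hours


days = wall_clock_days(DEEPSEEK_V3_GPU_HOURS, DEEPSEEK_V3_GPUS)
ratio = compute_ratio(LLAMA_405B_GPU_HOURS, DEEPSEEK_V3_GPU_HOURS)

print(f"DeepSeek V3: ~{days:.0f} days on {DEEPSEEK_V3_GPUS} GPUs")
print(f"Llama 3 405B used ~{ratio:.1f}x the GPU hours of DeepSeek V3")
```

Under that full-utilization assumption, 2.8 million GPU hours spread over 2,048 GPUs works out to roughly 57 days, in line with the "two months" figure, and 30.8 / 2.8 gives the 11x ratio cited above.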

This assumption has led to speculation that the company relied on restricted Nvidia GPUs that cannot be freely exported to China. Authorities, including the White House and the FBI, are investigating whether DeepSeek obtained restricted AI GPUs through third-party firms in Singapore. So far, officials have not publicly confirmed whether laws were broken, but Nvidia maintains that it follows all legal requirements.

The U.S. has been tightening restrictions on advanced GPU exports to China for several years. In 2023, the Biden administration imposed new rules limiting the performance of GPUs that can be sold to China and multiple other nations without an export license from the U.S. Department of Commerce. However, Singapore was not among the restricted countries, so many believe it served as a loophole for Chinese entities to get their hands on Nvidia's high-end H100 GPUs. According to the report, Representatives John Moolenaar and Raja Krishnamoorthi have called for strict licensing measures unless Singapore strengthens its oversight of shipments.

Singapore plays a vital role in Nvidia's global business, accounting for 22% of its revenue as of Q3 FY2025, up from 9% in Q3 FY2023, when the first significant restrictions on AI GPU sales to Chinese entities were introduced, as @tphuang noticed. However, the company says that most transactions billed to Singapore involve products shipped elsewhere, not to China. Nvidia reports sales based on 'bill to' locations, which do not always reflect where the products are ultimately used.

"The revenue associated with Singapore does not indicate diversion to China," a statement by Nvidia reads. "Our public filings report 'bill to' not 'ship to' locations of our customers. Many of our customers have business entities in Singapore and use those entities for products destined for the U.S. and the West. We insist that our partners comply with all applicable laws, and if we receive any information to the contrary, act accordingly."

Howard Lutnick, nominated by Donald Trump to lead the Commerce Department, claimed during his confirmation hearing that DeepSeek managed to evade U.S. trade restrictions. He argued that China should compete fairly without using American hardware and vowed to take a hard stance on enforcing chip sales limits if confirmed.


Anton Shilov is a contributing writer at Tom’s Hardware. Over the past couple of decades, he has covered everything from CPUs and GPUs to supercomputers and from modern process technologies and the latest fab tools to high-tech industry trends.


hotaru251

> He argued that China should compete fairly

When the product (AI) is done stealing every bit of knowledge possible, no person taking part is competing fairly, as the base of the product is unfairly used content.

Integr8d

_Shatta_AD_ said:
> There we go again, US stifling the competition and seeding doubts in an attempt to taint progress whenever the competitor surpasses them. They’re inhibiting the progress of humanity, industry and technology as a whole. Instead of welcoming competition, this whole US must remain dominant ideology is the downfall of global tech progress and worsening the trend of monetization of technology thereby denying accessibility.
>
> Sure researchers and developers work hard to develop new tech and must be rewarded but denying accessibility to the broader public by putting a massive paywall behind everything isn’t going to improve that tech. It’ll reach a point of stagnation and complacency. Nvidia is a prime example. The lack of competition has turn the level of innovation down a few notches and now simply relying on AI to do the heavy lifting or so called ‘improvements’ rather than hard improvements in rasterization/rendering architecture. This will only get worse the more the US keep stifling out competition. What the US is doing at this point is asking the rest of the world to reinvent the wheel, heck the whole car and its basic operating principle.
>
> When scientists, engineers work together globally, that’s when all sorts of major new discoveries are made.

"When scientists, engineers work together globally..." says it all. This is a massive logical fallacy that literally flies in the face of what DeepSeek has supposedly created (a small, private venture and wildly efficient AI model). Dribbling out feel-good platitudes doesn't change reality.

The problem with countries like China is that they leech technology. And what they can't leech, they steal. That is because the East and the West diverge on the concepts of Intellectual Property. But it's more appropriate to say that it's rich countries versus poor countries. Rich countries invest a lot into high-technology. They expect a return on their investments. Poor countries naturally side with the concept of 'free' and 'sharing' because they have nothing to lose and everything to gain.

China has done well and is feeding its people based on their hard work in the fields where they can contribute. But the Intellectual Property has not been earned. It has been stolen. And China MUST respect this value. If it doesn't, America can easily open its door and import millions of workers directly. This is not a problem for us.

Mcnoobler

I'm going to go on a limb here... all those people who bought 4090s and only got the PCB, was because the actual chips went to China. How do they track that? China can get the boards, it was only the chips that were banned.

Gururu

So OpenAI has a government-funded hit job on DeepSeek. OpenAI should sue nVidia IMO for selling to every smuggler in the business.

bit_user

It'd be funny if they added up all the power those GPUs should be using and it turned out to exceed the capacity of Singapore's electric grid.

bit_user

_Shatta_AD_ said:
> seeding doubts in an attempt to taint progress whenever the competitor surpasses them.

The concerns seem legit. Experts have looked at their claimed optimizations and they still don't add up to what should be needed to train on the hardware they claimed.

_Shatta_AD_ said:
> They’re inhibiting the progress of humanity, industry and technology as a whole.

The sanctions are meant to inhibit AI-powered warfare by the listed entities.

_Shatta_AD_ said:
> Nvidia is a prime example. The lack of competition has turn the level of innovation down a few notches and now simply relying on AI to do the heavy lifting or so called ‘improvements’ rather than hard improvements in rasterization/rendering architecture.

Blackwell did improve rasterization.

Source: https://www.tomshardware.com/pc-components/gpus/nvidia-geforce-rtx-5090-review/4

But it could only do so in line with how much bigger the die could get. That's because we've been optimizing the rasterization problem for the past 30 years and it's basically now at a point where performance basically is a function of how many transistors you can throw at the problem and how fast they can switch. Right now, that's running up against surging demand for the latest nodes for AI applications, so Blackwell ended up being stuck on a 4 nm-class node.

As for DLSS, I think the tech is solid. Framegen isn't worth much, as it really doesn't help unless your base framerate is already pretty high (north of 60 fps), but the image-scaling part of it is great for people with 4K monitors who want the benefits they provide for productivity apps but don't want to sacrifice on framerate.

_Shatta_AD_ said:
> This will only get worse the more the US keep stifling out competition.

You've provided no evidence to support this. You can't fit a trend to one (questionable) data point.

_Shatta_AD_ said:
> What the US is doing at this point is asking the rest of the world to reinvent the wheel, heck the whole car and its basic operating principle.

No, that's not what it's asking.

bit_user

Mcnoobler said:
> I'm going to go on a limb here... all those people who bought 4090s and only got the PCB, was because the actual chips went to China. How do they track that? China can get the boards, it was only the chips that were banned.

Is that the thinking? Or did they maybe buy RTX 4090D boards and just transplant the GPUs from regular RTX 4090's to them?

Gaidax

Mcnoobler said:
> I'm going to go on a limb here... all those people who bought 4090s and only got the PCB, was because the actual chips went to China. How do they track that? China can get the boards, it was only the chips that were banned.

I mean sure, but that does not refute the very real "possibility" that China got full-blown AI GPUs from various third-party companies outside China that skirted the rules.

And that "possibility" is like, yeah they got it, let's not play pretend.

scottslayer

The SemiAnalysis estimate from last year was 1 million banned chips and 800,000 non-banned chips coming into China via other countries every month.

YSCCC

bit_user said:
> Is that the thinking? Or did they maybe buy RTX 4090D boards and just transplant the GPUs from regular RTX 4090's to them?

It's a "why not both". I do believe they try to import the full-blown ones via some overseas companies or smuggling, and then scalp all those 4090s and desolder the chips onto their own boards to do AI work. It's not difficult to get the schematic of an Asus PCB, for example, and print their own.