Up To 8TB Of SSDs In RAID 0: Highpoint Ultimate Acceleration Drive SSD7100, First Look

Intel's Skylake-X processors offer storage enthusiasts additional PCI Express lanes that are useful for attaching NVMe drives, provided you purchase the expensive models. Intel hasn't enabled vROC on our motherboards yet, but it soon will. HighPoint's SSD7101B has landed in our lab; it ships with up to 8TB of 960 Pro SSDs, which gives us an idea of the benefits of vROC.

Oh, you don't know about vROC? It stands for Virtual RAID On CPU. Nearly a decade ago, Intel started moving features that once lived downstream in the chipset onto the CPU die, such as the memory controller, which eliminated the need for a Northbridge altogether.

Intel's vROC allows you to build large SSD arrays with up to 20 devices. You can already do that in software with Windows Storage Spaces, but you can't boot from a software array. Intel's virtual RAID adds a layer of code and hardware that runs before the Windows boot sequence, which makes a bootable array possible. This is similar to the PCH RAID available on higher-end Intel chipsets, but it's more effective because the drives connect directly to the processor rather than through the chipset.

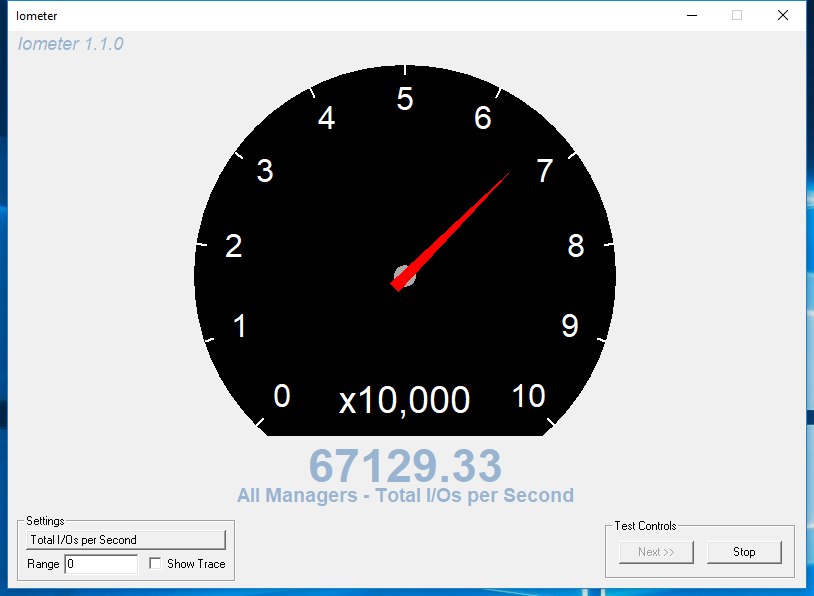

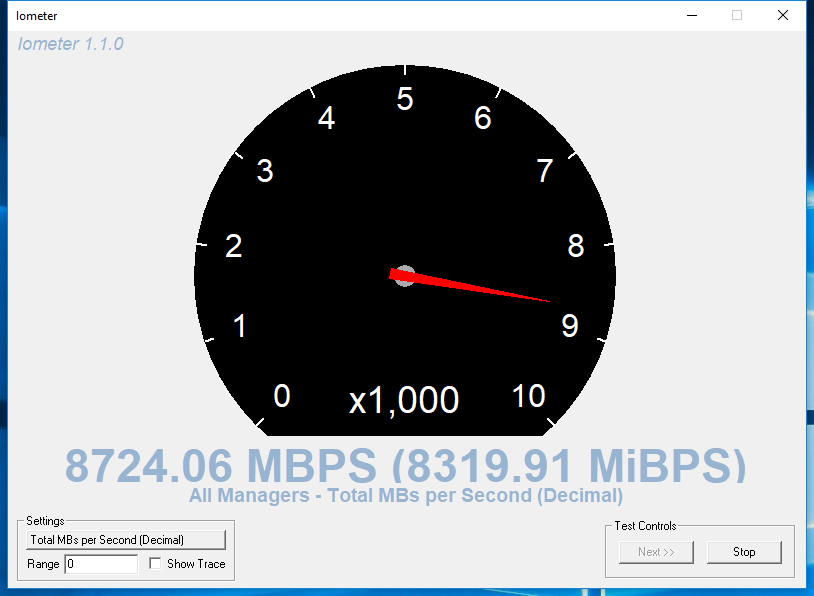

Initial Performance Results

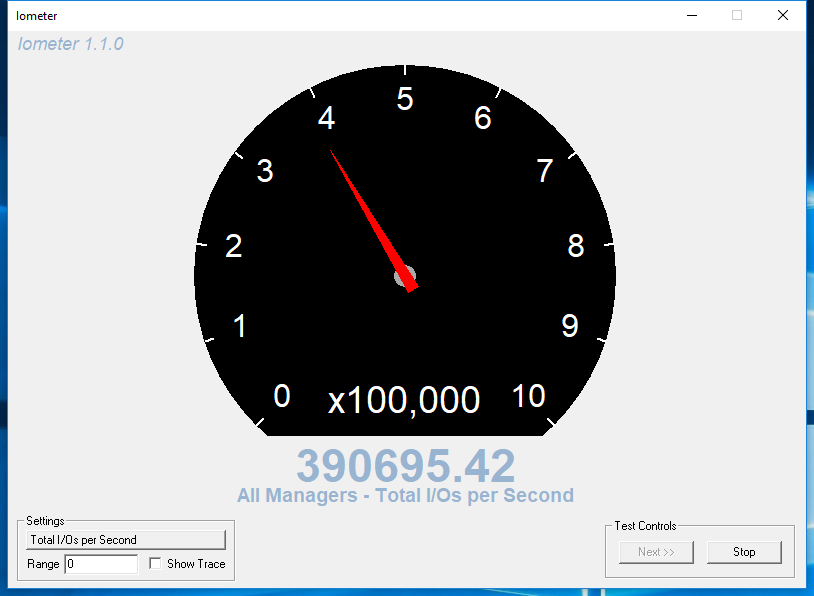

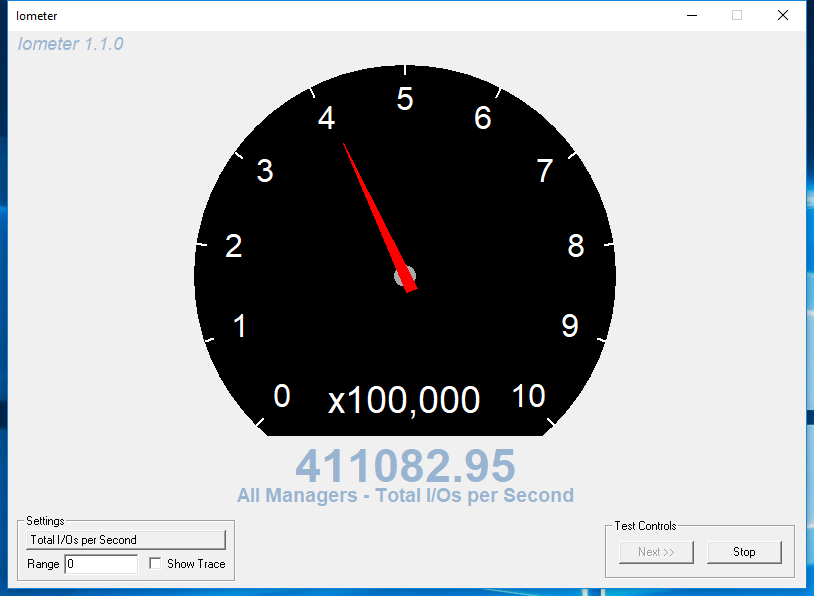

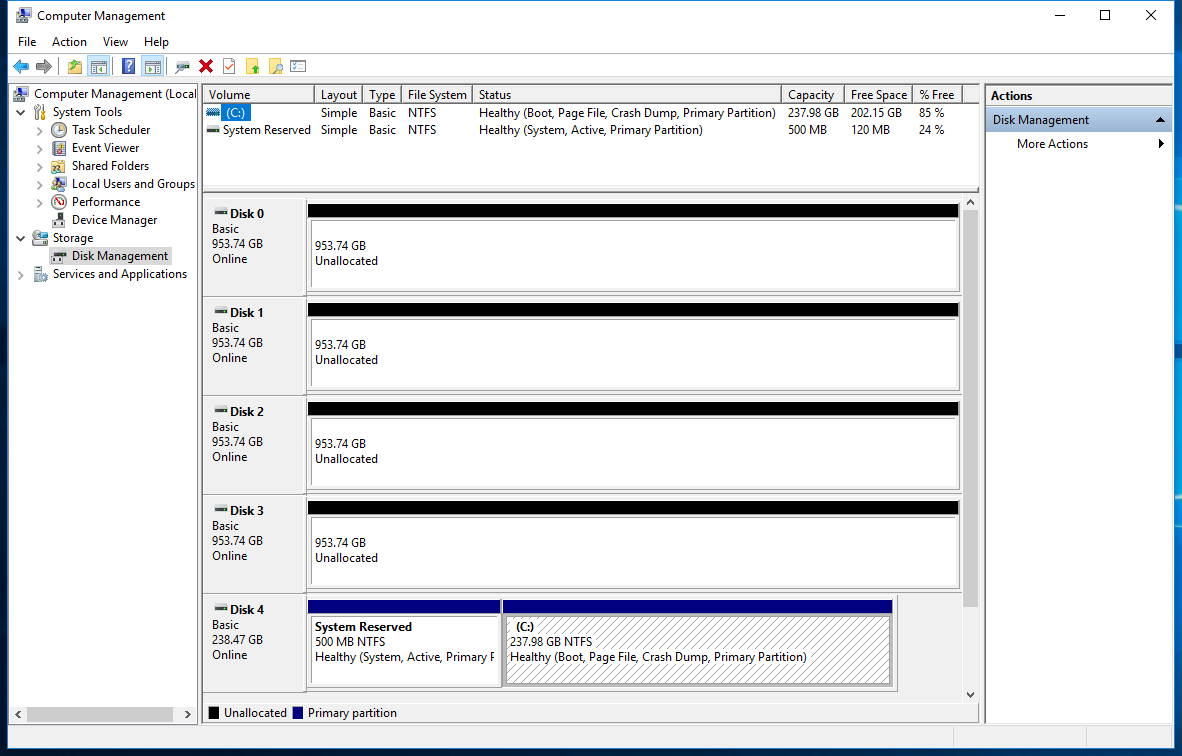

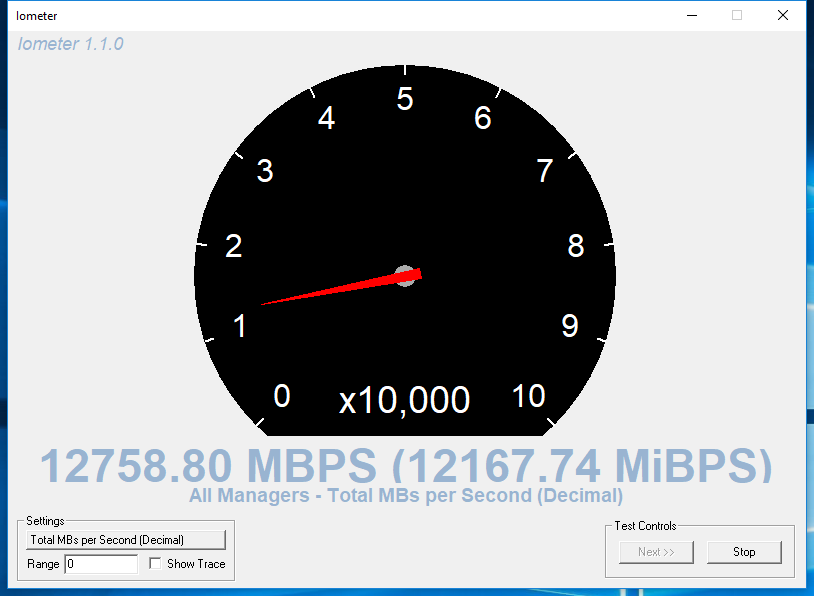

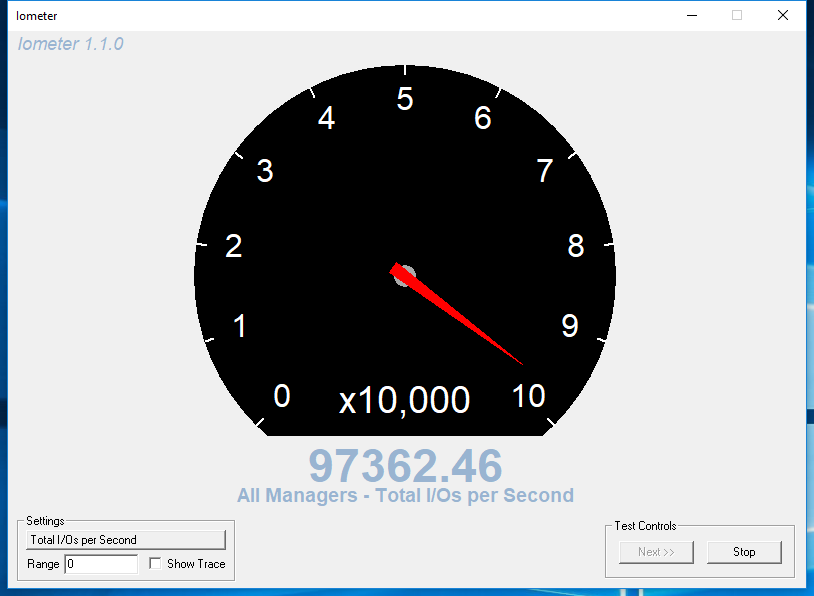

To run some quick numbers, we popped our HighPoint sample card into a Z97-based desktop system. Samsung provided HighPoint with four 960 Pro 1TB SSDs for our upcoming review. We ran some simple four-corner tests to gauge initial performance, with four workers each using a queue depth of eight, which means we are testing the card at an aggregate QD32.
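The four-corner setup can be summarized as a small data model. This is a hypothetical sketch: the class and field names are ours, and it captures only the worker and queue-depth figures given above, not block sizes or any other test parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FourCornerTest:
    """One corner of a four-corner storage test (hypothetical model)."""
    name: str
    workers: int = 4                 # four workers, as in our test
    queue_depth_per_worker: int = 8  # each worker keeps eight I/Os in flight

    @property
    def aggregate_queue_depth(self) -> int:
        # Each worker keeps its own queue full, so outstanding I/Os add up.
        return self.workers * self.queue_depth_per_worker


CORNERS = [
    FourCornerTest("Sequential Read"),
    FourCornerTest("Sequential Write"),
    FourCornerTest("Random Read"),
    FourCornerTest("Random Write"),
]

for test in CORNERS:
    print(f"{test.name}: QD{test.aggregate_queue_depth}")  # e.g. "Sequential Read: QD32"
```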

(Charts: Sequential Read, Sequential Write, Random Read, Random Write)

Paired with four Samsung 960 Pro 1TB SSDs, the card delivered nearly 13,000 MB/s of sequential read performance. Sequential write performance is knocking on the door of 7,000 MB/s. The random read performance with each drive addressed separately was nearly 400,000 IOPS, and random writes came to 411,000 IOPS with the drives in a fresh state.
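To put these results in per-drive terms, here is a back-of-the-envelope sketch. It assumes the load splits evenly across the four drives and applies Little's law (average latency = outstanding I/Os divided by IOPS) to the QD32 setup; the latencies it prints are inferred averages, not values we measured.

```python
DRIVES = 4
QUEUE_DEPTH = 32  # four workers x QD8


def per_drive_mb_s(total_mb_s: float, drives: int = DRIVES) -> float:
    """Average share of the array's throughput, assuming an even split."""
    return total_mb_s / drives


def littles_law_latency_us(iops: float, queue_depth: int = QUEUE_DEPTH) -> float:
    """Mean latency W = L / lambda, with L outstanding I/Os and lambda in IOPS."""
    return queue_depth / iops * 1_000_000


print(per_drive_mb_s(13_000))                     # 3250.0 MB/s per drive, sequential read
print(per_drive_mb_s(7_000))                      # 1750.0 MB/s per drive, sequential write
print(round(littles_law_latency_us(400_000), 1))  # 80.0 microseconds, random read
print(round(littles_law_latency_us(411_000), 1))  # 77.9 microseconds, random write
```

Because the article's figures are approximate ("nearly 13,000", "nearly 400,000"), treat these outputs as rough estimates.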

First Look

We've shown a few similar products from motherboard manufacturers like MSI and Asus, but neither company was willing to discuss pricing. MSI went as far as to say that its special bifurcation card will ship only in prebuilt systems.

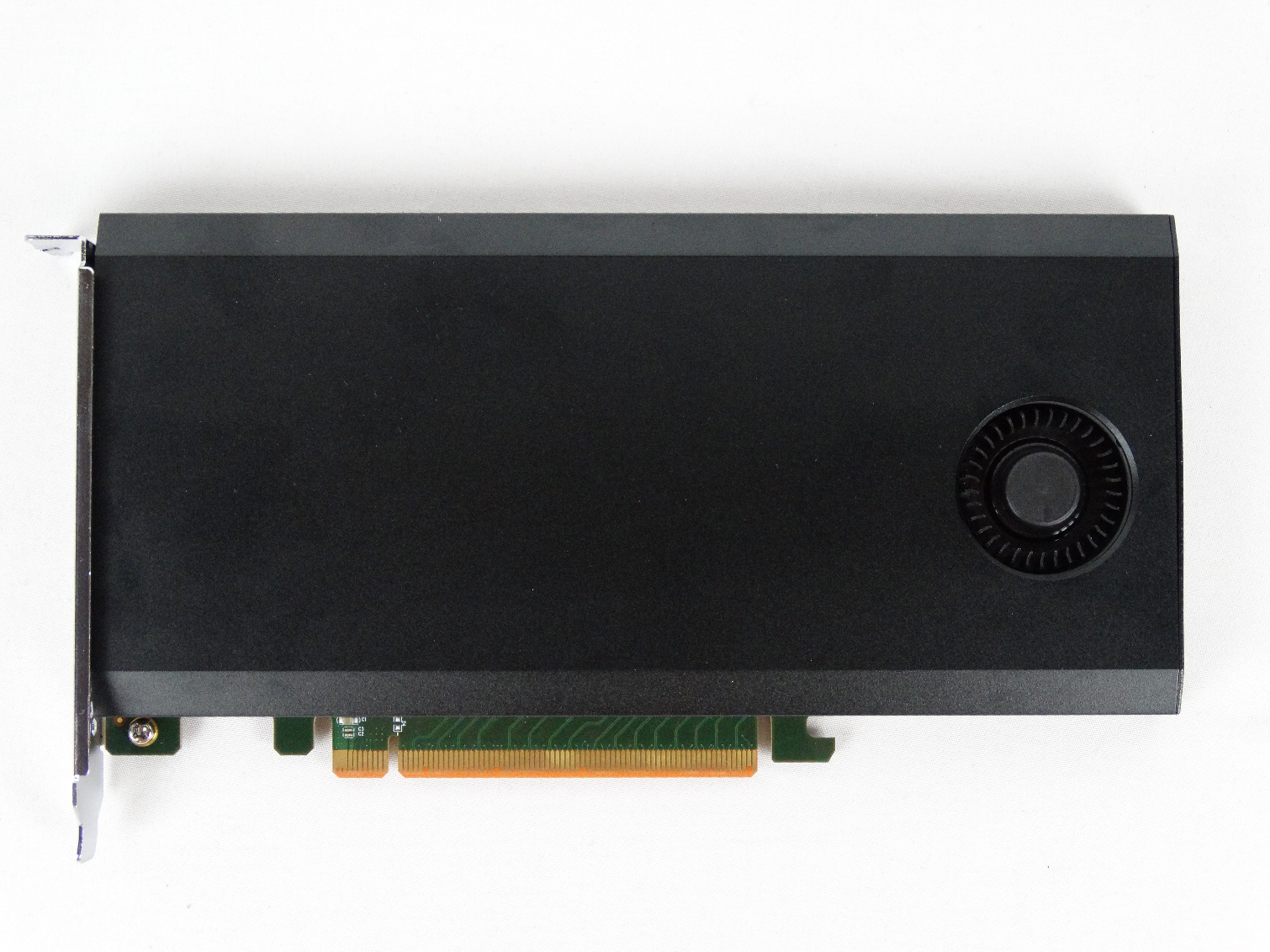

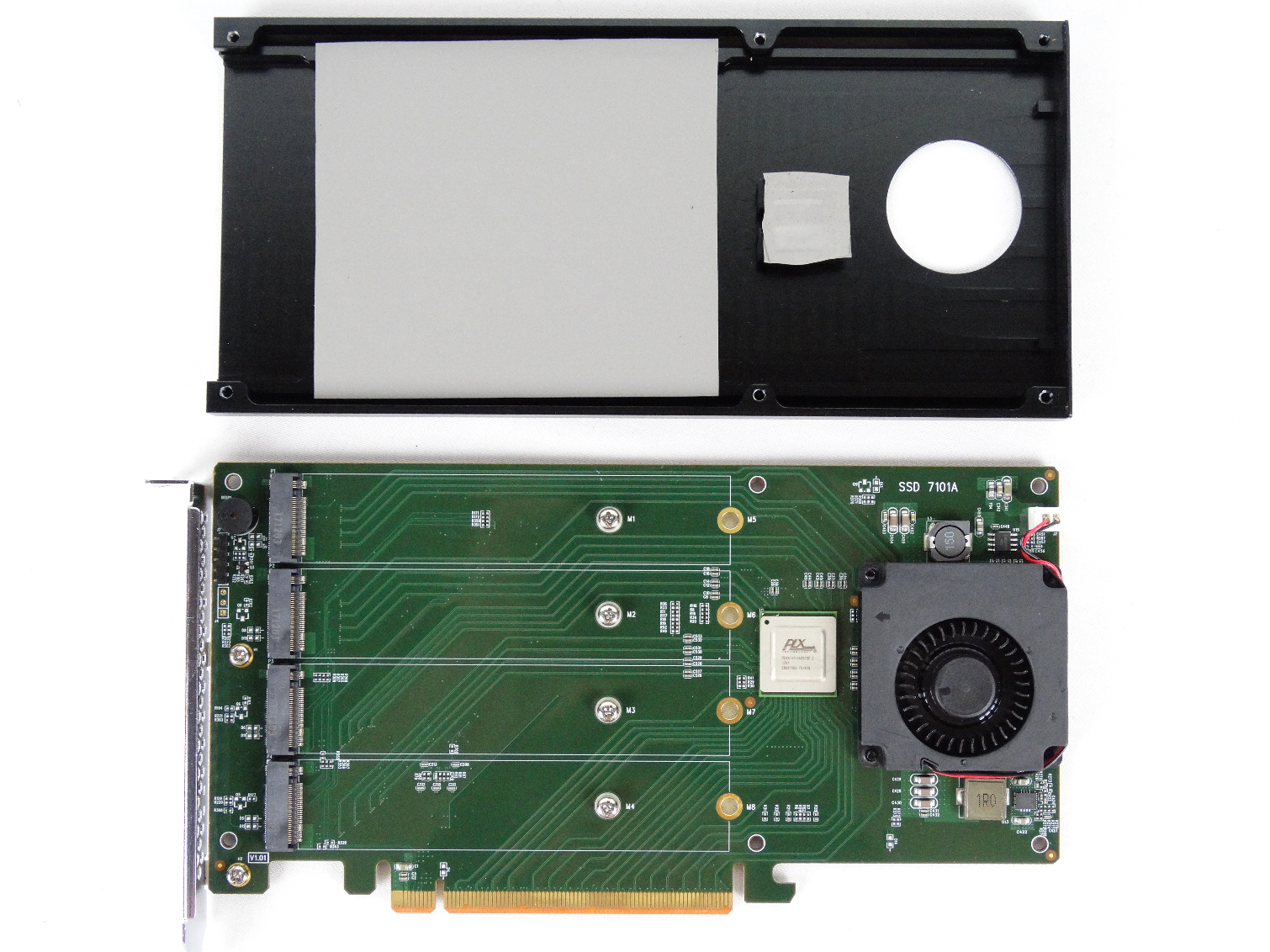

The HighPoint SSD7101A-1 should solve the thermal throttling issue for good by using a small squirrel-cage fan to blow air across the M.2 SSDs. The card supports both 2280 and 22110 form factor drives.

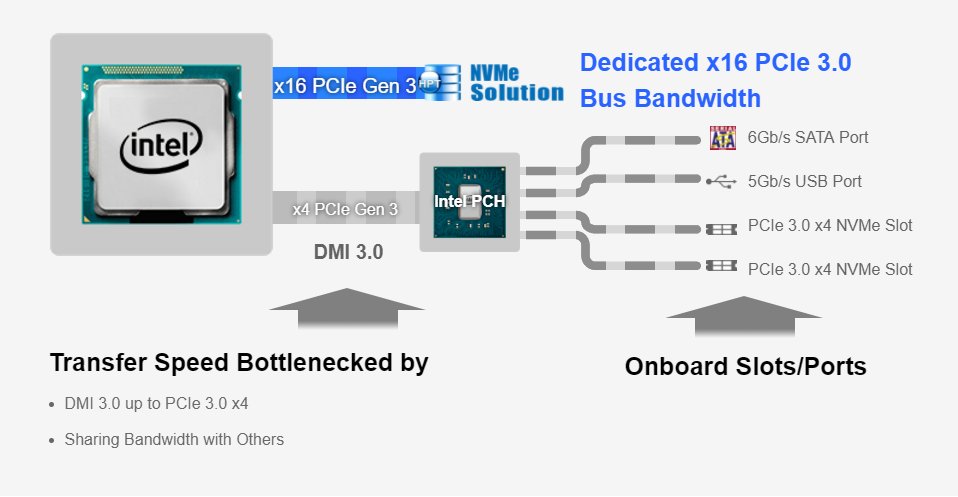

If it's not obvious already, the SSD7101A-1 avoids passing storage data through the restrictive DMI data path. The DMI bus has a hard limit equivalent to PCIe 3.0 x4, and it shares that bandwidth with other devices such as SATA, USB, networking, and so on. Intel relied on the DMI to hang several ports from the chipset, but the company never intended for all of them to use the limited bandwidth at once.
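A quick sketch of the bandwidth arithmetic, assuming DMI 3.0 (which Intel rates as equivalent to PCIe 3.0 x4) and PCIe 3.0's 8 GT/s per lane with 128b/130b encoding. The figures are raw one-direction link ceilings before packet and protocol overhead, so real-world throughput lands lower.

```python
GT_PER_S = 8.0        # PCIe 3.0 signaling rate per lane, in GT/s
ENCODING = 128 / 130  # PCIe 3.0 uses 128b/130b line coding


def pcie3_mb_s(lanes: int) -> float:
    """Raw one-direction PCIe 3.0 bandwidth in MB/s, before protocol overhead."""
    return GT_PER_S * 1e9 * ENCODING / 8 * lanes / 1e6


dmi = pcie3_mb_s(4)    # DMI 3.0 behaves like a PCIe 3.0 x4 link
slot = pcie3_mb_s(16)  # a CPU-attached x16 slot
measured = 13_000      # our sequential read result, MB/s

print(f"DMI ceiling: {dmi:,.0f} MB/s")                   # DMI ceiling: 3,938 MB/s
print(f"x16 ceiling: {slot:,.0f} MB/s")                  # x16 ceiling: 15,754 MB/s
print(f"measured / DMI ceiling: {measured / dmi:.1f}x")  # measured / DMI ceiling: 3.3x
```

Even before accounting for the SATA, USB, and network traffic that shares the DMI, the four-drive result is more than three times what the chipset link could carry.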

The HighPoint SSD7101A and similar cards send the data straight to the CPU on motherboards with enough lanes, just like a video card. Without the vROC feature, the system sees the drives as individual SSDs; in this configuration, the SSD7101A acts like a host bus adapter (HBA). You can use the drives separately or combine them with Windows software RAID.
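For readers unfamiliar with how a striped (RAID 0) volume spreads data across drives, here is a minimal sketch of a generic round-robin layout. The 64 KiB stripe size and the function names are assumptions for illustration; Windows Storage Spaces and vROC use their own on-disk formats.

```python
from typing import NamedTuple


class StripeLocation(NamedTuple):
    disk: int         # which member drive holds the byte
    disk_offset: int  # byte offset within that drive


def raid0_locate(offset: int, disks: int = 4, stripe_size: int = 64 * 1024) -> StripeLocation:
    """Map a logical byte offset on a RAID 0 volume to (disk, offset on disk).

    Stripes rotate round-robin across members: stripe 0 on disk 0,
    stripe 1 on disk 1, and so on, wrapping back to disk 0.
    """
    if offset < 0:
        raise ValueError("offset must be non-negative")
    stripe_index, within = divmod(offset, stripe_size)
    disk = stripe_index % disks
    row = stripe_index // disks  # complete rows of stripes before this one
    return StripeLocation(disk, row * stripe_size + within)


# A 256 KiB sequential read touches all four drives exactly once.
touched = {raid0_locate(o).disk for o in range(0, 256 * 1024, 64 * 1024)}
print(sorted(touched))  # [0, 1, 2, 3]
```

Large sequential transfers keep every drive busy at once, which is why striping scales sequential throughput with the number of drives.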

How It Works

Intel's vROC can support up to 20 storage devices, but we won't see enthusiast motherboards with that many slots anytime soon. That's where products like the HighPoint SSD7100 Series come in. Several companies will release PCI Express cards that house up to four NVMe SSDs, each using PCIe 3.0 x4. The cards use a special chip from PLX (now part of Avago) to split the PCIe 3.0 x16 lanes of a single slot into four x4 links.
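PLX parts are PCIe switches, so one way to reason about a card like this is a two-level cap: each drive is limited by its own x4 link, and all four together are limited by the x16 upstream link. The sketch below assumes a 3,500 MB/s sequential-read rating for each 960 Pro and ignores protocol overhead; the function name is hypothetical.

```python
PCIE3_LANE_MB_S = 8e9 * (128 / 130) / 8 / 1e6  # ~984.6 MB/s per lane, one direction


def switched_throughput(drive_mb_s: list[float], upstream_lanes: int = 16,
                        downstream_lanes: int = 4) -> float:
    """Best-case aggregate throughput behind a PCIe switch.

    Each drive is capped by its own downstream link, and the sum of all
    drives is capped by the upstream link to the CPU.
    """
    per_link = downstream_lanes * PCIE3_LANE_MB_S
    downstream_total = sum(min(d, per_link) for d in drive_mb_s)
    return min(downstream_total, upstream_lanes * PCIE3_LANE_MB_S)


rated = [3_500.0] * 4  # assumed per-drive sequential-read rating, MB/s
print(f"{switched_throughput(rated):,.0f} MB/s")                    # 14,000 MB/s: drives are the limit
print(f"{switched_throughput(rated, upstream_lanes=8):,.0f} MB/s")  # 7,877 MB/s: an x8 uplink would be the limit
```

With a full x16 uplink, the drives themselves set the ceiling, which fits with the nearly 13,000 MB/s we measured.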

The ability to split PCIe lanes has been around for some time in the server market. The technology is trickling down to consumer products, and some existing motherboards already have the feature. Currently, though, it makes sense only on the X99 and X299 platforms. We managed to get it to work on products as old as Z87, but you can't run a discrete video card alongside it because Intel's consumer platform is stingy with PCI Express lanes: the processor provides only 16, and the card needs all of them.
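The lane shortage is easy to see as a simple budget. This sketch assumes 16 CPU lanes for a mainstream Z87/Z97-class processor and 44 for a high-end Skylake-X part, and it checks only whether every device can get its full link width at once; real boards often fall back to narrower links instead of refusing to work.

```python
def fits_lane_budget(cpu_lanes: int, devices: dict[str, int]) -> bool:
    """True if the CPU can give every device its full link width at the same time."""
    return sum(devices.values()) <= cpu_lanes


build = {"graphics card": 16, "quad M.2 card": 16}

print(fits_lane_budget(16, build))  # False: a 16-lane mainstream CPU runs out
print(fits_lane_budget(44, build))  # True: room to spare on a 44-lane HEDT CPU
```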

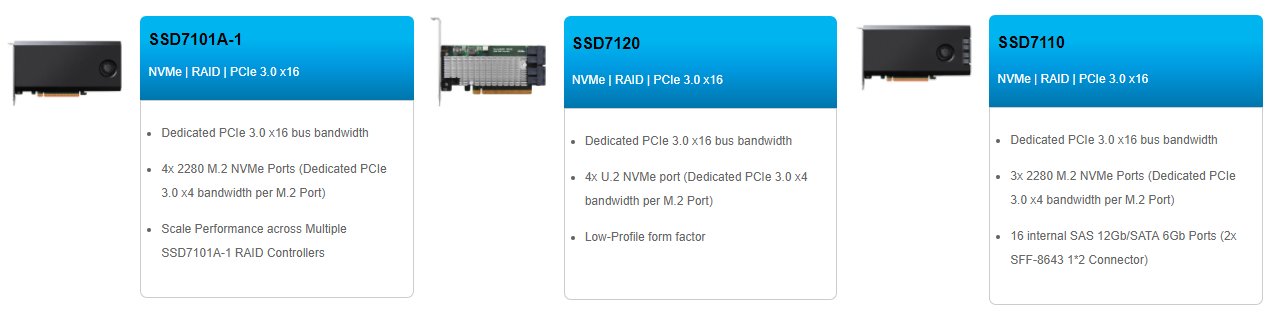

The SSD7100 Series consists of four devices, but only three are listed on the website. The SSD7120 and SSD7110 use the U.2 cable specification. The SSD7120 connects to four NVMe SSDs, while the SSD7110 attaches sixteen SAS drives using the older AHCI protocol.

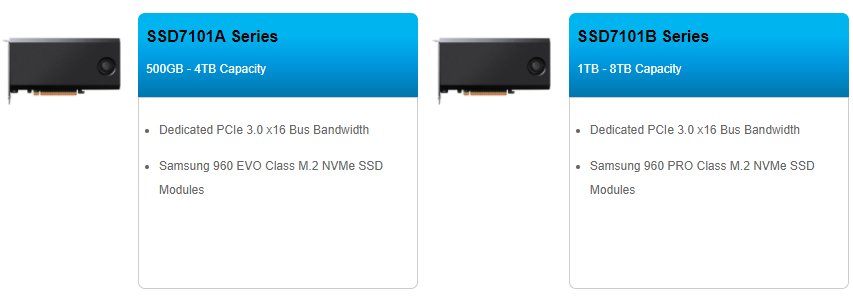

The SSD7101A and its variants are different. These cards hold four M.2 NVMe SSDs each, and you can install more than one in a PC. The SSD7101A ships with Samsung 960 EVO SSDs pre-installed, and the SSD7101B ships with 960 Pro SSDs, with up to 8TB of capacity.

HighPoint has only certified Samsung 960 Series NVMe SSDs because it says that's the only product people want to use for high-performance systems.

The SSD7101A-1 is a $399 add-in card that ships without SSDs. You can use 960 Series products or give other M.2 NVMe drives a shot. A rumor emerged at Computex that Intel would support only Intel SSDs with the vROC feature, but that is false. You will need to purchase a hardware dongle to enable RAID 5 and RAID 10, though.
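For a sense of what the dongle-gated RAID levels cost in capacity, here is a sketch of the standard usable-capacity arithmetic for identical drives, using four 2TB SSDs as in a fully loaded SSD7101B. It is generic RAID math, not a description of how vROC itself lays out an array.

```python
def usable_capacity_tb(level: str, drives: int, drive_tb: float) -> float:
    """Usable capacity for common RAID levels built from identical drives."""
    if level == "RAID 0":
        return drives * drive_tb  # striping only, no redundancy
    if level == "RAID 1":
        return drive_tb  # every member holds a full copy
    if level == "RAID 5":
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        return (drives - 1) * drive_tb  # one drive's worth of distributed parity
    if level == "RAID 10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        return drives / 2 * drive_tb  # striped mirrors
    raise ValueError(f"unsupported level: {level}")


for level in ("RAID 0", "RAID 5", "RAID 10"):
    print(level, usable_capacity_tb(level, drives=4, drive_tb=2.0), "TB")
# RAID 0 8.0 TB
# RAID 5 6.0 TB
# RAID 10 4.0 TB
```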

This is just the beginning of our time with the HighPoint SSD7101A.


Chris Ramseyer was a senior contributing editor for Tom's Hardware. He tested and reviewed consumer storage.


msroadkill612: Hmm, I wonder what the benchmarks would be for a similar NVMe array on the native NVMe support of TR or Epyc.

I suspect the results are similar.

All sounds suss. They imply they are avoiding the limitations of PCIe 3.0, yet they are using a PCIe 3.0 device, the Samsung 960 Pro.

I think all Intel is doing is remedying a deficiency in its native NVMe ports. The slide you show of the Intel PCH is a disgrace to Intel. What lies! To pretend to have all those ports available, yet they haven't a hope of working concurrently. Either of the two NVMe ports they claim would completely max out the four lanes to the PCH. It's underhanded deception.

It also bears noting that this card isn't free, but a canny AMD X399 motherboard shopper could get at least three full-strength four-lane NVMe ports included, judging from the few motherboards I have seen.

Kraszmyl, replying to msroadkill612:

Well, I can at least show you what it looks like on X99: http://imgur.com/a/a68Sd

USAFRet, quoting another reader: "This thing should be in the $199-$250 price range without SSDs. The PLX chip is $80-$100 as I recall; the rest is dirt cheap. Asking $400 for an empty card is way too much. They don't even include an emergency rechargeable battery with the card at that price...

Remember that some motherboards with PLX chips cost around $400, and that includes sound, LAN, chipset, slots, power components, socket, PLX chip, ports, M.2, and a much bigger PCB with some 8 layers, etc.

Bring that card price down, please. $250 is OK."

Please justify your pricing thoughts.

If you need 8TB of SSD in RAID 0, and have the backup arrangements that RAID 0 requires, the difference between $200 and $400 for this interface card is not even a flyspeck.

The $200 you think it is overpriced by is less than a single day's pay for the tech who will install and manage this.

Trivial.

kanewolf, replying to the same price complaint quoted above:

If you believe it is overpriced, THEN DON'T BUY ONE. If enough people share that mindset, the vendor will either have to lower the price or fail as a company. The vendor has done market research and believes that it can MAXIMIZE its profits at the price it has set.

USAFRet, quoting another reader:

"This is not hardware RAID; it is really only a card that splits PCIe x16 into four PCIe x4 links.

It is the same as using four x4 PCIe slots on the motherboard, nothing more. It just saves you space: instead of using four slots, you use one x16 slot. That's all there is to it."

OK...so what?

(even if that were actually true)

Their marketing dept has put a "retail" price of $400 on it.

As I said, that theoretical $400 MSRP means exactly squat.

You don't just 'buy this' and slam it into your uber gaming PC, along with another $2,000 of SSDs.

Your consultant simply provides you with a solution that meets your data and performance needs, of which this thing is a part. Probably not even an individual line item on the invoice.

USAFRet, quoting another reader:

"And I can use this for 1TB, using four 256GB NVMe M.2 SSDs, to get 13,000 MB/s. This is not only for 8TB..."

Eventually, concepts like this filter down to the consumer level.

Just like NVMe

Just like SSD

Just like HDD

Just like 64bit/32bit/16bit....

That this particular thing is $400 is meaningless.

Lastly, I invite you to describe your particular use case that justifies 4 x 256GB NVMe drives in a RAID array.

Actual numbers (not theoretical benchmarks) with and without would be helpful...

The real world jump from floppy drive to HDD was huge. Speed AND size

The real world jump from HDD to SSD was huge, in terms of speed

The real world jump from SSD to NVMe, big, but not as large as before.

SSD + RAID 0? Useless, in the vast majority of actual use cases

NVMe + RAID 0? So far, useless in the vast majority of actual use cases.

Optane? A rehash of IRST, just with a faster cache tech.

Now...if you're a movie production house, in early development of Cars 5, or Toy Story 8....this may be useful to you now.

Otherwise...it is simply "nice to know about".

dE_logics, quoting the article: "can't boot from the software array"

Oh, gosh. One OS, one limit, and one world. I knew it. All this because you want to make Windows somehow faster and extend the time between reformats.

USAFRet, quoting another reader:

"LOL... the same thing was said when we moved from 500 MB/s SATA SSDs to 3,000 MB/s NVMe. It is the same topic repeated again and again...

Well, welcome to the future :) When it is cheap to have crazy speed, it is nice to have, and it is becoming cheaper."

I guess you missed the part where I said exactly the same thing.

Whatever...I have a deck to go finish deconstructing before it rains again.

Jayson_15: RAID 0 is so risky, though, even more so than a single drive, because you're spreading the potential for failure across multiple drives. Make frequent backups.
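Jayson_15's point can be quantified with a simple model. Assuming each drive fails independently with the same hypothetical probability p over some period, a RAID 0 array survives only if every member survives, so the chance of losing the array is 1 - (1 - p)^n. The 1% figure below is an illustrative assumption, not a measured failure rate.

```python
def array_loss_probability(p_drive: float, drives: int) -> float:
    """Chance that a RAID 0 array loses data, assuming independent drive failures.

    RAID 0 survives only if every member survives, so P(loss) = 1 - (1 - p)^n.
    """
    if not 0.0 <= p_drive <= 1.0:
        raise ValueError("p_drive must be a probability")
    return 1.0 - (1.0 - p_drive) ** drives


p = 0.01  # hypothetical per-drive failure probability over some period
print(f"1 drive:  {array_loss_probability(p, 1):.4f}")  # 1 drive:  0.0100
print(f"4 drives: {array_loss_probability(p, 4):.4f}")  # 4 drives: 0.0394
```

Under this model, four drives nearly quadruple the risk of losing everything, which is why frequent backups matter so much for RAID 0.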