Intel Confirms HBM Memory for Sapphire Rapids, Details Ponte Vecchio Packaging

CPUs, GPUs and HBM, oh my!

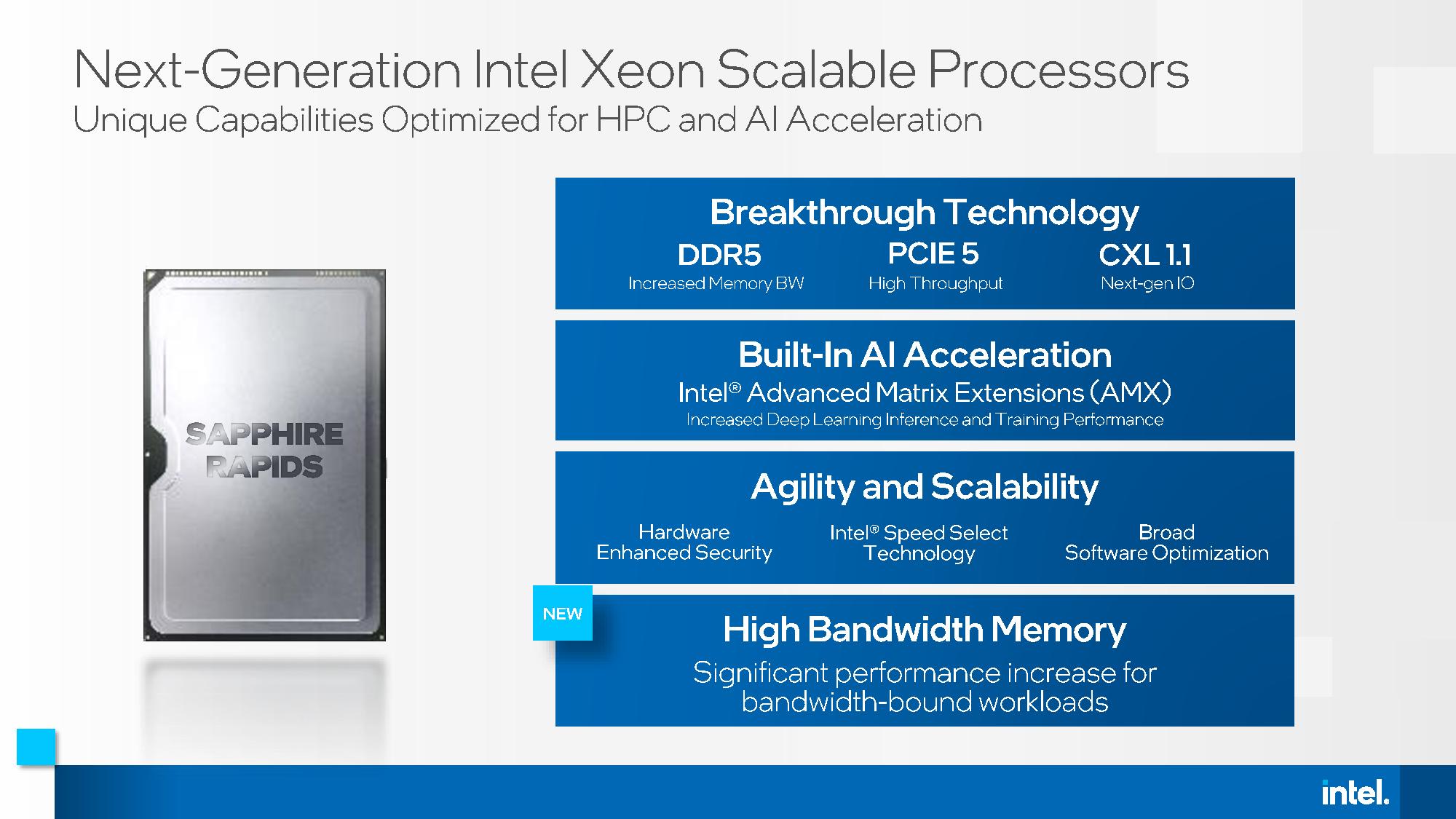

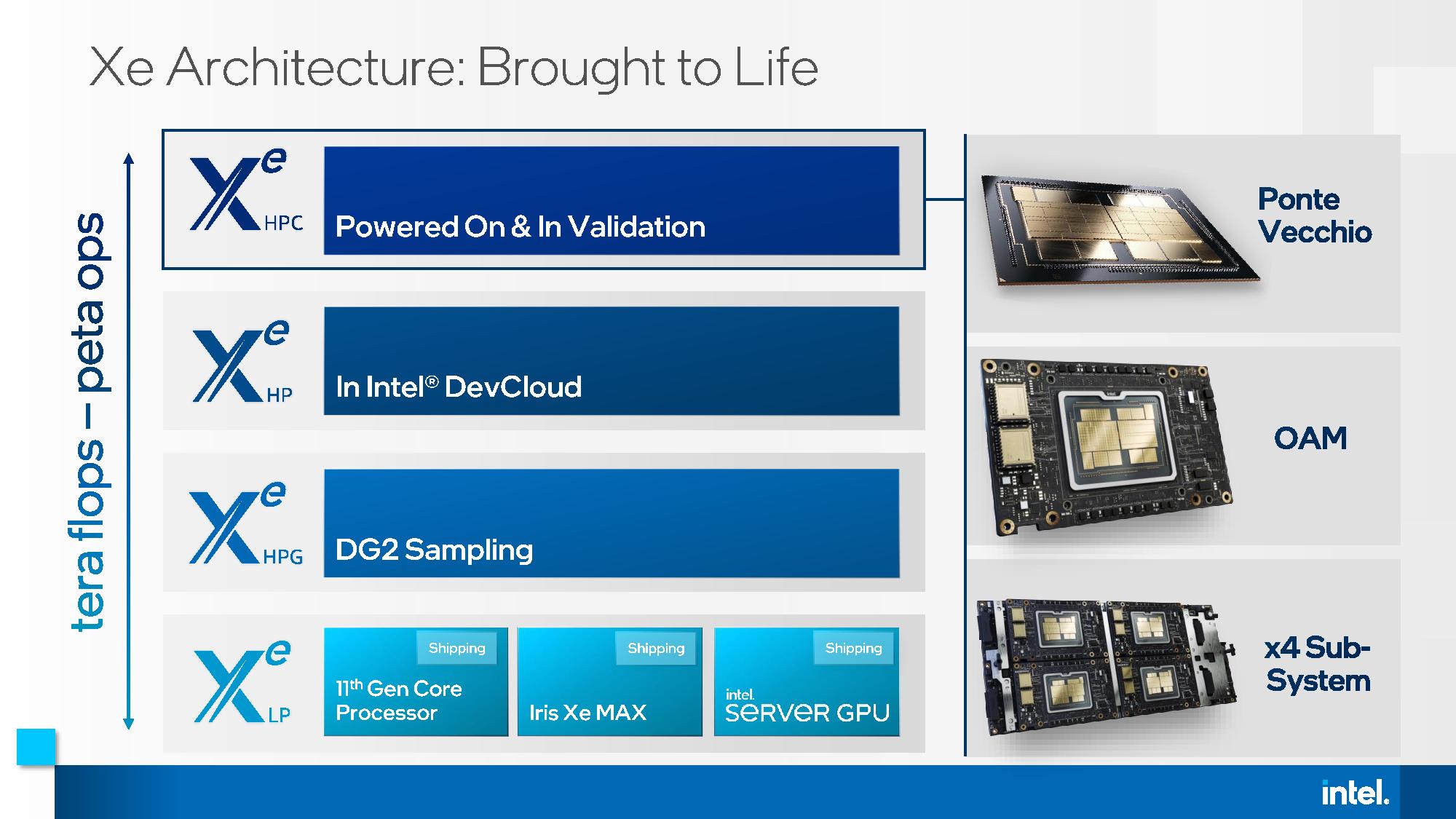

Intel made a slew of announcements at the International Supercomputing Conference (ISC) 2021 today, talking up its broad range of solutions for supercomputing and HPC applications. The big news on the hardware front is that Intel's Sapphire Rapids data center chips will come with HBM memory, DDR5, PCIe 5.0, and support for CXL 1.1. Additionally, Intel confirmed that its Ponte Vecchio GPUs will come in an OAM form factor that purportedly consumes up to 600W per package. The Ponte Vecchio GPUs will come in subsystems of either four or eight GPUs.

Intel Xeon Sapphire Rapids Coming with HBM (High Bandwidth Memory)

Intel has trickled out plenty of information about its Sapphire Rapids Xeon server chips, some intentionally, some unintentionally. We already know that the chips will come with the Golden Cove architecture etched onto the 10nm Enhanced SuperFin process. Intel first powered the chips on in June 2020, and they come with DDR5, PCIe 5.0, and CXL 1.1 support and will drop into Eagle Stream platforms. The chips also support Intel Advanced Matrix eXtensions (AMX), which are targeted at improving performance in training and inference workloads.

Now Intel has divulged that the chips will come with HBM memory, though the company hasn't specified how much capacity the chips will support or what type of memory they will use. This additional memory will ride on the same package as the Sapphire Rapids CPU cores, though it isn't yet clear if the HBM will be under the heatspreader or connected as part of a larger package.

Intel has confirmed that the HBM-equipped chips will be available to all of its customers as part of the standard Sapphire Rapids product stack. They will come to market a bit later than the HBM-less variants, though in the same rough time frame.

Intel hasn't tipped its hand yet on how it will expose the HBM to the operating system: this could conceivably be as an L4 cache or as an adjunct to standard main memory. However, Intel did divulge that the chips can be used either with or without main memory (DDR5), meaning there are likely several options for various memory configurations.

Intel says the HBM memory will help with memory-bound workloads that aren't as sensitive to core counts, perhaps signaling these chips will come with fewer cores than standard models. That makes sense given the need to possibly accommodate the HBM under the same heatspreader. Target workloads include computational fluid dynamics, climate and weather forecasting, AI training and inference, big data analytics, in-memory databases, and storage applications.

Intel hasn't divulged more details, but recent leaks point to the chips coming with up to 56 cores and 64GB of HBM2E memory in a multi-chip package with EMIB interconnects between the dies.

Intel Ponte Vecchio OAM Form Factors

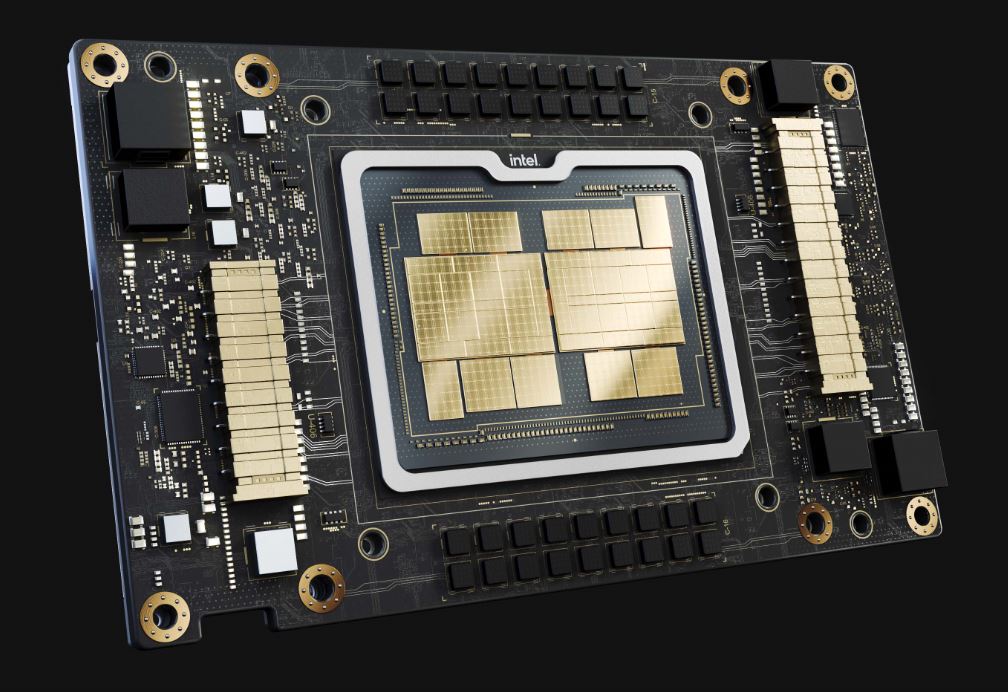

Intel has announced that its Xe HPC silicon, which it uses to create the 47-tile Ponte Vecchio chips with 100 billion transistors, is now being validated for systems in new multi-GPU implementations. Additionally, the company shared that the chips will come in OAM (Open Accelerator Module) form factors, confirming earlier reports.


The OAM form factor is designed for use in the OCP (Open Compute Project) ecosystem, and consists of a GPU package mounted on a carrier that is then connected to the motherboard through a mezzanine connector. This type of arrangement is similar to Nvidia's SXM form factor.

These types of chip packages are far more common in servers than add-in card form factors because they allow for more powerful cooling systems than found on traditional card form factors, while also providing an optimized method of routing power to the OAM package through a PCB (as opposed to PCIe cabling).

Leaked Intel documents have listed the Ponte Vecchio OAM packages at a peak of 600W, and all signs point to at least some of the GPU models using liquid cooling. However, it is conceivable that less-powerful variants may have air cooling options.

Intel will offer nodes with either four or eight OAM packages installed, referred to as x4 or x8 subsystems. Those nodes will then be integrated into its customers' servers. Intel is currently validating these multi-GPU designs.
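As a rough illustration of what those subsystem sizes could mean for power delivery, the short sketch below multiplies the leaked 600W peak figure by the number of OAM packages. The 600W value is the unconfirmed number from leaked documents, and the function name is hypothetical; the result covers the GPU packages only and ignores CPUs, memory, and cooling overhead.

```python
# Assumption: 600 W is the leaked peak figure per OAM package, not a confirmed spec.
PEAK_WATTS_PER_OAM = 600


def subsystem_peak_watts(oam_count: int, watts_per_oam: int = PEAK_WATTS_PER_OAM) -> int:
    """Return an upper bound on GPU package power for one subsystem.

    Hypothetical helper for illustration: it covers the OAM packages only,
    not CPUs, memory, or cooling.
    """
    if oam_count <= 0:
        raise ValueError("oam_count must be a positive integer")
    return oam_count * watts_per_oam


if __name__ == "__main__":
    for count in (4, 8):  # the x4 and x8 subsystems
        watts = subsystem_peak_watts(count)
        print(f"x{count} subsystem: up to {watts} W for the GPU packages alone")
```

If the leaked figure holds, an x8 subsystem would need to deliver and cool several kilowatts for the GPUs alone, which fits with the expectation that at least some models will use liquid cooling.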

The first Ponte Vecchio GPUs will appear in the Department of Energy's Aurora supercomputer, but that exascale system has been delayed several times (six years and counting). The latest delay stems from Intel's 7nm process setbacks, which ultimately resulted in 16 of the Ponte Vecchio compute tiles being produced by TSMC.

Intel confirmed that Aurora doesn't use the x4 or x8 form factors described above. Instead, each Aurora blade will consist of six Ponte Vecchio GPUs paired with two Sapphire Rapids CPUs (a 3:1 GPU-to-CPU ratio). Intel also has another Ponte Vecchio win with the SuperMUC-NG supercomputer in Munich, Germany. This system will have 240 new nodes with Ponte Vecchio paired with Sapphire Rapids, but it isn't clear if those use the x4 or x8 sleds.
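For readers checking the blade arithmetic, the ratio is GPUs to CPUs, reduced to lowest terms. A minimal sketch follows; the helper name is hypothetical and the counts come from the blade configuration described above.

```python
from math import gcd


def reduce_ratio(first: int, second: int) -> tuple[int, int]:
    """Reduce a ratio of two positive counts to lowest terms."""
    if first <= 0 or second <= 0:
        raise ValueError("both counts must be positive integers")
    divisor = gcd(first, second)
    return first // divisor, second // divisor


if __name__ == "__main__":
    # Six Ponte Vecchio GPUs and two Sapphire Rapids CPUs per Aurora blade.
    gpus, cpus = reduce_ratio(6, 2)
    print(f"GPU-to-CPU ratio per blade: {gpus}:{cpus}")
```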

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


JayNor: "each Aurora blade will consist of six Ponte Vecchio GPUs paired with two Sapphire Rapids CPUs (6:1 ratio)."

6:1 ratio of what?