Nvidia GeForce GTX 1080 Pascal Review

The Display Pipeline, SLI And GPU Boost 3.0

Pascal's Display Pipeline: HDR-Ready

When we met with AMD in Sonoma, CA, late last year, the company teased some details of its Polaris architecture, one of which was a display pipeline ready to support high dynamic range content and displays.

It comes as no surprise that Nvidia’s Pascal architecture features similar functionality, some of which was even available in Maxwell. For instance, GP104’s display controller carries over 12-bit color, BT.2020 wide color gamut support, the SMPTE 2084 electro-optical transfer function and HDMI 2.0b with HDCP 2.2.

To that list, Pascal adds accelerated HEVC decoding at 4K60p and 10/12-bit color through fixed-function hardware, which would seem to indicate support for Version 2 of the HEVC standard. Nvidia previously used a hybrid approach that leveraged software, and that approach was limited to eight bits of color information per channel. But we’re guessing the company's push to support Microsoft’s controversial PlayReady 3.0 specification necessitated a faster and more efficient solution.
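To put the jump from eight to 10 or 12 bits per channel in perspective, this short Python sketch counts the distinct levels each bit depth allows per color channel and the resulting number of RGB combinations. It assumes three equally deep channels (R, G, B) and says nothing about how any particular decoder stores samples internally.

```python
def levels_per_channel(bits: int) -> int:
    """Distinct code values one color channel can take at this bit depth."""
    return 2 ** bits


def total_rgb_colors(bits_per_channel: int) -> int:
    """Combinations across three equally deep channels (R, G, B assumed)."""
    return levels_per_channel(bits_per_channel) ** 3


for bits in (8, 10, 12):
    print(f"{bits}-bit: {levels_per_channel(bits)} levels/channel, "
          f"{total_rgb_colors(bits):,} colors")
# 8-bit: 256 levels/channel, 16,777,216 colors
# 10-bit: 1024 levels/channel, 1,073,741,824 colors
# 12-bit: 4096 levels/channel, 68,719,476,736 colors
```

Four times as many steps per channel at 10 bits is what lets HDR content stretch over a much wider brightness range without visible banding.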

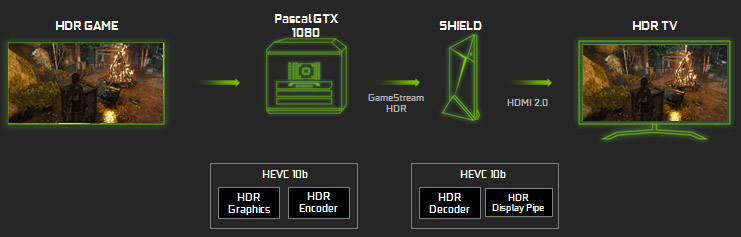

The architecture also supports HEVC encoding in 10-bit color at 4K60p for recording or streaming in HDR, and Nvidia already has what it considers a killer app for this. Using GP104’s encoding hardware and upcoming GameStream HDR software, you can stream high dynamic range-enabled games to a Shield appliance attached to an HDR-capable television in your house. The Shield is equipped with its own HEVC decoder with support for 10-bit color (10 bits per channel), preserving that pipeline from end to end.
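For readers curious what the SMPTE 2084 electro-optical transfer function actually does, here is a minimal Python sketch of that curve (often called PQ), which maps a normalized signal value to absolute luminance of up to 10,000 cd/m². The constants are the ones defined in ST 2084; the helper `code_to_signal` is an illustrative addition that assumes full-range code values, whereas video is often carried in limited range. The sketch says nothing about how GP104's display hardware implements the curve.

```python
# SMPTE ST 2084 (PQ) EOTF: normalized non-linear signal E' in [0, 1]
# -> absolute luminance in cd/m^2.
M1 = 2610 / 16384          # 0.1593017578125
M2 = 2523 / 4096 * 128     # 78.84375
C1 = 3424 / 4096           # 0.8359375
C2 = 2413 / 4096 * 32      # 18.8515625
C3 = 2392 / 4096 * 32      # 18.6875
PEAK_NITS = 10000.0        # PQ encodes luminance up to 10,000 cd/m^2


def pq_eotf(signal: float) -> float:
    """Convert a normalized PQ signal value (0.0-1.0) to luminance in cd/m^2."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be in [0, 1]")
    e_pow = signal ** (1 / M2)
    numerator = max(e_pow - C1, 0.0)
    denominator = C2 - C3 * e_pow  # stays positive for signal in [0, 1]
    return PEAK_NITS * (numerator / denominator) ** (1 / M1)


def code_to_signal(code: int, bits: int = 10) -> float:
    """Normalize an integer code value to [0, 1] (full range assumed)."""
    return code / (2 ** bits - 1)


if __name__ == "__main__":
    for code in (0, 256, 512, 768, 1023):
        print(code, round(pq_eotf(code_to_signal(code)), 1))
```

A signal value of 0.5 lands at roughly 92 cd/m², which shows how much of PQ's code space is spent on darker tones, where the eye is most sensitive to banding.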

| Feature | GeForce GTX 1080 | GeForce GTX 980 |
|---|---|---|
| H.264 Encode | Yes (2x 4K60p) | Yes |
| HEVC Encode | Yes (2x 4K60p) | Yes |
| 10-bit HEVC Encode | Yes | No |
| H.264 Decode | Yes (4K120p up to 240 Mb/s) | Yes |
| HEVC Decode | Yes (4K120p/8K30p up to 320 Mb/s) | No |
| VP9 Decode | Yes (4K120p up to 320 Mb/s) | No |
| 10/12-bit HEVC Decode | Yes | No |

Complementing its HDMI 2.0b support, GeForce GTX 1080 is DisplayPort 1.2-certified and DP 1.3/1.4-ready, trumping Polaris’ DP 1.3-capable display controller before it sees the light of day. Fortunately for AMD, version 1.4 of the spec doesn’t define a faster transmission mode—HBR3’s 32.4 Gb/s is still tops.

As mentioned previously, the GeForce GTX 1080 Founders Edition card sports three DP connectors, an HDMI 2.0b port and one dual-link DVI output. As with the GTX 980, it’ll drive up to four independent monitors at a time. Instead of topping out at 5120x3200 using two DP 1.2 cables, though, the 1080 supports a maximum resolution of 7680x4320 at 60Hz.
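These resolution limits line up with some simple arithmetic. This Python sketch compares the raw active-pixel data rate of each mode (24 bits per pixel, blanking intervals ignored, so real requirements are somewhat higher) against the usable payload of a four-lane DisplayPort link after 8b/10b coding. HBR3's 32.4 Gb/s raw rate works out to 25.92 Gb/s of payload; DP 1.2's HBR2 mode gives 17.28 Gb/s.

```python
LANES = 4
ENCODING_EFFICIENCY = 8 / 10  # 8b/10b channel coding used by HBR2 and HBR3

# Raw per-lane rates in Gb/s
LINK_RATE_GBPS_PER_LANE = {
    "HBR2 (DP 1.2)": 5.4,
    "HBR3 (DP 1.3/1.4)": 8.1,
}


def payload_gbps(per_lane_gbps: float, lanes: int = LANES) -> float:
    """Usable data rate of the link after 8b/10b coding overhead."""
    return per_lane_gbps * lanes * ENCODING_EFFICIENCY


def pixel_rate_gbps(width: int, height: int, refresh_hz: int, bpp: int = 24) -> float:
    """Raw active-pixel data rate in Gb/s; blanking intervals are ignored."""
    return width * height * refresh_hz * bpp / 1e9


MODES = {
    "5120x3200 @ 60Hz": (5120, 3200, 60),
    "7680x4320 @ 60Hz": (7680, 4320, 60),
}

for name, (w, h, hz) in MODES.items():
    need = pixel_rate_gbps(w, h, hz)
    for link, rate in LINK_RATE_GBPS_PER_LANE.items():
        have = payload_gbps(rate)
        print(f"{name}: {need:.2f} Gb/s needed vs {have:.2f} Gb/s on one {link} link "
              f"({need / have:.2f}x)")
```

5120x3200 at 60Hz needs about 23.6 Gb/s, more than a single HBR2 link carries, which is consistent with the two DP 1.2 cables mentioned above. 7680x4320 at 60Hz needs about 47.8 Gb/s uncompressed, more than one HBR3 link, so it presumably relies on multiple cables or compression; this section doesn't say which.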

SLI: Now Officially Supporting Two GPUs

Traditionally, Nvidia’s highest-end graphics cards came armed with a pair of connectors up top for roping together two, three or four boards in SLI. Enthusiasts came to expect the best scaling from dual-GPU configurations. Beyond that, gains started tapering off as potential pitfalls multiplied. But some enthusiasts still pursued three- and four-way setups for ever-higher frame rates and the associated bragging rights.


Times are changing, though. According to Nvidia, GeForce GTX 1080 officially supports only two-way SLI, a consequence of the challenges in achieving meaningful scaling in the latest games (no doubt related to DirectX 12). So why does the card still have two connectors? Using new SLI bridges, both connectors can be used simultaneously to enable a dual-link mode. Not only do you get the benefit of a second interface, but Pascal also accelerates the I/O to 650MHz, up from the previous generation’s 400MHz. As a result, bandwidth between processors more than doubles.
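If you assume bridge bandwidth scales linearly with both the number of links and the interface clock (a simplification; Nvidia didn't publish bandwidth figures here), the "more than doubles" claim checks out. The clock increase alone is worth 650/400 = 1.625x, and the second link doubles that:

```latex
\frac{B_{\text{new}}}{B_{\text{old}}}
  = \frac{2~\text{links} \times 650\,\text{MHz}}{1~\text{link} \times 400\,\text{MHz}}
  = \frac{1300}{400}
  = 3.25
```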

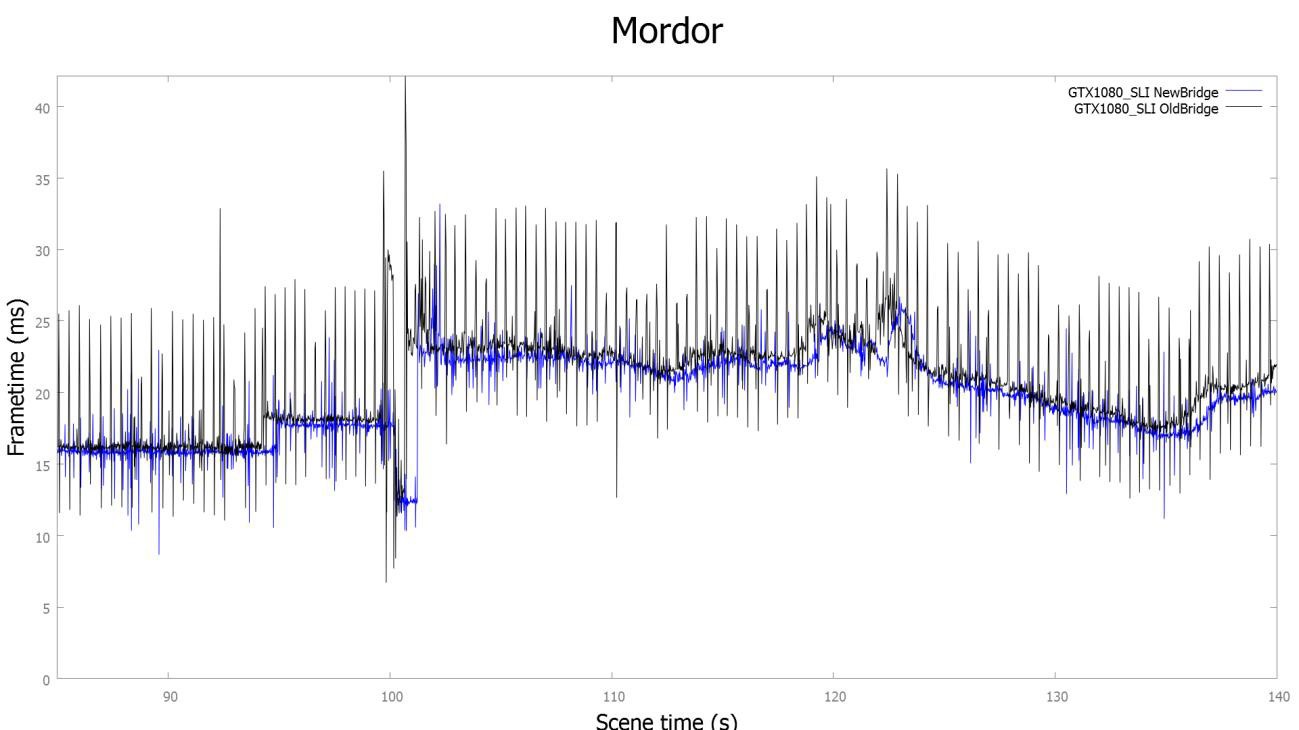

Many gamers won’t see the benefit of a faster link. Its impact is felt primarily at high resolutions and refresh rates. However, Nvidia did share an FCAT capture showing two GeForce GTX 1080s playing Middle-earth: Shadow of Mordor across three 4K displays. Linking both cards with the old bridge resulted in consistent spikes in frame time, suggesting a predictable problem with timing that manifests as stuttering. The spikes weren’t as common or as severe using the new bridge.
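Frame-time analysis like this comes down to examining how long each frame takes and flagging the outliers. As a rough illustration, this Python sketch marks any frame that takes more than twice the median frame time as a spike. The 2x threshold and both traces are invented for illustration; they are not Nvidia's capture data or FCAT's own scoring method.

```python
from statistics import median


def find_spikes(frame_times_ms: list[float], factor: float = 2.0) -> list[int]:
    """Indices of frames slower than `factor` times the median frame time."""
    if not frame_times_ms:
        return []
    baseline = median(frame_times_ms)
    return [i for i, t in enumerate(frame_times_ms) if t > factor * baseline]


def spike_rate(frame_times_ms: list[float], factor: float = 2.0) -> float:
    """Fraction of frames flagged as spikes."""
    if not frame_times_ms:
        return 0.0
    return len(find_spikes(frame_times_ms, factor)) / len(frame_times_ms)


# Hypothetical, made-up traces in milliseconds (illustration only).
old_bridge = [16.7, 16.9, 41.0, 16.5, 16.8, 39.5, 16.6, 16.7, 40.2, 16.8]
new_bridge = [16.7, 16.8, 16.6, 16.9, 17.1, 16.7, 16.6, 35.0, 16.8, 16.7]

print("old bridge spikes at frames", find_spikes(old_bridge))  # [2, 5, 8]
print("new bridge spikes at frames", find_spikes(new_bridge))  # [7]
```

Using the median rather than the mean keeps the baseline from being dragged upward by the very spikes the check is looking for.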

Nvidia adds that its SLI HB bridges aren’t the only ones able to support dual-link mode. Existing LED-lit bridges may also run at up to 650MHz if you use them on Pascal-based cards. Really, the flexible/bare PCB bridges are the ones you’ll want to transition away from if you’re running at 4K or higher. Consult the chart below for additional guidance from Nvidia:

| Bridge | 1920x1080 | 2560x1440 @ 60Hz | 2560x1440 @ 120Hz+ | 4K | 5K | Surround |
|---|---|---|---|---|---|---|
| Standard Bridge | ✓ | ✓ | | | | |
| LED Bridge | ✓ | ✓ | ✓ | ✓ | | |
| High-Bandwidth Bridge | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
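One way to use Nvidia's chart is to encode it as data and pick the least capable bridge it marks for your setup. This Python sketch does that; the setup labels mirror the chart's columns, and the ordering (standard, then LED, then high-bandwidth) follows the chart's rows.

```python
# Nvidia's bridge chart as data, ordered from least to most capable bridge.
BRIDGE_SUPPORT = {
    "Standard Bridge": {"1920x1080", "2560x1440 @ 60Hz"},
    "LED Bridge": {"1920x1080", "2560x1440 @ 60Hz", "2560x1440 @ 120Hz+", "4K"},
    "High-Bandwidth Bridge": {"1920x1080", "2560x1440 @ 60Hz", "2560x1440 @ 120Hz+",
                              "4K", "5K", "Surround"},
}


def minimum_bridge(setup: str) -> str:
    """Least capable bridge the chart marks for the given display setup."""
    for bridge, setups in BRIDGE_SUPPORT.items():  # dicts keep insertion order
        if setup in setups:
            return bridge
    raise ValueError(f"unknown setup: {setup!r}")


print(minimum_bridge("4K"))        # LED Bridge
print(minimum_bridge("Surround"))  # High-Bandwidth Bridge
```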

So what brought about this uncharacteristic about-face on three- and four-way configurations from a company all about selling hardware and driving higher performance? Cynically, you could say Nvidia doesn’t want to be held liable for a lack of benefit from three- and four-way SLI in a gaming market increasingly hostile to its rendering approach. But the company insists it’s looking out for the best interests of customers as Microsoft hands more control over multi-GPU configurations to game developers, who are in turn exploring technologies like split-frame rendering (SFR) instead of alternate-frame rendering (AFR).
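The difference between the two multi-GPU schemes is easiest to see in how work gets assigned. In this toy Python model, AFR hands whole frames to GPUs in round-robin order, while SFR splits every frame into horizontal bands, one per GPU. The even row split is a simplifying assumption; real implementations may size bands dynamically to balance load.

```python
def afr_assignment(frame_index: int, gpu_count: int) -> int:
    """Alternate-frame rendering: index of the GPU that renders this frame."""
    return frame_index % gpu_count


def sfr_bands(frame_height: int, gpu_count: int) -> list[tuple[int, int]]:
    """Split-frame rendering: (first_row, end_row_exclusive) band per GPU.

    Rows are divided as evenly as possible; leftover rows go to the first GPUs.
    """
    base, extra = divmod(frame_height, gpu_count)
    bands, start = [], 0
    for gpu in range(gpu_count):
        rows = base + (1 if gpu < extra else 0)
        bands.append((start, start + rows))
        start += rows
    return bands


print([afr_assignment(f, 2) for f in range(6)])  # [0, 1, 0, 1, 0, 1]
print(sfr_bands(2160, 2))                        # [(0, 1080), (1080, 2160)]
```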

Enthusiasts who don’t care about any of that and are hell-bent on breaking speed records using older software can still run GTX 1080 in three- and four-way setups. They’ll need to generate a unique hardware signature using software from Nvidia, which can then be used to request an “unlock” key. Of course, the new SLI HB bridges won’t work with more than two GPUs, so you’ll need to track down one of the older LED bridges built for three- and four-way setups, which can run at GP104’s 650MHz link rate.

A Quick Intro To GPU Boost 3.0

Eager to extract even more performance from its GPUs, Nvidia is fine-tuning its GPU Boost functionality yet again.

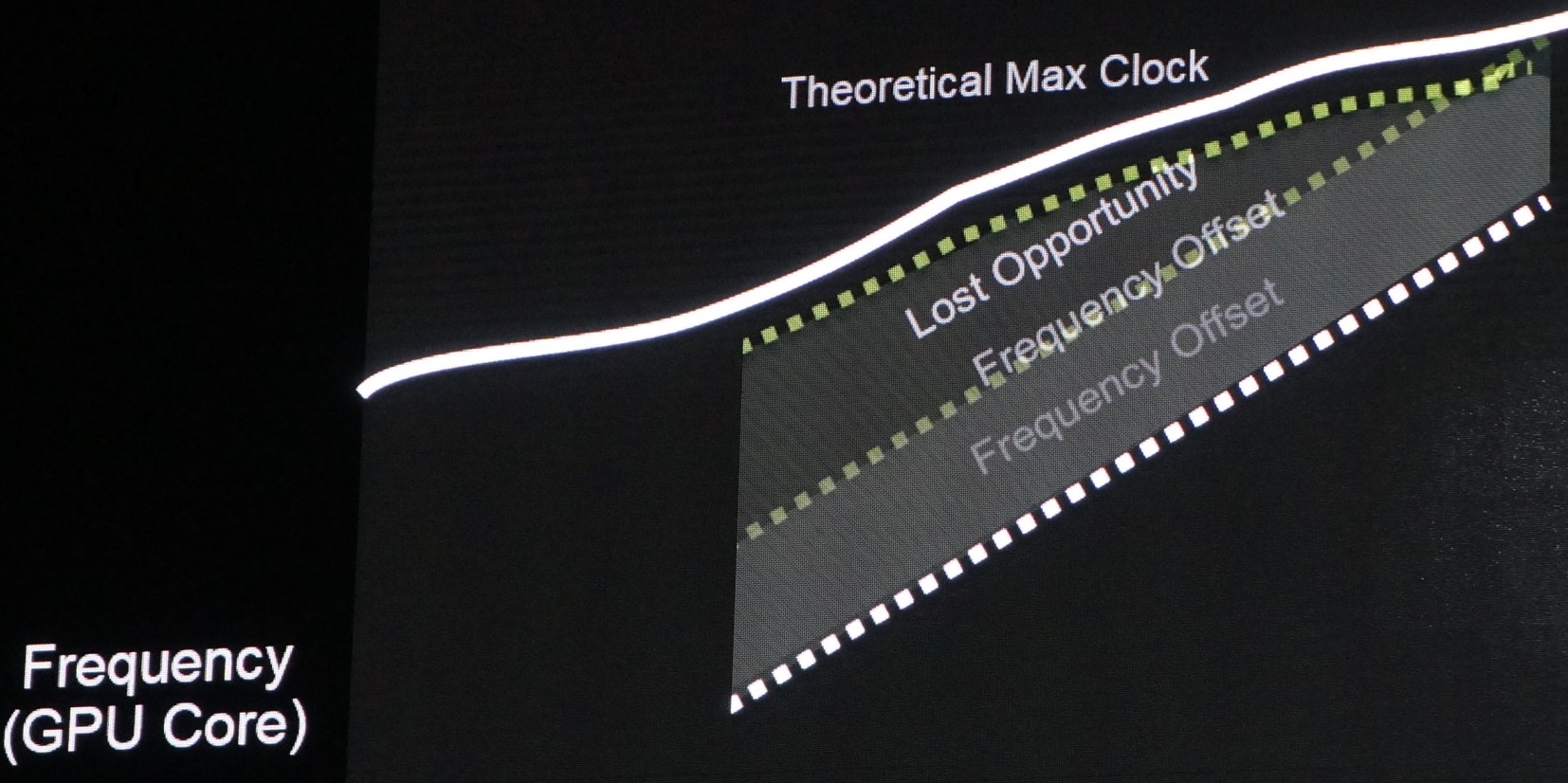

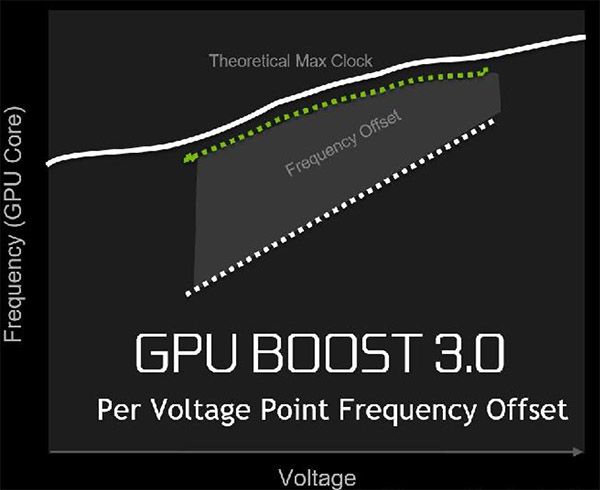

In the previous generation (GPU Boost 2.0), you could set a single fixed frequency offset, which shifted the entire voltage/frequency curve by the same amount. Any headroom a given voltage point had above that shifted line basically amounted to lost opportunity.
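A toy model makes that lost opportunity concrete. In this Python sketch every voltage, clock and stability limit is a hypothetical number. Because a single offset has to be safe at every point on the curve, it is capped by the point with the least headroom, and whatever the other points could have handled goes unused.

```python
# Hypothetical stock V/F curve: voltage (mV) -> clock (MHz)
STOCK_CURVE = {800: 1600, 900: 1750, 1000: 1850, 1050: 1900}

# Hypothetical highest stable clock this particular chip reaches at each point
STABLE_LIMIT = {800: 1800, 900: 1900, 1000: 1960, 1050: 2000}


def uniform_offset_curve(curve: dict[int, int], limits: dict[int, int]) -> dict[int, int]:
    """One offset for the whole curve, capped by the point with the least headroom."""
    offset = min(limits[v] - clk for v, clk in curve.items())
    return {v: clk + offset for v, clk in curve.items()}


def lost_headroom(curve: dict[int, int], limits: dict[int, int]) -> dict[int, int]:
    """MHz left unused at each voltage point after the uniform offset."""
    shifted = uniform_offset_curve(curve, limits)
    return {v: limits[v] - shifted[v] for v in curve}


print(uniform_offset_curve(STOCK_CURVE, STABLE_LIMIT))
# {800: 1700, 900: 1850, 1000: 1950, 1050: 2000}
print(lost_headroom(STOCK_CURVE, STABLE_LIMIT))
# {800: 100, 900: 50, 1000: 10, 1050: 0}
```

With these made-up numbers the uniform offset is 100MHz, leaving 100, 50, 10 and 0MHz of headroom unused at the four voltage points.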

Now, GPU Boost 3.0 lets you define frequency offsets for individual voltage points, increasing clock rate up to a certain temperature target. And rather than forcing you to experiment with stability up and down the curve, Nvidia accommodates overclocking scanners that can automate the process, creating a custom V/F curve unique to your specific GPU.
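Nvidia doesn't detail here how these scanners work, so the following is only a guess at the general shape: for each voltage point, binary-search the largest offset that passes a stability test, then assemble the results into a custom curve. `is_stable` is a hypothetical callback standing in for a real stress test, the toy chip's numbers are made up, and the sketch ignores the temperature target entirely. It also assumes zero offset is always stable and that once an offset fails, every larger one fails too.

```python
from typing import Callable

# Hypothetical signature: is_stable(voltage_mv, offset_mhz) -> bool
StabilityTest = Callable[[int, int], bool]


def scan_point(voltage_mv: int, is_stable: StabilityTest,
               max_offset_mhz: int = 300, step_mhz: int = 10) -> int:
    """Largest offset (a multiple of step_mhz, at most max_offset_mhz) that passes."""
    lo, hi = 0, max_offset_mhz // step_mhz  # search in units of step_mhz
    while lo < hi:
        mid = (lo + hi + 1) // 2  # round up so the loop always makes progress
        if is_stable(voltage_mv, mid * step_mhz):
            lo = mid
        else:
            hi = mid - 1
    return lo * step_mhz


def build_custom_curve(stock: dict[int, int], is_stable: StabilityTest) -> dict[int, int]:
    """Apply an individually scanned offset to every voltage point."""
    return {v: clk + scan_point(v, is_stable) for v, clk in stock.items()}


# Toy chip with made-up numbers: stable up to LIMIT[v] MHz at voltage v (mV).
STOCK = {800: 1600, 900: 1750, 1000: 1850, 1050: 1900}
LIMIT = {800: 1800, 900: 1900, 1000: 1960, 1050: 2000}


def toy_is_stable(voltage_mv: int, offset_mhz: int) -> bool:
    return STOCK[voltage_mv] + offset_mhz <= LIMIT[voltage_mv]


print(build_custom_curve(STOCK, toy_is_stable))
# {800: 1800, 900: 1900, 1000: 1960, 1050: 2000}
```

Unlike a single offset, every point ends up at its own limit, which is the practical difference between the two GPU Boost generations described above.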


JeanLuc: Chris, were you invited to the Nvidia press event in Texas?

About time we saw some cards based on a new process; it seemed like we were going to be stuck on 28nm for the rest of time.

As usual, Nvidia is creaming it up in DX11, but DX12 performance does look ominous IMO. There's not enough gain over the previous generation, which makes me think AMD's new Polaris cards might dominate when it comes to DX12.

slimreaper: Could you run an Otoy Octane bench? This really could change the motion graphics industry!?


F-minus: Seriously, I have to ask: did Nvidia instruct every single reviewer to bench the 1080 against stock Maxwell cards? 'Cause I'd like to see real-world scenarios with an OCed 980 Ti, because nobody runs stock or even buys stock, if you can even buy stock 980 Tis.

cknobman: Nice results, but honestly they don't blow me away.

In fact, I think Nvidia left the door open for AMD to take control of the high-end market later this year.

And fix the friggin' power consumption charts; you went with about the worst possible way to show them.

FormatC: Stock 1080 vs. stock 980 Ti :)

Both cards can be OC'ed, and if you have a real custom 1080 in your hand, an OC'ed 980 Ti compared directly with an OC'ed 1080 looks even worse than the stock 980 Ti does against the stock 1080 in this review. :)

Gungar: @F-minus, I saw the same thing. The GTX 980 Ti overclocks way better than the 1080; I am pretty sure that OC vs. OC, there is nearly no performance difference. (disappointing)

toddybody (quoting Gungar): "@F-minus, I saw the same thing. The GTX 980 Ti overclocks way better than the 1080; I am pretty sure that OC vs. OC, there is nearly no performance difference. (disappointing)"

LOL. My 980 Ti doesn't hit 2.2GHz on air. We need to wait for more benchmarks... I'd like to see the G1 980 Ti against a similar 1080.

F-minus: Exactly, but it seems like Nvidia instructed every single outlet to bench the reference 1080 only against stock Maxwell cards, which is honestly <Mod Edit> - pardon. I bet an OCed 980 Ti would come super close to the stock 1080, which at that point makes me wonder why even upgrade now. Sure, you can push the 1080 too, but I'd wait for a price drop or at least the supposed cheaper AIB cards.

FormatC: I have a handpicked Gigabyte GTX 980 Ti Xtreme Gaming Waterforce at 1.65GHz in one of my rigs; it's slower.