Nvidia GeForce RTX 2070 Founders Edition Review: Replacing GeForce GTX 1080

Power Consumption

Slowly but surely, we’re spinning up multiple Tom’s Hardware labs with Cybenetics’ Powenetics hardware/software solution for accurately measuring power consumption.

Powenetics, In Depth

For a closer look at our U.S. lab’s power consumption measurement platform, check out Powenetics: A Better Way To Measure Power Draw for CPUs, GPUs & Storage.

In brief, Powenetics utilizes Tinkerforge Master Bricks, to which Voltage/Current bricklets are attached. The bricklets are installed between the load and power supply, and they monitor consumption through each of the modified PSU’s auxiliary power connectors and through the PCIe slot by way of a PCIe riser. Custom software logs the readings, allowing us to dial in a sampling rate, pull that data into Excel, and very accurately chart everything from average power across a benchmark run to instantaneous spikes.
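As a rough illustration of the post-processing described above, the sketch below sums per-rail readings into total board power, then derives an average and a peak. It is not the Powenetics software: the row layout, rail names, and sample values are all hypothetical, chosen only to show the arithmetic (power = volts × amps, summed across rails at each timestamp).

```python
from statistics import mean

# Hypothetical log rows: (time_s, rail, volts, amps).
# The layout, rail names, and values are assumptions for illustration only.
samples = [
    (0.0, "pcie_slot_12v", 12.0, 3.0),
    (0.0, "aux_8pin", 12.0, 12.0),
    (0.1, "pcie_slot_12v", 12.0, 3.5),
    (0.1, "aux_8pin", 12.0, 13.0),
]


def total_power_by_time(rows):
    """Sum volts * amps across all rails for each timestamp."""
    totals = {}
    for time_s, _rail, volts, amps in rows:
        totals[time_s] = totals.get(time_s, 0.0) + volts * amps
    return totals


totals = total_power_by_time(samples)
average_w = mean(totals.values())  # average power across the run
peak_w = max(totals.values())      # highest instantaneous reading

print(f"average: {average_w:.1f} W, peak: {peak_w:.1f} W")
```

A higher sampling rate adds more timestamps to the log, which is what makes short spikes visible instead of being averaged away.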

The software is set up to log the power consumption of graphics cards, storage devices, and CPUs. However, we’re only using the bricklets relevant to graphics card testing. Nvidia's GeForce RTX 2070 Founders Edition gets all of its power from the PCIe slot and one eight-pin PCIe connector.

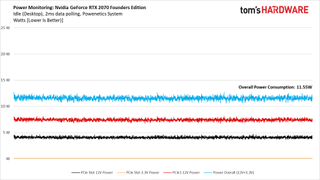

Idle

An average idle power measurement of 11.5W is a big improvement over the numbers we saw from GeForce RTX 2080 and 2080 Ti.

But Nvidia’s GeForce RTX 2070 Founders Edition still uses quite a bit more power than the GTX 1080 Founders Edition before it. The Turing architecture is vindicated only by Radeon RX Vega 64’s frenetic power curve, which bounces all over the place.

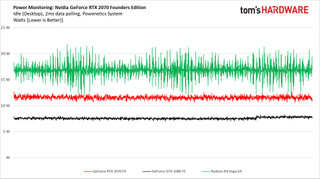

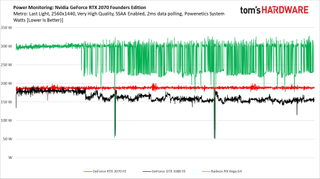

Gaming

Our usual Metro: Last Light run at 1920x1080 isn’t taxing enough to push GeForce RTX 2070 to maximum utilization, so we increase the resolution to 2560x1440 and enable SSAA. Three loops through the benchmark are clearly delineated by power dips between them.

Most of GeForce RTX 2070 Founders Edition’s power comes from its eight-pin auxiliary connector. Add in the PCIe slot and you get an average of 187.7W through our gaming workload.

AMD’s Radeon RX Vega 64 averages 277W through three runs of the Metro: Last Light benchmark, while GeForce GTX 1080 Founders Edition sees its power consumption pulled back before the first run ends, yielding a 162W average.
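To put those three averages on a common footing, here is a small sketch that expresses the RTX 2070 Founders Edition figure relative to each of the other two cards. The wattages come from the text above; the helper name `percent_difference` is just an illustrative choice.

```python
# Average gaming power in watts, as quoted in the text above.
averages_w = {
    "GeForce RTX 2070 FE": 187.7,
    "GeForce GTX 1080 FE": 162.0,
    "Radeon RX Vega 64": 277.0,
}


def percent_difference(value, baseline):
    """Return how far value sits above (+) or below (-) baseline, in percent."""
    return (value - baseline) / baseline * 100.0


rtx_2070 = averages_w["GeForce RTX 2070 FE"]
vs_gtx_1080 = percent_difference(rtx_2070, averages_w["GeForce GTX 1080 FE"])
vs_vega_64 = percent_difference(rtx_2070, averages_w["Radeon RX Vega 64"])

print(f"vs GTX 1080 FE: {vs_gtx_1080:+.1f}%")  # +15.9%
print(f"vs RX Vega 64:  {vs_vega_64:+.1f}%")   # -32.2%
```

Keep in mind that the GTX 1080 average reflects the card pulling its power back before the first run ends, so the gap is partly a product of that behavior.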

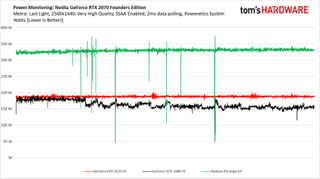

Edit, 10/16/18: For anyone wanting to see what a third-party Radeon RX Vega 64 looks like in our power consumption charts, the above graph swaps AMD's reference design for a Nitro+ Radeon RX Vega 64. The throttling behavior stops thanks to Sapphire's superior thermal solution, but overall consumption rises significantly.

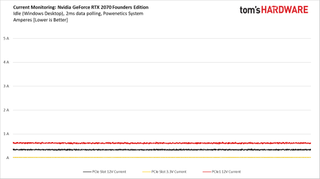

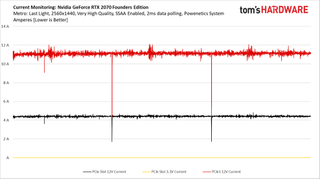

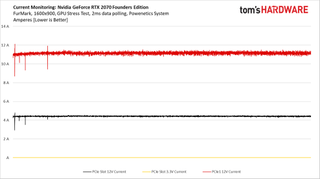

Nvidia has no trouble keeping current draw from the PCIe slot well under the 5.5A limit.
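For a sense of what that limit means, the sketch below checks a series of slot current readings against the 5.5A ceiling and converts the ceiling to watts. The current samples are hypothetical, and the 12V figure is an assumption that the limit applies to the slot's nominal 12V rail.

```python
PCIE_SLOT_CURRENT_LIMIT_A = 5.5  # limit quoted in the text
NOMINAL_RAIL_V = 12.0            # assumption: nominal 12V slot rail


def slot_headroom(current_samples_a, limit_a=PCIE_SLOT_CURRENT_LIMIT_A):
    """Report peak slot current and how far it sits below the limit."""
    peak_a = max(current_samples_a)
    return {
        "peak_a": peak_a,
        "headroom_a": limit_a - peak_a,
        "within_limit": peak_a <= limit_a,
    }


# Hypothetical readings in amps, not measurements from this review.
hypothetical_samples_a = [2.8, 3.1, 3.4, 3.0]
report = slot_headroom(hypothetical_samples_a)

# The current limit expressed as power at the nominal rail voltage.
limit_w = PCIE_SLOT_CURRENT_LIMIT_A * NOMINAL_RAIL_V

print(report["within_limit"], f"{report['headroom_a']:.1f} A of headroom")
print(f"limit at nominal voltage: {limit_w:.0f} W")
```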

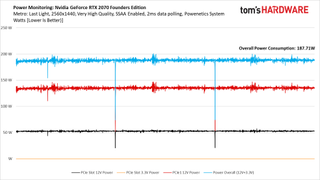

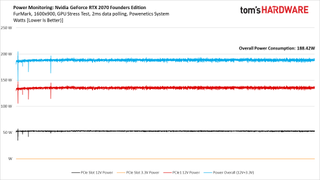

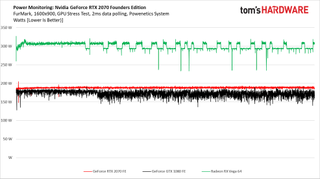

FurMark

Maximum utilization yields a much more even line chart as we track ~10 minutes under FurMark.

Average power use rises slightly to 188.4W. Again, Nvidia does an excellent job balancing between the PCIe slot and its auxiliary power connector.

GeForce RTX 2070 maintains higher power consumption than GeForce GTX 1080, but lands well under AMD’s Radeon RX Vega 64.

Current over the PCIe slot is perfectly acceptable.


coolio0103 200$ more expensive a rtx 2070 than a gtx 1080 Strix or msi which is the same perfomance than the FE 2070. Bye bye 1080? Really? This page is dropped to the lowest part of the internet news pages. Bye bye Tom's hardware

80-watt Hamster Frustrating how, in two generations, Nvidia's *70-class offering has gone from $330 to $500 (est). Granted, we're talking more than double the performance, so it can be considered a good value from a strict perf/$ perpsective. But it also feels like NV is moving the goalposts for what "upper mid-range" means.

TCA_ChinChin gtx 1080 - 470$

rtx 2070 - 600$

130$ increase for less than 10% fps improvement on average. Disappointing, especially with increased TDP, which means efficiency didn't really increase so even for mini ITX builds, the heat generated is gonna be pretty much the same for the same performance.

cangelini (quoting coolio0103): 200$ more expensive a rtx 2070 than a gtx 1080 Strix or msi which is the same perfomance than the FE 2070. Bye bye 1080? Really? This page is dropped to the lowest part of the internet news pages. Bye bye Tom's hardware

This quite literally will replace 1080 once those cards are gone. The conclusion sums up what we think of 2070 FE's value, though.

bloodroses (quoting coolio0103): 200$ more expensive a rtx 2070 than a gtx 1080 Strix or msi which is the same perfomance than the FE 2070. Bye bye 1080? Really? This page is dropped to the lowest part of the internet news pages. Bye bye Tom's hardware

Prices will come down on the RTX 2070's. GTX 1080's wont be available sooner or later. Tom's Hardware is correct on the assessment of the RTX 2070. Blame Nvidia for the price gouging on early adopters; and AMD for not having proper competition.

demonhorde665 wow seriously sick of the elitism at tom's . seems every few years they push up what they deem "playable frame rates" . just 3 years ago they were saying 40 + was playable. 8 years ago they were saying 35+ and 12+ years ago they were saying 25+ was playable. now they are saying in this test that only 50 + is playable? . serilously read the article not just the frame scores , the author is saying at several points that the test fall below 50 fps "the playable frame rate". it's like they are just trying to get gamers to rush out and buy overpriced video cards. granted 25 fps is a bit eye soreish on today's lcd/led screens , but come on. 35 is definitely playable with little visual difference. 45+ is smooth as butter

yeah it would be awesome if we could get 60 fps on every game at 4k. it would be awesome just to hit 50 @ 4k, but ffs you don't have to try to sell the cards so hard. admit it gamers on a less-than-top-cost budget will still enjoy 4k gaming at 35 , 40 or 45 fps. hell it's not like the cards doing 40-50 fps are cheap them selves… gf 1070's still obliterate most consumer's pockets at $420-450 bucks a card. the fact is top end video card prices have gone nutso in the past year or two... 600 -800 dollars for just a video card is f---king insane and detrimental to the PC gaming industry as a whole. just 6 years ago you could build a decent mid tier gaming rig for 600-700 bucks , now that same rig (in performance terms) would run you 1000-1200 , because of this blatant price gouging by both AMD and nvidia (but definitely worse on nvidia's side). 5-10 years from now ever one will being saying that 120 fps is ideal and that any thing below 100 fps is unplayable. it's getting ridiculous.

jeffunit With its fan shroud disconnected, GeForce RTX 2070’s heat sink stretches from one end of the card, past the 90cm-long PCB...

That is pretty big, as 90cm is 35 inches, just one inch short of 3 feet.

I suspect it is a typo.

tejayd Last line in the 3rd paragraph "If not, third-party GeForce RTX 2070s should start in the $500 range, making RTX 2080 a natural replacement for GeForce GTX 1080." Shouldn't that say "making RTX 2070 a natural replacement". Or am I misinterpreting "natural"?

Brian_R170 The 20-series have been a huge let-down . Yes, the 2070 is a little faster than the 1080 and the 2080 is a little faster than the 1080Ti, but they're both are more expensive and consume more power than the cards they supplant. Shifting the card names to a higher performance bar is just a marketing strategy.