CNEX Labs Looks Like Missing 3D XPoint Puzzle Piece

We had the rare opportunity to catch an exclusive glimpse of Micron’s QuantX 3D XPoint prototype during the recent Flash Memory Summit, and we were also able to secure some juicy details. Micron based its QuantX SSDs on the new 3D XPoint memory that Intel and Micron promise will upend storage and memory as we know it. As good as our exclusive details were, they were only the beginning; we were able to track down the purported controller vendor and get the full low-down on a high-flying new SSD architecture.

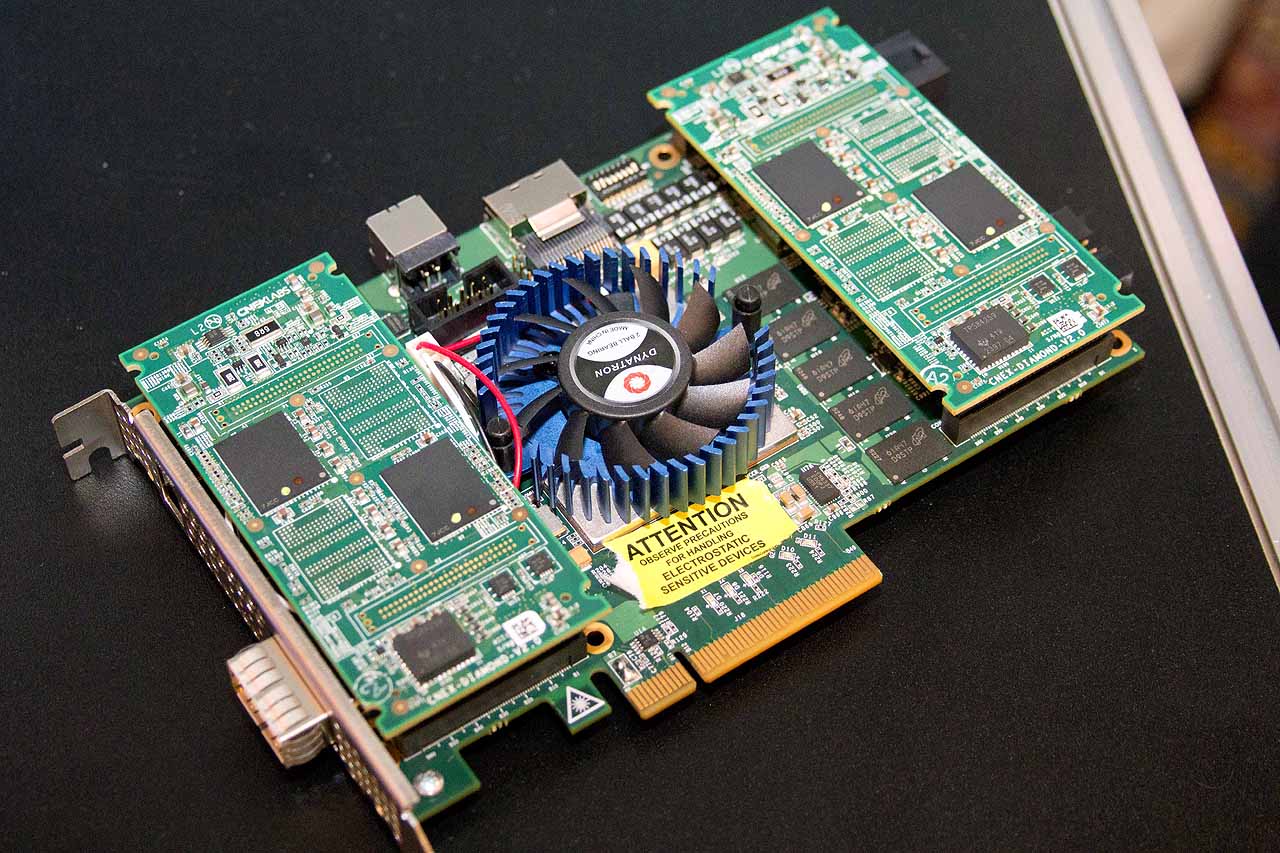

Development boards are always somewhat unrefined affairs, because they are merely larger versions of the polished and sophisticated end device, but they reveal overall design choices and help identify key components. One discovery in particular on the prototype sent us on a hunt for more information.

A Micron representative commandeered a yellow sticker from a nearby electrostatic bag to cover the name of an undisclosed partner on the PCB before they allowed us to take our pictures, but the representative was apparently unaware that both of the daughterboards on the card are clearly labeled with “CNEX – Diamond V2.0.”

This was an interesting coincidence: CNEX Labs, the company behind the CNEX branding, had reached out to us before the show for a briefing. Unfortunately, our schedules didn’t align.

After our discovery, we suddenly found that our schedules magically aligned, and we hastily arranged a meeting. CNEX quickly threw up a wall of silence and refused to discuss anything directly related to a QuantX or 3D XPoint-specific product, or the name Micron, for that matter. However, CNEX shared details of its SSD controllers and the software and hardware architecture it designed to operate with any persistent memory, such as NAND, 3D XPoint, ReRAM, and PCM. We also discussed (in a broad sense) the company’s vision for the future of persistent memory.

We also scheduled a follow-up meeting with the Micron QuantX development team and requested more information. The company indicated that it does have an exclusive arrangement with an undisclosed third-party SSD controller partner, but Micron representatives adamantly refused, in very strong terms, to confirm or deny if CNEX Labs is involved in QuantX development.

And yet we live in the land of common sense, so we firmly believe that all signs point to CNEX as the exclusive (at least for now) QuantX SSD controller vendor. After all, CNEX branding is emblazoned on the prototype in bold letters.


Our conversation with CNEX outlined the challenges associated with unlocking the true performance capabilities of today’s NAND and future persistent memories. This requires circumventing some of the established SSD architectural rules to cut through the layers of abstraction between the host and the storage, and it may just give us a glimpse of how 3D XPoint-powered devices will operate.

Who Is CNEX Labs?

CNEX Labs is a privately owned startup founded in June 2013. The company has raised a total of $45.5 million over four funding rounds from seven investors: Micron, Cisco, Samsung, Seagate, and the venture capital firms Sierra Ventures, Walden, and UMC Capital (source: Crunchbase). The company, which is still operating in startup mode, states on its limited website that it secured funding through venture capital and strategic investments from Fortune 500 storage and networking companies.

The company’s roster reads like a who’s who of the semiconductor industry. CEO Alan Armstrong, a 14-year Marvell veteran, heads a team stacked with heavy hitters from such industry bastions as Huawei, SandForce, SanDisk, Samsung, Smart Storage Systems, and Cisco.

CNEX targets SSD manufacturers, including large fabs such as Micron, as well as hyperscale data center operators, such as Facebook, Google, Baidu, and Tencent, many of which build their own SSDs. CNEX’s value proposition is that its controllers support multiple types of memory and give customers unprecedented access to, and control of, the underlying media, regardless of which memory the vendor employs.

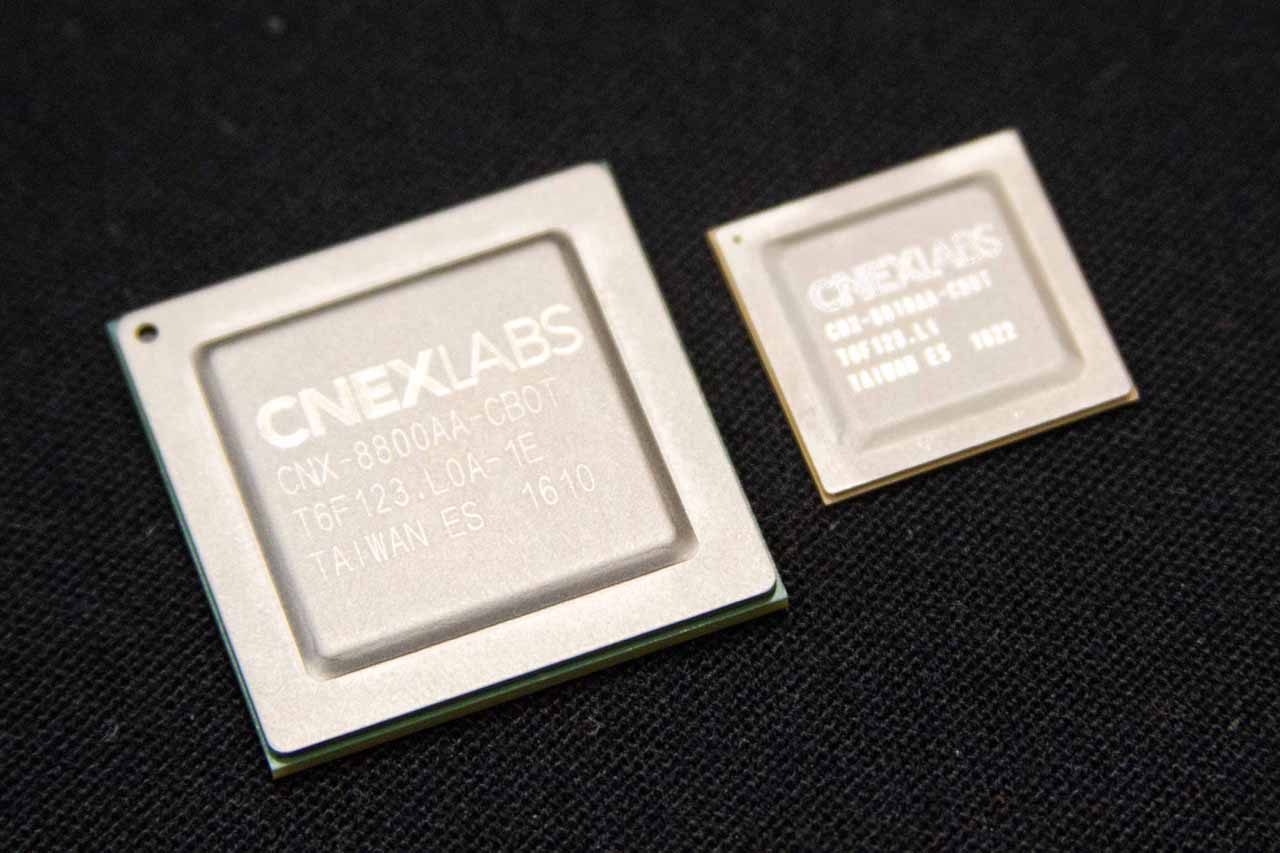

CNEX SSD Controllers

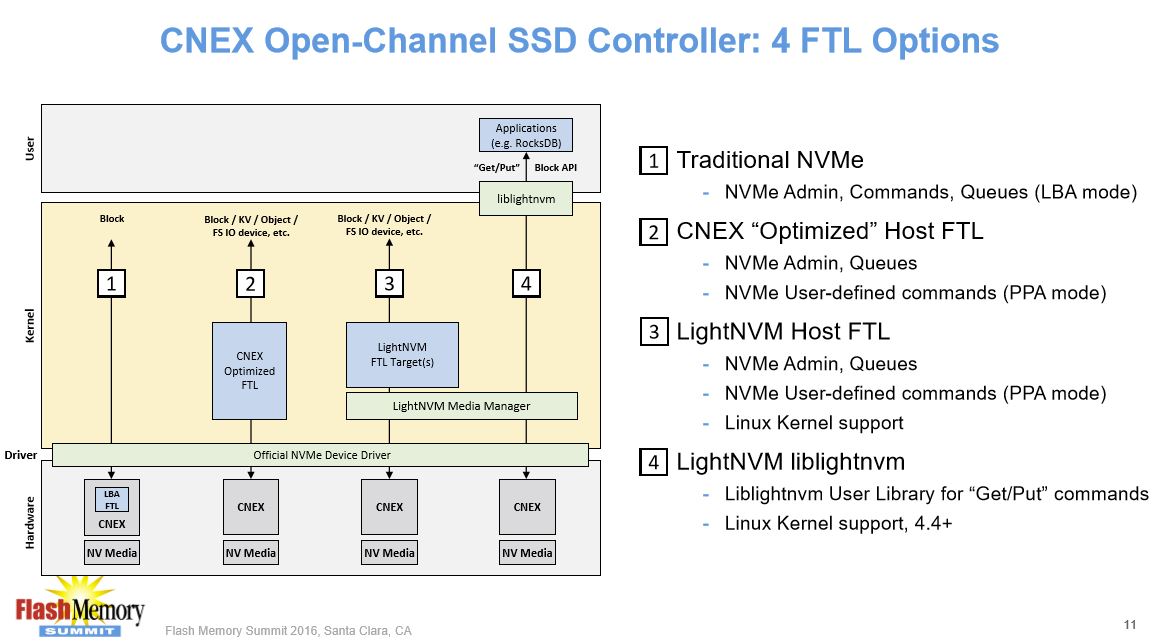

The CNEX Labs SSD controllers feature an innovative design that offers customers a range of options, from plug-and-play NVMe to support for Open-Channel and LightNVM, which gives the user complete control of the underlying storage media. At its simplest, the solution is a fully NVMe-compatible controller that works plug-and-play with all existing systems, but the company also offers users much more granular control of the SSD, literally down to the cell level.

What Does This Have To Do with 3D XPoint?

3D XPoint doesn't work the same way NAND does. For instance, Micron disclosed to us that program and erase cycles (P/E cycles) do not really apply; the media doesn't have to be erased before it is written, so the company simply overwrites existing data using a method called "forced writes." Handling forced writes is one of the many problems that the increased capabilities of CNEX controllers are designed to solve, but to understand how, we have to begin with the basics.
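To make the contrast concrete, here is a toy model of the two write disciplines. It is a sketch under simplifying assumptions, not a model of how Micron actually implements forced writes (which it did not disclose): NAND pages are write-once until their whole block is erased, while a write-in-place medium can overwrite a location directly. The class and method names are hypothetical.

```python
class NandBlock:
    """Toy NAND block: each page is write-once until the whole block is erased."""

    def __init__(self, pages_per_block: int = 4) -> None:
        self.pages = [None] * pages_per_block  # None marks an erased page
        self.erase_count = 0

    def program(self, page: int, data: bytes) -> None:
        if self.pages[page] is not None:
            raise ValueError("page already programmed; erase the block first")
        self.pages[page] = data

    def erase(self) -> None:
        # Erase works on the whole block, never on a single page.
        self.pages = [None] * len(self.pages)
        self.erase_count += 1


class WriteInPlaceMedia:
    """Toy model of media that overwrites a location with no erase step."""

    def __init__(self, lines: int = 4) -> None:
        self.lines = [b""] * lines
        self.write_count = [0] * lines

    def write(self, line: int, data: bytes) -> None:
        self.lines[line] = data  # overwrite directly
        self.write_count[line] += 1


nand = NandBlock()
nand.program(0, b"v1")
try:
    nand.program(0, b"v2")  # in-place update is rejected
except ValueError:
    nand.erase()            # wipes every page in the block
    nand.program(0, b"v2")

xp = WriteInPlaceMedia()
xp.write(0, b"v1")
xp.write(0, b"v2")          # no erase needed
```

Erasing a whole block to update one page is exactly what real NAND SSDs avoid by writing the new version to a fresh page instead, which is the job of the translation layer.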

How SSDs Work

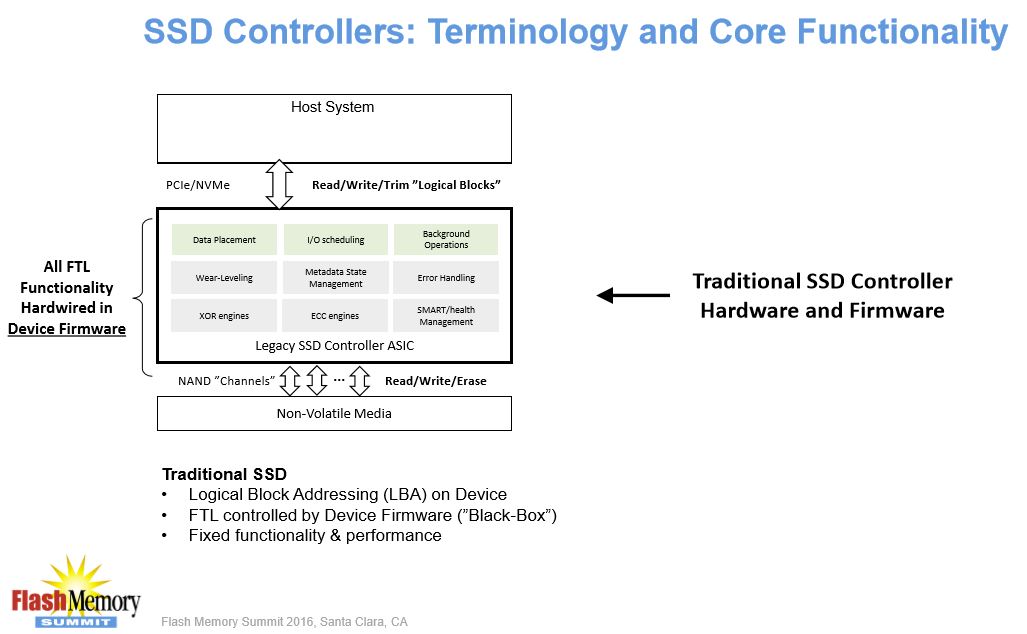

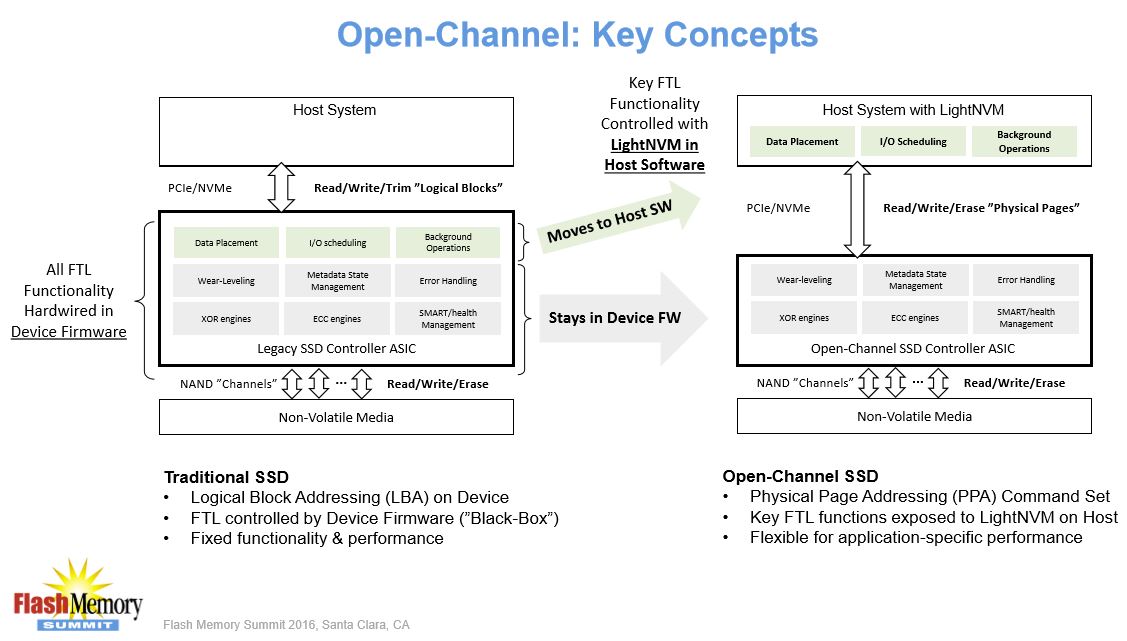

A normal SSD is basically a black box. Users simply plug the device in and the SSD controller serves as the conductor of a symphony of internal SSD functions that are constantly playing in the background, commonly referred to as the Flash Translation Layer (FTL). A normal SSD controller handles I/O scheduling, wear leveling, XOR engines, background operations, and data placement internally. The FTL, which runs in the controller firmware, is of particular importance because it is responsible for directing the incoming data to its final destination.
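The XOR engines mentioned above typically compute RAID-5-style parity across pages stored on different dies so that a failed page can be rebuilt. The sketch below shows only the math in software; real controllers do this in dedicated hardware, and the stripe layout and function name here are hypothetical.

```python
from functools import reduce


def xor_pages(pages: list[bytes]) -> bytes:
    """Byte-wise XOR of equal-length pages."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*pages))


# Hypothetical stripe: one page from each of three dies.
stripe = [b"\x01\x02\x03\x04", b"\x10\x20\x30\x40", b"\xaa\xbb\xcc\xdd"]
parity = xor_pages(stripe)  # stored on a fourth die

# If the die holding stripe[1] fails, XOR of the survivors and the parity rebuilds it.
recovered = xor_pages([stripe[0], stripe[2], parity])
```

Because XOR is its own inverse, any single missing page in the stripe can be recovered the same way.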

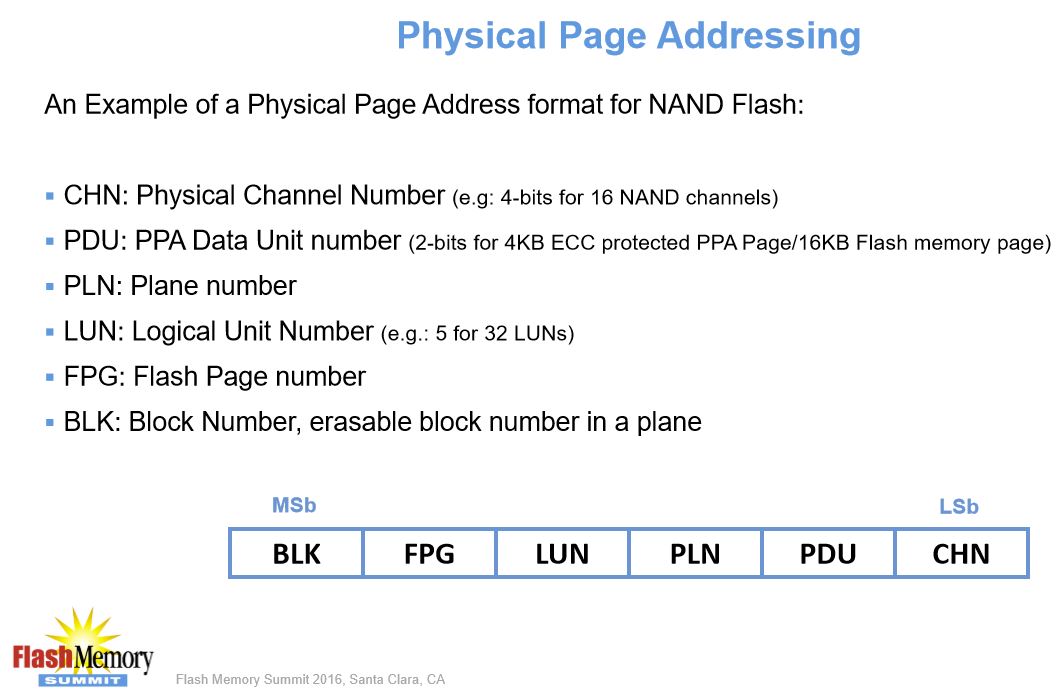

Because of the nature of SSD design, and the need to spread wear evenly across the cells, the SSD does not place the data exactly where the host directs it. The SSD FTL maps Logical Block Addresses (LBA) from the host to the Physical Page Addresses (PPA) on the media.

Essentially, the SSD tracks two sets of addresses: the logical addresses, which show where the host "thinks" it is placing data, and the physical addresses, which note where the SSD actually places it. When the host issues read or write requests, the SSD translates the logical address to the physical address and either places data into, or retrieves it from, the correct location. The FTL manages this abstracted logical-to-physical (L2P) mapping. Other processes, such as wear leveling and garbage collection, rely heavily on the FTL during normal operation to ensure that the SSD meets the vendor's endurance and performance specifications.
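A minimal sketch of a page-level L2P map helps illustrate this. It assumes a flat dictionary and a simple free list (real firmware uses compact arrays and far more bookkeeping), and the class name is hypothetical. Every write goes to a fresh physical page, and the previous copy is marked invalid for garbage collection to reclaim later.

```python
class SimpleFTL:
    """Toy page-level FTL: logical block address -> physical page address."""

    def __init__(self, num_physical_pages: int) -> None:
        self.l2p: dict[int, int] = {}       # logical -> physical mapping
        self.media: dict[int, bytes] = {}   # physical page -> stored data
        self.invalid: set[int] = set()      # stale pages awaiting garbage collection
        self.free = list(range(num_physical_pages))

    def write(self, lba: int, data: bytes) -> int:
        ppa = self.free.pop(0)              # always write out of place
        old = self.l2p.get(lba)
        if old is not None:
            self.invalid.add(old)           # the old copy becomes garbage
        self.media[ppa] = data
        self.l2p[lba] = ppa
        return ppa

    def read(self, lba: int) -> bytes:
        return self.media[self.l2p[lba]]    # translate, then fetch


ftl = SimpleFTL(num_physical_pages=8)
ftl.write(5, b"a")   # LBA 5 lands on physical page 0
ftl.write(5, b"b")   # the update lands on page 1; page 0 is now invalid
```

The host always sees LBA 5, while the data physically moved. This toy never reclaims invalid pages, so it runs out of free pages after eight writes; garbage collection is what closes that loop.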

The great thing about the embedded FTL is that the device stands alone; the user has to do nothing, and the SSD is as simple as plug-and-play. The bad thing is that there is limited computational horsepower behind the controller, and the lack of control leaves some truly amazing capabilities on the table.

How CNEX Labs, And Just About Everyone Else, Thinks SSDs Should Work

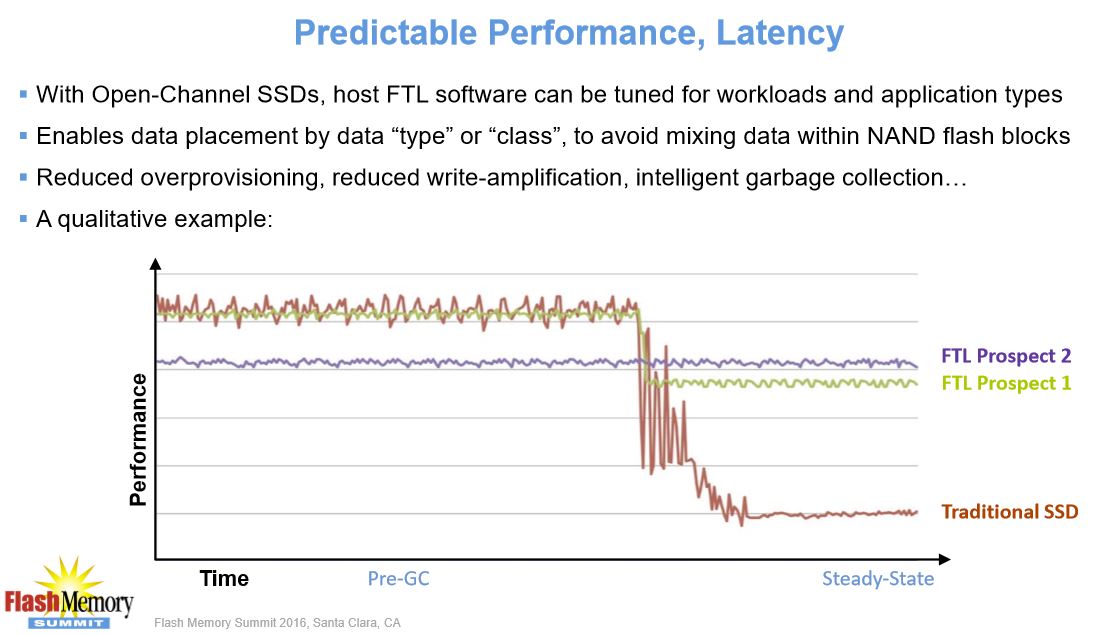

There is a broad movement in the industry to streamline SSD design and give users more control over the inner workings of the SSD. Open-Channel SSDs offload portions of the internal FTL to the host, which allows the host to juggle the SSD management tasks. This functionality uses the LightNVM FTL abstraction layer in tandem with NVMe, and Linux kernels 4.4 and newer support it.

LightNVM allows the user, or even an application (such as RocksDB, which Facebook demoed running with 3D XPoint), to assume control of key processes, such as garbage collection and data placement. Meanwhile, the hardware still controls other processes, such as ECC, XOR engines, error handling, and wear leveling. CNEX focuses on keeping the processes that need to be in silicon in silicon, and its approach employs hardware acceleration and a proprietary deterministic ECC to increase performance.
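The sketch below illustrates what it means for the host to own garbage collection. It is a toy under stated assumptions, not LightNVM's actual interface: a hypothetical host-side FTL tracks which block holds each LBA, uses a greedy policy (reclaim the full block with the fewest valid pages, a common textbook choice rather than anything CNEX disclosed), and counts write amplification as media writes divided by host writes.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    valid: set[int] = field(default_factory=set)  # LBAs whose live copy is here
    written: int = 0                              # pages programmed since erase


class HostFTL:
    """Toy host-side FTL that owns data placement and garbage collection."""

    def __init__(self, num_blocks: int, pages_per_block: int) -> None:
        self.ppb = pages_per_block
        self.blocks = [Block() for _ in range(num_blocks)]
        self.where: dict[int, int] = {}  # LBA -> block index
        self.active = 0                  # block currently being filled
        self.host_writes = 0
        self.media_writes = 0

    def _next_free(self) -> int:
        for i, b in enumerate(self.blocks):
            if b.written == 0:
                return i
        raise RuntimeError("no free block; run gc() first")

    def _program(self, lba: int) -> None:
        if self.blocks[self.active].written == self.ppb:
            self.active = self._next_free()
        old = self.where.get(lba)
        if old is not None:
            self.blocks[old].valid.discard(lba)  # invalidate the stale copy
        blk = self.blocks[self.active]
        blk.valid.add(lba)
        blk.written += 1
        self.where[lba] = self.active
        self.media_writes += 1

    def write(self, lba: int) -> None:
        self.host_writes += 1
        self._program(lba)

    def gc(self) -> None:
        """Greedy GC: reclaim the full block with the fewest valid pages."""
        full = [i for i, b in enumerate(self.blocks)
                if b.written == self.ppb and i != self.active]
        victim = min(full, key=lambda i: len(self.blocks[i].valid))
        survivors = list(self.blocks[victim].valid)
        self.blocks[victim] = Block()  # erase the victim
        for lba in survivors:
            self._program(lba)         # relocations cost extra media writes

    def write_amplification(self) -> float:
        return self.media_writes / self.host_writes


ftl = HostFTL(num_blocks=3, pages_per_block=4)
for lba in range(8):       # fill blocks 0 and 1
    ftl.write(lba)
for lba in (0, 1, 2):      # overwrite three LBAs; block 0 keeps one live page
    ftl.write(lba)
ftl.gc()                   # relocates LBA 3, then block 0 is free again
print(round(ftl.write_amplification(), 3))  # 1.091
```

Because the host decides what to overwrite and when to collect, it can group data with similar lifetimes and keep relocations (and therefore write amplification) low.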

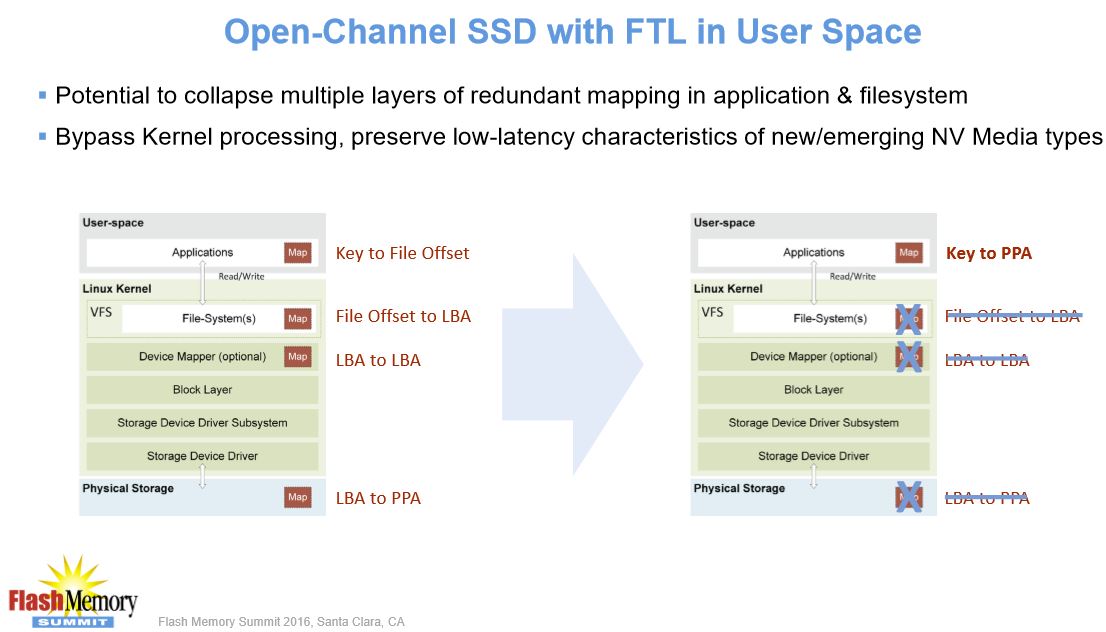

The host sends data straight to the actual physical address, which eliminates the complexity and performance penalties of L2P abstraction. The specification is NVMe compatible, and only three NVMe commands change (read, write, and erase) so that the host can place data directly in its physical location. Data scientists can use unique management techniques on the host to preserve data locality, carve SSDs into multiple LUNs with different QoS profiles and queue depth (QD) allocations, support an unlimited number of data streams, and even employ directly programmed (and customized) RAID and erasure coding. The system also reduces write amplification, which increases endurance.
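For the host to address physical locations, each command needs a compact way to name a channel, LUN, block, page, and sector. The sketch below packs those fields into a single integer. The field order and bit widths are made up for illustration; a real Open-Channel device reports its own geometry, and the names here are hypothetical.

```python
from typing import NamedTuple

# Hypothetical bit widths, least-significant field first. Illustrative only:
# a real device reports its own geometry.
FIELDS = [("sector", 2), ("page", 9), ("block", 12), ("lun", 3), ("channel", 4)]


class PPA(NamedTuple):
    channel: int
    lun: int
    block: int
    page: int
    sector: int


def encode(ppa: PPA) -> int:
    """Pack the fields into one integer address."""
    value, shift = 0, 0
    for name, width in FIELDS:
        field_value = getattr(ppa, name)
        if not 0 <= field_value < (1 << width):
            raise ValueError(f"{name}={field_value} does not fit in {width} bits")
        value |= field_value << shift
        shift += width
    return value


def decode(value: int) -> PPA:
    """Unpack an integer address back into its fields."""
    parts, shift = {}, 0
    for name, width in FIELDS:
        parts[name] = (value >> shift) & ((1 << width) - 1)
        shift += width
    return PPA(**parts)


addr = PPA(channel=3, lun=1, block=200, page=17, sector=2)
raw = encode(addr)
```

Because the channel and LUN are visible in the address, a host can deliberately spread streams across parallel units or pin a tenant to its own LUNs.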

The end result is increased performance, consistency, and endurance, along with reduced latency. Clever techniques can also schedule different SSDs to conduct garbage collection at different times, and a host-based FTL can manage multiple devices with one global FTL. The technique unlocks many more possibilities, particularly for all-flash JBOFs. For instance, if an SSD's endurance is about to expire, the host could switch it into read-only mode and use it only for caching.
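One way a global FTL could stagger garbage collection is a round-robin time-slot schedule, so that only one device collects at a time. The sketch below assumes that policy, plus data mirrored across devices so reads can be steered away from whichever device is busy; both are illustrative assumptions, and the function names are hypothetical.

```python
def gc_owner(devices: list[str], now: int, slot_seconds: int) -> str:
    """Round-robin: the device that owns the GC window containing time `now`."""
    return devices[(now // slot_seconds) % len(devices)]


def pick_read_replica(replicas: list[str], devices: list[str],
                      now: int, slot_seconds: int) -> str:
    """Prefer a replica whose device is not currently collecting garbage."""
    busy = gc_owner(devices, now, slot_seconds)
    for dev in replicas:
        if dev != busy:
            return dev
    return replicas[0]  # every replica is busy; fall back to the first


devices = ["ssd0", "ssd1", "ssd2"]
# With 10-second windows, ssd0 collects during [0, 10), ssd1 during [10, 20), and so on.
replica_at_5 = pick_read_replica(["ssd0", "ssd1"], devices, now=5, slot_seconds=10)
replica_at_15 = pick_read_replica(["ssd0", "ssd1"], devices, now=15, slot_seconds=10)
```

A fixed schedule is the simplest option; a real system would more likely trigger collection based on free-space thresholds while still preventing overlapping windows.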

How Do We Get There?

It all begins in silicon. Nearly all modern SSD controllers have embedded CPUs. For instance, the Microsemi controllers found on many of the enterprise SSDs we review feature more than 20 cores, but much of the core functionality runs in firmware. CNEX claims its controllers are more efficient because they use a hardware-accelerated architecture, which reduces the heavy firmware payload found in some controllers. CNEX also designed the architecture to shorten long development cycles and to avoid sacrificing power, performance, consistency, and latency to run inefficient embedded processes.

CNEX controllers enable a range of capabilities. The plug-and-play mode slips into an NVMe host and runs as normal, as evidenced by the "1" option in the chart above, and varying levels of customization place a portion of the FTL in different positions, including directly in the user space (4), which provides the most options and capabilities. The remaining operations run in the hardware-accelerated controller.

The FTL usually holds the L2P mapping table in DRAM, which is costly, consumes precious PCB real estate, and adds firmware complexity. Moving the FTL into host RAM eliminates the need for DRAM on the SSD, which is interesting because the QuantX prototype featured DRAM cache on the board. However, Micron indicated that QuantX doesn't need DRAM, but it can use it for SSD management tasks or as a layer of 3D XPoint-backed DRAM, similar to an NVDIMM. This also aligns with the capabilities of DRAM-less CNEX-based SSDs.
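The common rule of thumb of roughly 1 GB of DRAM per TB of media falls out of simple arithmetic. Assuming a flat page-level table with one 4-byte entry per 4 KiB mapping unit (typical values, not figures CNEX disclosed), a 1 TiB drive needs 2^28 entries, or exactly 1 GiB of table.

```python
TIB = 1 << 40
GIB = 1 << 30


def l2p_table_bytes(capacity_bytes: int, map_unit_bytes: int = 4096,
                    entry_bytes: int = 4) -> int:
    """Size of a flat page-level L2P table: one entry per mapping unit."""
    entries = capacity_bytes // map_unit_bytes
    return entries * entry_bytes


print(l2p_table_bytes(TIB) / GIB)                         # 1.0
print(l2p_table_bytes(8 * TIB) / GIB)                     # 8.0
print(l2p_table_bytes(TIB, map_unit_bytes=16384) / GIB)   # 0.25
```

The table scales linearly with capacity, which is why it becomes a significant cost on high-capacity drives; coarser mapping units shrink it at the expense of more read-modify-write work for small writes.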

The controller exports key metrics about each memory cell, such as the program/erase (P/E) cycle count and voltage threshold, to the host. This provides enough information for the data placement algorithms to steer data intelligently to the correct place. Managing the FTL in the host does require some resources: computational horsepower that CNEX claims is roughly equivalent to 1/10th of a single modern Xeon core, and host DRAM at the usual ratio of 1 GB of DRAM per TB of NAND or persistent memory.
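Here is a sketch of how a host might use those exported metrics. The record fields, the rated endurance, the drift threshold, and the policy itself are hypothetical: blocks that are worn out or drifting are excluded, frequently rewritten ("hot") data goes to the least-worn block, and rarely rewritten ("cold") data goes to the most-worn block that is still usable, a common wear-leveling heuristic.

```python
from dataclasses import dataclass


@dataclass
class BlockHealth:
    block_id: int
    pe_cycles: int       # program/erase cycles reported by the device
    vt_drift_mv: float   # hypothetical: reported voltage-threshold drift, in mV


RATED_PE = 3000          # hypothetical endurance rating
MAX_DRIFT_MV = 150.0     # hypothetical retirement threshold


def usable(blocks: list[BlockHealth]) -> list[BlockHealth]:
    return [b for b in blocks
            if b.pe_cycles < RATED_PE and b.vt_drift_mv < MAX_DRIFT_MV]


def place(blocks: list[BlockHealth], hot: bool) -> int:
    """Hot data -> least-worn usable block; cold data -> most-worn usable block."""
    candidates = usable(blocks)
    if not candidates:
        raise RuntimeError("no usable blocks left")
    chooser = min if hot else max
    return chooser(candidates, key=lambda b: b.pe_cycles).block_id


blocks = [
    BlockHealth(0, pe_cycles=120, vt_drift_mv=20.0),
    BlockHealth(1, pe_cycles=2500, vt_drift_mv=90.0),
    BlockHealth(2, pe_cycles=2900, vt_drift_mv=180.0),  # drifting: excluded
    BlockHealth(3, pe_cycles=800, vt_drift_mv=35.0),
]
print(place(blocks, hot=True))   # 0
print(place(blocks, hot=False))  # 1
```

Parking cold data on worn blocks lets those blocks rest, while the fresher blocks absorb the churn of hot data.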

Back To 3D XPoint

Micron disclosed to us that during its search for a controller partner, it was looking for a flexible SSD controller technology with enough hardware acceleration to unlock the potential of 3D XPoint. The company also disclosed that it isn't using LDPC error correction and is instead using a proprietary form of ECC. Both of these comments mesh nicely with the CNEX story. An off-the-shelf controller wouldn’t allow Micron to achieve its goals, so it found a partner that would allow it to influence the architecture.

The current QuantX prototypes use an FPGA to control the media, but this is likely an interim step to qualify the design before ASIC tapeout. Most of the hyperscalers also use FPGAs for their current designs, whereas CNEX can provide ASICs and all of the benefits that entails, such as reduced power consumption and a lower cost structure.

CNEX only provides the controllers; it doesn't manufacture end products. The company does provide reference architectures to help its customers design SSDs, and that is likely the source of the "Diamond V2" branding. The design also alleviates the need for firmware engineers, who are a rare and hot commodity, and allows Linux programmers to control the SSDs.

And So It Begins

Using Open-Channel and LightNVM requires an incredible amount of engineering talent, so hyperscalers will likely be the main adopters of these approaches. Even OEMs such as Dell or HP probably wouldn't be interested in that level of control, but it’s nice to have the option.

Open-Channel and LightNVM aren't exclusive to CNEX. Other companies, such as OCZ and Samsung, are also working on similar techniques, but their development efforts center on NAND.

CNEX aims to provide its customers with either the whole painting or just a canvas and a set of oils; the controllers offer plug-and-play simplicity, or users can opt for varying levels of unparalleled granularity that unlock incredible possibilities. If Micron is using the CNEX architecture for its 3D XPoint-powered QuantX controllers, which I am convinced it is, then the company has made a wise choice.

Micron has the blank canvas, but the real question is just what kind of picture it is painting.

Micron disclosed to us that the QSFP+ connection on its development board is for NVMe Over Fabrics experimentation. CNEX also happens to specialize in a revolutionary new architecture that brings four 10 GbE controllers on die with the SSD controller (more on that soon), so we might only be seeing a small piece of the larger QuantX picture.

Paul Alcorn is the Editor-in-Chief for Tom's Hardware US. He also writes news and reviews on CPUs, storage, and enterprise hardware.


manleysteele A second well researched, well written review of their product in as many days. Take that, Micron and CNET.

KaiserPhantasma well written but how fast will it be released commercially? we see all these "great technologies" yet we can't seem to buy them (on the consumer not enterprise level)

wgt999 Paul, thanks for the sleuthing and the analysis. Most interesting. Micron seems very serious about delivering a breakthrough product. Can't wait to see it finishing and in production! Now, can they make enough XPoint memory to come anywhere close to meeting market demand?

Paul Alcorn (replying to wgt999):

That is the multi-billion dollar question. Also, pressure from competitors that use proven, and cheap, technology is going to create a significant barrier. See here for Samsung's dual-prong strategy.

http://www.tomshardware.com/news/samsung-3d-xpoint-z-nand-z-ssd,32462.html


kinney (replying to Paul Alcorn):

Yeah, could easily end up as another Rambus redux with 3DXpoint.

jasonf2 Correct me if I am wrong but this technology seems to be a lot like what is going on with directx12 on the GPU side where software developers are able to "get closer to the metal". It seems to me though that to be able to fully utilize this there would have to be a pretty good amount of rework on the OS side. Is the application specifically for supercomputer class big data workloads where this is pretty common or how are they planning on handling this? It seems to me that this is going to take a pretty specialized programmer working in system optimization to a specific controller to really take advantage. Or am I missing something?

wgt999 (replying to jasonf2):

You're right. SCM, also called PM (persistent memory), basically demands a new computing and programming model. If you want a great introduction to the work that needs to get done, take a look at the SNIA spec on the Persistent Memory Programming Model. http://bit.ly/2aWmDSH Microsoft and Linux are well into implementing this, but it's still going to take many years before it is fully implemented and application software takes full advantage of it.

Paul Alcorn (replying to wgt999):

It will surely take a while to filter down to broader use-cases, but some are very interested in using it. I think that the core CNEX value prop is that it allows the vendors plenty of room to navigate by either using plug-and-play NVMe or the Open-Channel/LightNVM approach. It will surely be interesting to see how much penetration the more advanced architectures gain into the data center.

That said, there are likely far more data center operators that are interested in proven and mature solutions.